
Method Article

Long-term Video Tracking of Cohoused Aquatic Animals: A Case Study of the Daily Locomotor Activity of the Norway Lobster (Nephrops norvegicus)

* These authors contributed equally.


Summary

Here we present a protocol for tracking individual animals over long periods of time. It uses computer vision methods to identify a set of manually constructed tags, uses a group of lobsters as a case study, and also provides information on how to house, handle, and mark the lobsters.

Abstract

We present a video-tracking protocol based on background subtraction and image thresholding that makes it possible to track cohoused animals individually. We tested the tracking routine with four individually tagged, cohoused Norway lobsters (Nephrops norvegicus) under light-darkness conditions for 5 days. The experimental setup and the tracking techniques are entirely based on open source software. A comparison of the tracking output with manual detection indicates that the lobsters were correctly detected 69% of the time. Among the correctly detected lobsters, their individual tags were correctly identified 89.5% of the time. Considering the frame rate used in the protocol and the movement rate of the lobsters, the video-tracking performance is good, and the representative results support the validity of the protocol for producing valuable data for research needs (e.g., individual space occupancy or locomotor activity patterns). The protocol presented here can be easily customized and is, hence, transferable to other species in which tracking individuals within a group can help answer research questions.

Introduction

In the last few years, automated image-based tracking has provided highly accurate datasets which can be used to explore basic questions in ecology and behavior disciplines1. These datasets can be used for the quantitative analysis of animal behavior2,3. However, each image methodology used for tracking animals and behavior evaluation has its strengths and limitations. In image-based tracking protocols that use spatial information from previous frames in a movie to track animals4,5,6, errors can be introduced when the paths of two animals cross. These errors are generally irreversible and propagate through time. Despite computational advances that reduce or almost eliminate this problem5,7, these techniques still need homogeneous experimental environments for accurate animal identification and tracking.

The employment of marks that can be uniquely identified in animals avoids these errors and allows the long-term tracking of identified individuals. Widely used markers (e.g., barcodes and QR codes) exist in industry and commerce and can be identified using well-known computer vision techniques, such as augmented reality (e.g., ARTag8) and camera calibration (e.g., CALTag9). Tagged animals have previously been used for high-throughput behavioral studies in different animal species, for example, ants3 or bees10, but some of these previous systems are not optimized for recognizing isolated tags3.

The tracking protocol presented in this paper is especially suitable for tracking animals in single-channel imagery, such as infrared (IR) or monochromatic light (here, we use blue light). The method therefore does not rely on color cues, which also makes it applicable to other settings with constraints on illumination. In addition, we use customized tags designed not to disturb the lobsters while still allowing recording with low-cost cameras. Moreover, the method is based on frame-independent tag detection (i.e., the algorithm recognizes the presence of each tag in an image regardless of previous trajectories). This feature is relevant in applications where animals can be temporarily occluded or where their trajectories may intersect.

The tag design allows its use in different groups of animals. Once the parameters of the method are set, it could be transferred to other animal-tracking problems (e.g., other crustaceans or gastropods) without the need to train a specific classifier. The main limitations to exporting the protocol are the size of the tag and the need to attach it to the animal (which makes it unsuitable for small insects, such as flies, bees, etc.), as well as the assumption of 2D animal movement. This last constraint is significant because the proposed method assumes that the tag size remains constant. An animal moving freely in a 3D environment (e.g., a fish) would show different tag sizes depending on its distance from the camera.
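Why apparent tag size breaks in 3D can be made concrete with a simple pinhole-camera approximation (the fisheye lens used in this protocol departs from it away from the image center). A flat tag of physical area $A$ at distance $Z$ from a camera of focal length $f$ is imaged with an area that scales with $1/Z^2$. The distances in the worked example are illustrative, not measurements from this setup.

$$
A_{\mathrm{img}} \approx \frac{f^{2}}{Z^{2}}\,A
\qquad\Longrightarrow\qquad
\frac{A_{\mathrm{img}}(Z_{1})}{A_{\mathrm{img}}(Z_{2})} = \left(\frac{Z_{2}}{Z_{1}}\right)^{2},
\qquad\text{e.g.}\quad \left(\frac{1.3\ \mathrm{m}}{1.1\ \mathrm{m}}\right)^{2} \approx 1.40 .
$$

Under these assumptions, an animal rising 0.2 m toward a camera 1.3 m away would make its tag appear about 40% larger, enough to push it across fixed pixel-area thresholds such as those used for tag selection in section 6.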

The purpose of this protocol is to provide a user-friendly methodology for tracking multiple tagged animals over a long period of time (i.e., days or weeks) in a 2D context. The methodological approach is based on the use of open source software and hardware. Free and open source software permits adaptations, modifications, and free redistribution; therefore, the generated software improves at each step11,12.

The protocol presented here focuses on a laboratory setup to track and evaluate the locomotor activity of four aquatic animals in a tank for 5 days. The video files are recorded as 1 s time-lapse images and compiled into a video at 20 frames per second (1 recorded day occupies approximately 1 h of video). All video recordings are automatically postprocessed with computer vision methods and algorithms to obtain animal positions. The protocol yields large amounts of tracking data without manual annotation, which has been shown to be time-intensive and laborious in previous experimental papers13.
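The "approximately 1 h" figure follows from simple frame arithmetic. The Python sketch below (constant and function names are our own) makes it explicit: one frame per second gives 86,400 frames per day, which at 20 frames per second play back in 4,320 s, i.e., 1.2 h of video per recorded day.

```python
# Frame-budget arithmetic for the time-lapse settings described above:
# one frame captured every 1 s, compiled into a video played at 20 fps.
CAPTURE_INTERVAL_S = 1.0   # seconds between captured frames
PLAYBACK_FPS = 20          # frames per second in the compiled video
SECONDS_PER_DAY = 24 * 60 * 60


def frames_per_day(interval_s=CAPTURE_INTERVAL_S):
    """Number of frames captured during one day of recording."""
    return int(SECONDS_PER_DAY / interval_s)


def playback_hours(n_frames, fps=PLAYBACK_FPS):
    """Duration, in hours, of a video holding n_frames played at fps."""
    return n_frames / fps / 3600.0


if __name__ == "__main__":
    n = frames_per_day()
    print(n, round(playback_hours(n), 2))
    # A 5-day trial therefore yields 5 * n frames, about 6 h of video.
```

The same two functions can be used to budget disk space or processing time before changing the capture interval for a faster or slower species.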

We use the Norway lobster (Nephrops norvegicus) for the case study; thus, we provide species-specific laboratory conditions to maintain them. Lobsters perform well-studied burrow emergence rhythms that are under the control of the circadian clock14,15, and when cohoused, they form dominance hierarchies16,17. Hence, the model presented here is a good example for researchers interested in the social modulation of behavior with a specific focus on circadian rhythms.

The methodology presented here is easily reproduced and can be applied to other species if there is a possibility to distinguish between animals with individual tags. The minimum requirements for reproducing such an approach in the laboratory are (i) isothermal rooms for the experimental setup; (ii) a continuous water supply; (iii) water temperature control mechanisms; (iv) a light control system; (v) a USB camera and a standard computer.

In this protocol, we use Python18 and OpenCV19 (Open Source Computer Vision Library). We rely on fast and commonly applied operations (both in terms of implementation and execution), such as background subtraction20 and image thresholding21,22.
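The two core operations can be illustrated with a short NumPy sketch. In the protocol they come from OpenCV (the BackgroundSubtractorMOG2 background model, and Otsu thresholding, e.g., cv2.threshold with the cv2.THRESH_OTSU flag). Here, as a simplification, background subtraction is a plain difference against a fixed background image with a hypothetical threshold (min_diff=25) rather than MOG2's Gaussian mixture, and Otsu's method is written out explicitly so that the choice of threshold is visible.

```python
import numpy as np


def motion_mask(frame, background, min_diff=25):
    """Boolean mask of pixels whose gray level differs from the background
    by more than min_diff (a fixed, hypothetical threshold)."""
    diff = np.abs(frame.astype(np.float64) - background.astype(np.float64))
    return diff > min_diff


def otsu_threshold(gray):
    """Otsu's threshold for a uint8 image: the level t that maximizes the
    between-class variance of the classes {<= t} and {> t}."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    levels = np.arange(256, dtype=np.float64)
    w0 = np.cumsum(hist)                    # pixel count at or below t
    w1 = gray.size - w0                     # pixel count above t
    s0 = np.cumsum(hist * levels)           # sum of gray levels at or below t
    mu0 = np.divide(s0, w0, out=np.zeros(256), where=w0 > 0)
    mu1 = np.divide(s0[-1] - s0, w1, out=np.zeros(256), where=w1 > 0)
    between = w0 * w1 * (mu0 - mu1) ** 2    # proportional to between-class variance
    return int(np.argmax(between))


def binarize_otsu(gray):
    """True where a pixel is brighter than the Otsu threshold."""
    return gray > otsu_threshold(gray)
```

Otsu's method needs no hand-tuned gray level, which is convenient when illumination differs across the tank; the fixed min_diff in the motion mask is exactly the kind of parameter that an adaptive model such as MOG2 avoids.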


Protocol

The species used in this study is not an endangered or protected species. Sampling and laboratory experiments followed the Spanish legislation and internal institutional (ICM-CSIC) regulations regarding animal welfare. Animal sampling was conducted with the permission of the local authority (Regional Government of Catalonia).

1. Animal Maintenance and Sampling

NOTE: The following protocol assumes that researchers can sample N. norvegicus in the field during the night to avoid damage to the photoreceptors23. Exposure of N. norvegicus to sunlight must be avoided. After sampling, the lobsters should be housed in an acclimation facility similar to the one reported previously17,24, with a continuous flow of refrigerated seawater (13 °C). The animals used in this study are males at the intermoult stage with a cephalothorax length (CL; mean ± SD) of 43.92 ± 2.08 mm (N = 4).

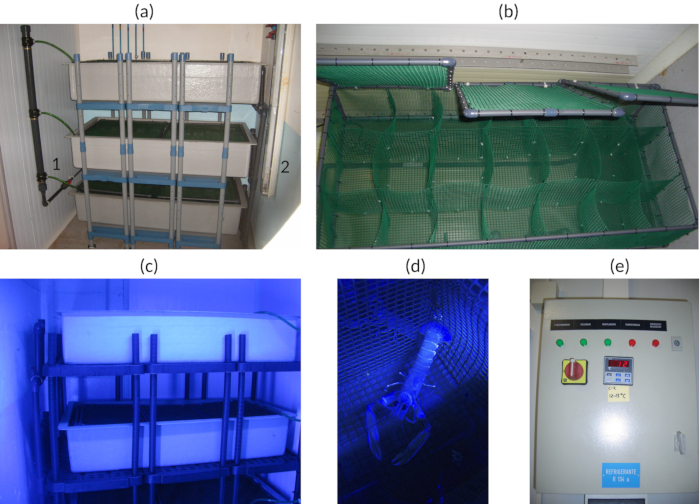

- Keep the individuals in isolated compartments to avoid injuries from fights (see Figure 1a-d).

- Feed them about three times a week at random times so as not to interfere with their circadian rhythms.

NOTE: In this experiment, mussels (approximately 4 g per lobster) were used as food. The mussels were bought from frozen-food suppliers and were suitable for human consumption.

- Use blue light (425-515 nm) to simulate the light hours, according to the spectral sensitivity of the species25 and the environmental conditions at 400 m depth26 (see Figure 1c,d).

NOTE: The facility used here has two blue (478 nm) fluorescent lamps mounted on the ceiling that produce a light intensity of 12 lx at 1 m from the lamps. See Figure 1a for the position of the ceiling lamps and the Table of Materials for the lamps' manufacturer and technical characteristics.

- Adjust the photoperiod of the acclimation facility to 12/12 h light/darkness or simulate the natural photoperiod of the local latitude.

- Regulate the facility temperature to 13 °C and monitor it twice daily to check that the temperature of the inflowing seawater is around 13 °C (see Figure 1e).

- Regulate the inflow of seawater at a rate of about 4 L/min to maintain good oxygenation.

NOTE: The seawater circulates in an open circuit (no filters or additional pumps are used). The water supply depends on the main aquarium plant services.

Figure 1: Views of the acclimation facility. (a) Tank shelves. (a1) Seawater input. (a2) Fluorescent ceiling lights. (b) Detail of the blue light illumination. (c) Detail of an animal cell. (d) Detail of the control panel of an isolated facility. (e) Temperature setting for one of the entrances.

2. Tag Construction

NOTE: The tag used here can be changed according to the characteristics of the target animal or other specific considerations.

- Cut four circles of 40 mm in diameter from a black plastic sheet.

- Cut two equilateral triangles with 26 mm sides from a white PVC plastic sheet.

- Cut two circles of 26 mm in diameter from a white PVC plastic sheet.

- Mark the centers of one white triangle and one white circle, and make a 10 mm hole in each.

- Glue the four white shapes to the center of the four black circles.

Figure 2: The four tags used for the individual tagging of the lobsters: circle, circle-hole, triangle, and triangle-hole.
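Because the holed triangle relies on a 10 mm hole fitting inside a 26 mm triangle, a quick geometric check is useful whenever the tag dimensions are changed. The Python sketch below (function names are our own) computes the white area of each tag type and the inradius of the equilateral triangle (the radius of the largest circle centered in it). With 26 mm sides the inradius is about 7.5 mm, leaving roughly 2.5 mm of white plastic around a centered 10 mm hole.

```python
import math


def circle_area(diameter_mm):
    """Area of a circle from its diameter, in mm^2."""
    return math.pi * (diameter_mm / 2.0) ** 2


def triangle_area(side_mm):
    """Area of an equilateral triangle from its side length, in mm^2."""
    return math.sqrt(3.0) / 4.0 * side_mm ** 2


def triangle_inradius(side_mm):
    """Radius of the largest circle centered in an equilateral triangle."""
    return side_mm / (2.0 * math.sqrt(3.0))


SIDE, DIAMETER, HOLE = 26.0, 26.0, 10.0   # dimensions from the steps above (mm)

if __name__ == "__main__":
    margin = triangle_inradius(SIDE) - HOLE / 2.0
    print(f"triangle inradius {triangle_inradius(SIDE):.2f} mm, margin {margin:.2f} mm")
    for name, area in [("circle", circle_area(DIAMETER)),
                       ("circle-hole", circle_area(DIAMETER) - circle_area(HOLE)),
                       ("triangle", triangle_area(SIDE)),
                       ("triangle-hole", triangle_area(SIDE) - circle_area(HOLE))]:
        print(f"{name:14s} white area {area:6.1f} mm^2")
```

A positive margin is required for the hole to lie entirely inside the triangle; a thin margin also risks the white outline breaking up after thresholding.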

3. Experimental Setup

NOTE: The experimental arena should be located in an experimental chamber that is separate from, but close to, the acclimation facility.

- Set up an experimental chamber where the air temperature can be controlled and maintained at the same temperature as the seawater in the experimental arena.

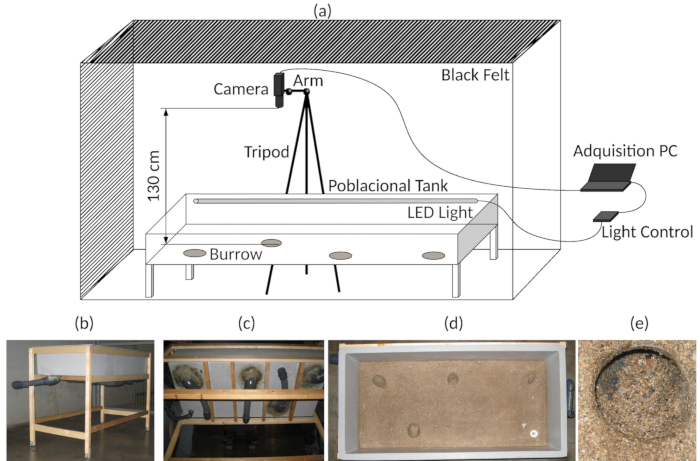

- Modify a fiberglass tank (1,500 x 700 x 300 mm) to serve as the experimental arena. Add four burrows made of flexible PVC pipes at the bottom of the tank, and glue sand onto the surface where the lobsters will move (Figure 3b-e). For more details, see previous work17,27.

- Provide the experimental arena with submersible blue LEDs (472 nm, simulating light hours) and IR LEDs (850 nm, for dark conditions) (see also Figure 3a)17,24.

NOTE: LED light is used because of its low heat output and the availability of electronic control and free hardware. An isolated facility with an air and seawater temperature of 13 ± 0.5 °C was used.

- Always keep the IR LEDs switched on.

NOTE: IR light is needed for video recording in both dark and light conditions, so it does not need to be switched off.

- Connect the blue LEDs to a device that manages the photoperiod. See the suggestions in the Table of Materials and, for more details, Sbragaglia et al.17 (also shown in Figure 3a).

NOTE: Illumination is a critical factor in automated video or image analysis. Even illumination over the whole arena, without shadows and without reflections from the water surface, makes the subsequent video or image analysis easier. In this protocol, only 12/12 light/darkness conditions were used. Light and darkness were reached gradually over 30 min; a light-controller script is provided as Supplementary File 1.

- Place the chilled seawater inlet at one corner of the tank and the corresponding outlet at the opposite corner.

- Regulate the seawater input at a flow rate of about 4 L/min.

- Surround the tank with a black curtain to isolate it fully from other light sources (Figure 3a).


- Place the tripod holding the web camera beside the experimental arena, and position the camera 130 cm above the center of the experimental arena (75 cm x 32.5 cm; see Figure 3a).

- Check that the video camera is still centered (see step 3.3) to make sure it has not been moved accidentally.

- Connect the web camera to a computer that is placed outside the curtain (Figure 3a).

- Install the software to manage the time-lapse recording with the video camera.

NOTE: The appropriate time-lapse interval depends on how fast the species moves. See also the Table of Materials for the characteristics and manufacturers of the camera, fisheye lens, PC, and software used here.

- Adjust the video recording parameters according to the characteristics of the species.

NOTE: Given the mobility rate of N. norvegicus, a 1 s time-lapse interval was used here, and the video was saved every 24 h.

- Make sure to include a timestamp (with the date) in the time-lapse video, as this helps with later manual scoring of the behavior.


Figure 3: Experimental setup. (a) Diagram of the assembly of the experimental tank and video acquisition. (b) General view of the experimental tank. (c) Bottom view of the experimental tank, indicating the artificial burrows. (d) Top view, showing the bottom of the experimental tank. (e) Detail of one of the burrow entrances.
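The authors' light controller (Supplementary File 1, driving the blue LEDs through the Arduino and MOSFET listed in the Table of Materials) is not reproduced here. As an illustration only, the Python sketch below shows one way to compute a gradual 30 min transition for a 12/12 photoperiod. The lights-on time (08:00), the lights-off time (20:00), the linear ramp shape, and the mapping to an 8-bit PWM level (the 0-255 range accepted by Arduino's analogWrite()) are all assumptions.

```python
from datetime import time

RAMP_MINUTES = 30         # dawn/dusk transition length stated in the protocol
LIGHTS_ON = time(8, 0)    # hypothetical start of the 12 h light phase
LIGHTS_OFF = time(20, 0)  # hypothetical start of the 12 h dark phase


def _minutes(t):
    """Minutes since midnight for a datetime.time."""
    return t.hour * 60 + t.minute + t.second / 60.0


def blue_intensity(now, on=LIGHTS_ON, off=LIGHTS_OFF, ramp=RAMP_MINUTES):
    """Relative blue-LED intensity in [0, 1] at clock time `now`.

    Ramps linearly up for `ramp` minutes from `on`, stays at 1 until `off`,
    then ramps linearly down for `ramp` minutes. Assumes on < off within the
    same day (no wrap past midnight)."""
    m, m_on, m_off = _minutes(now), _minutes(on), _minutes(off)
    if m < m_on or m >= m_off + ramp:
        return 0.0
    if m < m_on + ramp:
        return (m - m_on) / ramp
    if m < m_off:
        return 1.0
    return 1.0 - (m - m_off) / ramp


def pwm_value(intensity, max_value=255):
    """Map an intensity in [0, 1] to an 8-bit PWM level (0-255)."""
    return round(min(1.0, max(0.0, intensity)) * max_value)
```

A controller loop would evaluate blue_intensity() at short intervals and write pwm_value() to the LED driver; a linear ramp in PWM duty cycle is not necessarily linear in perceived or measured light intensity, so the ramp shape may need calibration with a light meter.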

4. Experimental Trial and Animal Preparation

NOTE: All steps with animals must be done in the acclimation facility and under red light conditions according to the spectral sensitivity of the Norway lobster25. When moving the animals between the acclimation and the experimental facility, avoid any exposure of the lobsters to light, using an opaque black bag to cover the icebox.

- Prepare an icebox divided into four submerged compartments, filled with water at about 7 °C.

- Prepare the four previously constructed tags and a fast-setting glue, such as cyanoacrylate.

- Prepare a tray with crushed ice.

- Select the four lobsters to be tagged in the acclimation facility and put each of them in a compartment of the icebox.

- Wait 30 min and, then, start the tagging procedure.

- Take a lobster and put it on the crushed ice for 5 min to immobilize it and facilitate the tagging operation.

- Dry the upper part of the lobster's cephalothorax with absorbent paper and put a drop of fast-setting glue on it.

- Place the tag horizontally on top of the animal's cephalothorax, in contact with the glue, and wait for the glue to harden (about 20 s).

- Return the lobster to its compartment in the icebox and proceed with the other three animals in the same way.

- Return the lobsters to the cells they occupied previously and wait 24 h to make sure the tags are firmly attached.

- Transfer the lobsters from the acclimation facility to the experimental chamber using the same icebox that was used for the tagging procedure.

- Launch the video recording and wait 5 min before introducing the tagged lobsters. Obtain an averaged background image from the initial 100 frames.

NOTE: Waiting a minimum of 1 min is mandatory to obtain background frames without tagged lobsters; these frames are needed for video processing.

- Introduce the animals one by one into the experimental tank, each inside its respective compartment with the water still in it.

- Wait for the animals to leave their compartments; if they do not come out, help them gently by tilting the compartment. See Figure 4 for an example of the animals inside the tank under experimental conditions.

Figure 4: Raw video frame. An example of a representative frame from one of the time-lapse videos collected during the experiments. The upper right corner shows the timestamp with the date, time, and frame. Note the differences in tank illumination near the lower corner of the image.
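The averaged background image obtained from the initial 100 frames can be sketched as a pixel-wise mean. With the 1 s time-lapse, 100 frames span 100 s of recording, well within the 5 min wait before the lobsters are introduced. In practice the frames could be read with cv2.VideoCapture and converted to grayscale with cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY); the function below is a NumPy-only sketch with a hypothetical name and does not reproduce the internal model that BackgroundSubtractorMOG2 maintains.

```python
import numpy as np


def averaged_background(frames, n_frames=100):
    """Pixel-wise mean of the first n_frames grayscale frames of an iterable.

    A running float64 sum avoids holding all frames in memory at once."""
    if n_frames < 1:
        raise ValueError("n_frames must be at least 1")
    total, count = None, 0
    for frame in frames:
        if count == n_frames:
            break
        f = np.asarray(frame, dtype=np.float64)
        total = f.copy() if total is None else total + f
        count += 1
    if count < n_frames:
        raise ValueError(f"need {n_frames} frames, got {count}")
    # Round to the nearest gray level and return an 8-bit image.
    return np.rint(total / count).astype(np.uint8)
```

Raising an error when fewer than 100 frames are available makes a too-short lobster-free recording visible immediately instead of silently producing a background averaged over fewer frames.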

5. Video Analysis Script

- Perform the analysis after completion of the experiment.

- Launch the computer vision script for video analysis.

- Launch the Java program to calculate the positions and distances covered by the lobsters and insert the data into the database.

NOTE: This program is based on a Euclidean distance algorithm28.

- Launch the SQL script to bin the data into the desired time interval (e.g., 10 min).
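The authors perform these two steps with a Java program and an SQL script that are not reproduced here. The Python sketch below (Python 3.8 or later for math.dist; all names hypothetical) shows the same idea: sum the Euclidean distances between consecutive detections of each tag, and credit each step to a fixed-width time bin (10 min by default) aligned to midnight.

```python
import math
from collections import defaultdict
from datetime import datetime, timedelta


def path_length(points):
    """Total Euclidean distance along a sequence of (x, y) positions."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def bin_start(ts, bin_minutes):
    """Start of the fixed-width bin (aligned to midnight) containing ts."""
    midnight = ts.replace(hour=0, minute=0, second=0, microsecond=0)
    width = bin_minutes * 60
    seconds = (ts - midnight).total_seconds()
    return midnight + timedelta(seconds=seconds - seconds % width)


def binned_distances(records, bin_minutes=10):
    """records: (timestamp, tag, x, y) tuples in chronological order.

    Returns {(tag, bin_start): distance}, crediting each step between
    consecutive detections of a tag to the bin of the later detection."""
    last_pos = {}
    totals = defaultdict(float)
    for ts, tag, x, y in records:
        if tag in last_pos:
            step = math.dist(last_pos[tag], (x, y))
            totals[(tag, bin_start(ts, bin_minutes))] += step
        last_pos[tag] = (x, y)
    return dict(totals)
```

Distances come out in pixels; converting them to centimeters needs a scale calibrated for the camera setup, which is not given in the text. Because missed detections are bridged by a straight line between the surrounding detections, one may choose to discard steps that span long gaps.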

6. Computer Vision Script for Video Analysis

NOTE: The script does not correct for fisheye distortion because the distortion does not introduce a relevant error in this experimental setup. Nonetheless, it is possible to correct it with the OpenCV camera calibration functions29, which are based on vector and matrix rotation methods30,31.

- Use the Python18 programming language.

- Use the OpenCV19 image and video processing library.

- Load a video.

NOTE: The .avi and .mp4 video formats were used in this experiment, but they are not mandatory; the usable formats depend on the FourCC32 codecs installed in the operating system.

- Perform the following steps for each frame Fi in the video.

- Subtract the background20 B (average of the last 100 frames, obtained from step 4.6) from the current frame Fi, and update the background model B with Fi. Use BackgroundSubtractorMOG2 from the OpenCV19 library (see the scripts in Supplementary File 2).

- Determine the set of regions of interest (ROIs) R from the pixels with relevant motion indicated by the background subtractor. Use the method apply from BackgroundSubtractorMOG2 in the OpenCV19 library (see the scripts in the Supplementary File 2). In the set, include the animal detections from the previous frame, to take into account nonmoving animals.

- Perform the following steps for each ROI Ri:

- Apply the dilate function and compute the contours33 of ROI Ri. Use the functions dilate and findContours from the OpenCV19 library (see the scripts in the Supplementary File 2).

- Compute the convex hull area34 hi, in pixels. Use the function convexHull from the OpenCV19 library (see the scripts in Supplementary File 2).

- Compute the radius35 ri of the ROI Ri. Use the function minEnclosingCircle from the OpenCV19 library (see the scripts in the Supplementary File 2).

- Compute the solidity si of the ROI Ri. Solidity is the ratio of the contour area (obtained in step 6.4.3.1) to its convex hull area (obtained in step 6.4.3.2) of the Ri.

- Compute the aspect ratio ai of the ROI Ri. Aspect ratio is the ratio between the width and the height of the Ri-bounding rectangle. The bounding rectangle is computed using the function boundingRect from the OpenCV19 library.

- Select a reduced set of ROIs as candidates to contain the animals by filtering on hull area, radius, solidity, and aspect ratio.

- Check if hi is less than 500.0 or greater than 100000.0. If so, discard the ROI Ri. Otherwise, keep the Ri as a candidate ROI for the animal location.

- Check if the ri is less than 40.0. If so, discard the ROI Ri. Otherwise, keep the Ri as a candidate ROI for the animal location.

- Check if si is less than -4.0. If so, discard the ROI Ri. Otherwise, keep Ri as a candidate ROI for the animal location.

- Check if ai is less than 0.15 or greater than 4.0. If so, discard the ROI Ri. Otherwise, keep Ri as a candidate ROI for the animal location.

NOTE: The use of ROIs reduces the computational cost, focusing the tag search on the animal's body region. Animal detections from previous frames are included to avoid wrong detections when the animals are not moving.

- Analyze the animal ROIs to determine the tag identities. For each ROI Ri, extract the internal ROIs P, and execute the following steps for each internal ROI Pi.

- Binarize the grayscale image Pi using the Otsu36 thresholding algorithm.

- Compute the contours33 of Pi, as in step 6.4.3.1.

- Compute the hull area34 hi and the aspect ratio ai, as in steps 6.4.3.2 and 6.4.3.5.

- Compute the shape moments37,38 mi of Pi. Use the function moments from the OpenCV19 library (see the scripts in the Supplementary File 2).

- Select a reduced set of ROIs as a candidate to contain the tags, using the following criteria.

- Check if hi is less than 150.0 or greater than 500.0. If so, discard the ROI Pi. Otherwise, keep the Pi as a candidate ROI for the tag location.

- Check if ai is less than 0.5 or greater than 1.5. If so, discard the ROI Pi. Otherwise, keep Pi as a candidate ROI for the tag location.

- Check if mi is greater than 0.3. If so, discard the ROI Pi. Otherwise, keep Pi as a candidate ROI for the tag location.

- Classify the tag ROIs. For each selected ROI Pi, approximate a polygon39 using the corresponding OpenCV19 function38.

- If the approximated polygon has exactly three vertices, assign the tag to the triangle class. Otherwise, assign it to the circle class.

NOTE: The approximated polygon is stored as a matrix of its vertices.

- Check the central pixel of the ROI Pi. If it is black, assign Pi to the holed class; otherwise, assign Pi to the white class.

NOTE: The shape center is derived from the moments calculated in step 6.4.5.4. Search for black pixels within a 4-pixel radius of the center.


- Save the frame data: frame date, frame time, shape class, and the x and y coordinates of the shape center.

- Continue with the next frame or end the process. See Figure 5 for a visual example of the script steps and Video 1 for an example of the script in operation.
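The ROI-level descriptors and the animal-candidate filter can be sketched in pure Python for a contour given as a list of (x, y) vertices. This is an illustration under assumptions, not the authors' script (Supplementary File 2): the convex hull is computed with Andrew's monotone-chain algorithm, areas with the shoelace formula (the polygon area that cv2.contourArea also reports, rather than a raw pixel count), the bounding-box width and height follow the inclusive-pixel convention of cv2.boundingRect, and the enclosing-circle radius is taken as an input (in the protocol it comes from cv2.minEnclosingCircle). The thresholds are those listed in the steps above; the function names are hypothetical.

```python
def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counterclockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns the hull vertices in order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Shoelace formula for a simple polygon given as ordered vertices."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def roi_properties(contour):
    """Hull area, solidity, and aspect ratio (width / height) of one contour."""
    hull_area = polygon_area(convex_hull(contour))
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    width = max(xs) - min(xs) + 1     # inclusive pixel extent
    height = max(ys) - min(ys) + 1
    solidity = polygon_area(contour) / hull_area if hull_area > 0 else 0.0
    return {"hull_area": hull_area, "solidity": solidity, "aspect": width / height}


def is_animal_candidate(hull_area, radius, solidity, aspect):
    """Animal-ROI filter with the thresholds from the protocol steps."""
    if hull_area < 500.0 or hull_area > 100000.0:
        return False
    if radius < 40.0:
        return False
    if solidity < -4.0:   # value as stated; solidity itself lies in [0, 1]
        return False
    if aspect < 0.15 or aspect > 4.0:
        return False
    return True
```

Solidity separates compact blobs from ragged ones: an L-shaped region of area 300 has a convex hull of area 350, so its solidity is about 0.86, whereas a filled square has a solidity of 1.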

Figure 5: Relevant steps of the video-processing script. (1) Evaluate background-subtraction motion over the mean of the last 100 frames. (2) Result of the background subtraction algorithm. (3) Apply a dilation morphological operation to the white detected areas. (4) Apply a fixed, static main ROI; the yellow polygon corresponds to the tank bottom area. (5) Calculate the contours of each white detected region in the main ROI and perform a structural analysis of each detected contour. (6) Check the structural property values and then select the second-level ROI candidates. (7) Binarize the frame using Otsu's thresholding algorithm; the script works only with second-level ROIs. (8) For each binarized second-level ROI, calculate the contours of the white regions and perform a structural analysis of each detected contour. (9) Check the structural property values and then select the internal ROI candidates. (10) For each contour in the internal ROI candidates, calculate the descriptors/moments. (11) Check whether the detected shape matches the model shape and approximate a polygon to the best-matching candidates. (12) Check the number of vertices of the approximated polygon and determine the geometric figure: circle or triangle. (13) Calculate the figure's center and check whether black pixels occur; if so, it is a holed figure. (14) Visual result after frame analysis.
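The tag-level decisions (the selection thresholds, triangle versus circle by vertex count, and holed versus white by looking for black pixels within 4 pixels of the moment-derived center) can be sketched in plain Python as follows. All names are hypothetical. The vertex count would come from OpenCV, for example len(cv2.approxPolyDP(contour, epsilon, True)), where the epsilon used by the authors is not stated; m_value stands for the moment-based quantity compared against 0.3 in the text, whose exact definition is not given here. The centroid uses the standard relations cx = m10/m00 and cy = m01/m00 on a dictionary shaped like the output of cv2.moments.

```python
def centroid(m):
    """Shape center from spatial moments (dict with keys m00, m10, m01,
    as returned by cv2.moments), rounded to the nearest pixel."""
    return int(round(m["m10"] / m["m00"])), int(round(m["m01"] / m["m00"]))


def is_tag_candidate(hull_area, aspect, m_value):
    """Tag-selection criteria with the thresholds stated in the protocol."""
    if hull_area < 150.0 or hull_area > 500.0:
        return False
    if aspect < 0.5 or aspect > 1.5:
        return False
    if m_value > 0.3:
        return False
    return True


def classify_tag(n_vertices, binary, center, search_radius=4):
    """Return 'triangle', 'triangle-hole', 'circle', or 'circle-hole'.

    binary: 2D array-like after Otsu binarization (0 = black, nonzero = white).
    center: (cx, cy) pixel coordinates of the shape center."""
    shape = "triangle" if n_vertices == 3 else "circle"
    cx, cy = center
    rows, cols = len(binary), len(binary[0])
    # Any black pixel inside the search disc marks the tag as holed.
    for y in range(max(0, cy - search_radius), min(rows, cy + search_radius + 1)):
        for x in range(max(0, cx - search_radius), min(cols, cx + search_radius + 1)):
            inside = (x - cx) ** 2 + (y - cy) ** 2 <= search_radius ** 2
            if inside and binary[y][x] == 0:
                return shape + "-hole"
    return shape
```

Searching a small disc rather than the single central pixel makes the holed/white decision tolerant to a center that is off by a pixel or two, at the cost of requiring that the hole, once imaged, is wider than the search disc's offset from the true center.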


Results

We manually constructed a subset of the experimental data to validate the automated video analysis. A sample of 1,308 frames, with a confidence level of 99% (a measure of how reliably the sample reflects the population within its margin of error) and a margin of error of 4% (a measure of how close the sample's results are expected to be to the true population value), was randomly selected, and a manual annotation of the correct...


Discussion

The performance and representative results obtained with the video-tracking protocol support its validity for applied research on animal behavior, with a specific focus on the social modulation and circadian rhythms of cohoused animals. The efficiency of animal detection (69%) and the accuracy of tag discrimination (89.5%), together with the behavioral characteristics (i.e., movement rate) of the target species used here, suggest that this protocol is well suited to long-term experimental trials (e.g., da...


Disclosures

The authors have nothing to disclose.

Acknowledgements

The authors are grateful to Dr. Joan B. Company, who funded the publication of this work. The authors are also grateful to the technicians of the experimental aquarium zone at the Institute of Marine Sciences in Barcelona (ICM-CSIC) for their help during the experimental work.

This work was supported by the RITFIM project (CTM2010-16274; principal investigator: J. Aguzzi), funded by the Spanish Ministry of Science and Innovation (MICINN), and by the TIN2015-66951-C2-2-R grant from the Spanish Ministry of Economy and Competitiveness.


Materials

| Name | Company | Catalog Number | Comments |
|---|---|---|---|
| Tripod 475 | Manfrotto | A0673528 | Discontinued |
| Articulated Arm 143 | Manfrotto | D0057824 | Discontinued |
| Camera USB 2.0 uEye LE | iDS | UI-1545LE-M | https://en.ids-imaging.com/store/products/cameras/usb-2-0-cameras/ueye-le.html |
| Fisheye Lens C-mount f = 6 mm/F1.4 | Infaimon | Standard Optical | https://www.infaimon.com/es/estandar-6mm |
| Glass Fiber Tank 1500 x 700 x 300 mm | | | |
| Black Felt Fabric | | | |
| Wood Structure Tank | | | 5 wood strips 50 x 50 x 250 mm |
| Wood Structure Felt Fabric | | | 10 wood strips 25 x 25 x 250 mm |
| Stainless Steel Screws | | | As many as necessary to fix the wood strip structures |
| PC | | | 2-core CPU, 4 GB RAM, 1 GB graphics, 500 GB HD |
| External Storage HDD | | | 2 TB capacity desirable |
| iSPY Software for Windows PC | iSPY | | https://www.ispyconnect.com/download.aspx |
| ZoneMinder Software for Linux PC | ZoneMinder | | https://zoneminder.com/ |
| OpenCV 2.4.13.6 Library | OpenCV | | https://opencv.org/ |
| Python 2.4 | Python | | https://www.python.org/ |
| Camping Icebox | | | |
| Plastic Tray | | | |
| Cyanoacrylate Gel | | | To glue the tags |
| 1 black PVC plastic sheet (1 mm thickness) | | | Tag construction |
| 1 white PVC plastic sheet (1 mm thickness) | | | Tag construction |
| 4 Tags Ø 40 mm | | | Made with black and white PVC plastic sheets |
| 3 m Blue Strip LED Lights (480 nm) | | | Waterproof as desirable |
| 3 m IR Strip LED Lights (850 nm) | | | Waterproof as desirable |
| 6 m Methacrylate Pipes Ø 15 mm | | | Enclosed strip LEDs |
| 4 PVC Elbows 45° Ø 63 mm | | | Burrow construction |
| 3 m Flexible PVC Pipe Ø 63 mm | | | Burrow construction |
| 4 PVC Screw Caps Ø 63 mm | | | Burrow construction |
| 4 O-rings Ø 63 mm | | | Burrow construction |
| 4 Female PVC Sockets, glue/thread, Ø 63 mm | | | Burrow construction |
| 10 m DC 12 V Electric Cable | | | Light control mechanism |
| Light Power Supply DC 12 V 300 W | | | Light control mechanism |
| MOSFET, RFD14N05L, N-Channel, 14 A, 50 V, 3-Pin, IPAK (TO-251) | RS Components | 325-7580 | Light control mechanism |
| Diode, 1N4004-E3/54, 1 A, 400 V, DO-204AL, 2-Pin | RS Components | 628-9029 | Light control mechanism |
| Fuse Holder | RS Components | 336-7851 | Light control mechanism |
| 2-Way Power Terminal 3.81 mm | RS Components | 220-4658 | Light control mechanism |
| Capacitor 220 µF 200 V | RS Components | 440-6761 | Light control mechanism |
| Resistor 2.2 kΩ 7 W | RS Components | 485-3038 | Light control mechanism |
| Fuse 6.3 x 32 mm, 3 A | RS Components | 413-210 | Light control mechanism |
| Arduino Uno Atmel ATmega328 MCU board | RS Components | 715-4081 | Light control mechanism |
| Prototype Board CEM3,3 orific., RE310S2 | RS Components | 728-8737 | Light control mechanism |
| DC/DC Converter, 12 Vin, +/-5 Vout, 100 mA, 1 W | RS Components | 689-5179 | Light control mechanism |
| 2 SERA T8 blue moonlight fluorescent bulbs, 36 W | SERA | | Discontinued; light for the isolated facility |

References

- Dell, A. I., et al. Automated image-based tracking and its application in ecology. Trends in Ecology & Evolution. 29 (7), 417-428 (2014).

- Berman, G. J., Choi, D. M., Bialek, W., Shaevitz, J. W. Mapping the stereotyped behaviour of freely moving fruit flies. Journal of The Royal Society Interface. 11 (99) (2014).

- Mersch, D. P., Crespi, A., Keller, L. Tracking Individuals Shows Spatial Fidelity Is a Key Regulator of Ant Social Organization. Science. 340 (6136), 1090 (2013).

- Hedrick, T. L. Software techniques for two- and three-dimensional kinematic measurements of biological and biomimetic systems. Bioinspiration & Biomimetics. 3 (3), 034001 (2008).

- Branson, K., Robie, A. A., Bender, J., Perona, P., Dickinson, M. H. High-throughput ethomics in large groups of Drosophila. Nature Methods. 6 (6), 451-457 (2009).

- de Chaumont, F., et al. Computerized video analysis of social interactions in mice. Nature Methods. 9, 410 (2012).

- Pérez-Escudero, A., Vicente-Page, J., Hinz, R. C., Arganda, S., de Polavieja, G. G. idTracker: tracking individuals in a group by automatic identification of unmarked animals. Nature Methods. 11 (7), 743-748 (2014).

- Fiala, M. ARTag, a fiducial marker system using digital techniques. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05). 2, 590-596 (2005).

- Atcheson, B., Heide, F., Heidrich, W. CALTag: High Precision Fiducial Markers for Camera Calibration. In: Koch, R., Kolb, A., Rezk-Salama, C. (eds.) Vision, Modeling, and Visualization. (2010).

- Crall, J. D., Gravish, N., Mountcastle, A. M., Combes, S. A. BEEtag: A Low-Cost, Image-Based Tracking System for the Study of Animal Behavior and Locomotion. PLOS ONE. 10 (9), e0136487 (2015).

- Charpentier, R. Free and Open Source Software: Overview and Preliminary Guidelines for the Government of Canada. Open Source Business Resource. (2008).

- Crowston, K., Wei, K., Howison, J. Free/Libre Open Source Software Development: What We Know and What We Do Not Know. ACM Computing Surveys. 37 (2012).

- Edmonds, N. J., Riley, W. D., Maxwell, D. L. Predation by Pacifastacus leniusculus on the intra-gravel embryos and emerging fry of Salmo salar. Fisheries Management and Ecology. 18 (6), 521-524 (2011).

- Sbragaglia, V., et al. Identification, Characterization, and Diel Pattern of Expression of Canonical Clock Genes in Nephrops norvegicus (Crustacea: Decapoda) Eyestalk. PLOS ONE. 10 (11), e0141893 (2015).

- Sbragaglia, V., et al. Dusk but not dawn burrow emergence rhythms of Nephrops norvegicus (Crustacea: Decapoda). Scientia Marina. 77 (4), 641-647 (2013).

- Katoh, E., Sbragaglia, V., Aguzzi, J., Breithaupt, T. Sensory Biology and Behaviour of Nephrops norvegicus. Advances in Marine Biology. 64, 65-106 (2013).

- Sbragaglia, V., Leiva, D., Arias, A., Antonio García, J., Aguzzi, J., Breithaupt, T. Fighting over burrows: the emergence of dominance hierarchies in the Norway lobster (Nephrops norvegicus). The Journal of Experimental Biology. 220 (24), 4624-4633 (2017).

- Welcome to Python.org. https://www.python.org/ (2018).

- Bradski, G. OpenCV Library. Dr. Dobb’s Journal of Software Tools. (2000).

- Piccardi, M. Background subtraction techniques: a review. 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No.04CH37583). 4, 3099-3104 (2004).

- Sezgin, M., Sankur, B. Survey over image thresholding techniques and quantitative performance evaluation. Journal of Electronic Imaging. 13 (1), 146 (2004).

- Lai, Y. K., Rosin, P. L. Efficient Circular Thresholding. IEEE Transactions on Image Processing. 23 (3), 992-1001 (2014).

- Gaten, E. Light‐induced damage to the dioptric apparatus of Nephrops norvegicus (L.) and the quantitative assessment of the damage. Marine Behaviour and Physiology. 13 (2), 169-183 (1988).

- Sbragaglia, V., et al. An automated multi-flume actograph for the study of behavioral rhythms of burrowing organisms. Journal of Experimental Marine Biology and Ecology. 446, 177-186 (2013).

- Johnson, M. L., Gaten, E., Shelton, P. M. J. Spectral sensitivities of five marine decapod crustaceans and a review of spectral sensitivity variation in relation to habitat. Journal of the Marine Biological Association of the United Kingdom. 82 (5), 835-842 (2002).

- Markager, S., Vincent, W. F. Spectral light attenuation and the absorption of UV and blue light in natural waters. Limnology and Oceanography. 45 (3), 642-650 (2000).

- Aguzzi, J., et al. A New Laboratory Radio Frequency Identification (RFID) System for Behavioural Tracking of Marine Organisms. Sensors. 11 (10), 9532-9548 (2011).

- Audin, M. Geometry. Springer. Berlin, Heidelberg. (2003).

- OpenCV Team. Structural Analysis and Shape Descriptors - OpenCV 2.4.13.7 documentation. https://docs.opencv.org/2.4/modules/imgproc/doc/structural_analysis_and_shape_descriptors.html?highlight=findcontours#void%20HuMoments(const%20Moments&%20m,%20OutputArray%20hu) (2018).

- Slabaugh, G. G. Computing Euler angles from a rotation matrix. 7 (1999).

- Zhang, Z. A flexible new technique for camera calibration. IEEE Transactions on Pattern Analysis and Machine Intelligence. 22 (11), 1330-1334 (2000).

- www.FOURCC.org - Video Codecs and Pixel Formats. https://www.fourcc.org/ (2018).

- Suzuki, S., Abe, K. Topological structural analysis of digitized binary images by border following. Computer Vision, Graphics, and Image Processing. 30 (1), 32-46 (1985).

- Sklansky, J. Finding the convex hull of a simple polygon. Pattern Recognition Letters. 1 (2), 79-83 (1982).

- Fitzgibbon, A., Fisher, R. A Buyer’s Guide to Conic Fitting. Proceedings of the British Machine Vision Conference (BMVC). 51.1-51.10 (1995).

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Transactions on Systems, Man, and Cybernetics. 9 (1), 62-66 (1979).

- Hu, M. K. Visual pattern recognition by moment invariants. IRE Transactions on Information Theory. 8 (2), 179-187 (1962).

- OpenCV Team. Structural Analysis and Shape Descriptors - OpenCV 2.4.13.6 documentation. https://docs.opencv.org/2.4/modules/imgproc/doc/structural_analysis_and_shape_descriptors.html?highlight=cvmatchshapes#humoments (2018).

- Douglas, D. H., Peucker, T. K. Algorithms for the Reduction of the Number of Points Required to Represent a Digitized Line or its Caricature. Cartographica: The International Journal for Geographic Information and Geovisualization. 10 (2), 112-122 (1973).

- Vanajakshi, B., Krishna, K. S. R. Classification of boundary and region shapes using Hu-moment invariants. Indian Journal of Computer Science and Engineering. 3, 386-393 (2012).

- Kahle, D., Wickham, H. ggmap: Spatial Visualization with ggplot2. The R Journal. 144-162 (2013).

- Venables, W. N., Ripley, B. D. Modern Applied Statistics with S. Springer. New York. (2010).

- Abbas, Q., Ibrahim, M. E. A., Jaffar, M. A. A comprehensive review of recent advances on deep vision systems. Artificial Intelligence Review. (2018).

- Menesatti, P., Aguzzi, J., Costa, C., García, J. A., Sardà, F. A new morphometric implemented video-image analysis protocol for the study of social modulation in activity rhythms of marine organisms. Journal of Neuroscience Methods. 184 (1), 161-168 (2009).

- Chapman, C. J., Shelton, P. M. J., Shanks, A. M., Gaten, E. Survival and growth of the Norway lobster Nephrops norvegicus in relation to light-induced eye damage. Marine Biology. 136 (2), 233-241 (2000).

- Video tracking software | EthoVision XT. https://www.noldus.com/animal-behavior-research/products/ethovision-xt (2018).

- Correll, N., Sempo, G., Meneses, Y. L. D., Halloy, J., Deneubourg, J., Martinoli, A. SwisTrack: A Tracking Tool for Multi-Unit Robotic and Biological Systems. 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems. 2185-2191 (2006).

- MATLAB - MathWorks. https://www.mathworks.com/products/matlab.html (2018).

- Leggat, P. A., Smith, D. R., Kedjarune, U. Surgical Applications of Cyanoacrylate Adhesives: A Review of Toxicity. ANZ Journal of Surgery. 77 (4), 209-213 (2007).

- Dizon, R. M., Edwards, A. J., Gomez, E. D. Comparison of three types of adhesives in attaching coral transplants to clam shell substrates. Aquatic Conservation: Marine and Freshwater Ecosystems. 18 (7), 1140-1148 (2008).

- Cary, R. Methyl cyanoacrylate and ethyl cyanoacrylate. World Health Organization. Geneva. (2001).

- Krizhevsky, A., Sutskever, I., Hinton, G. E. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. http://papers.nips.cc/paper/4824-imagenet-classification-w, 1097-1105 (2012).

Copyright © 2025 MyJoVE Corporation. All rights reserved.