
Cereal Crop Ear Counting in Field Conditions Using Zenithal RGB Images


Summary

We present a protocol for counting durum wheat and barley ears using natural color (RGB) digital photographs taken in natural sunlight under field conditions. With minimal adjustments for camera parameters, and within some environmental constraints, the technique provides precise and consistent results across a range of growth stages.

Abstract

Ear density, or the number of ears per square meter (ears/m2), is a central focus in breeding programs for many cereal crops, such as wheat and barley, as it is an important agronomic yield component for estimating grain yield. Therefore, a quick, efficient, and standardized technique for assessing ear density would aid agricultural management, improve preharvest yield predictions, and could even serve as a crop-breeding tool where ear density has been defined as a trait of importance. Current manual techniques for assessing ear density are not only laborious and time-consuming but also lack any official standardized protocol, whether they count by linear meter, by area quadrat, or by extrapolation from plant ear density and plant counts postharvest. An automatic ear counting algorithm is presented in detail for estimating ear density under sunlight-only illumination in field conditions, based on zenithal (nadir) natural color (red, green, and blue [RGB]) digital images, allowing for high-throughput standardized measurements. Irrigated and rainfed field trials of durum wheat and barley, distributed geographically across Spain during the 2014/2015 and 2015/2016 crop seasons, were used to provide representative results. The three-phase protocol includes crop growth stage and field condition planning, image capture guidelines, and a computer algorithm of three steps: (i) a Laplacian frequency filter to remove low- and high-frequency artifacts, (ii) a median filter to reduce high noise, and (iii) segmentation and counting using local maxima peaks for the final count. Minor adjustments to the algorithm code must be made for the camera resolution, the focal length, and the distance between the camera and the crop canopy. The results demonstrate a high success rate (higher than 90%) and R2 values of 0.62-0.75 between the algorithm counts and the manual image-based ear counts for both durum wheat and barley.

Introduction

World cereal utilization in 2017/2018 is reported to expand by 1% from the previous year1. Based on the latest predictions for cereal production and utilization, world cereal yields need to increase at a faster rate to meet growing demand, while also adapting to the increasing effects of climate change2. There is therefore an important focus on improving cereal crop yields through improved crop breeding techniques. Two of the most important and most harvested cereals in the Mediterranean region are selected as examples for this study, namely, durum wheat (Triticum turgidum L. ssp. durum [Desf.] Husn.) and barley (Hordeum vulgare L.). Durum wheat is, by area, the most cultivated cereal in the southern and eastern margins of the Mediterranean Basin and is the 10th most important crop worldwide, with an annual production of 37 million tons3, while barley is the fourth most produced grain globally, at 144.6 million tons annually4.

Remote sensing and proximal image analysis techniques are increasingly key tools in the advancement of field high-throughput plant phenotyping (HTPP), as they provide not only more agile but also, often, more precise and consistent retrievals of target crop biophysiological traits, such as photosynthetic activity, biomass, and preharvest yield estimates, and can even improve the heritability of traits such as resource-use and uptake efficiency5,6,7,8,9. Remote sensing has traditionally focused on multispectral, hyperspectral, and thermal imaging sensors from aerial platforms for precision agriculture at the field scale or for plant phenotyping studies at the microplot scale10. Common, commercially available digital cameras, which measure only visible reflected light, have often been overlooked despite their very high spatial resolution, but they have recently become popular as new image-processing algorithms are increasingly able to take advantage of the detailed color and spatial information that they provide. Many of the newest advances in agricultural image analysis rely on the interpretation of very high-resolution (VHR) RGB images (so called for their measurement of red, green, and blue visible light reflectance), including crop monitoring (vigor, phenology, disease assessment, and identification), segmentation and quantification (emergence, ear density, and flower and fruit counts), and even full 3D reconstructions based on new structure-from-motion workflows11.

One of the most essential points for improving cereal productivity is a more efficient assessment of yield, which is determined by three major components: ear density, or the number of ears per square meter (ears/m2); the number of grains per ear; and the thousand-kernel weight. Ear density can be obtained manually in the field, but this method is laborious, time-consuming, and lacks a single standardized protocol, which together may result in a significant source of error. Automating ear counting is challenging because of the complex crop structure, close plant spacing, high degree of overlap, background elements, and the presence of awns. Recent work has advanced in this direction by using a black background structure supported by a tripod to acquire suitable crop images, with fairly good ear counting results12. This approach avoided excessive sunlight and shadow effects, but such a structure would be cumbersome and a major limitation in field conditions. In another example, an automatic ear counting algorithm developed on a fully automated phenotyping system with a rigid motorized gantry counted ears with good accuracy in a panel of five awnless bread wheat (Triticum aestivum L.) varieties grown under different nitrogen conditions13. Recent work by Fernandez-Gallego et al.14 has optimized this process for quicker and easier data capture, using VHR RGB color images followed by more advanced, yet still fully automated, image analyses.
For efficient, high-quality data collection in field conditions, that work emphasizes a simplified, standardized protocol for consistency and high data capture throughput, while its image-processing algorithm uses Laplacian and frequency domain filters to remove undesired image components before applying a segmentation for counting based on finding local maxima (as opposed to full delineation, as in previous studies, which may result in more errors with overlapping ears).

This work proposes a simple system for the automatic quantification of ear density in field conditions using images acquired with commercially available digital cameras. The system relies on natural light in field conditions and therefore requires some related environmental factors, such as time of day and cloud cover, to be considered, but it remains simple to implement. The system has been demonstrated on durum wheat and barley but should extend to bread wheat, whose ears have a similar morphology although they are frequently awnless; further experiments would be required to confirm this. In the data capture protocol presented here, zenithal images are taken by simply holding the camera by hand or by using a monopod to position the digital camera above the crop. Validation data can be acquired by counting the ears manually for subplots in the field or, during postprocessing, by counting the ears in the image itself. The image-processing algorithm is composed of three processes that first remove unwanted components of the image and then allow the individual ears in the acquired images to be segmented and counted. First, a Laplacian frequency filter is used to detect changes in the different spatial directions of the image, using the default ImageJ filter settings without window kernel size adjustments. Second, a median filter is applied to reduce high noise. Third, the Find Maxima segmentation technique determines the local peaks after the median filter step, at which stage the pixels related to ears have higher values than those of soil or leaves. Find Maxima is therefore used to segment the high values in the image, and those regions are labeled as ears, which identifies ears while also reducing errors from overlapping ears.
The Find Maxima result is then processed into a binary image segmentation by analyzing the nearest-neighbor pixel variance around each local maximum to identify the ear shapes in the filtered image. Finally, Analyze Particles, as implemented in Fiji15, is applied to the binary image to count (and, if required, measure) the white regions that stand out against the black background, giving the ear count. Both Find Maxima and Analyze Particles are standalone functions available in Fiji (https://imagej.nih.gov/ij/plugins/index.html). Though not presented specifically in the protocol here, preliminary results presented as supplementary material suggest that this technique may be adaptable to ear count surveys from unmanned aerial vehicles (UAVs), provided that the resolution remains sufficiently high14.
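The filter-then-peak sequence described above can be illustrated with a minimal, pure-Python sketch on a tiny grayscale image stored as a list of lists. It is not the CerealScanner or ImageJ code: the negated 4-neighbour Laplacian kernel, the 3 × 3 median window, the border handling, and the fixed `threshold` used in place of Find Maxima's prominence setting are all assumptions chosen for illustration. Each synthetic "ear" is a small bright blob whose brightness falls off from its center, and an isolated one-pixel spike stands in for high noise.

```python
"""Sketch: Laplacian -> median -> local maxima on a toy grayscale image."""


def laplacian(img):
    """Negated 4-neighbour Laplacian (a high-pass filter); border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = 4 * img[y][x] - (img[y - 1][x] + img[y + 1][x]
                                         + img[y][x - 1] + img[y][x + 1])
    return out


def median3(img):
    """3 x 3 median filter; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = sorted(img[y + dy][x + dx]
                            for dy in (-1, 0, 1) for dx in (-1, 0, 1))
            out[y][x] = window[4]  # middle of the 9 sorted values
    return out


def count_local_maxima(img, threshold):
    """Count interior pixels above threshold and strictly above all 8 neighbours."""
    h, w = len(img), len(img[0])
    count = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = img[y][x]
            if v <= threshold:
                continue
            neighbours = (img[y + dy][x + dx]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dy, dx) != (0, 0))
            if all(v > n for n in neighbours):
                count += 1
    return count


def count_ears(img, threshold=10):
    """Hypothetical pipeline helper: filter, denoise, then count peaks."""
    return count_local_maxima(median3(laplacian(img)), threshold)


def make_test_image(h, w, blob_centres, spikes=()):
    """Toy image: soft 3 x 3 blobs (100/60/30) and optional 1-pixel spikes."""
    img = [[0] * w for _ in range(h)]
    for cy, cx in blob_centres:
        img[cy][cx] = 100
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            img[cy + dy][cx + dx] = 60
        for dy, dx in ((-1, -1), (-1, 1), (1, -1), (1, 1)):
            img[cy + dy][cx + dx] = 30
    for sy, sx in spikes:
        img[sy][sx] = 100
    return img


if __name__ == "__main__":
    image = make_test_image(9, 25, [(4, 4), (4, 12)], spikes=[(4, 20)])
    print(count_ears(image))
```

In this toy example, the median step is what removes the spike: a lone bright pixel produces one strong Laplacian response surrounded by negative and zero responses, so the 3 × 3 median at that position drops back to background, while each soft blob keeps a single peak at its center. In real images, the blob size that survives the median step depends on how many pixels an ear covers, which is why camera distance and focal length matter.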

Protocol

1. Prefield crop growth stage and environmental conditions

- Make sure that the crop growth stage is approximately between grain filling and near crop maturity, with ears that are still green even if the leaves are senescent (which corresponds in the case of wheat to the range 60-87 of Zadoks' scale16). Some yellowing of the leaves is acceptable but not necessary.

- Prepare a sampling plan for image capture with several replicates (pictures per plot) to capture plot/area variability; the image-processing algorithm counts the number of ears in each image and converts that count to ears per square meter (ears/m2) based on the camera specifications.

- Plan the field excursions to capture the images within two hours of solar noon or, alternatively, on an overcast day in diffuse lighting conditions in order to avoid the negative effects of ear shadowing on the ear counting algorithm.

- Once in the field, check the top of the crop canopy to make sure that it is dry in order to avoid specular light reflection from moisture.

NOTE: Before applying this protocol, first consider whether the growth stage of the crop is suitable for ear counts. Capturing images outside the recommended growth stage will result in either suboptimal or meaningless results (if ears are not present or not fully emerged). Image quality, which depends on factors including resolution and sensor size, also has a considerable impact on the results, and some environmental conditions, such as time of day and cloud cover, need to be carefully considered before proceeding with image capture.
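The stage and timing constraints in step 1 can be expressed as a simple pre-capture check. The Python sketch below is an illustration only: the solar noon estimate uses a common equation-of-time approximation (accurate to within a few minutes), which is not a method from this protocol, and the function and parameter names are hypothetical. The Zadoks range (60-87), the two-hour window around solar noon, the overcast alternative, and the dry-canopy requirement are taken from the steps above.

```python
import math
from datetime import datetime, timezone


def solar_noon_utc_minutes(day_of_year, longitude_east_deg):
    """Approximate solar noon in minutes after 00:00 UTC.

    Uses a common equation-of-time approximation (in minutes); longitude is
    positive east of Greenwich and negative west of it.
    """
    b = 2 * math.pi * (day_of_year - 81) / 364
    eot = 9.87 * math.sin(2 * b) - 7.53 * math.cos(b) - 1.5 * math.sin(b)
    return 720 - 4 * longitude_east_deg - eot


def ready_to_capture(when_utc, longitude_east_deg, zadoks_stage,
                     overcast, canopy_dry, window_hours=2.0):
    """Hypothetical pre-capture check following the step 1 guidelines."""
    if not 60 <= zadoks_stage <= 87:   # ears present and still green
        return False
    if not canopy_dry:                 # avoid specular reflection from moisture
        return False
    if overcast:                       # diffuse light: time window not required
        return True
    noon = solar_noon_utc_minutes(when_utc.timetuple().tm_yday,
                                  longitude_east_deg)
    now = when_utc.hour * 60 + when_utc.minute
    return abs(now - noon) <= window_hours * 60


# Arazuri site, 1°43'37.9"W in decimal degrees (negative = west of Greenwich)
ARAZURI_LON = -(1 + 43 / 60 + 37.9 / 3600)

if __name__ == "__main__":
    t = datetime(2016, 6, 1, 12, 0, tzinfo=timezone.utc)
    print(round(solar_noon_utc_minutes(t.timetuple().tm_yday, ARAZURI_LON)))
    print(ready_to_capture(t, ARAZURI_LON, 75, overcast=False, canopy_dry=True))
```

For the Arazuri site in early June, this approximation puts solar noon at about 12:05 UTC, that is, around 14:05 local summer time (CEST, UTC+2), so the clear-sky window there runs roughly from noon to 16:00 on the local clock rather than being centered on 12:00.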

2. Image capture in field conditions with natural light

- Prepare a "phenopole" as shown in Figure 1, or a similar acquisition system (even handheld), to capture images quickly yet in a standardized and consistent manner at each plot or target location.

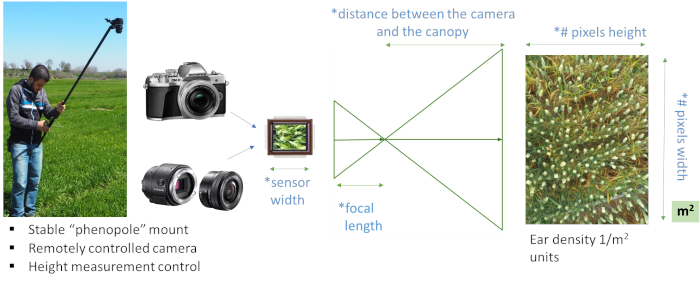

Figure 1: Ear counting system. The ear counting system using the "phenopole", shown in the field on the left, with a remotely controlled, large-sensor, high-resolution natural color (RGB) digital camera. The camera tilt and height are indicated, along with the parameters needed to adjust the image-processing algorithm. The sensor and image resolution are detected automatically from the image properties, while the user should input the lens focal length and the distance from the canopy. These are needed to adjust the algorithm for the estimated number of pixels per ear and to convert the image-based total ear count to ear density (ears/m2). For that reason, it is recommended to use the same camera and lens focal length for all field images.

- Mount the camera on a suitable monopod or "selfie" pole so that it can be kept level, using either level bubbles or an in-camera stabilization system, to obtain zenithal images.

- Connect the camera to a mobile phone, tablet, or other device for remote-controlled image capture and image visualization, which gives the best results with correctly focused images. Set the camera to autofocus to reduce focusing errors in case the user is not familiar enough with the camera or with photography techniques to set the focus manually; Figure 2 shows examples of zenithal images with correct focus and exposure.

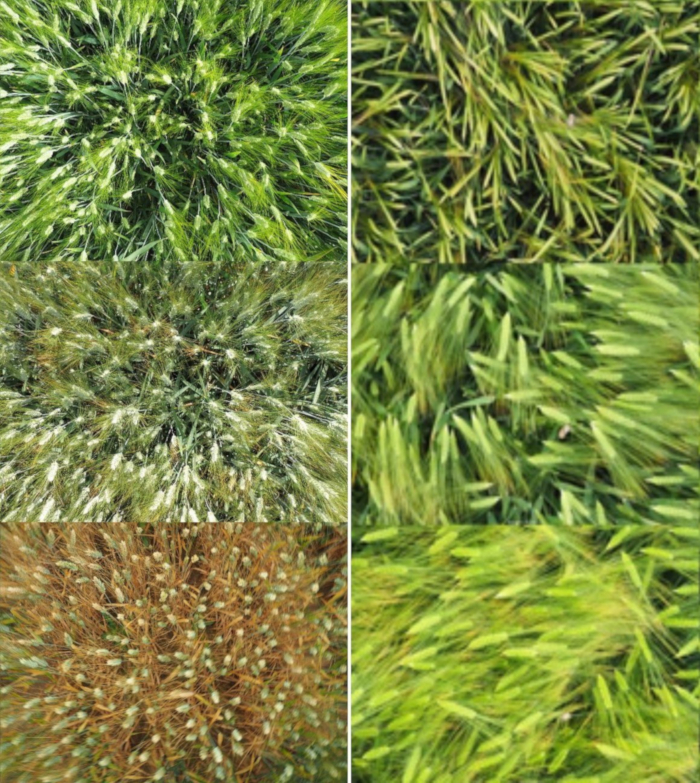

Figure 2: Zenithal crop images. Zenithal images of durum wheat and barley ears from the ear counting data sets, at an acceptable stage of growth and senescence (approximately 61 to 87 on Zadoks' scale). (Left) Durum wheat zenithal image data set example. (Right) Barley zenithal image data set example.

- Take note of the image number prior to image capture in order to match the images correctly with the field plots. Record one image of the general field area at the start and one image of the ground/field between blocks for postprocessing controls.

- Position the camera at approximately 80 cm above the top of the crop canopy, using a ruler or measurement string to periodically check the camera height above the canopy. Ensure that the camera is level and capture the image. This technique may require 1-2 researcher(s).

- If field ear count validation is desired in addition to manual image count validation, attach an extension arm holding a small circle (e.g., a metal ring) to the frame and position the circle in the middle of the image, so that manual field counts can be made for a precise image subset; this technique may require 2-3 people to implement.

NOTE: Three major considerations when selecting a camera and setting up image capture are therefore: (1) the camera specifications, in this case the sensor's physical size; (2) the focal length of the lens; and (3) the distance between the canopy and the camera. Shorter distances or longer focal lengths (greater zoom) capture a smaller area, while images captured from a greater distance capture a larger crop area. See Figure 1 for details on the relevant camera specifications.
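The relationship in this note follows from a simple pinhole-camera approximation, reasonable when the camera-to-canopy distance is much larger than the focal length: the imaged canopy width is the sensor width multiplied by distance/focal length, and likewise for the height. A minimal Python sketch of that conversion, and of the ratio that turns an image count into ears/m2, is shown below. The sensor sizes come from the Materials table; the 16 mm focal length is a hypothetical value not stated in this protocol, and CerealScanner's own conversion may differ in detail.

```python
from dataclasses import dataclass


@dataclass
class CaptureSetup:
    """Camera geometry for a zenithal image (pinhole approximation)."""
    sensor_w_mm: float   # physical sensor width
    sensor_h_mm: float   # physical sensor height
    focal_mm: float      # lens focal length
    distance_mm: float   # camera to top of the crop canopy

    def footprint_m(self):
        """Width and height (m) of the canopy area covered by one image."""
        scale = self.distance_mm / self.focal_mm
        return (self.sensor_w_mm * scale / 1000.0,
                self.sensor_h_mm * scale / 1000.0)

    def footprint_area_m2(self):
        """Canopy area (m2) covered by one image."""
        w, h = self.footprint_m()
        return w * h


def ear_density(ear_count, setup):
    """Convert an image-based ear count to ears per square meter."""
    return ear_count / setup.footprint_area_m2()


if __name__ == "__main__":
    # Sony ILCE-QX1 sensor (Materials table), 80 cm height, hypothetical 16 mm lens
    qx1 = CaptureSetup(23.2, 15.4, focal_mm=16.0, distance_mm=800.0)
    print(qx1.footprint_m())             # roughly 1.16 m x 0.77 m
    print(round(ear_density(450, qx1)))  # 450 is an illustrative count
```

Doubling the focal length (or halving the distance) quarters the imaged area, so a small, unnoticed change in camera height or lens setting translates directly into a biased ears/m2 value.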

3. Algorithm implementation and adjustments

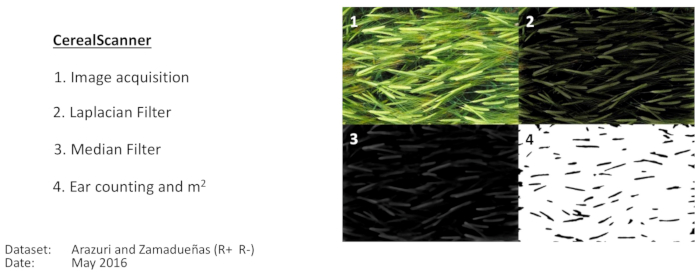

NOTE: Here we present algorithm implementation and adjustments for different camera specifications (sensor size, megapixels, focal length, distance to crop) and crop (durum wheat or barley) for automatic ear counting. An overview of the algorithm is presented graphically in Figure 3.

Figure 3: Image-processing pipeline for two-row barley ear counting. Image-processing pipeline for two-row barley ear counting, as implemented using specific computer code or using the CerealScanner software, both of which operate within Fiji (ImageJ). Panel 1 shows the original image. Panel 2 shows the result of applying the Laplacian filter. Panel 3 shows the application of the median filter, and Panel 4 shows the results of the final Find Maxima and segmentation that produce the final ear count. The image count is then converted to ear density, as shown in Figure 1. These images are an example from the Arazuri field site (Northeastern Spain, 42°48'33.9"N 1°43'37.9"W) taken in diffuse light conditions.

- Download and install Fiji, Java 8, and either the processing code or the University of Barcelona’s proprietary CerealScanner plugin (https://fiji.sc/, https://www.java.com/en/download/, and https://integrativecropecophysiology.com/software-development/cerealscanner/ [information] or https://gitlab/sckefauver/CerealScanner [code repository]); contact the corresponding authors for access permissions. Install the plugin in Fiji by copying it into the plugins folder.

- Open the plugin from the top menu through Plugins > CerealScanner > Open Cereal Scanner.

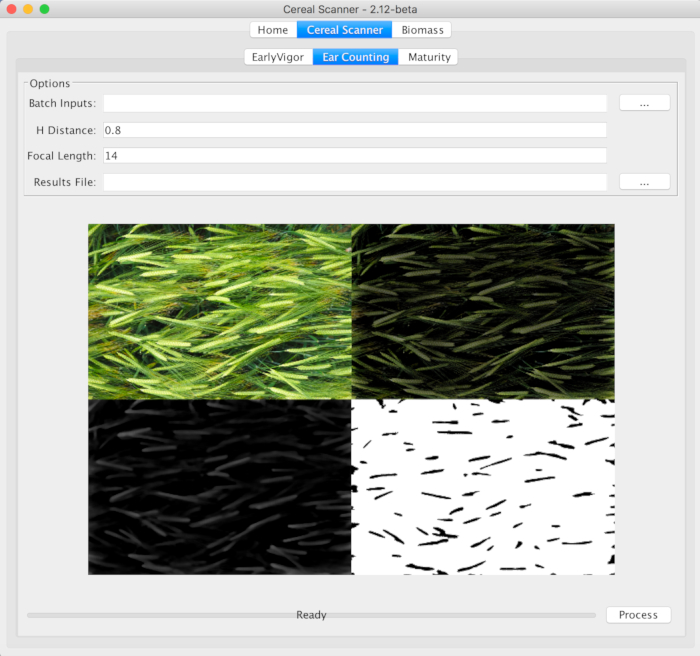

NOTE: Apart from the work presented here, the CerealScanner plugin includes several different RGB-based vegetation indices related to crop vigor, stress, or chlorophyll17,18. The CerealScanner tab itself includes algorithms for Early Vigor (Fernandez-Gallego, In review), Ear Counting14, and Crop Senescence19, as shown in Figure 4.

- Enter the camera specifications and image capture details if they differ from the default values (see Figure 1 and Figure 4 for details).

- Adjust the algorithm parameter for the camera focal length.

- Adjust the algorithm parameter for the distance from the crop canopy.

Figure 4: The CerealScanner 2.12 Beta central tab on both levels, marking the Ear Counting function within the CerealScanner algorithm collection. The user must select the … button to the right of Batch Inputs to select the folder where the image files are stored, change the default values of H Distance (distance from the camera to the top of the crop canopy) and Focal Length if they differ from the actual setup, and then select the … button to the right of Results File to choose the name and location of the final results file. The other tabs of CerealScanner provide algorithms for trait-based phenotyping of Early Vigor and onset of Maturity as part of the CerealScanner code suite. Under the Biomass tab, there are several algorithms for estimating more general crop vigor and biomass, also from RGB digital images. The example shown is for two-row barley, as detailed in Figure 3.

Figure 5: Algorithm adjustments. Adjustments required in the image-processing pipeline in order to successfully count both wheat and barley ears using the same algorithm are managed automatically as part of the camera-specific adjustments of H Distance (distance between the camera and the crop canopy) and Focal Length and serve to ensure that the number of pixels per ear remains more or less constant between different applications.
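Figure 5 notes that H Distance and Focal Length are used to keep the number of pixels per ear roughly constant. The Python sketch below, under the same pinhole assumption, shows why such an adjustment is needed: the ground size of one pixel (ground sampling distance, GSD) grows linearly with distance and shrinks with focal length, so an ear's linear size in pixels scales with focal length/distance and its pixel area with the square of that ratio. The 5456-pixel image width, the 16 mm lens, the ear dimensions, and the 20-pixel reference size are assumptions for illustration, and `scaled_size_px` is a hypothetical helper, not a CerealScanner function.

```python
def gsd_mm_per_px(sensor_w_mm, image_w_px, focal_mm, distance_mm):
    """Ground sampling distance: canopy millimetres covered by one pixel."""
    return sensor_w_mm * distance_mm / (focal_mm * image_w_px)


def ear_area_px(ear_w_mm, ear_l_mm, gsd):
    """Approximate number of pixels covered by an ear of the given size."""
    return (ear_w_mm / gsd) * (ear_l_mm / gsd)


def scaled_size_px(ref_size_px, ref_focal_mm, ref_distance_mm,
                   focal_mm, distance_mm):
    """Rescale a size tuned at a reference setup (hypothetical helper).

    Linear sizes in pixels scale with focal_length / distance.
    """
    return ref_size_px * (focal_mm / distance_mm) / (ref_focal_mm / ref_distance_mm)


if __name__ == "__main__":
    # 23.2 mm sensor (Materials table); 5456 px width and 16 mm lens are assumed
    near = gsd_mm_per_px(23.2, 5456, 16.0, 800.0)
    far = gsd_mm_per_px(23.2, 5456, 16.0, 1600.0)
    print(f"GSD at 0.8 m: {near:.3f} mm/px, at 1.6 m: {far:.3f} mm/px")
    print(scaled_size_px(20, 16.0, 800.0, 16.0, 1600.0))
```

Doubling the camera height halves an ear's width in pixels and quarters its pixel area, so any size-dependent setting tuned at one height would need rescaling by this ratio to behave the same way at another.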

- Select the central CerealScanner tab and then the Ear Counting tab to calculate the number of ears in each image of a field data set.

- Under Options, enter the location of the photos to analyze in Batch Inputs.

- In Results File, select where to save the results file. The results file includes two columns: the image file name and the ear counting result.

- Finally, click Process. The results file, giving ear density in ears per square meter (ears/m2), is produced automatically within a few minutes, depending on the computer speed; the image area is converted to an actual canopy area in square meters by a simple ratio based on the camera settings and the distance between the canopy and the camera, following Figure 1.

- Conduct a postprocessing validation after data collection by manually counting the wheat or barley ears in each image and converting this count to ears per square meter (ears/m2), as shown in Figure 1, to compare the results with the algorithm values.

- Use the Point Tool built into Fiji, which makes this process easy, together with the Fiji Analyze Particles function to produce the counts automatically, as shown graphically in Figure 6.

- Optionally, conduct a validation using a small circle during field data acquisition, as described in step 2.6; the manual counts in the field and the manual image counts in the laboratory can then be used for algorithm validation, as shown in Figure 7.
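For the validation steps above, the circle subset and the comparison between manual and algorithm counts reduce to two small calculations: the ears counted inside the ring divided by the ring area, and the coefficient of determination (R2) between paired densities, computed here as the squared Pearson correlation (equal to R2 for a simple linear regression with an intercept). The Python sketch below assumes a hypothetical 0.30 m ring diameter, and the paired values in the usage example are toy numbers, not data from this study.

```python
import math


def circle_density(ears_in_circle, ring_diameter_m):
    """Ears per square meter inside a circular validation ring."""
    area_m2 = math.pi * (ring_diameter_m / 2) ** 2
    return ears_in_circle / area_m2


def r_squared(manual, algorithm):
    """Squared Pearson correlation between paired manual and algorithm values."""
    n = len(manual)
    if n != len(algorithm) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mean_m = sum(manual) / n
    mean_a = sum(algorithm) / n
    sxy = sum((m - mean_m) * (a - mean_a) for m, a in zip(manual, algorithm))
    sxx = sum((m - mean_m) ** 2 for m in manual)
    syy = sum((a - mean_a) ** 2 for a in algorithm)
    if sxx == 0 or syy == 0:
        raise ValueError("R2 is undefined when either sequence is constant")
    return sxy * sxy / (sxx * syy)


if __name__ == "__main__":
    print(round(circle_density(20, 0.30)))  # 20 ears in a hypothetical 30 cm ring
    manual = [410, 520, 480, 600, 350]       # toy ears/m2 values
    algorithm = [430, 500, 470, 630, 370]
    print(round(r_squared(manual, algorithm), 3))
```

Because the ring covers only a small area, a one-ear difference inside it changes the density by roughly 14 ears/m2 for a 30 cm ring, so circle-based checks are best averaged over several plots.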

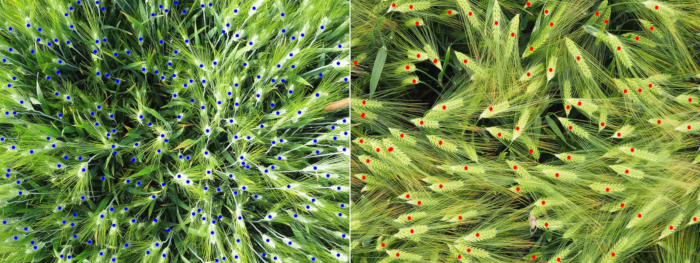

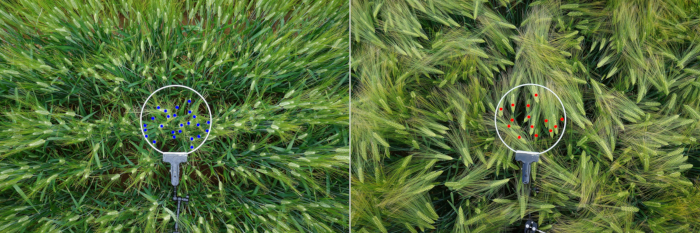

Figure 6: Algorithm validation using manual in-image ear counts. Manual in-image ear counts for (left) durum wheat and (right) barley. The small dots were created using the Fiji Point Tool and then counted using the Analyze Particles Function with a 0.90-1.00 Circularity constraint after applying a Color Threshold from the Hue Saturation Intensity color space for the color specified by the Point Tool. This method ensures more accurate image-based manual ear counts.

Figure 7: Algorithm validation using manual counts in the field and manual in-image ear counts of wheat and barley, using a circle. (Left) Wheat image count validation example using a circle. (Right) Barley image count validation example using a circle. The ears within the white circle were counted using the same technique described in Figure 6: the Point Tool, a Color Threshold with color selection using Hue, and then the Analyze Particles Function with Circularity constraints.
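The in-image manual count in Figures 6 and 7 (a color threshold on the Point Tool color, then Analyze Particles with a circularity constraint) can be approximated outside Fiji, as in the following Python sketch. It uses only the standard library: `colorsys` for the hue/saturation conversion, a breadth-first flood fill with 8-connectivity to label particles, and ImageJ's circularity definition, 4π × area / perimeter². The hue tolerance, saturation cut-off, and minimum particle size are assumptions, `count_marked_ears` is a hypothetical helper, and this is not the Fiji implementation; in particular, the circularity helper expects an area and perimeter measured elsewhere (for example, in Fiji), because a perimeter estimated by counting pixel edges would bias small dots low.

```python
import colorsys
import math
from collections import deque


def circularity(area, perimeter):
    """ImageJ-style circularity: 4*pi*area/perimeter**2 (1.0 = perfect circle)."""
    return 4.0 * math.pi * area / perimeter ** 2


def hue_mask(rgb, target_hue, tol=0.05, min_sat=0.5):
    """True where a pixel's hue (0-1 scale) is within tol of target_hue."""
    mask = []
    for row in rgb:
        mask_row = []
        for r, g, b in row:
            h, s, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            d = abs(h - target_hue)
            d = min(d, 1.0 - d)          # hue is circular
            mask_row.append(d <= tol and s >= min_sat)
        mask.append(mask_row)
    return mask


def particle_areas(mask):
    """Pixel areas of 8-connected foreground regions (breadth-first fill)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    areas = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, area = deque([(y, x)]), 0
            while queue:
                cy, cx = queue.popleft()
                area += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            areas.append(area)
    return areas


def count_marked_ears(rgb, target_hue, min_area=2):
    """Count Point Tool marks of one color (hypothetical helper)."""
    return sum(1 for a in particle_areas(hue_mask(rgb, target_hue)) if a >= min_area)


if __name__ == "__main__":
    GREEN, RED = (60, 120, 40), (255, 0, 0)
    img = [[GREEN] * 8 for _ in range(6)]
    for y, x in [(1, 1), (1, 2), (2, 1), (2, 2), (3, 5), (3, 6), (4, 5), (4, 6)]:
        img[y][x] = RED                       # two 2 x 2 red marks
    print(count_marked_ears(img, target_hue=0.0))
    print(round(circularity(math.pi, 2 * math.pi), 3))  # unit circle
```

By this formula, a perfect square scores π/4 (about 0.785), so a 0.90-1.00 circularity window keeps round Point Tool marks while rejecting square or elongated blobs that a color threshold might also pick up.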

Results

Figure 8 shows the coefficient of determination between the ear density (number of ears per square meter) obtained by manual counting and by the ear counting algorithm for wheat and barley at three different crop growth stages. The first is durum wheat at Zadoks' stages 61 to 65 (R2 = 0.62). The second is two-row barley at Zadoks' stages 71 to 77 (R2 = 0.75), and the last one ...

Discussion

Increased agility, consistency, and precision are key to developing useful new phenotyping tools to assist the crop-breeding community in their efforts to increase grain yield despite negative pressures related to global climate change. Efficient and accurate assessments of cereal ear density, as a major agronomic component of yield of important staple crops, will help provide the tools needed for feeding future generations. Focusing on the improvement and support of crop-breeding efforts in field conditions helps keep t...

Disclosures

The authors have nothing to disclose.

Acknowledgements

The authors of this research would like to thank the field management staff at the experimental stations of Colmenar de Oreja (Aranjuez) of the Instituto Nacional de Investigación y Tecnología Agraria y Alimentaria (INIA) and Zamadueñas (Valladolid) of the Instituto de Tecnología Agraria de Castilla y León (ITACyL) for their field support of the research study crops used. This study was supported by the research project AGL2016-76527-R from MINECO, Spain and part of a collaboration project with Syngenta, Spain. The BPIN 2013000100103 fellowship from the "Formación de Talento Humano de Alto Nivel, Gobernación del Tolima - Universidad del Tolima, Colombia" was the sole funding support for the first author Jose Armando Fernandez-Gallego. The primary funding source of the corresponding author, Shawn C. Kefauver, came from the ICREA Academia program through a grant awarded to Prof. Jose Luis Araus.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| ILCE-QX1 Camera | Sony | WW024382 | Compact large-sensor digital camera with 23.2 x 15.4 mm sensor size |
| E-M10 Camera | Olympus | E-M10 | Compact large-sensor digital camera with 17.3 x 13.0 mm sensor size |
| Multipod Monopod | Sony | VCT MP1 | "Phenopole" in the JoVE article |
| Computer | Any PC/Mac/Linux | -- | Data and image analysis |
| ImageJ/FIJI (FIJI is just ImageJ) | NIH | http://fiji.sc | Plug-in and algorithms for data and image analysis |
| Circle/Metal Ring | Generic | Generic | Metal ring for in-field validation |
| Crab Pliers Clip | Newer | 90087340 | Circle support and extension arm |

References

- Food and Agriculture Organization (FAO). Food outlook: Biannual report on global food markets. (2017).

- Araus, J. L., Kefauver, S. C. Breeding to adapt agriculture to climate change: affordable phenotyping solutions. Current Opinion in Plant Biology. (2018).

- Ranieri, R. Geography of the Durum Wheat Crop. Pastaria International. 6 (2015).

- Food and Agriculture Organization (FAO). The State of Food Insecurity in the World. (2014).

- Araus, J. L., Cairns, J. E. Field high-throughput phenotyping: the new crop breeding frontier. Trends in Plant Science. 19 (1), 52-61 (2014).

- Fiorani, F., Schurr, U. Future Scenarios for Plant Phenotyping. Annual Review of Plant Biology. 64 (1), 267-291 (2013).

- Cabrera-Bosquet, L., Crossa, J., von Zitzewitz, J., Serret, M. D., Luis Araus, J. High-throughput Phenotyping and Genomic Selection: The Frontiers of Crop Breeding Converge. Journal of Integrative Plant Biology. 54 (5), 312-320 (2012).

- Araus, J. L., Ferrio, J. P., Voltas, J., Aguilera, M., Buxó, R. Agronomic conditions and crop evolution in ancient Near East agriculture. Nature Communications. 5 (1), 3953 (2014).

- Furbank, R. T., Tester, M. Phenomics – technologies to relieve the phenotyping bottleneck. Trends in Plant Science. 16 (12), 635-644 (2011).

- Araus, J. L., Kefauver, S. C., Zaman-Allah, M., Olsen, M. S., Cairns, J. E. Translating High-Throughput Phenotyping into Genetic Gain. Trends in Plant Science. 23 (5), 451-466 (2018).

- Duan, T., et al. Dynamic quantification of canopy structure to characterize early plant vigour in wheat genotypes. Journal of Experimental Botany. 67 (15), 4523-4534 (2016).

- Cointault, F., Guerin, D., Guillemin, J., Chopinet, B. In-field Triticum aestivum ear counting using colour-texture image analysis. New Zealand Journal of Crop and Horticultural Science. 36 (2), 117-130 (2008).

- Dornbusch, T., et al. Digital Field Phenotyping by LemnaTec. (2015).

- Fernandez-Gallego, J. A., Kefauver, S. C., Gutiérrez, N. A., Nieto-Taladriz, M. T., Araus, J. L. Wheat ear counting in-field conditions: high throughput and low-cost approach using RGB images. Plant Methods. 14 (1), 22 (2018).

- Schneider, C. A., Rasband, W. S., Eliceiri, K. W. NIH Image to ImageJ: 25 years of image analysis. Nature Methods. 9 (7), 671-675 (2012).

- Zadoks, J., Chang, T., Konzak, C. A decimal growth code for the growth stages of cereals. Weed Research. 14 (6), 415-421 (1974).

- Casadesús, J., et al. Using vegetation indices derived from conventional digital cameras as selection criteria for wheat breeding in water-limited environments. Annals of Applied Biology. 150 (2), 227-236 (2007).

- Hunt, E. R., et al. A visible band index for remote sensing leaf chlorophyll content at the canopy scale. International Journal of Applied Earth Observation and Geoinformation. 21 (1), 103-112 (2013).

- Zaman-Allah, M., et al. Unmanned aerial platform-based multi-spectral imaging for field phenotyping of maize. Plant Methods. 11 (1), 35 (2015).

- Slafer, G. A., Savin, R., Sadras, V. O. Coarse and fine regulation of wheat yield components in response to genotype and environment. Field Crops Research. 157, 71-83 (2014).

- Liu, T., et al. In-field wheatear counting based on image processing technology. Nongye Jixie Xuebao/Transactions of the Chinese Society for Agricultural Machinery. 45 (2), 282-290 (2014).

- Cointault, F., et al. Color and Frequential Proxy-Detection Image Processing for Crop Characterization in a Context of Precision Agriculture. Agricultural Science. 49-70 (2012).

- Abbad, H., El Jaafari, S., Bort, J., Araus, J. L. Comparative relationship of the flag leaf and the ear photosynthesis with the biomass and grain yield of durum wheat under a range of water conditions and different genotypes. Agronomie. 24, 19-28 (2004).

- Ko, S. J., Lee, Y. H. Center weighted median filters and their applications to image enhancement. IEEE Transactions on Circuits and Systems. 38 (9), 984-993 (1991).

- Smołka, B. Nonlinear techniques of noise reduction in digital color images. Wydawnictwo Politechniki Śląskiej. (2004).


Copyright © 2025 MyJoVE Corporation. All rights reserved
