Dynamic Inter-subject Functional Connectivity Reveals Moment-to-Moment Brain Network Configurations Driven by Continuous or Communication Paradigms

In This Article

Summary

The goal of the described approach is to determine at what moments of the paradigm (temporal perspective), and between which regions (spatial perspective), significant reconfigurations in functional connectivity occur on functional magnetic resonance imaging recordings during which a time-locked stimulus is played.

Abstract

Task-based functional magnetic resonance imaging bears great potential to understand how our brain reacts to various types of stimulation; however, this is often achieved without considering the dynamic facet of functional processing, and analytical outputs typically account for merged influences of task-driven effects and underlying spontaneous fluctuations of brain activity. Here, we introduce a novel methodological pipeline that can go beyond these limitations: the use of a sliding-window analytical scheme permits tracking of functional changes over time, and through cross-subject correlational measurements, the approach can isolate purely stimulus-related effects. Thanks to a rigorous thresholding process, significant changes in inter-subject functional correlation can be extracted and analyzed.

On a set of healthy subjects who underwent naturalistic audio-visual stimulation, we demonstrate the usefulness of the approach by tying the unraveled functional reconfigurations to particular cues of the movie. We show how, through our method, one can capture either a temporal profile of brain activity (the evolution of a given connection), or focus on a spatial snapshot at a key time point. We provide a publicly available version of the whole pipeline, and describe its use and the influence of its key parameters step by step.

Introduction

Functional magnetic resonance imaging (fMRI) has become the tool of choice to non-invasively monitor the changes in brain activity resulting from external stimulation. More specifically, vivid interest has emerged about the understanding of statistical interdependence between regional activation time courses, known as functional connectivity (FC)1 and typically computed as Pearson's correlation coefficient. Functional interplays across the brain have been extensively shown to reconfigure as a function of the underlying task2,3,4.

Two analytical directions have separately been followed to go beyond this introductory characterization: on the one hand, the response induced in a given brain region by a time-locked stimulus was observed to strongly correlate across distinct subjects5. Quantifying this inter-subject correlation (ISC) showed potential to refine our understanding of cognition6,7,8,9 and brain disorders10,11. Further, this cross-subject correlational approach was also extended to the assessment of cross-regional synchronicity12, in what became known as the inter-subject functional correlation (ISFC) approach13.

On the other hand, the dynamic flavor of FC reconfigurations started to receive increased attention (see Hutchison et al.14, Preti, Bolton and Van De Ville15, Gonzales-Castillo and Bandettini16 for recent reviews on the resting-state and task-based sides of this question). In particular, whole-brain FC changes over time can be tracked through consecutive correlation measurements over a gradually shifted temporal sub-window17,18, revealing additional insight in the context of behavioral tasks19,20.

Here, we present a methodological framework that combines those two avenues. Indeed, we compute ISFC in sliding-window fashion to track the evolution of cross-regional synchronicity between the subjects exposed to a time-locked, naturalistic paradigm. Through the cross-subject aspect of the method, analyses are focused on stimulus-driven effects, while spontaneous fMRI changes (which are uncorrelated across subjects) are strongly damped. This is important because resting-state and task-evoked activity patterns are increasingly understood to be characterized by distinct properties21,22.

As for the dynamic component of the method, it enables a more complete and accurate characterization of task stimuli, particularly when probing a naturalistic paradigm in which a diverse set of cues (auditory, visual, social, etc.) are combined over time. Further, as the sound statistical evaluation of significant dynamic fluctuations has been hotly debated23,24, our approach takes particular care of this aspect of the analyses by isolating significant ISFC changes through comparison to appropriate null data.

We illustrate the method on a set of healthy subjects exposed to an audio-visual movie stimulus, for whom we show that the temporal and spatial ISFC change profiles arising from localized movie sub-intervals can be accurately extracted. In doing so, we also describe the influence of the main analytical parameters to be selected by the user. The presented findings are based on part of formerly published data25,26.

Protocol

The following protocol has been approved by the local ethics committee (Biomedical Inserm 365 protocol C08-39).

1. Pre-imaging

- Enroll a study population of subjects, obtaining written, informed consent for all of them. Seek approval from the local ethics committee.

- Select a paradigm to investigate that can be applied to all subjects in time-locked manner.

NOTE: Here, we used an audio-visual scientific documentary for youngsters (https://miplab.epfl.ch/index.php/miplife/research/supplement-asd-study).

2. Imaging

- For each subject to consider in the analyses, perform at least one functional imaging session in which the scanned volunteer is subjected to the time-locked paradigm of interest.

- Use a 3 Tesla MRI scanner to acquire transverse slices through an echoplanar imaging sequence.

- Employ the following imaging parameters: voxel size = 3 mm x 3 mm x 3 mm, repetition time (TR) = 2 s, echo time = 50 ms, field of view = 192, 40 slices.

NOTE: Faster TR values are encouraged within the scope of feasibility. The protocol can also be applied with a more restricted field of view (e.g., for analyses restricted to a specific brain sub-structure), which would enable either a better temporal resolution (lower TR), or a spatially more precise analysis. - Leave a few seconds of recording (≥ 2 TR) before and after the presentation of the stimulus.

- Perform at least one separate functional imaging session in which the scanned volunteer lies at rest in the scanner, eyes closed and instructed not to fall asleep.

NOTE: Separate stimulus-related and resting-state acquisitions prevent otherwise possible interplays between the conditions (e.g., having watched the movie beforehand may leave a lasting trace to a subsequently acquired resting-state recording)27. If it is not desired to go through the aforementioned additional resting-state acquisition, an alternative (albeit more prone to the detection of false positives; see Discussion) computational option in the pipeline replaces this data by surrogate time courses computed from the paradigm-related signals (see step 5.1.2). - Perform structural imaging.

- Use a 3 Tesla MRI scanner and a T1-weighted magnetization-prepared rapid acquisition gradient echo sequence.

- Employ the following imaging parameters: voxel size = 1 mm x 1 mm x 1 mm, field of view = 256, 176 slices.

3. Data and Software Preparation

- For each session to analyze, ensure the existence of the following data files:

- A set of functional MRI volumes, present as separate 3D NIFTI or HDR/IMG files, with a consistent numbering scheme (e.g., "fMRI_0001", "fMRI_0002", etc.).

- A T1 structural MRI image, in NIFTI or HDR/IMG format.

- An atlas of interest in Montreal Neurological Institute (MNI) space, in NIFTI format.

NOTE: An example of required input files is provided for a representative subject ("S17"), along with the full pipeline code, at https://c4science.ch/source/Intersubj_pipeline.git

- Download the latest version of the publically available Freesurfer software28 (https://surfer.nmr.mgh.harvard.edu/fswiki/DownloadAndInstall).

- Download the latest version of the publically available Statistical Parametric Mapping (SPM) MATLAB toolbox from https://www.fil.ion.ucl.ac.uk/spm/software/spm12/.

- Open MATLAB (version 2017a or more recent) and locate the newly downloaded "freesurfer" and "spm12" folders. For each, right click on it and select the Add to Path > Selected Folders and Subfolders option.

4. Data Preprocessing

- In the MATLAB terminal, type spm to launch the SPM12 main menu, and click on the fMRI button to access the preprocessing options devoted to fMRI data. Perform the following steps separately for each fMRI session to preprocess.

- Click on Realign (Est & Res), and in the newly open Batch Editor window, double click on Data > Session. In the newly open Session window, select all functional images to process. Then, click on the Done button, and afterwards, on the Run Batch icon from the Batch Editor window (green triangle). Wait until the realignment step finishes, as indicated in the MATLAB terminal window.

- Click on Coregister (Est & Res), and in the newly open Batch Editor window, double click on Reference image. In the newly open Reference Image window, select the average functional volume created in the following step, prefixed with "mean", and click on the Done button. Then, double click on Source image, and in the newly open Source Image window, select the T1 image. Click on the Done button, and afterwards, on the Run Batch icon from the Batch Editor window (green triangle). Wait until the coregistration step finishes, as indicated in the MATLAB terminal window.

NOTE: The T1 image is overwritten at this step, so that the updated one lies in the same space as the functional volumes. - Click on Segment, and in the newly open Batch Editor window, double click on Volumes. In the newly open Volumes window, select the T1 image, and click on the Done button. Then, in the Batch Editor window, double click on Deformation Fields and select the Inverse option. Click on the Run Batch icon (green triangle), and wait until the segmentation step finishes, as indicated in the MATLAB terminal window.

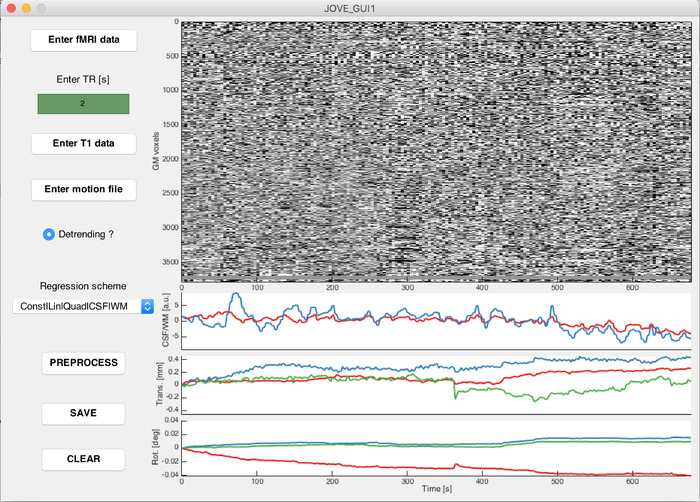

- Type JOVE_GUI1 in the MATLAB terminal to open the first preprocessing graphical user interface window. Perform the following steps for each fMRI session to analyze.

- Click on Enter fMRI data, and select all realigned functional volumes created in step 4.1.1 (prefixed with "r"). For IMG/HDR files, select both the IMG and HDR volumes.

- Enter the TR of the data (in seconds) in the dedicated editable text window.

- Click on Enter T1 data and select the three probabilistic tissue type volumes created in step 4.1.3 (prefixed with "c1", "c2" and "c3").

- Click on Enter motion file, and select the text file containing motion parameters from the session of interest, created in step 4.1.1 and prefixed with "rp".

- Select the desired type of preprocessing, that is, whether the data should be detrended or not (respectively setting the dedicated radio button on or off), and what covariates should be regressed out (by selecting the appropriate option from the dedicated list).

NOTE: The regression step is inspired from a function originating from the DPARSF toolbox29. The white matter and cerebrospinal fluid signals from individual subjects are averaged over the voxels for which the respective template DPARSF probabilistic tissue map showed a signal larger than 0.99. In our analyses, we detrended the data, and regressed out white matter/cerebrospinal fluid time courses as well as constant, linear and quadratic trends. - To preprocess the data, click on Preprocess, and wait for the display to appear in the window. The data can be re-preprocessed differently by modifying the options, and clicking again on the Preprocess button.

NOTE: The gray matter plot is inspired from the representation suggested by Power et al.30. - To save the output for following steps, click on the Save button. To clear the content of the window, click on the Clear button.

Supplementary Figure 1: Example screenshot from the first preprocessing graphical user interface window. Voxel-wise time courses of gray matter voxels following the selected preprocessing options (top right plot), and covariates that may be used in the preprocessing (from top to bottom: cerebrospinal fluid/white matter average time courses, translational motion parameters, and rotational motion parameters. Please click here to view a larger version of this figure.

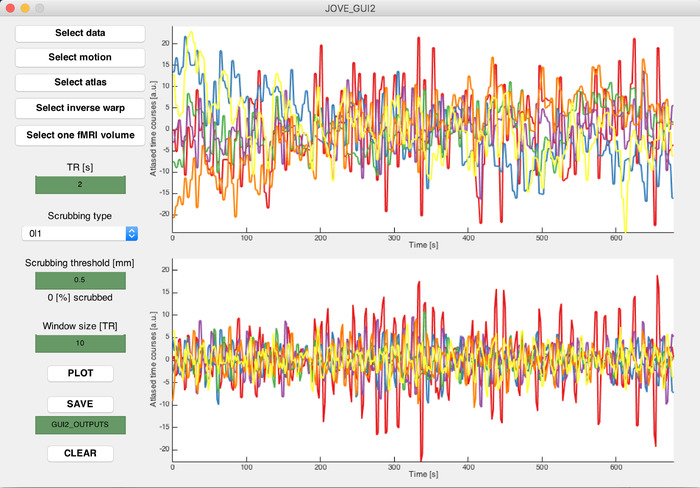

- Type JOVE_GUI2 in the MATLAB terminal to open the second preprocessing graphical user interface window. Perform the following steps for each fMRI session to analyze.

- Click on Select data, and select the data file saved in step 4.2.7 (named "ISFC_VX.mat").

- Click on Select motion, and select the text file containing motion parameters from the session of interest, created in step 4.1.1 and prefixed with "rp".

- Click on Select atlas, and select the NIFTI file representing the atlas to use for parcellation.

- Click on Select inverse warp, and select the NIFTI file representing the deformation field from MNI to native space, created in step 4.1.3 and prefixed with "iy".

- Click on Select fMRI volume, and select any of the fMRI data volumes.

NOTE: This step enables to access the header information of the functional data, hence why the actual chosen volume is not important. - Enter the TR of the data (in seconds) in the dedicated editable text window.

- Enter scrubbing-related information: the type of scrubbing to perform (i.e., how many frames to scrub out before and after the tagged ones) in the "Scrubbing type" list, and the framewise displacement threshold value (Power's criterion31) above which an fMRI volume should be scrubbed in the "Scrubbing threshold" editable text window (in mm).

NOTE: Cubic spline interpolation is performed on the scrubbed data points to replace them with estimated values from neighboring samples. In our analyses, we scrubbed one frame after the tagged volumes, and used a 0.5 mm threshold for scrubbing. - Enter the size of the sliding-window W to use for ISFC computations (see step 5), in TRs.

NOTE: This piece of information will enable filtering of the time courses through a function originating from the DPARSF toolbox29, at f = 1/W Hz32. In our analyses, we used W = 10 TR as a trade-off value to capture dynamic fluctuations while conserving enough samples for robust estimations. - Click on the Plot button to display indicative atlased time courses before (top plot) and after (bottom plot) the scrubbing and filtering steps. Verify, by visual inspection, that following the selected preprocessing steps, those output signals do not incorporate salient artifactual components.

- To save the outputs for following steps, enter a save name in the dedicated editable text window, and click on the Save button. To clear the content of the window, click on the Clear button.

Supplementary Figure 2: Example screenshot from the second preprocessing graphical user interface window. Regional time courses following atlasing, before (top plot) and after (bottom plot) scrubbing and filtering according to selected parameters. Each curve depicts one regional time course randomly selected amongst all the available ones. Please click here to view a larger version of this figure.

5. Sliding-window ISFC Computations

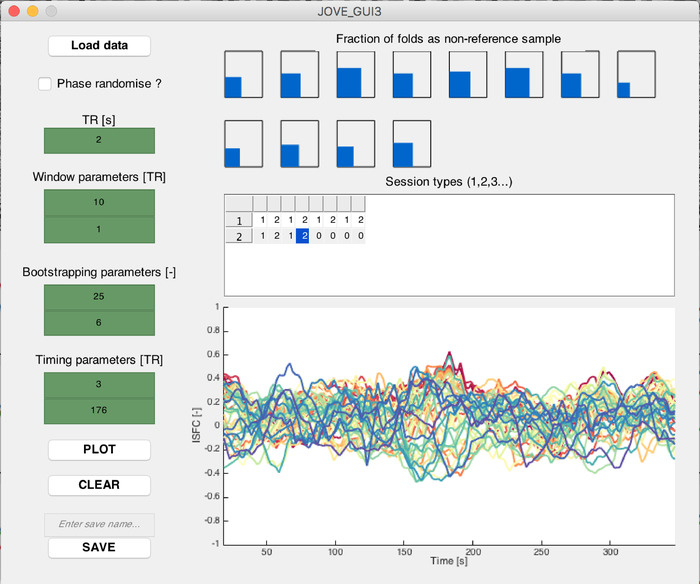

- Type JOVE_GUI3 in the MATLAB terminal to open the first ISFC-related graphical user interface window. Perform the following steps separately for each type of acquired fMRI session segment (stimulus-related segments, resting-state segments of stimulus-related sessions, and purely resting-state segments).

- Click on Load data, and select all the appropriate data files created through step 4.3.

- Select whether the selected session segments should undergo phase randomization.

NOTE: Phase randomization can be used as an alternative option for the generation of null data from stimulus-related signals, if no resting-state recordings are available. - Enter the TR of the data (in seconds) in the dedicated editable text window.

- Enter sliding-window parameters to use for the analysis in the dedicated editable text windows: window size (in TRs) over which connectivity measurements should be computed, and step size (in TRs) by which successive windows should be shifted.

NOTE: In our analyses, we used a window size of 10 TR and a step size of 1 TR. - Modify the "Session types" table to specify which of the loaded session segments were acquired upon the same experimental condition. Use increasing integer numbers from 1 onwards to tag different types of segments (e.g., if the stimulus was displayed for the first or for the second time in a given recording). Leave the table untouched if only one type of session segment was acquired.

NOTE: A session in the present work may refer either to a combined movie/resting-state recording (termed RUN1 and RUN2 in Figure 1A), or to a purely resting-state recording (RUN3). A session segment refers to a sub-portion of a session recording, either when the movie was watched, or when the subjects lied at rest. The above information is used in the subsequently described ISFC computations (see step 5.1.8) to limit the confounding influence of different session segment types. - Enter bootstrapping-related parameters in the dedicated editable text windows: the number of bootstrapping folds over which to perform ISFC computations, and the number of subjects that should constitute the reference group for each fold of ISFC computations.

NOTE: In our analyses, we used 250 bootstrapping folds, and 6 subjects into the reference group. - Enter specifications about which sub-portion of time courses should be analyzed in the Timing parameters section, in the dedicated editable text windows. A start index and an end index (in TRs) should be provided. To analyze the whole recording duration, use 1 as start index and the number of samples as end index.

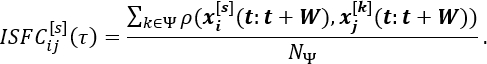

- Click on the Plot button to perform ISFC computations. Displays are gradually updated over time, along with the amount of elapsed bootstrapping folds. For a region pair (i,j) and a sliding window index τ, ISFC is computed as the average of cross-correlations between session segment s and all session segments from the reference group, within a sliding-window of length W; denote this reference group by Ψ, its number of subjects by NΨ, and let xi[s](t) the time course of region i for session segment s at time t; an ISFC estimate is then given by:

ISFC measurements are computed over the specified amount of bootstrapping folds, and with the selected number of session segments used as a reference group at each fold (see step 5.1.6). If several session segment subtypes are included, a mixture of subtype samples always composes the reference group. The final output for each session segment is the average ISFC across all folds in which it was not included as a reference measurement.

NOTE: The reference group is the set of session segments to which the functional time courses of session segment s are compared at each fold of the bootstrapping process. For the results to be more robust to outlier data points, ISFC is computed multiple times on a different reference group (that is, a different subset of session segments). Importantly, the acquisition time t does not match the sliding window index τ, as the latter is computed over a set of W data points, and relies on the window step size for successive estimates. The bootstrapping process was inspired from a former study by Byrge et al.33. - To save the outputs for following steps, enter a save name in the dedicated editable text window, and click on the Save button. To clear the content of the window, click on the Clear button.

Supplementary Figure 3: Example screenshot from the first ISFC-related graphical user interface window. (Top plot) Schematic representation of how often each considered session has its ISFC measurements computed (i.e., is not selected within the reference group). (Bottom plot) On an indicative subject, ISFC time courses computed for fifty example connections, selected as the ones exhibiting the largest summed absolute ISFC values across time. Please click here to view a larger version of this figure.

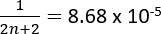

- Type JOVE_GUI4 in the MATLAB terminal to open the second ISFC-related graphical user interface window.

- Click on Load ISFC data and select the stimulus-related ISFC output file(s) created in step 5.1.

- Click on Load null data and select, depending on the used null data generation scheme, either the resting-state ISFC, or the phase randomized stimulus-related ISFC output file(s) created in step 5.1.

- Click on Load codebook and select the codebook file created in step 4.3.

- Enter the TR of the data (in seconds) in the dedicated editable text window.

- Enter the sliding-window parameters used in the computations of step 5.1 (window size and step size, in TRs) in the dedicated editable text windows.

- Enter (in percent) the α-value at which the ISFC time courses should be thresholded to highlight significant changes in the dedicated editable text window.

NOTE: Here and elsewhere, when referring to an α-value of 2.5%, it means that significance is achieved when a value is lower than the 2.5th percentile, or larger than the 97.5th percentile, of the null data. In our analyses, we had 5,762 resting-state data points to our disposal, and selected an α-value of 10-4. This means that we wanted 0.01% of data samples to be larger or equal to the selected thresholds past which an ISFC excursion would be deemed significant. For comparison purposes, the α-level demanded by Bonferroni correction would be 0.05/44,551 = 1.12 x 10-6, and the most stringent possible α-level enabled with our amount of data (n samples) would be .

. - Click on the Plot button to perform the ISFC thresholding process, in which all available null ISFC measurements are aggregated, for a given connection, to construct a null distribution, following which stimulus-related ISFC measurements are thresholded according to the selected α-value. Time points at which a stimulus-related ISFC value statistically significantly exceeds the null distribution are tagged as -1/+1 for significant ISFC decreases and increases, respectively.

NOTE: The thresholding process draws inspiration from the resting-state dynamic FC work of Betzel et al.23. - To visualize the ISFC spatial patterns at different time points, drag the slider below the ISFC excursion plot.

Supplementary Figure 4: Example screenshot from the second ISFC-related graphical user interface window. (Top left plot) On an indicative subject, ISFC time courses computed for three example connections, selected as the ones exhibiting the largest amount of significant ISFC excursions and displayed with their associated computed significance thresholds (horizontal lines). (Bottom left plot) For the same connections, associated excursion time courses averaged across subjects, with two-tailed 95% confidence intervals displayed as error measure. (Right plot) Spatial ISFC pattern (averaged ISFC excursions across subjects) for a selected time point indicated by a vertical black line on the ISFC and excursion plots. Positive ISFC excursions are shown in yellow, and negative ones in pink. The size and color code of the nodes are proportional to their degree. Please click here to view a larger version of this figure.

Representative Results

Here, we considered n = 15 typically developing (TD) subjects for whom we obtained written, informed consent. All were right-handed males (23.42 ± 7.8 years old). The chosen paradigm was an audio-visual scientific documentary for youngsters about the dangers of sun exposure. It contains a large array of visual, auditory and social stimuli, and can be watched at https://miplab.epfl.ch/index.php/miplife/research/supplement-asd-study.

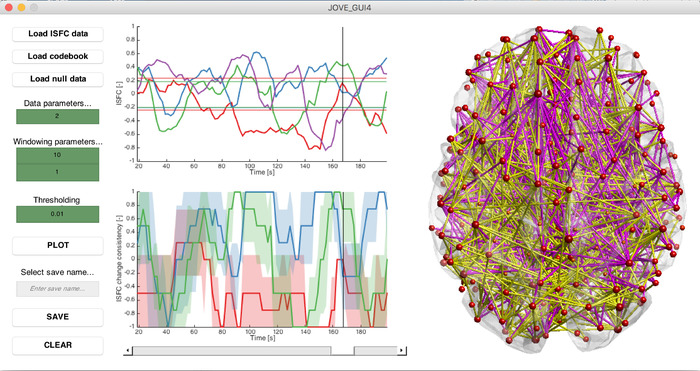

We acquired two sessions per subject (RUN1 and RUN2) in which the assessed movie was displayed from 5 to 353 s (5.8 min duration). A resting-state segment also followed from 386 to 678 s (4.9 min duration). In addition, one solely resting-state session (RUN3) was acquired for each subject (excluding one who suffered from claustrophobia), lasting for 310 s (5.2 min). Example movie scenes and the timing of acquired data are summarized in Figure 1A. Importantly, the acquisition protocol was not optimal in the sense that resting-state recordings acquired just after movie exposure may be partly corrupted by spillover effects27; we make use of this data in the present findings to have a satisfying amount of samples for statistical thresholding, but this should be avoided whenever possible.

We excluded all sessions for which more than 10% of frames were scrubbed, at a threshold of 0.5 mm, and considered the parcellation from Craddock et al.34 (two-level temporal correlation algorithm) to generate regional time courses, for a total of 299 different brain regions.

ISFC was computed separately on (1) the movie-watching subparts of RUN1 and RUN2, (2) the resting-state subparts of RUN1 and RUN2, and (3) the resting-state RUN3 recordings. We used a window length W = 10 TR for the main presented results, and compare them to a lower value of W = 5 TR. Step size always remained equal to 1 TR. Bootstrapping was performed over 250 folds, including 6 session segments in each reference group.

Figure 1B displays ISFC time courses generated at W = 10 TR and W = 5 TR for three different representative connections: connection 1 involved a left inferior parietal region related to the expectation of moving objects (MNI coordinates: 41,9,32)35, and a right frontal opercular area linked to response inhibition (-34,-52,45)36. This latter region was also implicated in connections 2 and 3, respectively with an area implicated in sensory coordination (54,6,34)37, and one tied to the processing of the meaning of words (6,62,9)38.

A comparison across window lengths reveals that in the W = 5 TR setting, temporal variance in the subjects is overall larger in both the movie-watching and resting-state segment cases as compared to W = 10 TR, a known phenomenon in sliding-window analyses39. For connection 1, regardless of the window length, a localized subpart of the movie-watching recording (at around 55 s) shows a strong, synchronized ISFC increase across subjects, which largely exceeds the range of values taken in the resting-state case. Thus, we expect to capture this temporal subpart as a significant ISFC transient with our thresholding method.

For connection 2, we observe similar temporal dynamics, but for W = 5 TR, the increase becomes less easy to disentangle as compared to the resting-state time courses, due to the larger sliding-window methodology-related noise. As for connection 3, it reflects a case in which there is no clear response to the movie, and thus, the fluctuations from movie-watching and resting-state time courses are similar. The expected outcome at this analytical stage is a mix between connections that show clear stimulus-induced reconfigurations, and connections that do not respond.

Figure 1: Acquisition timing and example ISFC time courses. (A) The movie watched by the subjects involved a wide array of social situations (example images 1 and 4), scientific explanations with colorful panels (example images 2 and 5), and landscape sceneries (example image 3). Three sessions were acquired per subject: two (RUN1 and RUN2) included the movie stimulation (from 5 to 353 s, highlighted in green) followed by a resting-state period (from 386 to 678 s, shown in yellow), while one (RUN3) solely consisted in a resting-state recording (310 s duration, displayed in orange). (B) For three indicative connections (C1, C2 and C3, respectively dark green/red, light green/orange and turquoise/yellow traces), evolution of ISFC over time during movie-watching (cold colors) or resting-state (hot colors). For W = 10 TR (left panel), movie-watching ISFC changes more largely stand out as compared to W = 5 TR (right panel). Each trace reflects the ISFC time course of one session. This figure has partly been modified from Bolton et al.25. Please click here to view a larger version of this figure.

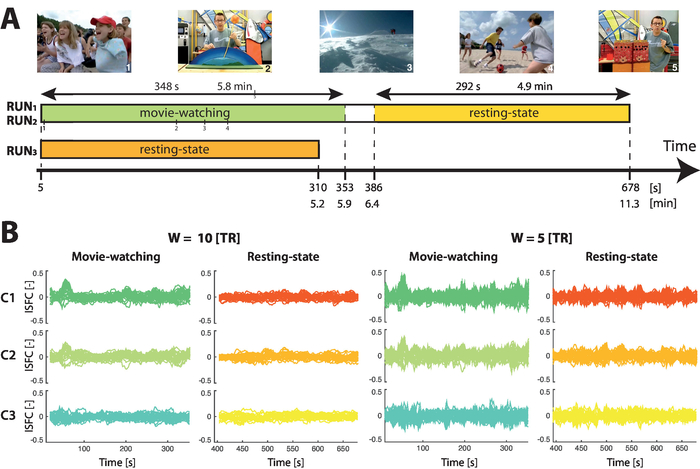

Figure 2A displays the results following statistical thresholding of ISFC time courses, for the same three connections as above. A time course value of 1 means that all subjects underwent the same ISFC increase at the same time point; a value of 0 means that no subject underwent a significant ISFC change; a value of -1 represents a synchronous ISFC decrease across all subjects. As before, we contrast W = 5 TR and W = 10 TR, and we also highlight two α-value cases: α = 0.01%, and α = 5%.

Fitting with the above observations, a lower window length reduces the amount of extracted significant ISFC changes. For connection 1, both W = 5 TR and W = 10 TR, however, extract the same particular moment (t = 55 s) as showing a strong ISFC increase. Taking a hemodynamic delay of roughly 5 s into account, this corresponds to a subpart of the movie when colored lines were extending towards a doll, and abruptly stopped just in front of it (46-49 s), fitting with the role of the involved regions in moving object expectation and response inhibition35,36.

When increasing α from 0.01% to 5%, one can observe a much lower specificity of the detected ISFC transients, likely including many false positives and expectedly showing much less temporal synchrony.

As another perspective that can be set on the data, Figure 2B shows the whole-brain spatial maps of significant ISFC changes at t = 55 s. It can be seen that the response to the movie scene extends far beyond the example connections described here.

Figure 2: Temporal and spatial snapshots of ISFC patterns. (A) ISFC transient time courses, averaged across subjects, for three indicative connections (C1, C2 and C3, respectively dark green, light green and turquoise traces). The movie scene that drove the ISFC changes is highlighted in light grey, and depicted by example images. For W = 10 TR (left column of plots), ISFC changes are more strongly detected than for W = 5 TR (right column of plots). For α = 0.01% (top row of plots), specificity to localized movie cues is larger than for α = 5% (bottom row of plots). Each trace reflects the ISFC transient time course of one session, and the two-tailed 95% confidence intervals are displayed as error measure. (B) For W = 10 TR and α = 0.01%, there is a neat, restricted spatial pattern of ISFC transients at t = 55 s (the peak ISFC transient value for C1); for W = 5 TR and α = 5%, connections undergoing a significant ISFC change at this time are much more numerous. Note that we assume a hemodynamic delay of around 5 s in the described temporality (i.e., a value of 55 s here relates to the movie stimulus at 50 s). This figure has partly been modified from Bolton et al.25. Please click here to view a larger version of this figure.

Discussion

On a dataset of healthy subjects, we demonstrated how synchronous cross-subject increases and decreases in FC, the ISFC transients, would match temporally localized movie cues, providing information that goes beyond a static description. Although the use of cross-subject correlation measures enables to focus the analysis on stimulus-driven functional reconfigurations, one must also be aware that it limits the findings to the effects that are shared across the studied population: hence, low-level sensory processing is expected to be over-represented compared to frontal processing40. To bypass this limitation, new methods that also have the ability to extract the regions that most strongly vary across subjects are being developed41.

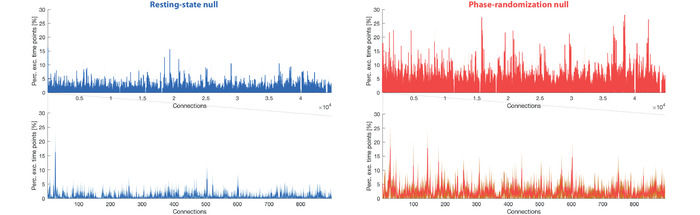

Another limitation from the introduced methodology arises from the sliding-window aspect, as temporal resolution of ISFC transient time courses is lowered compared to frame-wise approaches15. As we showed, a trade-off is needed between a sufficiently low window length to properly resolve dynamic ISFC reconfigurations, and a large enough size to obtain robust estimates. Two critical steps in our framework ensure that the extracted ISFC transients reflect truly occurring changes in connectivity: first, the high-pass filtering of regional time courses with the inverse of the window length32; second, the use of resting-state ISFC data for the generation of a relevant null distribution, with identical acquisition parameters as compared to the stimulus-related data. Of course, the latter also requires a lengthier global acquisition time, so that resting-state data can be gathered on top of stimulus-related sessions. As an alternative approach to avoid the additional resting-state recordings, we also offer the possibility to generate phase randomized data directly from the stimulus-related time courses, an approach often used in dynamic functional connectivity analyses23,24. Further evaluation on a subset of sessions revealed that although the resting-state null method is more conservative, and thus less prone to false positives, the global patterns of ISFC excursion detection were similar across both schemes (see Supplementary Figure 5).

Supplementary Figure 5: Detection of ISFC excursions across null data generation methods. For resting-state (left column, blue plots) or phase randomization (right column, red plots) null data generation methods, percentage of ISFC excursions extracted across connections. The bottom plots are an inset on the connections emanating from the first three considered brain regions. Error represents standard deviation across subjects. Please click here to view a larger version of this figure.

The duration of the resting-state acquisitions actually relates to a critical parameter of the analyses: the α-value. As exemplified above, a too lenient choice will lead to a large amount of false positives in the detected ISFC transients. The larger the amount of available resting-state data, the more stringent the achievable false positive rate, because thresholding can be based on more extreme values from the null distribution. As an indication, for n = 299 atlas regions as here and given our tally of 5,762 resting-state data points, we could at best achieve an α-value close to 0.01% (see step 5.2.6 for mathematical details).

Another key point pertaining to any fMRI analysis lies in the rigorous removal of possible motion-related artifacts from the analyzed data30,42. In particular, if one wishes to apply the introduced pipeline to diseased populations exhibiting marked motion in the scanner, we recommend that on top of including motion variables as covariates in the performed statistical analyses, additional preprocessing steps be run, such as wavelet denoising43 or ICA-AROMA44. Group comparison, for instance to compare ISFC transients between a healthy and a diseased group, can readily be performed by running the described approach in parallel on both groups of interest (see Bolton et al.25 for an example on a population diagnosed with autism spectrum disorders). However, a difference between the groups can then arise in two distinct settings: (1) an absent ISFC change in one group, or (2) a more heterogeneous evolution in that group. To disentangle those two factors, the pipeline should be run once more for the diseased group, using the healthy subject set as the reference group at the bootstrapping step. The former case would still result in an absent response, whereas the latter would not.

On top of what we described here, the introduced methodology also opens up promising future avenues: from an analytical side, ISFC transient maps could be viewed as brain graphs from which metrics quantifying brain connectivity could be derived45, or dynamic ISFC states could be extracted through clustering approaches and assessed in terms of their spatial and temporal characteristics17,46. In addition, one could also envisage the use of more sophisticated connectivity measurement tools than Pearson's correlation coefficient to reveal subtler sides of FC47,48.

From the experimental side, the application of our pipeline to a more extended set of paradigms is a promising perspective: for example, instead of a movie as studied here, one could envisage to use a piece of music49 or a narrative story13,50 as a time-locked stimulus. Alternatively, it could even be envisioned, through hyperscanning51, to probe naturalistic social communication52,53.

Acknowledgements

This work was supported in part by each of the following: the Swiss National Science Foundation (grant number 205321_163376 to DVDV), the Bertarelli Foundation (to TB and DVDV), the Center for Biomedical Imaging (CIBM), and the National Agency for Research (tempofront grant number 04701 to ALG). The authors would like to thank Roberto Martuzzi and Giulia Preti for their contribution to the video content of this work as, respectively, the MRI operator and scanned volunteer.

Materials

| Name | Company | Catalog Number | Comments |

| Freesurfer version 6.0 | Laboratory for Computational Neuroimaging, Martinos Center for Biomedical Imaging, Boston (MA), USA | https://surfer.nmr.mgh.harvard.edu/fswiki/DownloadAndInstall | A MATLAB-compatible toolbox enabling to carry out various processing, visualisation and analytical steps on functional magnetic resonance imaging data |

| MATLAB_R2017a | MathWorks | https://ch.mathworks.com/downloads/ | Working version of the MATLAB computational software (version 2014a or more recent should be used) |

| Statistical Parametric Mapping version 12.0 (SPM12) | Wellcome Trust Center for Neuroimaging, University College London, London, UK | https://www.fil.ion.ucl.ac.uk/spm/software/spm12/ | A MATLAB-compatible toolbox enabling to perform statistical analyses on functional magnetic resonance imaging data |

| Tim-Trio 3 T MRI scanner | Siemens | https://www.healthcare.siemens.ch/magnetic-resonance-imaging/for-installed-base-business-only-do-not-publish/magnetom-trio-tim | Magnetic resonance imaging scanner in which subjects have their functional brain activity recorded (at 3 T) |

References

- Friston, K. J. Functional and effective connectivity in neuroimaging: A synthesis. Human Brain Mapping. 2 (1-2), 56-78 (1994).

- Gonzales-Castillo, J., et al. Tracking ongoing cognition in individuals using brief, whole-brain functional connectivity patterns. Proceedings of the National Academy of Sciences U.S.A. 112 (28), 8762-8767 (2015).

- Peltz, E., et al. Functional connectivity of the human insular cortex during noxious and innocuous thermal stimulation. Neuroimage. 54 (2), 1324-1335 (2011).

- Shirer, W. R., Ryali, S., Rykhlevskaia, E., Menon, V., Greicius, M. D. Decoding subject-driven cognitive states with whole-brain connectivity patterns. Cerebral Cortex. 22 (1), 158-165 (2012).

- Hasson, U., Nir, Y., Levy, I., Fuhrmann, G., Malach, R. Intersubject Synchronization of Cortical Activity During Natural Vision. Science. 303 (5664), 1634-1640 (2004).

- Hasson, U., Furman, O., Clark, D., Dudai, Y., Davachi, L. Enhanced Intersubject Correlations during Movie Viewing Correlate with Successful Episodic Encoding. Neuron. 57 (3), 452-462 (2008).

- Hasson, U., Yang, E., Vallines, I., Heeger, D. J., Rubin, N. A Hierarchy of Temporal Receptive Windows in Human Cortex. Journal of Neuroscience. 28 (10), 2539-2550 (2008).

- Jääskeläinen, I. P., et al. Inter-Subject Synchronization of Prefrontal Cortex Hemodynamic Activity During Natural Viewing. The Open Neuroimaging Journal. 2, 14 (2008).

- Wilson, S. M., Molnar-Szakacs, I., Iacoboni, M. Beyond Superior Temporal Cortex: Intersubject Correlations in Narrative Speech Comprehension. Cerebral Cortex. 18 (1), 230-242 (2008).

- Hasson, U., et al. Shared and idiosyncratic cortical activation patterns in autism revealed under continuous real-life viewing conditions. Autism Research. 2 (4), 220-231 (2009).

- Salmi, J., et al. The brains of high functioning autistic individuals do not synchronize with those of others. NeuroImage: Clinical. 3, 489-497 (2013).

- Mantini, D., et al. Interspecies activity correlations reveal functional correspondence between monkey and human brain areas. Nature Methods. 9 (3), 277 (2012).

- Simony, E., et al. Dynamic reconfiguration of the default mode network during narrative comprehension. Nature Communications. 7, 12141 (2016).

- Hutchison, R. M., et al. Dynamic functional connectivity: promise, issues, and interpretations. Neuroimage. 80, 360-378 (2013).

- Preti, M. G., Bolton, T. A. W., Van De Ville, D. The dynamic functional connectome: state-of-the-art and perspectives. Neuroimage. 160, 41-54 (2017).

- Gonzalez-Castillo, J., Bandettini, P. A. Task-based dynamic functional connectivity: Recent findings and open questions. Neuroimage. 180, 526-533 (2018).

- Allen, E. A., et al. Tracking whole-brain connectivity dynamics in the resting state. Cerebral Cortex. 24 (3), 663-676 (2014).

- Sakoğlu, &. #. 2. 2. 0. ;., et al. A method for evaluating dynamic functional network connectivity and task-modulation: application to schizophrenia. Magnetic Resonance Materials in Physics, Biology and Medicine. 23 (5-6), 351-366 (2010).

- Douw, L., Wakeman, D., Tanaka, N., Liu, H. State-dependent variability of dynamic functional connectivity between frontoparietal and default networks relates to cognitive flexibility. Neuroscience. 339, 12-21 (2016).

- Mooneyham, B. W., et al. States of mind: characterizing the neural bases of focus and mind-wandering through dynamic functional connectivity. Journal of Cognitive Neuroscience. 29 (3), 495-506 (2017).

- Kim, D., Kay, K., Shulman, G. L., Corbetta, M. A New Modular Brain Organization of the BOLD Signal during Natural Vision. Cerebral Cortex. 28 (9), 3065-3081 (2018).

- Lynch, L. K., et al. Task-Evoked Functional Connectivity Does Not Explain Functional Connectivity Differences Between Rest and Task Conditions. Human Brain Mapping. 39, 4939-4948 (2018).

- Betzel, R. F., Fukushima, M., He, Y., Zuo, X. N., Sporns, O. Dynamic fluctuations coincide with periods of high and low modularity in resting-state functional brain networks. Neuroimage. 127, 287-297 (2016).

- Hindriks, R., et al. Can sliding-window correlations reveal dynamic functional connectivity in resting-state fMRI?. Neuroimage. , 242-256 (2016).

- Bolton, T. A. W., Jochaut, D., Giraud, A. L., Van De Ville, D. Brain dynamics in ASD during movie-watching show idiosyncratic functional integration and segregation. Human Brain Mapping. 39 (6), 2391-2404 (2018).

- Jochaut, D., et al. Atypical coordination of cortical oscillations in response to speech in autism. Frontiers in Human Neuroscience. 9, 171 (2015).

- Dodero, L., Sona, D., Meskaldji, D. E., Murino, V., Van De Ville, D. Traces of human functional activity: Moment-to-moment fluctuations in fMRI data. Biomedical Imaging (ISBI), 2016 IEEE 13th International Symposium. , 1307-1310 (2016).

- Fischl, B. Freesurfer. Neuroimage. 62 (2), 774-781 (2012).

- Yan, C., Zang, Y. DPARSF: a MATLAB toolbox for "pipeline" data analysis of resting-state fMRI. Frontiers in Systems Neuroscience. 4, 13 (2010).

- Power, J. D., et al. Methods to detect, characterize, and remove motion artifact in resting state fMRI. Neuroimage. 84, 320-341 (2014).

- Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggaer, B. L., Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage. 59 (3), 2142-2154 (2012).

- Leonardi, N., Van De Ville, D. On spurious and real fluctuations of dynamic functional connectivity during rest. Neuroimage. 104, 430-436 (2015).

- Byrge, L., Dubois, J., Tyszka, J. M., Adolphs, R., Kennedy, D. P. Idiosyncratic brain activation patterns are associated with poor social comprehension in autism. Journal of Neuroscience. 35 (14), 5837-5850 (2015).

- Craddock, R. C., James, G. A., Holtzheimer, P. E., Hu, X. P., Mayberg, H. S. A whole brain fMRI atlas generated via spatially constrained spectral clustering. Human Brain Mapping. 33 (8), 1914-1928 (2012).

- Shulman, G. L., et al. Areas involved in encoding and applying directional expectations to moving objects. Journal of Neuroscience. 19 (21), 9480-9496 (1999).

- Sebastian, A., et al. Disentangling common and specific neural subprocesses of response inhibition. Neuroimage. 64, 601-615 (2013).

- Oullier, O., Jantzen, K. J., Steinberg, F. L., Kelso, J. A. S. Neural substrates of real and imagined sensorimotor coordination. Cerebral Cortex. 15 (7), 975-985 (2004).

- Chan, A. H., et al. Neural systems for word meaning modulated by semantic ambiguity. Neuroimage. 22 (3), 1128-1133 (2004).

- Lindquist, M. A., Xu, Y., Nebel, M. B., Caffo, B. S. Evaluating dynamic bivariate correlations in resting-state fMRI: A comparison study and a new approach. Neuroimage. 101 (1), 531-546 (2014).

- Ren, Y., Nguyen, V. T., Guo, L., Guo, C. C. Inter-subject functional correlation reveal a hierarchical organization of extrinsic and intrinsic systems in the brain. Scientific Reports. 7 (1), 10876 (2017).

- Kauppi, J. P., Pajula, J., Niemi, J., Hari, R., Tohka, J. Functional brain segmentation using inter-subject correlation in fMRI. Human Brain Mapping. 38 (5), 2643-2665 (2017).

- Van Dijk, K. R., Sabuncu, M. R., Buckner, R. L. The influence of head motion on intrinsic functional connectivity MRI. Neuroimage. 59 (1), 431-438 (2012).

- Patel, A. X., et al. A wavelet method for modeling and despiking motion artifacts from resting-state fMRI time series. Neuroimage. 95, 287-304 (2014).

- Pruim, R. H., et al. ICA-AROMA: A robust ICA-based strategy for removing motion artifacts from fMRI data. Neuroimage. , 267-277 (2015).

- Rubinov, M., Sporns, O. Complex network measures of brain connectivity: uses and interpretations. Neuroimage. 52 (3), 1059-1069 (2010).

- Damaraju, E., et al. Dynamic functional connectivity analysis reveals transient states of dysconnectivity in schizophrenia. NeuroImage: Clinical. 5, 298-308 (2014).

- Smith, S., et al. Network modelling methods for FMRI. Neuroimage. 54 (2), 875-891 (2011).

- Meskaldji, D. E., et al. Prediction of long-term memory scores in MCI based on resting-state fMRI. NeuroImage: Clinical. 12, 785-795 (2016).

- Abrams, D. A., et al. Inter-subject synchronization of brain responses during natural music listening. European Journal of Neuroscience. 37 (9), 1458-1469 (2013).

- Huth, A. G., de Heer, W. A., Friffiths, T. L., Theunissen, F. E., Gallant, J. L. Natural speech reveals the semantic maps that tile human cerebral cortex. Nature. 532 (7600), 453 (2016).

- Montague, P. R., et al. Hyperscanning: simultaneous fMRI during linked social interactions. Neuroimage. 16, 1159-1164 (2002).

- Bilek, E., et al. Information flow between interacting human brains: Identification, validation, and relationship to social expertise. Proceedings of the National Academy of Sciences U.S.A. 112 (16), 5207-5212 (2015).

- Kinreich, S., Djalovski, A., Kraus, L., Louzoun, Y., Feldman, R. Brain-to-brain synchrony during naturalistic social interactions. Scientific Reports. 7 (1), 17060 (2017).

This article has been published

Video Coming Soon

ABOUT JoVE

Copyright © 2024 MyJoVE Corporation. All rights reserved