Modulation of the Neurophysiological Response to Fearful and Stressful Stimuli Through Repetitive Religious Chanting

Summary

The present event-related potential (ERP) study provides a unique protocol for investigating how religious chanting can modulate negative emotions. The results demonstrate that the late positive potential (LPP) is a robust neurophysiological response to negative emotional stimuli and can be effectively modulated by repetitive religious chanting.

Abstract

In neuropsychological experiments, the late positive potential (LPP) is an event-related potential (ERP) component that reflects the level of one's emotional arousal. This study investigates whether repetitive religious chanting modulates the emotional response to fear- and stress-provoking stimuli, thus leading to a less responsive LPP. Twenty-one participants with at least one year of experience in the repetitive religious chanting of "Amitabha Buddha" were recruited. A 128-channel electroencephalography (EEG) system was used to collect EEG data. The participants were instructed to view negative or neutral pictures selected from the International Affective Picture System (IAPS) under three conditions: repetitive religious chanting, repetitive nonreligious chanting, and no chanting. The results demonstrated that viewing the negative fear- and stress-provoking pictures induced larger LPPs in the participants than viewing neutral pictures under the no-chanting and nonreligious chanting conditions. However, this increased LPP largely disappeared under repetitive religious chanting conditions. The findings indicate that repetitive religious chanting may effectively alleviate the neurophysiological response to fearful or stressful situations for practitioners.

Introduction

The late positive potential (LPP) has long been associated with emotional arousal, and it has been reliably used in emotion-related research1,2. Religious practice is widespread in both Eastern and Western countries. It is often asserted that religious practice can alleviate practitioners' anxiety and stress when they face adverse events, especially during times of difficulty3. Nonetheless, this has seldom been demonstrated under rigorous experimental settings.

Numerous studies have confirmed that emotion regulation can be learned with different strategies and frameworks4,5,6. A few studies have shown that mindfulness and meditation can modulate the neural response to affective events7,8. Recently, it was found that meditation practitioners may employ emotion modulation strategies other than cognitive appraisal, suppression, and distraction8,9. Stimuli from the International Affective Picture System (IAPS) can be used to elicit positive or negative emotions reliably, and there are standard criteria for selecting pictures with specified valence and arousal levels in affective research10.

Emotional stimuli can elicit early and late responses in the brain3,11. Similarly, the Buddhist tradition analyzes mental activity in terms of initial and secondary mental processes3,12,13. The Sallatha Sutta (The Arrow Sutta), an early Buddhist text, mentions that cognitive training can tame emotion. The Arrow Sutta states that both a well-trained Buddhist practitioner and an untrained person experience an initial, negative perception of pain when facing a harmful event13. The Sallatha Sutta likens this unavoidable initial pain to being hit by an arrow. Early perceptual pain is comparable to the stage of early processing when a person views a highly negative picture; this early neural processing usually elicits an N1 component. Untrained persons may develop excessive emotions, such as worry, anxiety, and stress, after experiencing the initial, unavoidable painful feelings. According to the Sallatha Sutta, this late-developing negative emotion or psychological pain is like being hit by a second arrow. The event-related potential (ERP) experiment in the current design may capture these early and later psychological processes, assuming that N1 and LPP correspond to the two arrows mentioned above.

In this protocol, the repetitive chanting of the name "Amitabha Buddha" (Sanskrit: Amitābha) was chosen to test the potential effect of religious chanting when an individual is in a fearful or stressful situation. This form of chanting is one of the most popular religious practices among Chinese Buddhists, and it is a core practice of East Asian Pure Land Buddhism14. It was hypothesized that repetitive religious chanting would reduce the brain response to provoking stimuli, namely, the LPP induced by fearful or stressful pictures. Both EEG and electrocardiogram (ECG) data were collected to assess participants' neurophysiological responses under different conditions.

Protocol

This ERP study was approved by The University of Hong Kong Institutional Review Board. Before participating in this study, all participants signed a written informed consent form.

1. Experimental design

- Recruit participants

- Recruit participants with at least 1 year (~ 200-3,000 h) of experience in chanting the name of "Amitabha Buddha" for this study.

NOTE: In the present study, 21 human participants ranging from 40-52 years old were selected; 11 were males.

- Religious chanting vs. nonreligious chanting

- Chant the name of "Amitabha Buddha" for 40 s: the first 20 s while viewing the image of Amitabha Buddha and the next 20 s while viewing IAPS images.

- Chant only the four characters of the name of "Amitabha Buddha" while viewing the image of Amitabha Buddha used in the Pure Land school14.

- Chant the name of Santa Claus (nonreligious chanting condition) for 40 s: the first 20 s while viewing the image of Santa Claus and the next 20 s while viewing IAPS images.

- Chant only the four characters of the name of Santa Claus and imagine Santa Claus.

- Keep silent for 40 s: the first 20 s while viewing a blank image for control purposes and the next 20 s while viewing IAPS images.

NOTE: No chanting.

- EEG recording system

- Record EEG data using a 128-channel EEG system that consists of an amplifier, headbox, EEG cap, and two desktop computers (see Table of Materials).

- Stimuli presenting system

- Use stimulus presentation software (see Table of Materials) to show neutral and negative pictures from the IAPS on a desktop computer.

- ECG recording system

- Use a physiological data recording system to record ECG data (see Table of Materials).

2. Affective modulation experiment

NOTE: The experiment had two factors in a 2 x 3 design. The first factor was the picture type: neutral or negative (fear- and stress-provoking). The second factor was the chanting type: chanting "Amitabha Buddha", chanting "Santa Claus", or no chanting (silent viewing).

- Use a block design, as it may more effectively elicit emotion-related components15.

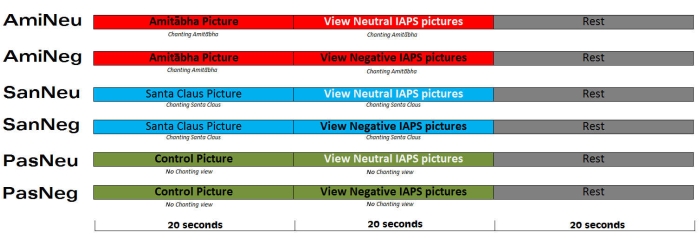

NOTE: There were six conditions, and the sequences were randomized and counterbalanced between the participants (Figure 1). The six conditions were as follows: religious chanting while viewing negative pictures (AmiNeg); religious chanting while viewing neutral pictures (AmiNeu); no chanting while viewing negative pictures (PasNeg); no chanting while viewing neutral pictures (PasNeu); nonreligious chanting while viewing negative pictures (SanNeg); and nonreligious chanting while viewing neutral pictures (SanNeu).

Figure 1: The experimental procedure. There were six pseudorandomized conditions, and each participant received a pseudorandomized sequence. Each condition was repeated six times in two separate sessions. This figure has been adapted from Reference3.
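The pseudorandomized block order described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the study presented stimuli with dedicated stimulus presentation software, and the exact counterbalancing scheme is not specified in the protocol. The sketch assumes that the six conditions are shuffled within each of the six repetitions and that the participant ID is used as the random seed; the function name `block_sequence` is hypothetical.

```python
import random

# Condition labels as defined in the protocol.
CONDITIONS = ["AmiNeg", "AmiNeu", "PasNeg", "PasNeu", "SanNeg", "SanNeu"]
REPETITIONS = 6  # each condition was repeated six times (Figure 1)


def block_sequence(participant_id, conditions=CONDITIONS, repetitions=REPETITIONS):
    """Return a pseudorandomized block order for one participant.

    Assumption of this sketch: the conditions are shuffled independently
    within each repetition, so every condition occurs exactly once per
    repetition. Seeding with the participant ID makes the order reproducible.
    """
    rng = random.Random(participant_id)
    sequence = []
    for _ in range(repetitions):
        cycle = list(conditions)
        rng.shuffle(cycle)
        sequence.extend(cycle)
    return sequence


if __name__ == "__main__":
    for pid in (1, 2):
        # Show the first repetition for two hypothetical participants.
        print(pid, block_sequence(pid)[:6])
```

Shuffling within repetitions keeps the six conditions evenly spread over the recording, which is one simple way to limit order effects; a full Latin-square counterbalancing across participants would be an alternative.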

- Show each picture for ~1.8-2.2 s, with an interstimulus interval (ISI) of 0.4-0.6 s.

NOTE: There were 10 pictures of the same type (neutral or negative) in each session.

- Allow a rest period of 20 s after each session to counter the potential residual effects of chanting or picture viewing on the next session.

- Present the pictures on a CRT monitor at a distance of 75 cm from the participants' eyes, with visual angles of 15° (vertical) and 21° (horizontal).

- Ask the participants to observe the pictures carefully.

- Provide a brief practice run to the participants to allow them to familiarize themselves with each condition. Use a video monitor to ensure that the participants do not fall asleep.

- Give the participants a 10 min rest in the middle of the 40 min experiment.
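The viewing geometry above (75 cm distance, visual angles of 15° vertical and 21° horizontal) determines the physical size of the displayed picture. The following sketch shows the arithmetic, assuming that the picture is centered on the line of sight and perpendicular to it; the function name `stimulus_size_cm` is hypothetical.

```python
import math


def stimulus_size_cm(visual_angle_deg, distance_cm):
    """Size of a centered stimulus that subtends visual_angle_deg at distance_cm.

    Uses size = 2 * d * tan(angle / 2), which assumes the stimulus is
    centered on, and perpendicular to, the line of sight.
    """
    return 2.0 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2.0)


if __name__ == "__main__":
    height_cm = stimulus_size_cm(15, 75)  # vertical extent
    width_cm = stimulus_size_cm(21, 75)   # horizontal extent
    print(f"height: {height_cm:.1f} cm, width: {width_cm:.1f} cm")
```

Under these assumptions, the pictures would measure roughly 19.7 cm by 27.8 cm on the screen.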

3. EEG and ECG data collection

NOTE: Before coming to the experiment, ask each participant to wash their hair and scalp thoroughly without using a conditioner or anything else that may increase the system's impedance. Collect the EEG and ECG data simultaneously by two separate systems.

- Inform each participant of the experimental procedures, that is, that affective pictures will be viewed under different chanting conditions.

- Set the sampling rate to 1,000 Hz, and maintain the impedance of each electrode below 30 kΩ whenever possible or according to the system's requirements.

- Collect physiological data, including ECG data using a physiological data recording system (see Table of Materials).

4. EEG data analysis

- Process and analyze the EEG data with EEGLAB, an open-source software16 (see Table of Materials and Supplementary Files 1-2), following the steps below.

- Use the EEGLAB function "pop_resample" to resample the data from 1,000 Hz to 250 Hz to maintain a reasonable data file size. Click on Tools > Change sampling rate.

- Use the EEGLAB function "pop_eegfiltnew" to filter the data with a finite impulse response (FIR) filter with 0.1-100 Hz passband. Click on Tools > Filter the data > Basic FIR filter (new, default).

- Filter the data again with a nonlinear infinite impulse response (IIR) filter with a 47-53 Hz stopband to reduce the noise from the alternating current. Click on Tools > Filter the data > select Notch filter the data instead of pass band.

- Visually inspect the data to remove strong artifacts generated by eye and muscle movements. Click on Plot > Channel data (scroll).

- Visually inspect the data again for any consistent noise generated by any channel, and note the bad channels.

- Reconstruct the bad channels using spherical interpolation. Click on Tools > Interpolate electrodes > Select from the data channels.

- Run independent component analysis (ICA) with the open-source algorithm "runica"16. Click on Tools > Run ICA.

- Remove the independent components (ICs) corresponding to eye movements, blinks, muscle movement, and line noise. Click on Tools > Reject data using ICA > Reject components by map.

- Reconstruct the data using the remaining ICs. Click on Tools > Remove components.

- Filter the data with a 30 Hz low-pass filter. Click on Tools > Filter the data > Basic FIR filter (new, default).

- Obtain ERP data by extracting and averaging time-locked epochs for each condition with a time window of -200 to 0 ms as the baseline and 0 to 800 ms as the ERP. Click on Tools > Extract epochs.

- Re-reference the ERP data with the average of the left and right mastoid channels. Click on Tools > Re-reference.

- Repeat the above steps for the datasets from all participants and compare the differences between conditions using the t-test or repeated-measures ANOVA in a statistical analysis software (see Table of Materials).

- Define time windows for N1 and LPP based on established theories8,17 and the current data3.

NOTE: In this work, N1 was defined as 100-150 ms and LPP as 300-600 ms from stimulus onset; LPP is most prominent in the central-parietal region (Figure 2).

- Find the neutral vs. negative picture difference at the N1 component using a paired t-test in each of the three chanting conditions (Figure 3).

- Find the neutral vs. negative picture difference at the LPP component using a paired t-test in each of the three chanting conditions (Figure 4).

- Perform region of interest (ROI) analysis on N1 and LPP components by averaging relevant channels to represent a region.

NOTE: To select the ROI, the epochs of all three conditions were averaged to identify the channels where the neutral and negative pictures differed significantly in the specific time window (e.g., for N1 or LPP).

- Compare the difference at N1 and LPP separately, using repeated measures ANOVA and post hoc statistics in statistical analysis software.

NOTE: Use post hoc analysis (Bonferroni correction) and determine significant differences between the two conditions separately if the model was significant. The significance threshold was set at p < 0.05.
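The component measurement described in this section (baseline of -200 to 0 ms, N1 at 100-150 ms, LPP at 300-600 ms, data resampled to 250 Hz) amounts to averaging the ERP over a window of samples. The sketch below illustrates that arithmetic in Python for a single channel. It is not the EEGLAB procedure used in the study; the function names and the half-open window convention are assumptions of this example.

```python
SAMPLING_RATE_HZ = 250   # after resampling from 1,000 Hz
EPOCH_START_MS = -200    # epochs run from -200 ms to 800 ms

BASELINE_WINDOW_MS = (-200, 0)
N1_WINDOW_MS = (100, 150)
LPP_WINDOW_MS = (300, 600)


def window_indices(start_ms, end_ms, srate=SAMPLING_RATE_HZ,
                   epoch_start_ms=EPOCH_START_MS):
    """Convert a latency window in ms to a half-open sample range [first, last)."""
    first = (start_ms - epoch_start_ms) * srate // 1000
    last = (end_ms - epoch_start_ms) * srate // 1000
    return first, last


def mean_amplitude(erp, window_ms):
    """Mean amplitude of a single-channel ERP within a latency window."""
    first, last = window_indices(*window_ms)
    segment = erp[first:last]
    if not segment:
        raise ValueError("window lies outside the epoch")
    return sum(segment) / len(segment)


def baseline_correct(erp):
    """Subtract the mean of the pre-stimulus baseline from every sample."""
    baseline = mean_amplitude(erp, BASELINE_WINDOW_MS)
    return [value - baseline for value in erp]


if __name__ == "__main__":
    # Synthetic single-channel ERP (hypothetical values, in microvolts):
    # a constant offset of 2 with a 5-unit deflection in the LPP window.
    erp = [2.0] * 250
    for i in range(*window_indices(*LPP_WINDOW_MS)):
        erp[i] = 7.0
    corrected = baseline_correct(erp)
    print("N1:", mean_amplitude(corrected, N1_WINDOW_MS))
    print("LPP:", mean_amplitude(corrected, LPP_WINDOW_MS))
```

At 250 Hz, each sample spans 4 ms, so the LPP window covers samples 125 to 199 of the epoch in this convention.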

5. ERP source analysis

- Perform the ERP source analysis18 with the SPM19 open-source software (see Table of Materials) following the steps below.

- Link the EEG cap sensor coordinate system to the coordinate system of a standard structural MRI image (Montreal Neurological Institute (MNI) coordinates) by landmark-based co-registration. In SPM, click on Batch > SPM > M/EEG > Source reconstruction > Head model specification.

- Perform forward computation to calculate the effect of each dipole on the cortical mesh imposed on the EEG sensors. Under the same Batch Editor, click on SPM > M/EEG > Source reconstruction > Source inversion.

NOTE: These results were placed in a G matrix (n x m), where n is the number of sensors (EEG space dimension) and m is the number of mesh vertices (source space dimension). The source model was X = GS, where X is an n x k matrix denoting the ERP data of each condition, k is the number of time points, and S is an m x k matrix indicating the ERP source.

- Use the greedy search-based multiple sparse priors algorithm (since S is unknown) in the third step (among the many algorithms available) to perform the inverse reconstruction because it is more reliable than other methods20. Choose MSP (GS) for the Inversion type in the Source Inversion window.

- Determine the difference between conditions using general linear modeling in SPM. Set the significance level to p < 0.05. Under Batch Editor, click on SPM > Stats > Factorial design specification.
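The forward model X = GS in the note above can be illustrated with a toy example. The sketch below only shows how the matrix dimensions fit together (n sensors, m mesh vertices, k time points); it does not implement the multiple sparse priors inversion, which is performed in SPM. All numerical values and the helper name `matmul` are hypothetical.

```python
def matmul(a, b):
    """Multiply an n x m matrix by an m x k matrix (both given as lists of rows)."""
    m = len(b)
    if any(len(row) != m for row in a):
        raise ValueError("inner dimensions do not match")
    k = len(b[0])
    return [[sum(a[i][j] * b[j][t] for j in range(m)) for t in range(k)]
            for i in range(len(a))]


# Toy sizes: n = 2 sensors, m = 3 mesh vertices, k = 2 time points.
G = [[1.0, 0.5, 0.0],   # lead field: contribution of each vertex to sensor 1
     [0.0, 0.5, 1.0]]   # ... and to sensor 2
S = [[2.0, 0.0],        # source activity at each vertex over time
     [0.0, 4.0],
     [1.0, 1.0]]

X = matmul(G, S)        # simulated sensor-level ERP, n x k

if __name__ == "__main__":
    for row in X:
        print(row)
```

In practice there are far more mesh vertices than sensors, so recovering S from X is underdetermined; this is why the inverse step requires additional priors such as those used by the MSP algorithm.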

6. ECG data and behavioral assessment analysis

- Use physiological and data processing software to process and analyze the ECG data (see Table of Materials). Calculate the mean scores for each condition. In EEGLAB, click on Tools > FMRIB Tools > Detect QRS events21.

NOTE: Similar to the ERP amplitude analysis, statistical software was used to further analyze the data with repeated measures ANOVA. Post hoc analysis was performed to determine the significant differences between the two conditions separately if the model was significant. The significance level was set to p < 0.05.

- Ask the participants to rate their belief in the efficacy of chanting the subject's name (Amitabha Buddha, Santa Claus, etc.) on a 1-9 scale, where 1 is considered weakest and 9 the strongest.
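Once QRS events have been detected, the per-condition ECG measure reduces to inter-beat (RR) intervals and a mean heart rate. The following sketch shows this calculation, assuming that the R-peak times are already available in seconds; it is not the plug-in's own code, and the function names are hypothetical.

```python
def rr_intervals(r_peak_times_s):
    """Inter-beat (RR) intervals in seconds from successive R-peak times."""
    return [later - earlier
            for earlier, later in zip(r_peak_times_s, r_peak_times_s[1:])]


def mean_heart_rate_bpm(r_peak_times_s):
    """Mean heart rate in beats per minute, computed as 60 / mean RR interval."""
    intervals = rr_intervals(r_peak_times_s)
    if not intervals:
        raise ValueError("at least two R peaks are required")
    mean_rr = sum(intervals) / len(intervals)
    return 60.0 / mean_rr


if __name__ == "__main__":
    # Hypothetical R-peak times for one block, in seconds.
    peaks = [0.0, 0.8, 1.6, 2.4]
    print(rr_intervals(peaks))
    print(mean_heart_rate_bpm(peaks))
```

Note that the heart rate derived from the mean RR interval is not identical to the mean of beat-by-beat heart rates when the intervals vary, so the same definition should be used for every condition.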

Representative Results

Behavioral results

Participants' ratings of their belief in the efficacy of chanting revealed an average score of 8.16 ± 0.96 for "Amitabha Buddha", 3.26 ± 2.56 for "Santa Claus", and 1.95 ± 2.09 for the blank control condition (Supplementary Table 1).

ERP results

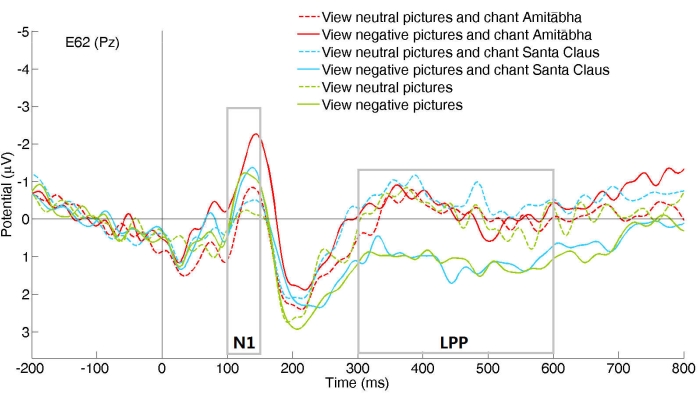

The representative channel Pz (parietal lobe) demonstrated that the chanting conditions had different effects on the early (N1) and late (LPP) processing of neutral and negative pictures. The time windows of N1 and LPP are indicated in Figure 2.

Early perceptual stage

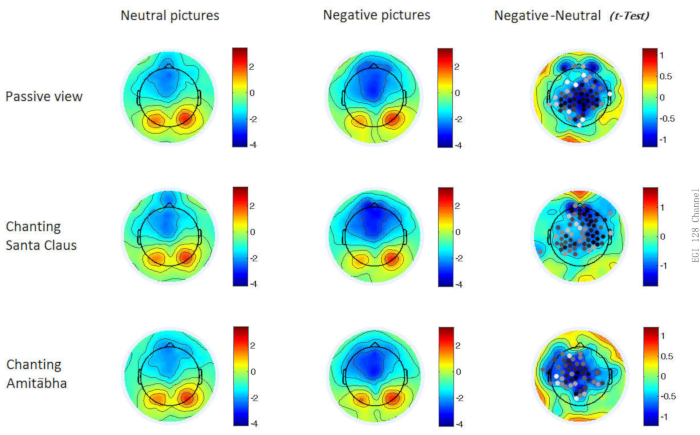

The ERP results showed an increased N1 while viewing the negative pictures in all three chanting conditions (Figure 3). Negative images induced stronger central brain activity than neutral images, and the increases were comparable across the three conditions.

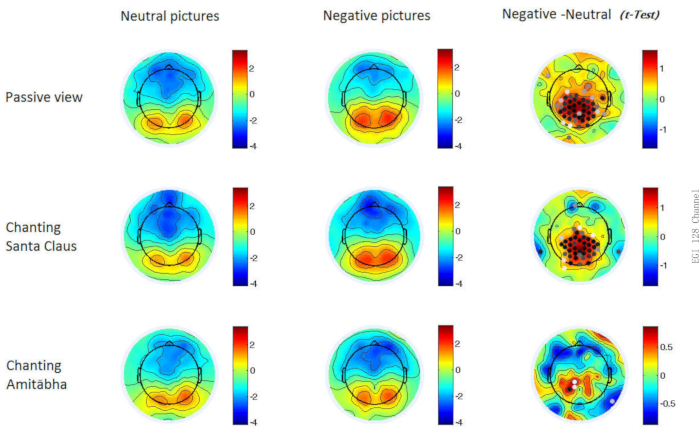

Late emotional/cognitive stage

The ERP demonstrated an increased LPP in the nonreligious chanting and no-chanting conditions. However, the LPP induced by negative pictures is barely visible when the participant chants Amitabha Buddha's name (Figure 4).

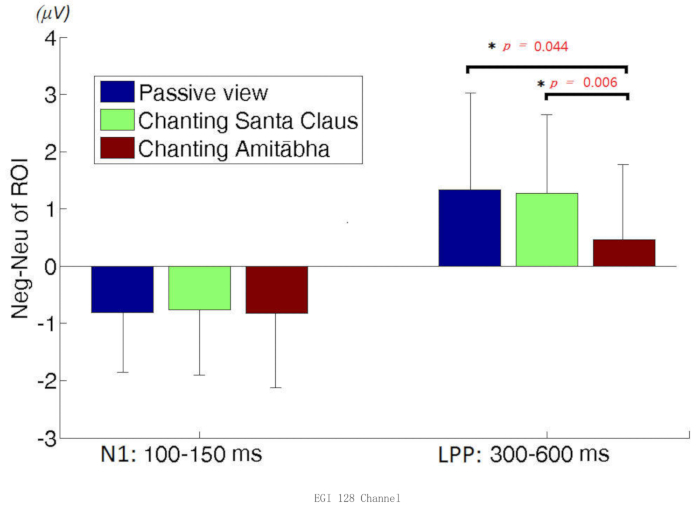

Region of interest (ROI) analysis

The three conditions were combined to estimate the regions that were generally activated at N1 and LPP components. Repeated measures ANOVA was performed with statistical software to calculate the difference in the N1 and LPP components between the chanting conditions (Figure 5).

The left three columns show the difference in the N1 component for the three chanting conditions: the silent viewing condition, the nonreligious chanting condition, and the religious chanting condition. The differences in the N1 component were similar across the three conditions. The right three columns show the difference in the LPP component for the three chanting conditions. This demonstrates that the difference in the LPP component is much smaller in the religious chanting condition than in the nonreligious chanting condition and the silent viewing condition.
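The condition-wise comparisons reported here rest on paired differences (negative minus neutral) and a Bonferroni correction for the post hoc tests. The sketch below illustrates both calculations with the Python standard library. It is not the procedure run in the statistical software used in the study; the input values are hypothetical, and obtaining a p-value from the t statistic would additionally require the t distribution, which is outside the scope of this example.

```python
import math
import statistics


def paired_t(negative, neutral):
    """Paired-samples t statistic and degrees of freedom.

    Each list holds one mean amplitude per participant for one picture type.
    """
    if len(negative) != len(neutral):
        raise ValueError("both lists need one value per participant")
    diffs = [a - b for a, b in zip(negative, neutral)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    # Hypothetical LPP amplitudes for four participants.
    negative = [3.0, 5.0, 4.0, 6.0]
    neutral = [1.0, 2.0, 3.0, 2.0]
    t_value, dof = paired_t(negative, neutral)
    print(f"t({dof}) = {t_value:.3f}")
    print(bonferroni([0.01, 0.02, 0.5]))
```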

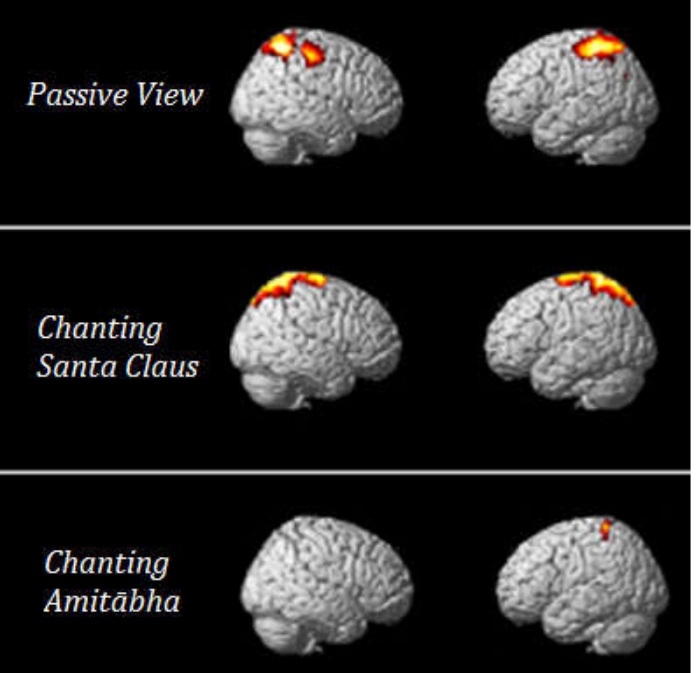

Source analysis

Source analysis was applied to extract the potential brain mapping based on the LPP results (Figure 6). The results show that when compared with neutral pictures, negative pictures induce more parietal activation in the nonreligious chanting condition and no chanting condition. In contrast, this negative picture-induced activation largely disappears in the religious chanting condition.

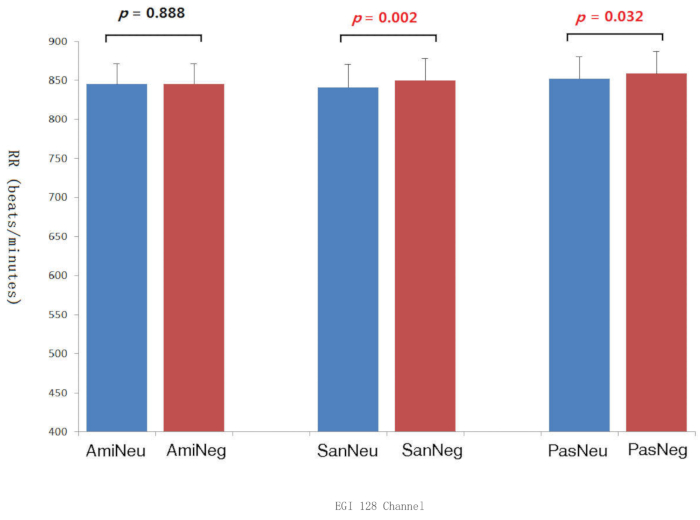

Physiological results: heart rate

There was a significant change in the heart rate (HR) between the negative and neutral pictures in the nonreligious chanting condition. A similar trend was found in the no-chanting condition. However, no such HR difference was found in the religious chanting condition (Figure 7).

Figure 2: A representative channel (Pz) showed different ERPs in the six conditions. The six conditions are (1) religious chanting while viewing neutral pictures (AmiNeu); (2) religious chanting while viewing negative pictures (AmiNeg); (3) nonreligious chanting while viewing neutral pictures (SanNeu); (4) nonreligious chanting while viewing negative pictures (SanNeg); (5) no chanting while viewing neutral pictures (PasNeu); and (6) no chanting while viewing negative pictures (PasNeg). Channel Pz is located in the mid-parietal area of the scalp.

Figure 3: The ERP results demonstrating the N1 component in the three chanting conditions. Two-dimensional maps of the N1 component for the three conditions for each picture type. In the last column, channels with significant differences (p < 0.05) are shown with dots; darker dots indicate greater significance (i.e., smaller p-values).

Figure 4: The ERP results demonstrating the LPP component in the three chanting conditions. Two-dimensional maps of the late positive potential (LPP) component for the three conditions for each picture type. In the last column, channels with significant differences (p < 0.05) are shown with dots; darker dots indicate greater significance (i.e., smaller p-values).

Figure 5: Region of interest (ROI) analysis. ROI analysis of the difference between negative and neutral picture-induced brain responses for the early component, N1, and the late component, the late positive potential (LPP).

Figure 6: Source analysis of the late positive potential (LPP) component under the three conditions. Highlighted areas indicate higher brain activity in negative vs. neutral conditions.

Figure 7: The heartbeat intervals under the three chanting conditions. The electrocardiogram's inter-beat intervals (RRs) under each picture type/chanting combination and the corresponding p values. Ami: Amitabha Buddha chanting condition, San: Santa Claus chanting condition, Pas: passive viewing condition, Neu: neutral picture, Neg: negative picture.

Supplementary Table 1: Rating the belief in the efficacy of the chanting subject (Amitabha Buddha, Santa Claus). It uses a 1-9 scale, where 1 indicates the least belief and 9 the strongest belief.

Supplementary File 1: Code for EEG data batch preprocessing. It removes bad channels, resamples the data to 250 Hz, and then filters the data.

Supplementary File 2: Code for ERP data repairing. It repairs bad epochs with noisy spikes.

Discussion

The uniqueness of this study is the application of a neuroscientific method to probe the neural mechanisms underlying a widespread religious practice, i.e., repetitive religious chanting. Given its prominent effect, this method could enable new interventions for therapists or clinicians to treat clients dealing with emotional problems and suffering from anxiety and stress. Together with previous studies, broader emotion regulation research should be considered in future studies7,8,9,22.

There are few ERP studies on chanting, given the difficulty of constructing experiments that combine chanting and other cognitive events. This study demonstrates a feasible protocol for investigating the affective effect of chanting/praying, which is rather popular in the real world. Previous functional MRI (fMRI) studies found that praying recruits areas of social cognition23. One resting-state fMRI study revealed that chanting "OM" reduced outputs from the anterior cingulate, insula, and orbitofrontal cortices24. Another EEG study found that "OM" meditation increased delta waves, inducing the experience of relaxation and deep sleep25. However, these methods could not precisely investigate the specific event-related changes after religious chanting.

Researchers should control the confounding factors of language processing and familiarity to successfully investigate the potential effect of repetitive religious chanting. As the participants practiced chanting the name "Amitabha Buddha" (Cantonese pronunciation: o1-nei4-to4-fat6) extensively and daily, we used the name "Santa Claus" (Cantonese pronunciation: sing3-daan3-lou5-jan4) as the control condition because the local population is familiar with Santa Claus. In Chinese, both names contain four characters, thus controlling for language similarity. Regarding familiarity, Santa Claus is quite popular in Hong Kong because it is a partially Westernized city. In addition, Santa Claus is a somewhat positive figure in Hong Kong, where Christmas is an official holiday. Nevertheless, this control of familiarity is partial, as it is difficult to entirely match the practitioners' understanding of Amitabha Buddha's name.

One critical step in the current study was the preparation of the fear- or stress-provoking pictures. As religious chanting may work better when threatening events occur, selecting proper stimuli from the IAPS image pool26 was crucial. It is recommended that potential participants be interviewed and that suitable pictures be chosen to avoid too much fear or disgust. Highly negative pictures could prevent the participants from willfully averting their attention; at the same time, the fear- and stress-provoking stimuli should enable the participants to experience a sufficient threat. Another critical issue is the block design of the study. The EEG/ERP signal is sufficiently sensitive and dynamic to follow every event. However, it would be more appropriate to implement a block design with a 20-30 s viewing period because the pattern of cardiac function or emotion may not change on the order of seconds27. On the other hand, a 60 s block might be too long, and the neural response could become habituated in ERP studies.

During EEG data processing, a backup should be made at each step, as each step alters the data, and the changes made at each step should be recorded. This makes it possible to track changes and easier to find errors during batch processing. Improving the data quality is also essential, so experience in cleaning raw data and identifying bad ICs is needed. In the statistical analysis, comparisons were made on grand averages, and ANOVA was applied. We caution that this fixed-effect approach is susceptible to random effects28. Mixed-effects models can be adapted to control extraneous factors29, and the assumption of linearity can potentially affect inferences drawn from the ERP data30.

Several limitations are worth noting. One limitation is that the current study enrolled only one group of participants, who practiced Pure Land Buddhism. Enrolling a control group without any experience in religious chanting for comparison could help determine whether the effect of religious chanting is mediated by belief or familiarity. Usually, a randomized controlled trial would be more convincing for examining the impact of religious chanting on emotion modulation31. However, it is difficult to guarantee that any participant would repetitively chant "Amitabha Buddha" with complete willingness. Additionally, the LPP is affected by other factors, such as emotional sound or positive priming32,33. Thus, better-controlled experiments are needed to delineate more clearly the fundamental neural mechanism underlying the effect of religious chanting.

In sum, previous studies have demonstrated that the human brain is subject to neural plasticity and swift alteration of states34,35; with sufficient practice and intention, the brain can reshape itself and respond differently to normally fearful stimuli. This study provides insights into the development of effective coping strategies for handling emotional distress in contemporary contexts. Following this protocol, researchers should examine the effect of religious chanting or other traditional practices to identify feasible ways to help people ameliorate their emotional suffering.

Acknowledgements

The study was supported by the small fund project of HKU and NSFC.61841704.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| E-Prime 2.0 | Psychology Software Tools | | stimulus presentation, behavior data collection |
| EEGLAB | Swartz Center for Computational Neuroscience | | EEG analysis software |
| HydroCel GSN 128 channels | Electrical Geodesics, Inc. (EGI) | | EEG cap |
| LabChart | ADInstruments | | physiological data (including ECG) acquisition software |
| Matlab R2011a | MathWorks | | EEGLAB and SPM are based on Matlab; statistical analysis tool for EEG and physiological data |
| Netstation | Electrical Geodesics, Inc. (EGI) | | EEG acquisition software |
| PowerLab 8/35 | ADInstruments | PL3508 | physiological data (including ECG) acquisition hardware |
| SPM | Wellcome Trust Centre for Neuroimaging | | EEG source analysis software |
| FMRIB | University of Oxford Centre for Functional MRI of the Brain (FMRIB) | | Plug-in for EEGLAB to process ECG data |
| SPSS | IBM | | statistical analysis tool for behavior and EEG ROI data |
| iMac 27" | Apple | | running the Netstation software |
| Windows PC | HP | | running the E-Prime 2.0 software |
| Windows PC | Dell | | running the LabChart software |

References

- Kennedy, H., Montreuil, T. C. The late positive potential as a reliable neural marker of cognitive reappraisal in children and youth: A brief review of the research literature. Frontiers in Psychology. 11, 608522 (2020).

- Yu, Q., Kitayama, S. Does facial action modulate neural responses of emotion? An examination with the late positive potential (LPP). Emotion. 21 (2), 442-446 (2021).

- Gao, J. L., et al. Repetitive religious chanting modulates the late-stage brain response to fear and stress-provoking pictures. Frontiers in Psychology. 7, 2055 (2017).

- Barkus, E. Effects of working memory training on emotion regulation: Transdiagnostic review. PsyCh Journal. 9 (2), 258-279 (2020).

- Denny, B. T. Getting better over time: A framework for examining the impact of emotion regulation training. Emotion. 20 (1), 110-114 (2020).

- Champe, J., Okech, J. E. A., Rubel, D. J. Emotion regulation: Processes, strategies, and applications to group work training and supervision. Journal for Specialists in Group Work. 38 (4), 349-368 (2013).

- Sobolewski, A., Holt, E., Kublik, E., Wrobel, A. Impact of meditation on emotional processing-A visual ERP study. Neuroscience Research. 71 (1), 44-48 (2011).

- Katyal, S., Hajcak, G., Flora, T., Bartlett, A., Goldin, P. Event-related potential and behavioural differences in affective self-referential processing in long-term meditators versus controls. Cognitive Affective & Behavioral Neuroscience. 20 (2), 326-339 (2020).

- Uusberg, H., Uusberg, A., Talpsep, T., Paaver, M. Mechanisms of mindfulness: The dynamics of affective adaptation during open monitoring. Biological Psychology. 118, 94-106 (2016).

- Bradley, M. M., Lang, P. J., Coan, J. A., Allen, J. B. . Handbook of emotion elicitation and assessment. Series in affective science. 483, 483-488 (2007).

- Gao, J. L., et al. Repetitive religious chanting invokes positive emotional schema to counterbalance fear: A multi-modal functional and structural MRI study. Frontiers in Behavioral Neuroscience. 14, 548856 (2020).

- Somaratne, G. A. The Jhana-cittas: The methods of swapping existential planes via samatha. Journal Of The Postgraduate Institute Of Pali And Buddhist Studies. 2, 1-21 (2017).

- Bhikkhu, T. Sallatha Sutta: The arrow (SN 36.6), Translated from the pali. Access to Insight Legacy Edition. , (2013).

- Halkias, G. . Luminous bliss : a religious history of Pure Land literature in Tibet : with an annotated English translation and critical analysis of the Orgyan-gling gold manuscript of the short Sukhāvatīvȳuha-sūtra. , (2013).

- Stewart, J. L., et al. Attentional bias to negative emotion as a function of approach and withdrawal anger styles: An ERP investigation. International Journal of Psychophysiology. 76 (1), 9-18 (2010).

- Delorme, A., Makeig, S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 134 (1), 9-21 (2004).

- Ito, T. A., Larsen, J. T., Smith, N. K., Cacioppo, J. T. Negative information weighs more heavily on the brain: The negativity bias in evaluative categorizations. Journal of Personality and Social Psychology. 75 (4), 887-900 (1998).

- Grech, R., et al. Review on solving the inverse problem in EEG source analysis. Journal of NeuroEngineering and Rehabilitation. 5, 25 (2008).

- Penny, W. D., Friston, K. J., Ashburner, J. T., Kiebel, S. J., Nichols, T. E. . Statistical Parametric Mapping: The Analysis of Functional Brain Images. , (2011).

- Friston, K., et al. Multiple sparse priors for the M/EEG inverse problem. NeuroImage. 39 (3), 1104-1120 (2008).

- Niazy, R. K., Beckmann, C. F., Iannetti, G. D., Brady, J. M., Smith, S. M. Removal of FMRI environment artifacts from EEG data using optimal basis sets. Neuroimage. 28 (3), 720-737 (2005).

- Nakamura, H., Tawatsuji, Y., Fang, S. Y., Matsui, T. Explanation of emotion regulation mechanism of mindfulness using a brain function model. Neural Networks. 138, 198-214 (2021).

- Schjoedt, U., Stødkilde-Jørgensen, H., Geertz, A. W., Roepstorff, A. Highly religious participants recruit areas of social cognition in personal prayer. Social Cognitive and Affective Neuroscience. 4 (2), 199-207 (2009).

- Rao, N. P., et al. Directional brain networks underlying OM chanting. Asian Journal of Psychiatry. 37, 20-25 (2018).

- Harne, B. P., Bobade, Y., Dhekekar, R. S., Hiwale, A. SVM classification of EEG signal to analyze the effect of OM Mantra meditation on the brain. 2019 IEEE 16th India Council International Conference (IEEE INDICON 2019). , (2019).

- Coan, J. A., Allen, J. J. B. . Handbook of Emotion Elicitation and Assessment. , (2007).

- Meseguer, V., et al. Mapping the apetitive and aversive systems with emotional pictures using a Block-design fMRI procedure. Psicothema. 19 (3), 483-488 (2007).

- Luck, S. J., Gaspelin, N. How to get statistically significant effects in any ERP experiment (and why you shouldn't). Psychophysiology. 54 (1), 146-157 (2017).

- Meteyard, L., Davies, R. A. I. Best practice guidance for linear mixed-effects models in psychological science. Journal of Memory and Language. 112, 104092 (2020).

- Tremblay, A., Newman, A. J. Modeling nonlinear relationships in ERP data using mixed-effects regression with R examples. Psychophysiology. 52 (1), 124-139 (2015).

- Wu, B. W. Y., Gao, J. L., Leung, H. K., Sik, H. H. A randomized controlled trial of Awareness Training Program (ATP), a group-based mahayana buddhist intervention. Mindfulness. 10 (7), 1280-1293 (2019).

- Brown, D. R., Cavanagh, J. F. The sound and the fury: Late positive potential is sensitive to sound affect. Psychophysiology. 54 (12), 1812-1825 (2017).

- Hill, L. D., Starratt, V. G., Fernandez, M., Tartar, J. L. Positive affective priming decreases the middle late positive potential response to negative images. Brain and Behavior. 9 (1), 01198 (2019).

- Belzung, C., Wigmore, P. . Current Topics in Behavioral Neurosciences,1 online resource. , (2013).

- Denes, G. . Neural Plasticity Across The Lifespan: How The Brain Can Change. , (2016).

This article has been published

Copyright © 2024 MyJoVE Corporation. All rights reserved