Eye Movements in Visual Duration Perception: Disentangling Stimulus from Time in Predecisional Processes

Summary

We present a protocol that employs eye tracking to monitor eye movements during an interval comparison (duration perception) task based on visual events. The aim is to provide a preliminary guide to separate oculomotor responses to duration perception tasks (comparison or discrimination of time intervals) from responses to the stimulus itself.

Abstract

Eye-tracking methods may allow the online monitoring of cognitive processing during visual duration perception tasks, where participants are asked to estimate, discriminate, or compare time intervals defined by visual events like flashing circles. However, to our knowledge, attempts to validate this possibility have remained inconclusive so far, and results remain focused on offline behavioral decisions made after stimulus appearance. This paper presents an eye-tracking protocol for exploring the cognitive processes preceding behavioral responses in an interval comparison task, where participants viewed two consecutive intervals and had to decide whether the sequence speeded up (first interval longer than second) or slowed down (second interval longer).

Our main concern was disentangling oculomotor responses to the visual stimulus itself from correlates of duration-related judgments. To achieve this, we defined three consecutive time windows bounded by four critical events: baseline onset, the onset of the first interval, the onset of the second interval, and the end of the stimulus. We then extracted traditional oculomotor measures for each window (number of fixations, pupil size) and focused on time-window-related changes to separate the responses to the visual stimulus from those related to interval comparison per se. As we show in the illustrative results, eye-tracking data showed significant differences that were consistent with behavioral results, raising hypotheses on the mechanisms engaged. This protocol is embryonic and will require many improvements, but it represents an important step forward in the current state of the art.

Introduction

Time perception abilities have attracted increasing research attention in recent years, in part due to accumulating evidence that these may be linked to reading skills or pathological conditions1,2,3,4,5. Visual duration perception (the ability to estimate, discriminate, or compare time intervals defined by visual events) is one subfield of interest6,7 in which eye-tracking methods could make a contribution. However, results remain focused on poststimulus behavioral decisions like pressing a button to indicate how much time has passed (estimation), whether time intervals are the same or different (discrimination), or which of a series of time intervals is the longest or shortest (comparison). A few studies have attempted to correlate behavioral results with eye-tracking data8,9, but they failed to find correlations between the two, suggesting that a direct relation is absent.

In the current paper, we present a protocol for registering and analyzing oculomotor responses during stimulus presentation in a visual duration perception task. Specifically, the description refers to an interval comparison task where participants saw sequences of three events that defined two time intervals and were asked to judge whether they speeded up (first interval longer than second) or slowed down (first shorter than second). The time intervals used in the study spanned from 133 to 733 ms, adhering to the principles of the Temporal Sampling Framework (TSF)10. TSF suggests that the brain's oscillatory activity, particularly in frequency bands such as delta oscillations (1-4 Hz), synchronizes with incoming speech units such as sequences of stress accents. This synchronization enhances the encoding of speech, improves attention to speech units, and helps extract sequential regularities that may be relevant in understanding conditions like dyslexia, which exhibit atypical low-frequency oscillations. The goal of the study in which we developed the method presented here was to determine whether dyslexics' difficulties in visual duration perception (group effects on the interval comparison task) reflect problems in processing the visual object itself, namely movement and luminance contrasts11. If this were the case, we expected that dyslexics' disadvantage relative to controls would be larger for stimuli with movement and low luminance contrasts (interaction between group and stimulus type).

The main result of the original study was driven by poststimulus behavioral judgments. Eye-tracking data - pupil size and number of fixations - recorded during stimulus presentation were used to explore processes preceding the behavioral decisions. We believe, however, that the current protocol may be used independently from behavioral data collection, provided that the goals are set accordingly. It may also be possible to adjust it for interval discrimination tasks. Using it in time estimation tasks is not so immediate, but we would not rule out that possibility. We used pupil size because it reflects cognitive load12,13,14, among other states, and may thus provide information on participants' skills (higher load meaning fewer skills). Regarding the number of fixations, more fixations may reflect the participants' stronger engagement with the task15,16. The original study used five stimulus types. For simplification, we only used two in the current protocol (Ball vs. Flash, representing a movement-related contrast).

The main challenge we tried to address was disentangling responses to the visual stimulus itself from those related to interval comparison since it is known that oculomotor responses change according to characteristics such as movement or luminance contrasts17. Based on the premise that the visual stimulus is processed as soon as it appears on screen (first interval), and interval comparison is only made possible once the second time interval begins, we defined three time windows: prestimulus window, first interval, second interval (behavioral response not included). By analyzing changes from the prestimulus window over the first interval, we would get indices of participants' responses to the stimulus itself. Comparing the first to the second interval would tap into possible oculomotor signatures of interval comparison, the task participants were asked to perform.

Protocol

Fifty-two participants (25 diagnosed with dyslexia or signaled as potential cases and 27 controls) were recruited from the community (through social media and convenience email contacts) and a university course. Following a confirmatory neuropsychological assessment and subsequent data analysis (for more details, see Goswami10), seven participants were excluded from the study. This exclusion comprised four individuals with dyslexia who did not meet the criteria, two dyslexic participants with outlier values in the primary experimental task, and one control participant whose eye-tracking data was affected by noise. The final sample was composed of 45 participants, 19 dyslexic adults (one male), and 26 controls (five male). All participants were native Portuguese speakers, had normal or corrected-to-normal vision, and none had diagnosed hearing, neurological, or speech problems. The protocol described here was approved by the local ethics committee of the Faculty of Psychology and Educational Sciences at the University of Porto (ref. number 2021/06-07b), and all participants signed informed consent according to the Declaration of Helsinki.

1. Stimulus creation

- Define eight sequences of two time intervals (Table 1) wherein the first is shorter than the second (slow-down sequence); choose intervals that are compatible with the frame rate of the animation software (here, 30 frames/s, 33 ms/frame) using a frame-duration conversion table.

- For each slow-down sequence, create a speed-up analog obtained by inverting the order of the intervals (Table 1).

- In a spreadsheet, convert interval length to the number of frames by dividing the target interval (ms) by 33 and rounding to the nearest whole frame (e.g., for the 300-433 ms interval sequence, indicate 9-13 frames).

- Define key frames for each sequence: stimulus onset at frame 7 (after six blank frames, corresponding to 200 ms), offset of interval 1 at frame 6 + length of interval 1 (6 + 9 for the given example), same for the offset of interval 2 (6 + 9 + 13). Set two more frames at the end of interval 2 to mark the end of the stimulus (6 + 9 + 13 + 2).

- Create flash sequences as animations.

- Run the animation software (e.g., Adobe Animate) and create a new file with a black background.

- At frame 7, draw a blue circle at the screen center. Ensure that its dimensions make it occupy around 2° of the visual field with the planned screen-eye distance (55 cm here), meaning that the ball diameter is 1.92 cm.

- Copy and paste this image into the next adjacent frame (starting on frame 7), such that each flash lasts around 99 ms.

- Copy and paste this two-frame sequence into the other two key frames (offsets of intervals 1 and 2).

- Build the remaining 15 animations by creating copies of the file and moving the interval onsets to the appropriate frames.

- Create bouncing ball sequences as animations.

- Open a file in the animation software with the same specifications (size, background) used in flash animations. Open the spreadsheet with key-frame specifications; the key frames now correspond to squashed balls hitting the ground.

- Start with three frames with a black background (99 ms). In the 4th frame, draw a blue ball at the top center, equal to the one used for flashes.

- Draw a squashed ball (width larger than height) at the stimulus onset point, lasting three frames (onset of interval 1). Ensure that the ball is horizontally centered and vertically below the center of the screen.

- Click on the button Properties of the object and then on Position and Size to position the ball at the chosen squashing height and increase width/decrease height.

- Generate a continuous change using the tween command from the ball at the top to the squashed ball (vertical descent).

- Copy the three-frame sequence featuring the squashed ball into the other two key frames (offsets of intervals 1 and 2).

- In the spreadsheet, divide the duration of each interval by 2 to define the middle points between two squashes for intervals 1 and 2, where the ball hits maximum height after ascending and before descending.

- Draw a non-squashed ball vertically above the lowest point of the trajectory at the middle points defined in step 1.6.6. Generate the ascending animation between the interval onset (when the ball hits the ground) and the highest point, and the descending animation between the highest point and the next squash.

- Adapt the file to the other 15 time structures.

- Export all animations as .xvd. If the option is unavailable, export as .avi and then convert, such that the files can be used in the EyeLink system.
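The frame arithmetic in steps 1.3 and 1.4 can be sketched in a few lines of Python instead of a spreadsheet. This is only an illustrative sketch: the function names (`ms_to_frames`, `key_frames`) are hypothetical, and rounding to the nearest frame is an assumption that reproduces the 9-13 frame example given above.

```python
FRAME_MS = 33      # frame duration at 30 frames/s, as stated in step 1.1
BLANK_FRAMES = 6   # blank frames before stimulus onset (about 200 ms)
END_FRAMES = 2     # extra frames marking the end of the stimulus


def ms_to_frames(interval_ms):
    """Convert an interval in ms to a whole number of frames.

    Rounding to the nearest frame is an assumption, not a documented rule.
    """
    return round(interval_ms / FRAME_MS)


def key_frames(interval1_ms, interval2_ms):
    """Return the key frames of one sequence, following step 1.4."""
    n1 = ms_to_frames(interval1_ms)
    n2 = ms_to_frames(interval2_ms)
    return {
        "stimulus_onset": BLANK_FRAMES + 1,
        "offset_interval_1": BLANK_FRAMES + n1,
        "offset_interval_2": BLANK_FRAMES + n1 + n2,
        "end_of_stimulus": BLANK_FRAMES + n1 + n2 + END_FRAMES,
    }


# Example from the protocol: the 300-433 ms slow-down sequence.
print(key_frames(300, 433))
```

Running the same function over the 16 rows of Table 1 would give the key frames needed for all flash and ball animations.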

2. Experiment preparation

- Creating the experiment folder

- Open the Experiment Builder application and choose New from the File menu.

- Save the project by clicking on File | Save as. Specify the name of the project and the location where it is to be saved.

NOTE: This will create an entire folder with subfolders for stimulus files and other materials. The experiment file will appear in the folder with the .ebd extension.

- Inside the project folder, click on Library and then in the folder named Video. Upload the .xvid video stimulus files to this folder.

NOTE: All stimuli used in the experiment must be stored in the Library.

- Creating the basic structure for within-system and human-system interaction

- Drag the start panel and the display screen icons to the graph editor window. Create a link between them by clicking and dragging the mouse from the first to the second.

- In the properties of display screen, click on Insert Multiline Text Resource button and type an instruction text explaining the calibration procedure that will follow.

- Select two triggers (input channels to move forward in the experiment): keyboard and el button (button box). Link the display screen to both.

NOTE: These triggers allow the participant or the experimenter to click on any button to proceed.

- Select the Camera setup icon and link both triggers to it.

NOTE: This will allow establishing communication with the eye-tracker so that the participant's eye(s) can be monitored for camera adjustment, calibration, and validation (see section 4).

- Select the icon Results file and drag it to the right side of the flow chart.

NOTE: This action allows the behavioral responses of the experiment to be recorded.

- Defining the block structure

- Select the Sequence icon and link it (see step 2.2.1) to the Camera setup.

- In Properties, click on Iteration count and select 2 for number of blocks (Flashes and Balls).

NOTE: This will separate the presentation of flashes from that of balls.

- Enter the sequence (block definition) and drag a start panel icon, a display icon, and the triggers el_button and keyboard. Link them in this order.

- In the display screen icon, click on the Insert Multiline Text Resource button and type an instruction text explaining the experiment.

- Defining the trial structure

- Inside the block sequence, drag a New sequence icon to the editor to create the trial sequence.

NOTE: Nesting the trial sequence inside the block sequence allows running multiple trials in each block.

- Inside the trial sequence, drag a start panel and a Prepare sequence icon, and link the second to the first.

NOTE: This action loads the experimental stimuli that will be presented to the participant.

- Drag the Drift correction icon to the interface and link it to the prepare sequence icon.

NOTE: The drift correction presents a single fixation target on the stimulation computer monitor and enables the comparison of the cursor gaze position to the actual stimuli position on the recording computer. The drift check and the respective correction will automatically begin after every trial to ensure the initial calibration quality persists.

- Defining the recording structure

- Inside the trial sequence, drag a New sequence icon to the editor to create the recording sequence.

NOTE: The recording sequence is responsible for eye data collection, and it is where the visual stimuli are presented.

- Select the option Record in the properties of this sequence.

NOTE: By doing this, the eye tracker starts recording when the stimulus begins and stops when the stimulus ends.

- In properties, click on Data Source and fill in each row of the table (type or select) with the exact filename of each stimulus, the type of trial (practice or experimental), how many times each stimulus will be presented (1 here), and the expected response button.

NOTE: The filenames must be identical to those uploaded in the Library, file extension included (e.g. ball_sp_1.xvd).

- On the top panel of the interface, click on Randomization Settings, and mark the Enable trial randomization boxes to ensure that the stimuli will be randomized within each block. Click on the Ok button to return to the interface.

- In the recording sequence, create the start panel-display screen connection. Inside the display screen, select the Insert video resource button (camera icon) and drag it to the interface.

- Link the keyboard and el button triggers to the display icon (as in step 2.2.1) to allow the participant to respond.

- Drag the Check accuracy icon and link it to the triggers as in step 2.2.1.

NOTE: This action allows the software to check whether the key that was pressed matches the value of the correct response column of the Data Source.

- Finalizing the experiment

- On the top of the main panel, click on the Run arrow icon to run a test of the experiment.

3. Apparatus setup

- Connect the stimulation computer to a 5-button button box and a keyboard.

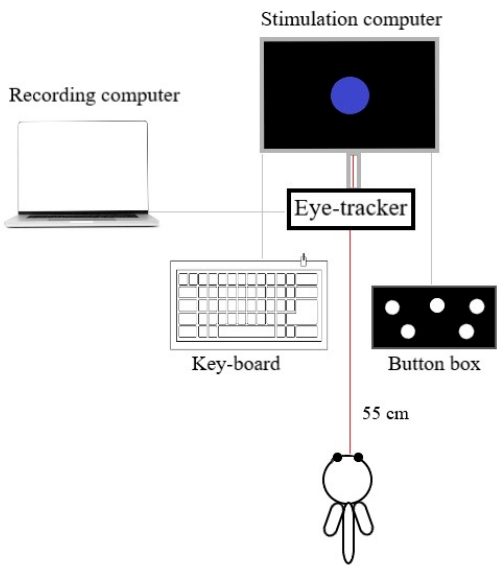

- Connect the stimulation computer (with the system-dedicated presentation software) to the eye-tracker (Figure 1), placed below or in front of the monitor.

- Connect the eye-tracker to the recording computer.

Figure 1: The eye-tracking setup. The spatial arrangement of the recording system, which is composed of the stimulation computer, the recording computer, the eye-tracker, the response device (button box), and the keyboard. Participants sat 55 cm away from the stimulation screen.

4. Preparation of data collection

- Obtain informed consent from the participants and describe the experimental format to them. Position the participant at a distance from the stimulation computer such that the stimulus circle (flash or ball) corresponds to 2° of the visual field (typical distance ~ 60 cm).

- Choose the sampling frequency (1,000 Hz for high resolution) and eye(s) to record (dominant eye).

- In the visualization provided by the recording computer, ensure that the eye-tracker tracks the target (a stick placed between the participant's eyebrows) and the dominant eye in a stable manner. Move the camera up or down if necessary.

- Open the experiment. Run the 5-point calibration and validation procedures provided by the system from the recording computer to allow accurate and reliable recording of eye movements. Instruct the participant to gaze at a dot that will appear on the screen at five different places (once for calibration, twice for validation).

NOTE: Accept errors only below 0.5°.
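The relation between stimulus size, viewing distance, and visual angle used in steps 1.5.2 and 4.1 can be checked with a short calculation. The sketch below uses the standard visual-angle formula (size = 2 x distance x tan(angle / 2)); the function names are hypothetical and only serve as illustration.

```python
import math


def stimulus_size_cm(visual_angle_deg, distance_cm):
    """Physical size that subtends a given visual angle at a given distance."""
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg / 2))


def viewing_distance_cm(size_cm, visual_angle_deg):
    """Distance at which a stimulus of a given size subtends the given angle."""
    return size_cm / (2 * math.tan(math.radians(visual_angle_deg / 2)))


# Values from the protocol: 2 degrees of visual angle at 55 cm.
print(round(stimulus_size_cm(2.0, 55.0), 2))      # diameter in cm
print(round(viewing_distance_cm(1.92, 2.0), 1))   # distance in cm
```

If the screen-eye distance differs from the planned one (for instance, around 60 cm), the same function indicates how large the circle would need to be to keep the 2° visual angle.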

5. Running the experiment

- Explain the task to the participant.

- Present the practice trials and clarify participants' doubts.

- Start the experiment by clicking Run.

- Pause the experiment between conditions and explain that the stimulus is now going to be different, but the question is the same.

6. Creating time windows for analysis

- In the Data Viewer software18, go to File, then Import Data, and finally Multiple EyeLink Data files. In the dialog box, select the files of all participants.

- Select one trial. Select the square icon to draw an interest area.

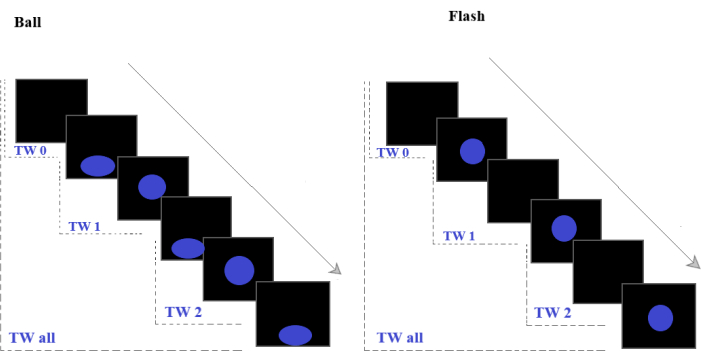

NOTE: The interest area defines both a region of the screen and a time window within the trial. Here, we will always select the full screen.

- To create TW all (Figure 2), click on the draw icon and select the full screen. In the open dialog box, label the interest area as TW_all and define a time segment matching the full trial.

- Click on Save the interest area set and apply this template to all trials with the same length (e.g., time structures 1 and 9 from Table 1, for both balls and flashes, for all participants).

- Select one of the 16 time structures from Table 1. Define TW_0, TW_1, and TW_2 as in step 6.3, but following the time limits schematized in Figure 2 (time window boundaries corresponding to flash appearances and ball squashes). The length of TW0 is customizable.

- Label each interest area and apply the template to the trials with the same time structure (balls and flashes, all participants).

- Repeat the process for the 15 remaining time structures.
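Before drawing the interest areas, it may help to tabulate the time limits of each window for every time structure. The following sketch assumes that time is counted in ms from trial onset, that TW0 lasts 200 ms (its length is customizable), and that the two closing frames (about 66 ms) are assigned to TW2; the function name and these defaults are illustrative assumptions, not values prescribed by the software.

```python
def time_windows(interval1_ms, interval2_ms, baseline_ms=200, tail_ms=66):
    """Return (start, end) limits in ms for TW_0, TW_1, TW_2, and TW_all.

    baseline_ms and tail_ms are assumed defaults (six blank frames and
    two closing frames at 33 ms/frame, rounded for the baseline).
    """
    onset_1 = baseline_ms                       # first event: interval 1 begins
    onset_2 = onset_1 + interval1_ms            # second event: interval 2 begins
    end = onset_2 + interval2_ms + tail_ms      # end of the stimulus
    return {
        "TW_0": (0, onset_1),
        "TW_1": (onset_1, onset_2),
        "TW_2": (onset_2, end),
        "TW_all": (0, end),
    }


# Example: the 300-433 ms slow-down sequence from Table 1.
for label, limits in time_windows(300, 433).items():
    print(label, limits)
```

Sequences that share the same two intervals in reverse order have the same TW_all limits, which is why a single full-trial template can be reused for them.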

Figure 2: Stimulus type. Sequences of bouncing balls (left) and flashes (right) that were used in the experiment. The dashed lines indicate the time windows used for analysis: TW0 is the prestimulus period; TW1 begins with the stimulus's first appearance on the screen and marks the first interval, when the participant has information about stimulus characteristics and the length of the first interval; and TW2 marks the second interval, when the participant can compare the first to the second interval to reach a decision (slowed down or speeded up).

7. Extracting measures

- In the menu bar, click on Analysis | Report | Interest Area Report.

- Select the following measures to extract: dwell time, number of fixations, and pupil size. Then click Next.

NOTE: The output should contain data from 16 flash trials and 16 bouncing ball trials per participant (32 trials x n participants), specified for each of the four time windows (TW0, TW1, TW2, TW all).

- Export the matrix as a .xlsx file.

8. Remove trials with artifacts

- Consider dwell time measures for TW all and mark trials with more than 30% of signal loss (dwell time < 70% of trial time).

NOTE: Take into account that each of the 32 trials has a different length.

- Exclude noisy (marked) trials from the matrix and save it.
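The marking rule in step 8.1 can also be applied programmatically once the report has been exported. The sketch below assumes that each trial is available as a dictionary with hypothetical keys `trial`, `dwell_time_ms` (dwell time in TW all), and `trial_ms` (trial length); these names are illustrative and do not correspond to actual report column names.

```python
def flag_noisy_trials(trials, min_dwell_fraction=0.70):
    """Return the identifiers of trials whose dwell time in TW all
    is below 70% of the trial length (more than 30% signal loss)."""
    flagged = []
    for t in trials:
        if t["dwell_time_ms"] < min_dwell_fraction * t["trial_ms"]:
            flagged.append(t["trial"])
    return flagged


# Made-up example values, for illustration only.
example = [
    {"trial": "flash_01", "dwell_time_ms": 950, "trial_ms": 999},
    {"trial": "ball_01", "dwell_time_ms": 600, "trial_ms": 999},
]
print(flag_noisy_trials(example))
```

Because the threshold is expressed as a fraction of each trial's own length, the rule accommodates trials of different durations.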

9. Statistical analysis

- Perform two repeated-measures ANOVAs (TW x group x stimulus) for each measure, one with TW 0 and 1, the other with TW 1 and 2.

- Correlate TW-related changes with behavioral results if available.
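For the correlation step, the TW-related changes first need to be expressed as one score per participant. A minimal sketch follows, assuming that change is computed as the later window minus the earlier one (the direction of subtraction is an assumption) and using a plain Pearson coefficient; the function names are hypothetical, and dedicated statistical software would normally be used for inference.

```python
from math import sqrt


def change_scores(tw0, tw1, tw2):
    """TW-related changes for one participant and one measure."""
    return {"TW01": tw1 - tw0, "TW12": tw2 - tw1}


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Made-up values, for illustration only.
print(change_scores(tw0=5, tw1=3, tw2=4))
print(pearson_r([1, 2, 3], [2, 4, 6]))
```

The list passed as `x` would hold one change score per participant (e.g., TW01 for number of fixations) and `y` the corresponding behavioral index.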

Representative Results

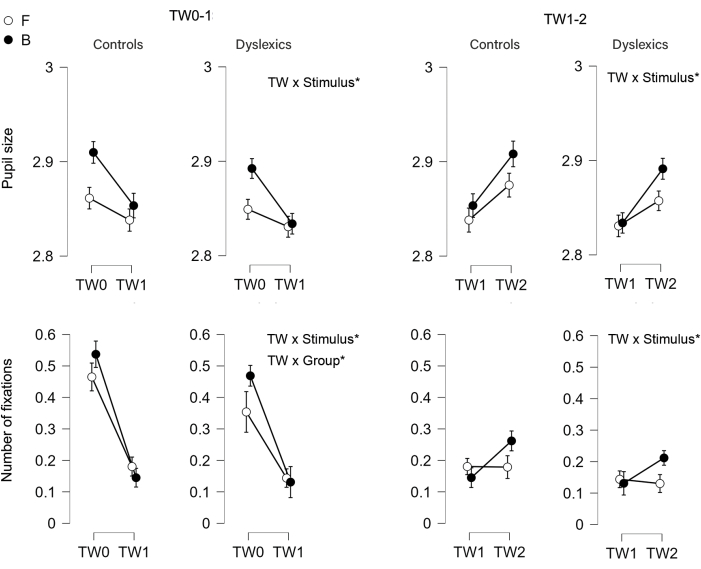

To better understand TW-related changes, our analysis focused on the interaction of time windows (TW0 vs. TW1, TW1 vs. TW2) with stimulus type and group. As depicted in Figure 3, both TW-related comparisons (TW01 and TW12) showed different levels of change according to Stimulus (TW x Stimulus interaction), with Balls eliciting more TW-related changes in oculomotor responses than flashes in both groups (no TW x stimulus x group interaction). This occurred for both the pupil size and the number of fixations. Regarding group influences, we found a TW x group interaction on the change in the number of fixations from TW0 to TW1 (response to stimulus onset): dyslexics showed decreased change, mainly due to lower prestimulus values. Interactions between TW, stimulus, and group were absent. This shows that group influences were similar for both balls and flashes.

Figure 3: Results. Time-window-related changes in pupil size and number of fixations as a function of group (control vs. dyslexic, TW x Group) and stimulus type (Balls, B, vs. Flashes, F, TW x Stimulus). TW 0-1 addresses the contrast between no stimulus and stimulus visibility; TW 1-2 compares the first and second intervals to address interval comparison. The 95% confidence intervals are represented by vertical bars. Balls elicited more changes than flashes from TW0 over TW1 (more decrease) and from TW1 over TW2 (more increase) in both eye-tracking measures and both groups (TW x stimulus, no TW x stimulus x group). Changes in the number of fixations across TW 0-1 were smaller in dyslexics than controls regardless of stimulus type (TW x group, no TW x stimulus x group).

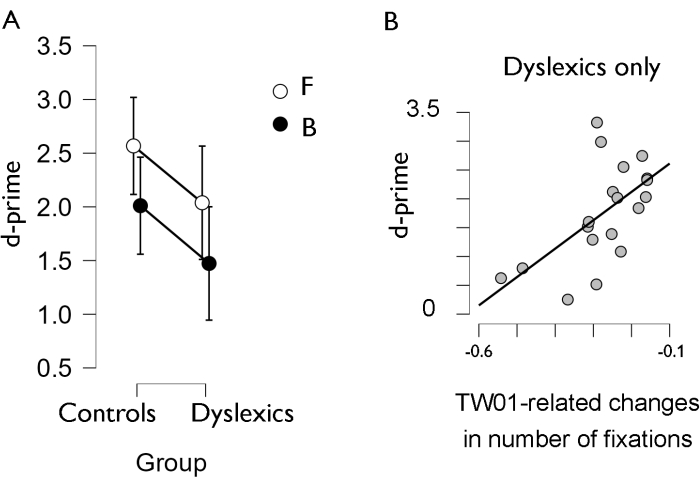

Figure 4: Behavioral results. (A) Discrimination between speed-up and slow-down sequences (d-prime) per group and stimulus type. (B) Significant correlations between behavioral performance (d-prime) and time-window-related changes in eye movements, both stimulus-averaged.

Critically, these values paralleled behavioral findings (Figure 4A), in line with the main study: behavioral findings pointed to stimulus effects (less accuracy for Balls than for Flashes) and group effects (worse performance in dyslexics), without group x stimulus interactions. Moreover, in the original study with five different stimuli, we correlated behavioral with eye-tracking data (number of fixations) averaged for all stimulus types and found a correlation in the dyslexic group: smaller changes from TW0 over TW1 coexisted with improved performance. Altogether, the results seemed consistent with the hypothesis that these (adult) dyslexics may be resorting to compensatory strategies for deliberate control of attention to the stimulus itself in the prestimulus period (fewer fixations on the empty screen would favor focusing on the stimulus by the time it appeared). We found no such correlation in controls, suggesting that they might not need to resort to strategies to keep focus. The restricted dataset used here for illustration (two stimuli only, Balls and Flashes) showed the same pattern (Figure 4B): dyslexics, but not controls, showed significant correlations between d-prime (behavioral discrimination index) and TW01-related changes.
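For readers unfamiliar with d-prime, the index can be sketched as the difference between the z-transformed hit and false-alarm rates. The example below is an assumption-laden illustration rather than the computation used in the original study: it treats speed-up sequences as the "signal" category and applies a log-linear correction to avoid infinite values when a rate equals 0 or 1.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false-alarm rate), with log-linear correction.

    Hits: speed-up sequences judged as speed-up (assumed signal category).
    False alarms: slow-down sequences judged as speed-up.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Made-up counts for 8 speed-up and 8 slow-down trials of one stimulus type.
print(round(d_prime(hits=7, misses=1, false_alarms=1, correct_rejections=7), 2))
print(d_prime(hits=4, misses=4, false_alarms=4, correct_rejections=4))
```

A value near zero indicates chance-level discrimination between speed-up and slow-down sequences, and larger values indicate better discrimination.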

In sum, the eye-tracking results addressing participants' responses to both stimulus onset (TW 0-1) and interval comparison (TW 1-2) replicated the behavioral evidence that balls versus flashes elicit different responses in individuals with and without dyslexia (TW x stimulus on eye-tracking measures, stimulus effects on d-prime). One part of the eye-tracking results also paralleled the group effects on d-prime, in that changes in the number of fixations at stimulus onset (TW 0-1) were smaller in dyslexics. Moreover, interactions between stimulus and group (different levels of deviance in dyslexics for balls vs. flashes) were null for behavioral and eye-tracking data. Finally, the correlation between behavioral performance and the oculomotor response was significant in the dyslexic group.

| Sequence | Type | Interval 1 (ms) | Interval 2 (ms) | Difference (ms) |
| --- | --- | --- | --- | --- |
| 1 | Speed up | 433 | 300 | 133 |
| 2 | Speed up | 300 | 167 | 133 |
| 3 | Speed up | 467 | 433 | 34 |
| 4 | Speed up | 733 | 167 | 566 |
| 5 | Speed up | 467 | 300 | 167 |
| 6 | Speed up | 433 | 134 | 299 |
| 7 | Speed up | 534 | 233 | 301 |
| 8 | Speed up | 500 | 433 | 67 |
| 9 | Slow down | 300 | 433 | -133 |
| 10 | Slow down | 167 | 300 | -133 |
| 11 | Slow down | 433 | 467 | -34 |
| 12 | Slow down | 167 | 733 | -566 |
| 13 | Slow down | 300 | 467 | -167 |
| 14 | Slow down | 133 | 434 | -301 |
| 15 | Slow down | 233 | 534 | -301 |
| 16 | Slow down | 433 | 500 | -67 |
| Average interval | 377.1 | | | |
| Average difference | 212.6 | | | |
| Average difference/interval | 294.8 | | | |

Table 1: Interval duration. Interval durations (in milliseconds) for speed-up and slow-down sequences.

Discussion

The current protocol contains a novel component that might be critical to tackling current obstacles to incorporating eye-tracking in visual duration perception tasks. The critical step here is the definition of time windows based on cognitive processes that putatively take place in each of these time windows. In the system we used, time windows can only be defined as Areas of Interest (a space-related concept that is coupled with time in these systems), but in other systems, it is possible to do this by exporting different segments of the trial. In addition to this temporal segmentation of the trial, it is important to focus on analyzing changes across time windows rather than the parameters per time window.

Concerning the modifications to the protocol that had to be made, they were mostly related to the dimensions of the area of interest. We made a first attempt using dynamic AOIs - defining a spatial selection around the stimulus that followed it, rather than the whole screen. However, we soon realized that we could be missing relevant events outside that area. Given that our measures were unrelated to focus on the stimulus (pupil size was expected to change according to cognitive load and not according to attention to the flash or ball; the number of fixations was expected to reflect spatial search), we chose to use the full screen as the region of interest.

The current protocol is an embryonic proposal that is still subject to many refinements. We will only highlight two of these, even though there is much more room for improvement. The first concerns the differences in the length of the three time windows, which preclude us from interpreting time window effects on the number of fixations (e.g., a longer time window entails more fixations, hence the decrease from TW0 to TW1, see Figure 3). One way of addressing this problem would be to consider the number of fixations per time unit.
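The normalization suggested above (number of fixations per time unit) amounts to dividing the fixation count of each time window by the window's duration. A minimal sketch, with a hypothetical function name and made-up example values:

```python
def fixations_per_second(n_fixations, window_ms):
    """Fixation rate for one time window, in fixations per second."""
    if window_ms <= 0:
        raise ValueError("window_ms must be positive")
    return n_fixations * 1000 / window_ms


# Made-up counts: one fixation in a 200 ms window vs. two in a 500 ms window.
print(fixations_per_second(1, 200))
print(fixations_per_second(2, 500))
```

In this made-up example, the longer window contains more fixations but a lower fixation rate, which illustrates why raw counts cannot be compared across windows of different lengths.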

The second relates to the correspondence between time windows and putative ongoing processes, which includes various issues. One is that TW1 does not represent just stimulus appearance but probably also an explicit form of interval estimation (first interval) subsidiary to interval comparison and likely absent in TW0. In a similar fashion, changes across time windows may also reflect changes in general processes such as sustained attention and working memory18, even though some of these changes could be expected in an interval comparison task (working memory load is expected to increase from TW1 over TW2). One way to attenuate these potential confounds would be to introduce control tasks related to pure duration estimation, sustained attention and working memory, and then base the eye-tracking data analysis on the comparison between experimental (interval comparison) and control tasks. Another issue is that the duration of TW0 was irrelevant to the task, and it is known that task-irrelevant durations may be deleterious to performance19. Future work could focus on improving this, namely by creating a difference of 300 ms between TW0 (irrelevant interval) and TW1 to better delimit visual processing responses, since a short event can be biased to be perceived earlier or later than its presentation by simply adding another event in near temporal proximity20,21.

Finally, spontaneous eye blinks can affect time perception by distorting it (dilating time if an eye blink precedes the interval, contracting if it occurs simultaneously), potentially introducing variability in intra-individual timing performance22. One way of minimizing this problem would be to apply an eye-blink-based correction factor in participants' behavioral judgments (e.g., assign a reliability rate to each judgment depending on the presence of blinks before or during the stimuli). Additionally, incorporating the statistical approach of treating trials as random variables may also aid in addressing this problem.

Regarding future research, an important topic to address would be the association between spontaneous eye blink rate (EBR) and time perception. EBR has been known to be a non-invasive indirect marker of central dopamine (DA) function23, and, more recently, high EBR was associated with poorer temporal perception. The study suggests an implication of dopamine in interval timing and points to the use of EBR as a proxy measure of dopamine24. Another important topic is the functional meaning of the (change-related) measures we analyzed, which is yet to be determined in the context of our paradigm. In the original study, as well as in the current simplified dataset, increases in pupil size from TW0 to TW1 were consistent with the idea of increased cognitive load, but the absence of group effects on this measure precludes further considerations. One pattern that seems to be present is that smaller changes across time windows correlated with better behavioral performance (Flashes better than Balls, and d-prime in dyslexics related to smaller changes), but further research is needed.

Despite its limitations, the current protocol is, to our knowledge, the first to show parallel results in eye-tracking and behavioral data (same profile of effects), as well as some evidence of the correlation between the two.

Acknowledgements

This work was supported by the Portuguese Foundation for Science and Technology under grants UIDB/00050/2020 and PTDC/PSI-GER/5845/2020. The APC was entirely funded by the Portuguese Foundation for Science and Technology under grant PTDC/PSI-GER/5845/2020 (http://doi.org/10.54499/PTDC/PSI-GER/5845/2020).

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Adobe Animate | Adobe | | It is a tool for designing flash animation films, GIFs, and cartoons. |
| EyeLink Data Viewer | | | It is robust software that provides a comprehensive solution for visualizing and analyzing gaze data captured by EyeLink eye trackers. It is accessible on Windows, macOS, and Linux platforms. Equipped with advanced capabilities, Data Viewer enables effortless visualization, grouping, processing, and reporting of EyeLink gaze data. |
| Eye-tracking system | SR Research | EyeLink 1000 Portable Duo | It has a portable duo camera, a Laptop PC Host, and a response device. The EyeLink integrates with SR Research Experiment Builder, Data Viewer, and WebLink as well as many third-party stimulus presentation software and tools. |
| Monitor | Samsung Syncmaster | 957DF | It is a 19" flat monitor |
| SR Research Experiment Builder | SR Research | | It is an advanced and user-friendly drag-and-drop graphical programming platform designed for developing computer-based experiments in psychology and neuroscience. Utilizing Python as its foundation, this platform is compatible with both Windows and macOS, facilitating the creation of experiments that involve both EyeLink eye-tracking and non-eye-tracking functionalities. |

References

- Bellinger, D., Altenmüller, E., Volkmann, J. Perception of time in music in patients with Parkinson's disease - The processing of musical syntax compensates for rhythmic deficits. Frontiers in Neuroscience. 11, 68 (2017).

- Plourde, M., Gamache, P. L., Laflamme, V., Grondin, S. Using time-processing skills to predict reading abilities in elementary school children. Timing & Time Perception. 5 (1), 35-60 (2017).

- Saloranta, A., Alku, P., Peltola, M. S. Listen-and-repeat training improves perception of second language vowel duration: evidence from mismatch negativity (MMN) and N1 responses and behavioral discrimination. International Journal of Psychophysiology. 147, 72-82 (2020).

- Soares, A. J. C., Sassi, F. C., Fortunato-Tavares, T., Andrade, C. R. F., Befi-Lopes, D. M. How word/non-word length influence reading acquisition in a transparent language: Implications for children's literacy and development. Children (Basel). 10 (1), 49 (2022).

- Sousa, J., Martins, M., Torres, N., Castro, S. L., Silva, S. Rhythm but not melody processing helps reading via phonological awareness and phonological memory. Scientific Reports. 12 (1), 13224 (2022).

- Torres, N. L., Luiz, C., Castro, S. L., Silva, S. The effects of visual movement on beat-based vs. duration-based temporal perception. Timing & Time Perception. 7 (2), 168-187 (2019).

- Torres, N. L., Castro, S. L., Silva, S. Visual movement impairs duration discrimination at short intervals. Quarterly Journal of Experimental Psychology. 77 (1), 57-69 (2024).

- Attard, J., Bindemann, M. Establishing the duration of crimes: an individual differences and eye-tracking investigation into time estimation. Applied Cognitive Psychology. 28 (2), 215-225 (2014).

- Warda, S., Simola, J., Terhune, D. B. Pupillometry tracks errors in interval timing. Behavioral Neuroscience. 136 (5), 495-502 (2022).

- Goswami, U. A neural basis for phonological awareness? An oscillatory temporal-sampling perspective. Current Directions in Psychological Science. 27 (1), 56-63 (2018).

- Catronas, D., et al. Time perception for visual stimuli is impaired in dyslexia but deficits in visual processing may not be the culprits. Scientific Reports. 13, 12873 (2023).

- Zagermann, J., Pfeil, U., Reiterer, H. Studying eye movements as a basis for measuring cognitive load. Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. 1-6 (2018).

- Rafiqi, S., et al. PupilWare: Towards pervasive cognitive load measurement using commodity devices. Proceedings of the 8th ACM International Conference on PETRA. 1-8 (2015).

- Klingner, J., Kumar, R., Hanrahan, P. Measuring the task-evoked pupillary response with a remote eye tracker. Proceedings of the 2008 Symposium on ETRA. 69-72 (2008).

- Mahanama, B., et al. Eye movement and pupil measures: a review. Frontiers in Computer Science. 3, 733531 (2022).

- Pfleging, B., Fekety, D. K., Schmidt, A., Kun, A. L. A model relating pupil diameter to mental workload and lighting conditions. Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems. 5776-5788 (2016).

- Cicchini, G. M. Perception of duration in the parvocellular system. Frontiers in Integrative Neuroscience. 6, 14 (2012).

- EyeLink Data Viewer 3.2.1. SR Research Ltd. Available from: https://www.sr-research.com/data-viewer/ (2018).

- Spencer, R., Karmarkar, U., Ivry, R. Evaluating dedicated and intrinsic models of temporal encoding by varying context. Philosophical Transactions of the Royal Society B. 364 (1525), 1853-1863 (2009).

- Coull, J. T. Getting the timing right: experimental protocols for investigating time with functional neuroimaging and psychopharmacology. Advances in Experimental Medicine and Biology. 829, 237-264 (2014).

- Burr, D., Rocca, E. D., Morrone, M. C. Contextual effects in interval-duration judgements in vision, audition and touch. Experimental Brain Research. 230 (1), 87-98 (2013).

- Grossman, S., Gueta, C., Pesin, S., Malach, R., Landau, A. N. Where does time go when you blink? Psychological Science. 30 (6), 907-916 (2019).

- Jongkees, B. J., Colzato, L. S. Spontaneous eye blink rate as predictor of dopamine-related cognitive function-a review. Neuroscience & Biobehavioral Reviews. 71, 58-82 (2016).

- Sadibolova, R., Monaldi, L., Terhune, D. B. A proxy measure of striatal dopamine predicts individual differences in temporal precision. Psychonomic Bulletin & Review. 29 (4), 1307-1316 (2022).

This article has been published

Copyright © 2024 MyJoVE Corporation. All rights reserved