Decoding Auditory Imagery with Multivoxel Pattern Analysis

Source: Laboratories of Jonas T. Kaplan and Sarah I. Gimbel—University of Southern California

Imagine the sound of a bell ringing. What is happening in the brain when we conjure up a sound like this in the "mind's ear"? There is growing evidence that the brain uses the same mechanisms for imagination that it uses for perception.1 For example, when we imagine visual images, the visual cortex becomes activated, and when we imagine sounds, the auditory cortex is engaged. However, to what extent are these activations of sensory cortices specific to the content of our imaginations?

One technique that can help to answer this question is multivoxel pattern analysis (MVPA), in which functional brain images are analyzed using machine-learning techniques.2-3 In an MVPA experiment, we train a machine-learning algorithm to distinguish among the various patterns of activity evoked by different stimuli. For example, we might ask if imagining the sound of a bell produces different patterns of activity in auditory cortex compared with imagining the sound of a chainsaw, or the sound of a violin. If our classifier learns to tell apart the brain activity patterns produced by these three stimuli, then we can conclude that the auditory cortex is activated in a distinct way by each stimulus. One way to think of this kind of experiment is that instead of asking a question simply about the activity of a brain region, we ask a question about the information content of that region.
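The classification logic described above can be sketched in a few lines of Python. This is a minimal illustration on synthetic data, not the analysis pipeline used in the study: the voxel patterns are simulated, the correlation-based nearest-centroid classifier and the leave-one-run-out scheme are assumptions chosen for brevity, and all function names and parameter values (`simulate_patterns`, `n_runs`, `n_voxels`, `signal`) are hypothetical.

```python
import math
import random


def simulate_patterns(n_runs=8, n_voxels=50,
                      classes=("bell", "chainsaw", "violin"),
                      signal=1.0, seed=0):
    """Return (run, label, pattern) tuples of synthetic voxel patterns."""
    rng = random.Random(seed)
    # Each class gets a fixed "template" pattern across voxels (an assumption).
    templates = {c: [rng.gauss(0, 1) for _ in range(n_voxels)] for c in classes}
    trials = []
    for run in range(n_runs):
        for c in classes:
            # One trial = scaled template plus independent Gaussian noise.
            pattern = [signal * t + rng.gauss(0, 1) for t in templates[c]]
            trials.append((run, c, pattern))
    return trials


def mean_pattern(patterns):
    """Voxel-wise mean of a list of patterns."""
    n = len(patterns)
    return [sum(column) / n for column in zip(*patterns)]


def correlation(a, b):
    """Pearson correlation between two equal-length patterns."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def leave_one_run_out_accuracy(trials):
    """Train on all runs but one, test on the held-out run, and repeat."""
    runs = sorted({run for run, _, _ in trials})
    classes = sorted({label for _, label, _ in trials})
    correct = 0
    for held_out in runs:
        train = [t for t in trials if t[0] != held_out]
        test = [t for t in trials if t[0] == held_out]
        # "Training" here is just averaging the patterns of each class.
        centroids = {
            c: mean_pattern([p for _, label, p in train if label == c])
            for c in classes
        }
        for _, label, pattern in test:
            # Predict the class whose centroid correlates best with the pattern.
            predicted = max(classes,
                            key=lambda c: correlation(pattern, centroids[c]))
            correct += predicted == label
    return correct / len(trials)


trials = simulate_patterns()
accuracy = leave_one_run_out_accuracy(trials)
chance = 1 / 3  # three equally likely classes
print(f"accuracy = {accuracy:.2f}, chance = {chance:.2f}")
```

Testing only on data from a held-out run keeps the classifier from being scored on patterns it has already seen, which is what allows above-chance accuracy to be read as evidence of stimulus-specific information in the region.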

In this experiment, based on Meyer et al., 2010,4 we will cue participants to imagine several sounds by presenting them with silent videos that are likely to evoke auditory imagery. Since we are interested in measuring the subtle patterns evoked by imagination in auditory cortex, it is preferable if the stimuli are presented in complete silence, without interference from the loud noises made by the fMRI scanner. To achieve this, we will use a special kind of functional MRI sequence known as sparse temporal sampling. In this approach, a single fMRI volume is acquired 4-5 s after each stimulus, timed to capture the peak of the hemodynamic response.
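The timing logic of sparse temporal sampling can be sketched as a simple trial schedule. The durations below are placeholder assumptions, not the values of the actual sequence: the text only states that one volume is acquired 4-5 s after each stimulus. The sketch further assumes that the delay is measured from stimulus onset and that the stimulus ends before the scanner starts acquiring; the function name `sparse_sampling_schedule` and its parameters are hypothetical.

```python
def sparse_sampling_schedule(n_trials, stimulus_s=4.0, delay_s=4.5,
                             acquisition_s=2.0):
    """Return one timing record per trial (all times in seconds).

    Assumptions (not taken from the source): the delay is measured from
    stimulus onset, and each trial ends when its single volume is acquired.
    """
    if stimulus_s > delay_s:
        # The stimulus must finish before the scanner becomes noisy.
        raise ValueError("stimulus would overlap with scanner noise")
    trial_s = delay_s + acquisition_s
    schedule = []
    for i in range(n_trials):
        onset = i * trial_s
        schedule.append({
            "trial": i,
            "stimulus_on": onset,
            "stimulus_off": onset + stimulus_s,
            "acquire_start": onset + delay_s,   # scanner is silent until here
            "acquire_end": onset + trial_s,
        })
    return schedule


for row in sparse_sampling_schedule(3):
    print(row)
```

Because the scanner is silent between acquisitions, each silent video plays without gradient noise, and the single volume that follows samples the delayed hemodynamic response to that video.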

1. Participant recruitment

- Recruit 20 participants.

- Participants should be right-handed and have no history of neurological or psychological disorders.

- Participants should have normal or corrected-to-normal vision to ensure that they will be able to see the visual cues properly.

- Participants should not have metal in their body. This is an important safety requirement due to the high magnetic field involved in fMRI.

- Participants should not suffer from claustrophobia.

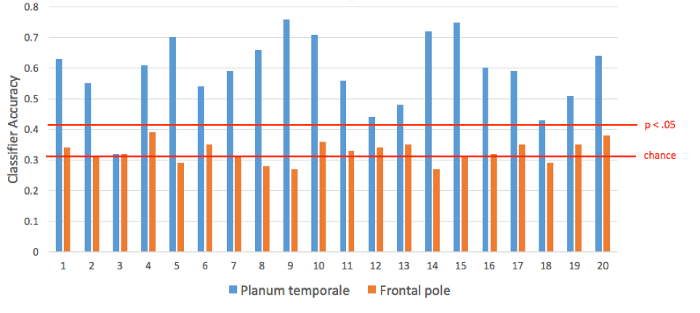

The average classifier accuracy in the planum temporale across all 20 participants was 59%. According to the Wilcoxon signed-rank test, this is significantly different from the chance level of 33%. The mean performance in the frontal pole mask was 32.5%, which is not greater than chance (Figure 2).

Figure 2. Classification perf
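The comparison against chance can be sketched as a one-sample Wilcoxon signed-rank test on per-participant accuracies. The code below is an illustrative standard-library implementation that uses the normal approximation with a tie correction. The accuracy values are hypothetical placeholders, not the study's data, and the function name `wilcoxon_signed_rank` is an assumption. In practice a library routine such as `scipy.stats.wilcoxon` would normally be used instead.

```python
import math


def wilcoxon_signed_rank(values, reference):
    """One-sample Wilcoxon signed-rank test against a reference value.

    Returns (W+, z, one-sided p) using the normal approximation.
    """
    # Differences from the reference; exact zeros are dropped.
    diffs = [v - reference for v in values if v != reference]
    n = len(diffs)
    if n == 0:
        raise ValueError("all values equal the reference")
    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    # Test statistic: sum of ranks belonging to positive differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(variance)
    # One-sided p-value for the alternative "values exceed the reference".
    p_one_sided = 0.5 * math.erfc(z / math.sqrt(2))
    return w_plus, z, p_one_sided


# Hypothetical per-participant accuracies, for illustration only.
hypothetical_accuracies = [0.55, 0.61, 0.48, 0.70, 0.66, 0.52, 0.58, 0.63]
chance = 1 / 3
w_plus, z, p = wilcoxon_signed_rank(hypothetical_accuracies, chance)
print(f"W+ = {w_plus}, z = {z:.2f}, one-sided p = {p:.4f}")
```

A rank-based test is a reasonable fit here because classification accuracies are bounded and need not be normally distributed across participants.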

MVPA is a useful tool for understanding how the brain represents information. Instead of considering the time-course of each voxel separately as in a traditional activation analysis, this technique considers patterns across many voxels at once, offering increased sensitivity compared with univariate techniques. Often a multivariate analysis uncovers differences where a univariate technique is not able to. In this case, we learned something about the mechanisms of mental imagery by probing the information content in a spe

- Kosslyn, S.M., Ganis, G. & Thompson, W.L. Neural foundations of imagery. Nat Rev Neurosci 2, 635-642 (2001).

- Haynes, J.D. & Rees, G. Decoding mental states from brain activity in humans. Nat Rev Neurosci 7, 523-534 (2006).

- Norman, K.A., Polyn, S.M., Detre, G.J. & Haxby, J.V. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci 10, 424-430 (2006).

- Meyer, K., et al. Predicting visual stimuli on the basis of activity in auditory cortices. Nat Neurosci 13, 667-668 (2010).


Copyright © 2024 MyJoVE Corporation. All rights reserved