
Interactive and Immersive Visualization of Fluid Dynamics using Virtual Reality

Not Published

Summary

The article describes a method to visualize three-dimensional fluid flow data in virtual reality. The detailed protocol and shared data and scripts do this for a sample data set from water tunnel experiments, but it could be used for computational simulation results or 3D data from other fields as well.

Abstract

The last decade has seen a rise in both the technological capacity for data generation and the consumer availability of immersive visualization equipment, like Virtual Reality (VR). This paper outlines a method for visualizing the simulated behavior of fluids within an immersive and interactive virtual environment using an HTC Vive. This method integrates complex three-dimensional data sets as digital models within the video game engine, Unity, and allows for user interaction with these data sets using a VR headset and controllers. Custom scripts and a unique workflow have been developed to facilitate the export of processed data from Matlab and the programming of the controllers. The authors discuss the limitations of this particular protocol in its current manifestation, but also the potential for extending the process from this example to study other kinds of 3D data not limited to fluid dynamics, or for using different VR headsets or hardware.

Introduction

Virtual reality (VR) is a tool that has grown in popularity because it provides a new platform for collaboration, education, and research. According to Zhang et al., “VR is an immersive, interactive computer-simulated environment in which the users can interact with the virtual representations of the real world through various input/output devices and sensory channels1.” Fluid dynamics is a field of physics and engineering that attempts to describe the motion of liquids or gases due to pressure gradients or applied forces. Because the study of fluid dynamics is driven by the analysis of large data sets, a significant part of its research process hinges on visualization. This visualization is difficult because of the potential size of the data sets and the four-dimensional (4D) character (3D in space + time) of the data. This complexity can hinder commonly used methods of visualization, which are typically constrained to flat screens, limited in scale, and primarily allow the viewing of data from a removed perspective2. To overcome some of the challenges that impede effective visualization, this paper describes a method for processing 3D fluid flow data sets for import and interaction in virtual reality. Especially in the case of fluid dynamics, VR may give the user a more intuitive understanding of data patterns and processes that could otherwise be difficult to detect when exploring the 3D data via a 2D screen. Further, VR can serve as a novel educational tool for students because it provides an opportunity to reinforce, in a new format, ideas that they are learning in a traditional classroom setting3,4.

To date, implementations of VR for fluid dynamics research have relied on performing computational fluid dynamics (CFD) with in-house or off-the-shelf software packages and then using various techniques to import these simulations and results into VR environments. For example, Kuester et al. created simulation code that continually injected particles into a flow field5. From there, they calculated the velocity of the particles, which allowed them to visualize the particles in a VR toolkit called VirtualExplorer. The Matar research group at Imperial College London6 has developed a broader range of tools to interrogate additional quantities such as pressure density in the flow field, and they incorporate audio feedback as well. Still, their data is largely referenced on particle points or trajectories. Use of VR in fluid dynamics tends to follow this pattern of calculating particle streaks or streamlines in the highly resolved CFD of flow over immersed objects, e.g., a car or a cylinder, to reveal the structure and organization of the fluid motion7,8,9.

As an alternative to the particle-based modeling techniques, many 3D fluid dynamics results are modeled as 3D isosurfaces. These models are digitally heavier than point-based systems and require significant time and a number of steps to extract from the conventional software tools. The advantage of integrating these volumetric visualizations into VR is that they enable a user to virtually put themselves “inside” the measured or simulated domain. Isosurfaces in volumetric data are commonly used in both computational and, increasingly, experimental results. With the increasing prevalence of tomographic and holographic particle image velocimetry experiments that acquire volumetric data sets in a single run, methods to visualize and interrogate these fields will only grow in popularity.

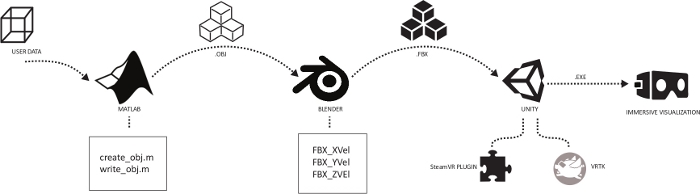

Some of the previous implementations of VR with fluid dynamic data have relied on the creation of a software package written in-house by the researchers, which can be limited in terms of customization and user interaction8,10. There has not been widespread implementation of these packages in other research groups since their creation. In this paper, we describe a method and share software that imports 3D experimental data that was generated by interpolating multiple planes of stereoscopic PIV. Its conversion into digital objects in VR uses three popular software packages: Matlab, Blender, and Unity. A flowchart of how the data objects are processed through these software packages is shown in Figure 1.

Unity, a video-game engine that is free for personal use, offers interesting opportunities to envision and potentially to gamify fluid dynamic data sets with an abundance of resources that allow for representation of objects and customization of features that other packages lack. The method begins in Matlab, processing data from the standard PLOT3D format11, computing three quantities (x-velocity u, y-velocity v, and z-vorticity ωz), and then outputting the data into the .obj file format along with an associated .mtl file for each .obj file that contains the color information for the isosurfaces. These .obj files are then rendered and converted to the .fbx file format in Blender. This step can seem superfluous, but avoids difficulties that the authors encountered by ensuring that .mtl files correctly align with their associated .obj files, and that the colors of the isosurfaces are coordinated properly. These .fbx files are then imported into Unity, where the data is treated as an object that can be manipulated and toggled. Finally, the VR user interface has been designed to allow for the user to move the data in space and time, control visibility settings, and even create drawings of their own in and around the data without removing the VR headset.

Figure 1: Process flow chart.

Data set

The data set used to demonstrate the process of 3D data visualization in virtual reality is from water tunnel experiments that measured the wake of a simplified fish caudal fin geometry and kinematics12. This work entailed experiments that used equipment and methods similar to those described by King et al.13, in which a generic caudal fin was modeled as a rigid, trapezoidal panel that pitched about its leading edge. Stereoscopic particle image velocimetry was used to measure the three-component velocity fields in the wakes produced by the fin model, and these experiments were performed in a recirculating water tunnel located at the Syracuse Center of Excellence for Environmental and Energy Systems. Additional details about the experimental data acquisition can be found in Brooks & Green12 or King et al.13.

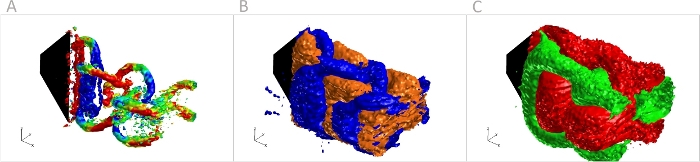

The 3D surfaces used to visualize the structure of the fluid motion are first rendered in Matlab. The structure and organization of the wake are visualized using isosurfaces of the Q-criterion. The Q-criterion, also referred to as Q, is a scalar calculated at every point in 3D space from the three-component velocity fields, and is commonly used for vortex identification. These surfaces are then colored using spanwise vorticity (ωz) to highlight and distinguish the large-scale structures of interest, which are mainly oriented in the spanwise (z) direction. Other quantities of interest include the streamwise (x-direction) velocity (u) and the transverse (y-direction) velocity (v). Visualization of isosurfaces of these quantities can often give context as to how the fluid has been accelerated by the motion of the fin model.
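For readers unfamiliar with the Q-criterion, the following minimal Python sketch evaluates it at a single point from a 3×3 velocity gradient tensor, using the common definition Q = ½(‖Ω‖² − ‖S‖²), where S and Ω are the symmetric (strain-rate) and antisymmetric (rotation) parts of the tensor. It is an illustration only and is not the shared Matlab code; the function names and the index convention (`J[i][j]` holds ∂u_i/∂x_j) are assumptions made for this example.

```python
def q_criterion(J):
    """Q = 0.5 * (||Omega||^2 - ||S||^2) for a 3x3 velocity gradient tensor.

    J[i][j] is assumed to hold d(u_i)/d(x_j), with (u_0, u_1, u_2) = (u, v, w).
    """
    s_norm_sq = 0.0
    omega_norm_sq = 0.0
    for i in range(3):
        for j in range(3):
            s_ij = 0.5 * (J[i][j] + J[j][i])      # strain-rate (symmetric) part
            omega_ij = 0.5 * (J[i][j] - J[j][i])  # rotation (antisymmetric) part
            s_norm_sq += s_ij ** 2
            omega_norm_sq += omega_ij ** 2
    return 0.5 * (omega_norm_sq - s_norm_sq)


def spanwise_vorticity(J):
    """omega_z = dv/dx - du/dy, the quantity used to color the Q isosurfaces."""
    return J[1][0] - J[0][1]


# Solid-body rotation about z with angular rate 2: u = -2y, v = 2x, w = 0.
rotation = [[0.0, -2.0, 0.0],
            [2.0,  0.0, 0.0],
            [0.0,  0.0, 0.0]]
# Pure planar strain: u = 3x, v = -3y, w = 0.
strain = [[3.0,  0.0, 0.0],
          [0.0, -3.0, 0.0],
          [0.0,  0.0, 0.0]]

print(q_criterion(rotation), spanwise_vorticity(rotation))
print(q_criterion(strain), spanwise_vorticity(strain))
```

Q is positive where rotation dominates strain and negative where strain dominates, which is why a small positive isosurface level picks out vortex cores.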

Example isosurfaces for each of these quantities, taken at the same instant in time, are shown in Figure 2. Isosurfaces of Q are shown in Figure 2A. The trapezoidal fin model is shown in black. The isosurface level was chosen to be 1% of the maximum value, which is commonly used in 3D vortex visualizations to reveal structures while avoiding noise that can be prevalent at lower levels. Blue coloration indicates negative rotation of the vortex tube, or clockwise rotation (when viewed from above).

Figure 2: Example images from visualization software FieldView. (A) Q-criterion isosurfaces, shaded from red to blue by spanwise (z) vorticity; (B) x-direction velocity (u) isosurfaces; and (C) y-direction velocity (v) isosurfaces.

Red indicates positive rotation of the vortex tube, or counter-clockwise rotation. Green indicates rotation in a direction other than along the z-axis. In Figure 2B, blue isosurfaces indicate streamwise velocity that is 10% lower than the freestream value and orange isosurfaces indicate streamwise velocity that is 10% higher than the freestream value. In Figure 2C, red isosurfaces indicate transverse, or cross-stream, velocity that has a magnitude of 10% of the freestream value and is negative (out of the page), and green isosurfaces indicate transverse velocity that has a magnitude of 10% of the freestream value and is positive (into the page). These velocity isosurfaces have been used to show how the vortex ring structure is consistent with the periodic acceleration and deceleration of the fluid due to the motion of the fin model.
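The level choices described for Figure 2 reduce to simple arithmetic. The short Python sketch below collects them in one place: the Q level at 1% of the maximum, streamwise-velocity levels 10% below and above the freestream value, and transverse-velocity levels at plus and minus 10% of the freestream value. The function name, the dictionary keys, and the numeric inputs are placeholders chosen for illustration and are not values from the data set.

```python
def isosurface_levels(q_max, u_freestream):
    """Return the isosurface levels described in the text as a dict."""
    return {
        "Q": 0.01 * q_max,                  # 1% of the maximum Q value
        "u_low": 0.9 * u_freestream,        # 10% below freestream
        "u_high": 1.1 * u_freestream,       # 10% above freestream
        "v_negative": -0.1 * u_freestream,  # transverse, negative direction
        "v_positive": 0.1 * u_freestream,   # transverse, positive direction
    }


# Placeholder inputs, not measurements from the water tunnel experiments.
levels = isosurface_levels(q_max=200.0, u_freestream=1.0)
for name, value in levels.items():
    print("{}: {:.3f}".format(name, value))
```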

In the following protocol, we detail the steps for converting these isosurface visualizations into surface objects in 3D space that can be processed and imported into Unity for visualization and interaction in virtual reality.

Protocol

1. Processing data files and exporting objects from Matlab

NOTE: The following steps will detail how to open the shared Matlab processing codes, which open PLOT3D data files and generate the isosurfaces as described. The necessary .obj and .mtl files are then also constructed and exported from Matlab.

- From the supplementary files, download the scripts labeled create_obj.m and write_wobj.m. Open Matlab, then open the create_obj.m and the write_wobj.m files.

- Customize the path FileDir to the folder where scripts and data files are saved. In the File Explorer where FileDir is located, create a new folder called ‘FullSetOBJ’.

- Customize the path of GridDir. To make this code compatible with user data, make sure the grid information is in a folder that is a child of FileDir.

- Customize the path variable FileName. This should be the folder where the data files of all 24 time steps are located.

- Run the script.

NOTE: The code will take several hours to complete if all quantities at all time steps are solved for and converted in one execution of the script. Thus, it may be of interest to the user to only run one quantity at a time by commenting out the sections that create the .obj files for the other two quantities and allowing the code to run on only that single quantity. Also, the user can change the number of time-steps created by changing how many iterations the for loop cycles through, which is located at the beginning of the script.
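To make the exported file pair more concrete, the following Python sketch writes a minimal Wavefront .obj file together with the .mtl file it references. It is not a translation of create_obj.m or write_wobj.m: the function name, the material name `surface`, and the single flat color per mesh are simplifying assumptions for this example. Only the standard OBJ/MTL keywords (`mtllib`, `v`, `usemtl`, `f`, `newmtl`, `Kd`) are used.

```python
import os
import tempfile


def write_obj_with_mtl(folder, name, vertices, faces, rgb):
    """Write <name>.obj and <name>.mtl for one single-colored triangle mesh.

    vertices: list of (x, y, z); faces: list of 0-based vertex index triples;
    rgb: diffuse color components in the range 0..1.
    """
    mtl_path = os.path.join(folder, name + ".mtl")
    obj_path = os.path.join(folder, name + ".obj")

    with open(mtl_path, "w") as mtl:
        mtl.write("newmtl surface\n")
        mtl.write("Kd {:.4f} {:.4f} {:.4f}\n".format(*rgb))

    with open(obj_path, "w") as obj:
        # The .obj names its material library, so both files must stay together.
        obj.write("mtllib {}.mtl\n".format(name))
        for x, y, z in vertices:
            obj.write("v {:.6f} {:.6f} {:.6f}\n".format(x, y, z))
        obj.write("usemtl surface\n")
        for a, b, c in faces:
            # OBJ face indices are 1-based.
            obj.write("f {} {} {}\n".format(a + 1, b + 1, c + 1))
    return obj_path, mtl_path


out_dir = tempfile.mkdtemp()
obj_file, mtl_file = write_obj_with_mtl(
    out_dir, "example_t01",
    vertices=[(0, 0, 0), (1, 0, 0), (0, 1, 0)],
    faces=[(0, 1, 2)],
    rgb=(0.0, 0.0, 1.0),
)
with open(obj_file) as handle:
    obj_text = handle.read()
with open(mtl_file) as handle:
    mtl_text = handle.read()
print(obj_text)
```

Because the .obj file refers to its .mtl file by name, renaming or moving one without the other breaks the color assignment.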

2. Rendering surfaces in Blender

NOTE: In this section, we detail the steps for importing the output object data from Matlab into Blender, where the objects are scaled and then exported in a file format that is compatible with Unity. The shared scripts execute these tasks.

- Use Blender version 2.79 and download the provided Blender scripts in the supplementary files. Double click on the exportAsFBX_XVel Blender script, which will open Blender.

- Customize the path of file_path. This path should be directed to where the .obj files are located. In File Explorer, where file_path is directed and under the FullSetOBJ folder, create a new folder called ‘FBX’.

- Press Run Script, which is located just above the interactive console. Open the exportAsFBX_YVel Blender script, customize the file_path, and click Run Script. Open the exportAsFBX_ZVort Blender script, customize the file_path, and click Run Script.
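The shared Blender scripts are not reproduced here. As a rough illustration of what such a batch conversion can look like, the Python sketch below pairs each .obj file with an output path in the FBX subfolder and, when run inside Blender 2.79, imports, scales, and re-exports each mesh. The function names, the `scale` argument, the demo file names, and the scene reset are assumptions of this sketch rather than the contents of exportAsFBX_XVel. `bpy.ops.import_scene.obj` and `bpy.ops.export_scene.fbx` are Blender's standard OBJ importer and FBX exporter operators.

```python
import os
import tempfile


def plan_conversions(obj_dir, fbx_subdir="FBX"):
    """List (obj_path, fbx_path) pairs for every .obj file in obj_dir."""
    pairs = []
    for file_name in sorted(os.listdir(obj_dir)):
        stem, ext = os.path.splitext(file_name)
        if ext.lower() == ".obj":
            pairs.append((os.path.join(obj_dir, file_name),
                          os.path.join(obj_dir, fbx_subdir, stem + ".fbx")))
    return pairs


def convert_in_blender(pairs, scale=1.0):
    """Import, scale, and re-export each mesh. Only works inside Blender."""
    import bpy  # available only in Blender's bundled Python

    for obj_path, fbx_path in pairs:
        # Clear the scene so each .fbx file holds a single data object.
        bpy.ops.object.select_all(action='SELECT')
        bpy.ops.object.delete()
        bpy.ops.import_scene.obj(filepath=obj_path)
        # The importer leaves the newly imported objects selected.
        for imported in bpy.context.selected_objects:
            imported.scale = (scale, scale, scale)
        bpy.ops.export_scene.fbx(filepath=fbx_path, use_selection=True)


# Demo of the planning step only, using empty placeholder files.
demo_dir = tempfile.mkdtemp()
for demo_name in ("XVel_01.obj", "XVel_01.mtl", "XVel_02.obj"):
    open(os.path.join(demo_dir, demo_name), "w").close()
demo_pairs = plan_conversions(demo_dir)
for obj_path, fbx_path in demo_pairs:
    print(os.path.basename(obj_path), "->", os.path.basename(fbx_path))
```

Inside Blender, `convert_in_blender(plan_conversions(file_path))` would be the call that does the work; the FBX folder must already exist, as in the protocol step above.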

3. Configure Unity, the SteamVR Plugin, and VRTK

NOTE: These steps will ensure that the necessary software is present and configured correctly to import the data and generate the related games. Please note the software versions recommended in these steps, as we cannot guarantee that the shared scripts will work with updated versions of these particular applications.

- Use Unity version 2018.3.7. Create a new 3D scene by choosing 3D under the template option and pressing the Create Project button.

- Do not download the new version of Unity if prompted. Use version 1.2.3 of the SteamVR plugin from the Unity Asset Store.

- Once downloaded, drag the SteamVR folder into Assets on the Unity UI. If prompted to update API for SteamVR, click the button labeled “I Made A Backup. Go Ahead.”

- Use the current version of VRTK (version 3.3.0 was used for this manuscript) from the Unity Asset store and import into the game.

- Drag the folder containing the .fbx files into Assets on the Unity UI.

- Go to the Asset Store and type ‘simple color picker’14. Click on the option given with this name and click import. A pop-up screen will appear showing everything that will be imported. Check all boxes except the box labeled Draggable.cs, and then click import.

- Create a folder in the Assets UI under the Project tab, which is located at the bottom left corner of the screen, and call it Scripts.

- From the supplementary files, download the scripts labeled Left_Controller, Right_Controller, Set_Text_Position, MeshLineRenderer, DrawLineManager, ColorManager and Draggable along with their associated meta files. Then place these in the Scripts folder under Assets in the Project tab.

4. Creating the data visualization game in Unity

NOTE: After successfully configuring Unity in step 3, these steps will use the provided scripts to build the game that can then be saved as a standalone executable launch file.

- Disable the Main Camera by clicking on it in the section labeled SampleScene in the Hierarchy tab and unchecking the box that is next to the title, Main Camera, in the Inspector.

- In the Project tab, go into Assets and open the SteamVR folder. Then go into Prefabs and drag the SteamVR and the CameraRig prefab into the SampleScene.

- Click on CameraRig, and then click the Add Component button located at the bottom of the Inspector menu. Click Scripts, and finally click on the ‘GameBuild’ script.

- In the Project tab open the folder containing fbx files and drag each of them into the SampleScene section.

- Add a plane from the top menu bar by going to GameObject | 3D Object | Plane.

- Add a Canvas by going to Component | Layout | Canvas.

- Highlight each .fbx file in the Hierarchy.

- Click on Component | Physics | Rigidbody. Make sure Use Gravity and Is Kinematic do not have check marks next to them.

- Click on Component | Physics | Box Collider. Make sure Is Trigger is checked.

- Under SampleScene, click the drop-down arrow next to CameraRig. Then click the drop-down arrow next to Controller (right) and click on Controller (right).

- Click Add Component | Mesh | Mesh Renderer.

- Click Add Component | Scripts | Right_Controller.

- Under SampleScene, click the drop-down arrow next to CameraRig, then click the drop-down arrow next to Controller (left) and click on Controller (left).

- Click Add Component | Physics | Rigidbody. Uncheck Use Gravity and check Is Kinematic.

- Click Add Component | Mesh | Mesh Renderer.

- Click Add Component | Physics | Sphere Collider. Check Is Trigger and change the radius to Infinity by typing Infinity into the space next to Radius.

- Click Add Component | Scripts | Left_Controller.

- Click Add Component | Scripts | Steam VR_Laser Pointer.

- Click Assets under the Project tab and drag the ColorPicker into the SampleScene.

- Click on the ColorPicker in the SampleScene and, in the Inspector, change the scale to X = Y = Z = 0.003 and change the position to X = 0, Y = 1, Z = 1.4.

- Add an empty game object by right clicking in the SampleScene Hierarchy and clicking create new object. Rename this object to DrawLineManager.

- In the Inspector for the DrawLineManager object, go to Add Component, click on Scripts, then add the DrawLineManager script. Then drag Controller (right) into the Tracked Obj space in the Draggable (Script) section.

- Under the ColorPicker object, choose ColorHuePicker and drag Controller (left) into the Tracked Obj space. Similarly, drag Controller (left) into the Tracked Obj space for ColorSaturationBrightnessPicker in the Draggable (Script) section.

- In the Project section, click on Materials. Then add a new material by right clicking, then Create, then Material, and rename this material to DrawingMaterial. Click on the white box, underneath the Opaque option and across from the Albedo option, and change R = G = B = A = 0. Drag this material into the L Mat position of the DrawLineManager object in the Hierarchy, which is located in the section labeled Draw Line Manager (script).

- Add a TextMeshPro Text by highlighting each fbx file, then UI, then TextMeshPro Text. If prompted to add TextMesh Essentials, then close out this window.

- Highlight each TextMeshPro Text under all fbx files and change the position to Pos X = Pos Y = Pos Z = 0.5, change the Width to 10, the Height to 1, and the scale to X = Y = Z = 0.1.

- Delete any text that is in the Text box, change the font size to 14, and change the vertex color, which is the white box located next to Vertex Color, to R = G = B = 0 and A = 255.

- Add a Mesh Renderer by going to Add Component | Mesh | Mesh Renderer.

- Go to VRTK in the Project tab, go into the Prefabs folder, then the ControllerTooltips folder, and drag the ControllerTooltips prefab onto Controller (left) and Controller (right) in the Hierarchy.

- For each controller, click on ControllerTooltips in the Hierarchy and change the Button Text Settings to the controller scheme given in Figure 4.
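Once the scene is assembled, the controllers are used to move through time steps and quantities while only one data object is shown at a time. The Python sketch below illustrates one way such toggling logic can be organized: an index that wraps around at the ends of the time series, and a visibility map with exactly one entry switched on. It is a language-neutral illustration with hypothetical class and method names, not the logic of the shared Left_Controller or Right_Controller scripts, which run in Unity.

```python
class DataBrowser:
    """Tracks which (quantity, time step) object should be visible."""

    def __init__(self, quantities, n_steps):
        self.quantities = list(quantities)
        self.n_steps = n_steps
        self.quantity_index = 0
        self.step = 0

    def next_step(self):
        self.step = (self.step + 1) % self.n_steps  # wraps to the first step

    def previous_step(self):
        self.step = (self.step - 1) % self.n_steps  # wraps to the last step

    def next_quantity(self):
        self.quantity_index = (self.quantity_index + 1) % len(self.quantities)

    def visible(self):
        return self.quantities[self.quantity_index], self.step

    def visibility_flags(self):
        """Map every (quantity, step) pair to True only for the visible one."""
        current = self.visible()
        return {(q, t): (q, t) == current
                for q in self.quantities for t in range(self.n_steps)}


browser = DataBrowser(["u", "v", "vorticity_z"], n_steps=24)
browser.previous_step()   # from step 0 back to the last step
browser.next_quantity()   # from "u" to "v"
print(browser.visible())
print(sum(browser.visibility_flags().values()))
```

In a game engine, each flag would typically drive the active state of the corresponding imported object.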

Results

Using the method described above, the results are created from the example dataset, which was split into 24 time steps and saved in the PLOT3D standard data format. Since 3 quantities were saved, 72 .obj files were created in Matlab and converted into 72 .fbx files in Blender and imported into Unity. The Matlab code can be adjusted according to the number of time steps of the user’s data by changing the number of times that Matlab iterates through the main for loop, which can be found at the very beginning of the s...
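The file count quoted above is 24 time steps multiplied by 3 quantities. A quick completeness check before importing into Unity can catch a conversion that stopped early. The Python sketch below shows the idea; the naming scheme (`<quantity>_<two-digit step>.fbx`) is hypothetical, with the quantity labels borrowed from the Blender script names, and should be adapted to the names the scripts actually produce.

```python
QUANTITIES = ("XVel", "YVel", "ZVort")  # labels borrowed from the script names
N_TIME_STEPS = 24


def expected_names(quantities=QUANTITIES, n_steps=N_TIME_STEPS, ext=".fbx"):
    """Hypothetical naming scheme: <quantity>_<two-digit step><ext>."""
    return ["{}_{:02d}{}".format(q, t, ext)
            for q in quantities for t in range(1, n_steps + 1)]


def missing_files(present, expected):
    """Return the expected names that are absent from `present`."""
    present = set(present)
    return [name for name in expected if name not in present]


names = expected_names()
print(len(names))
# Simulate a folder in which the last file was never written.
print(missing_files(names[:-1], names))
```

In practice, `present` would be the result of `os.listdir` on the FBX folder.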

Discussion

In this article, we described a method for representing fluid dynamics data in VR. This method is designed to start with data in the format of a PLOT3D structured grid, and therefore can be used to represent analytic, computational, or experimental data. The protocol describes the necessary steps to implement the current method, which creates a VR “scene” that allows the user to move and scale the data objects with the use of hand movements, to cycle through time steps and quantities, and to create drawings a...

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was supported by the Office of Naval Research under ONR Award No. N00014-17-1-2759. The authors also wish to thank the Syracuse Center of Excellence for Environmental and Energy Systems for providing funds used towards the purchase of lasers and related equipment as well as the HTC Vive hardware. Some images were created using FieldView as provided by Intelligent Light through its University Partners Program. Lastly, we would like to thank Dr. Minghao Rostami of the Math Department at Syracuse University, for her help in testing and refining the written protocol.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Base station | HTC and Valve Corporation | | Used to track the user's position in the play area. |
| Base station | HTC and Valve Corporation | | Used to track the user's position in the play area. |
| Link box | HTC and Valve Corporation | | Used to connect the headset with the computer being used. |
| Vive controller | HTC and Valve Corporation | | One of the controllers that can be used. |
| Vive controller | HTC and Valve Corporation | | One of the controllers that can be used. |
| Vive headset | HTC and Valve Corporation | | Headset the user wears. |

References

1. Zhang, Z., Zhang, M., Chang, Y., Aziz, E. S., Esche, S. K., Chassapis, C. Collaborative virtual laboratory environments with hardware in the loop. Cyber-Physical Laboratories in Engineering and Science Education. 363-402 (2018).

2. Reshetnikov, V., et al. Big Data Approach to Fluid Dynamics Visualization Problem. Lecture Notes in Computer Science Computational Science - ICCS 2019. 461-467 (2019).

3. Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., Davis, T. J. Effectiveness of virtual reality-based instruction on students learning outcomes in K-12 and higher education: A meta-analysis. Computers & Education. 70, 29-40 (2014).

4. Chang, Y., Aziz, E. S., Zhang, Z., Zhang, M., Esche, S. K. Evaluation of a video game adaptation for mechanical engineering educational laboratories. 2016 IEEE Frontiers in Education Conf (FIE). (2016).

5. Kuester, F., Bruckschen, R., Hamann, B., Joy, K. I. Visualization of particle traces in virtual environments. Proceedings of the ACM Symposium on Virtual Reality Software and Technology - VRST 01. (2001).

6. Innovative Virtual Reality Software Developed to Enhance Fluid Dynamics Lectures: Imperial News: Imperial College London. Imperial News. Available from: https://www.imperial.ac.uk/news/189866/innovative-virtual-reality-software-developed-enhance (2019).

7. Huang, G., Bryden, K. Introducing Virtual Engineering Technology Into Interactive Design Process with High-fidelity Models. Proceedings of the Winter Simulation Conference, 2005. (2005).

8. Shahnawaz, V. J., Vance, J. M., Kutti, S. V. Visualization and approximation of post processed computational fluid dynamics data in a virtual environment. 1999 ASME Design Engineering Technical Conferences. (1999).

9. Schulz, M., Reck, F., Bertelheimer, W., Ertl, T. Interactive visualization of fluid dynamics simulations in locally refined cartesian grids. Proceedings Visualization 99. (1999).

10. Wu, B., Chen, G. H., Fu, D., Moreland, J., Zhou, C. Q. Integration of Virtual Reality with Computational Fluid Dynamics for Process Optimization. AIP Conference Proceedings. 1207, 1017 (2010).

11. Plot3d File Format for Grid and Solution Files. NPARC Alliance CFD Verification and Validation. Available from: https://www.grc.nasa.gov/WWW/wind/valid/plot3d.html (2008).

12. Brooks, S., Green, M. A. Effects of Upstream Body on Pitching Trapezoidal Panel. AIAA Aviation 2019 Forum. 2019 (2019).

13. King, J., Kumar, R., Green, M. A. Experimental observations of the three-dimensional wake structures and dynamics generated by a rigid, bioinspired pitching panel. Physical Review Fluids. 3, 034701 (2018).

14. Simple color picker. Unity Asset Store. Available from: https://assetstore.unity.com/packages/tools/gui/simple-color-picker-7353 (2013).

15. Krietemeyer, B., Bartosh, A., Covington, L. A shared realities workflow for interactive design using virtual reality and three-dimensional depth sensing. International Journal of Architectural Computing. 17 (2), 220-235 (2019).

16. García-Hernández, R. J., Kranzlmüller, D. NOMAD VR: Multiplatform virtual reality viewer for chemistry simulations. Computer Physics Communications. 237, 230-237 (2019).


This article has been published


Copyright © 2025 MyJoVE Corporation. All rights reserved