Method Article

Robotized Testing of Camera Positions to Determine Ideal Configuration for Stereo 3D Visualization of Open-Heart Surgery

Summary

The human depth perception of 3D stereo videos depends on the camera separation, point of convergence, distance to, and familiarity of the object. This paper presents a robotized method for rapid and reliable test data collection during live open-heart surgery to determine the ideal camera configuration.

Abstract

Stereo 3D video from surgical procedures can be highly valuable for medical education and can improve clinical communication. However, access to the operating room and the surgical field is restricted. It is a sterile environment, and the physical space is crowded with surgical staff and technical equipment. In this setting, unobscured capture and realistic reproduction of surgical procedures are difficult. This paper presents a method for rapid and reliable collection of stereoscopic 3D videos at different camera baseline distances and convergence distances. To collect test data with minimal interference during surgery, and with high precision and repeatability, the cameras were attached to each hand of a dual-arm robot. The robot was ceiling-mounted in the operating room. It was programmed to perform a timed sequence of synchronized camera movements, stepping through a range of test positions with baseline distances from 50 to 240 mm in increments of 10 mm, at two convergence distances of 1100 mm and 1400 mm. Surgery was paused to allow 40 consecutive 5-s video samples to be recorded. A total of 10 surgical scenarios were recorded.

Introduction

In surgery, 3D visualization can be used for education, diagnosis, pre-operative planning, and post-operative evaluation1,2. Realistic depth perception can improve the understanding3,4,5,6 of normal and abnormal anatomy. Simple 2D video recordings of surgical procedures are a good start. However, the lack of depth perception can make it hard for non-surgical colleagues to fully understand the antero-posterior relationships between anatomical structures, and therefore introduces a risk of misinterpreting the anatomy7,8,9,10.

The 3D viewing experience is affected by five factors: (1) the camera configuration, which can be either parallel or toed-in, as shown in Figure 1; (2) the baseline distance (the separation between the cameras); (3) the distance to the object of interest and other scene characteristics, such as the background; (4) the characteristics of the viewing device, such as screen size and viewing position1,11,12,13; and (5) the individual preferences of the viewers14,15.

Designing a 3D camera setup begins with capturing test videos at various camera baseline distances and configurations, to be used for subjective or automatic evaluation16,17,18,19,20. The distance between the cameras and the surgical field must remain constant to capture sharp images. Fixed focus is preferred because autofocus will refocus on hands, instruments, or heads that may come into view. However, this is not easily achievable when the scene of interest is the surgical field. Operating rooms are restricted-access areas because these facilities must be kept clean and sterile. Technical equipment, surgeons, and scrub nurses are often clustered closely around the patient to secure a good visual overview and an efficient workflow. To compare and evaluate the effect of camera positions on the 3D viewing experience, one complete test range of camera positions should record the same scene, because object characteristics such as shape, size, and color can affect the 3D viewing experience21.

For the same reason, complete test ranges of camera positions should be repeated on different surgical procedures. The entire sequence of positions must be repeated with high accuracy. In a surgical setting, existing methods that require either manual adjustment of the baseline distance22 or different camera pairs with fixed baseline distances23 are not feasible because of both space and time constraints. To address this challenge, this robotized solution was designed.

The data was collected with a dual-arm collaborative industrial robot mounted on the ceiling of the operating room. Cameras were attached to the wrists of the robot and moved along an arc-shaped trajectory with increasing baseline distance, as shown in Figure 2.

To demonstrate the approach, 10 test series were recorded from 4 patients with 4 different congenital heart defects. Scenes were chosen when a pause in surgery was feasible: with the hearts beating, just before and after surgical repair. Series were also recorded while the hearts were arrested. The surgeries were paused for 3 min and 20 s to collect forty 5-s sequences of the scene at different camera convergence distances and baseline distances. The videos were later post-processed and displayed in 3D to the clinical team, who rated how realistic the 3D video was on a scale from 0 to 5.

The convergence point for toed-in stereo cameras is where the center points of both images meet. In principle, the convergence point can be placed in front of, within, or behind the object (see Figure 1A-C). When the convergence point is in front of the object, the object will be captured and displayed left of the midline in the left camera image and right of the midline in the right camera image (Figure 1A). The opposite applies when the convergence point is behind the object (Figure 1B). When the convergence point is on the object, the object will appear in the midline of both camera images (Figure 1C), which presumably yields the most comfortable viewing, since no squinting is required to merge the images. To achieve comfortable stereo 3D video, the convergence point must be located on, or slightly behind, the object of interest; otherwise, the viewer is required to voluntarily squint outwards (exotropia).
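The sign rule above can be checked with a small top-view pinhole model. The sketch below assumes ideal pinhole cameras, an object on the center line, and no lens distortion; `image_x` is a hypothetical helper, not part of the authors' software. A negative return value means the object lands left of the image midline, a positive value right of it.

```python
import math


def image_x(cam_x: float, conv_depth: float, obj_depth: float) -> float:
    """Normalized horizontal image coordinate of an object on the center line.

    Top view: the camera sits at (cam_x, 0) and is toed in towards the
    convergence point (0, conv_depth); the object is at (0, obj_depth).
    Negative = left of the image midline, positive = right of it.
    """
    # Unit forward (optical-axis) vector from the camera to the convergence point.
    fx, fy = -cam_x, conv_depth
    norm = math.hypot(fx, fy)
    fx, fy = fx / norm, fy / norm
    # Unit "right" vector: the forward vector rotated 90 degrees clockwise.
    rx, ry = fy, -fx
    # Vector from the camera to the object.
    vx, vy = -cam_x, obj_depth
    # Pinhole projection: lateral component divided by depth along the optical axis.
    return (vx * rx + vy * ry) / (vx * fx + vy * fy)


half_baseline = 50.0  # 100 mm baseline
# Convergence at 1100 mm: in front of, behind, and on the object, respectively.
for obj in (1400.0, 800.0, 1100.0):
    left = image_x(-half_baseline, 1100.0, obj)
    right = image_x(half_baseline, 1100.0, obj)
    print(f"object at {obj:.0f} mm: left {left:+.4f}, right {right:+.4f}")
```

With the object at 1400 mm the left camera gives a negative value and the right camera a positive one (Figure 1A); at 800 mm both signs flip (Figure 1B); at 1100 mm both values are zero, i.e., the object sits on the midline (Figure 1C).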

The data was collected using a dual-arm collaborative industrial robot to position the cameras (Figure 2A-B). The robot weighs 38 kg without equipment. The robot is intrinsically safe: when it detects an unexpected impact, it stops moving. The robot was programmed to position the 5-megapixel cameras with C-mount lenses along an arc-shaped trajectory, stopping at predetermined baseline distances (Figure 2C). The cameras were attached to the robot hands using adaptor plates, as shown in Figure 3. Each camera recorded at 25 frames per second. The lenses were set to f/8, with focus fixed on the object of interest (the approximate geometric center of the heart). Every image frame had a timestamp, which was used to synchronize the two video streams.
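Pairing frames by timestamp can be done with a nearest-neighbor match. The sketch below is an illustration only: it assumes timestamps in seconds stored in two sorted lists, and the helper `pair_frames` and its half-frame tolerance (20 ms at 25 frames per second) are assumptions, not taken from the authors' recording application.

```python
from bisect import bisect_left
from typing import List, Tuple

FRAME_PERIOD_S = 1 / 25  # both cameras recorded at 25 frames per second


def pair_frames(left_ts: List[float], right_ts: List[float],
                tolerance_s: float = FRAME_PERIOD_S / 2) -> List[Tuple[int, int]]:
    """Pair each left frame with the nearest-in-time right frame.

    Both timestamp lists (seconds) must be sorted. Pairs further apart than
    `tolerance_s` are dropped, and each right frame is used at most once.
    """
    pairs: List[Tuple[int, int]] = []
    used = set()
    for i, t in enumerate(left_ts):
        j = bisect_left(right_ts, t)
        # The nearest right frame is either just before or just after t.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(right_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(right_ts[k] - t))
        if best not in used and abs(right_ts[best] - t) < tolerance_s:
            pairs.append((i, best))
            used.add(best)
    return pairs


left = [0.000, 0.040, 0.080, 0.120]
right = [0.001, 0.041, 0.095, 0.200]
print(pair_frames(left, right))  # the last left frame has no partner within 20 ms
```

Dropping frames that have no close partner, rather than pairing them anyway, avoids the blur that unsynchronized streams produce in the stereo video.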

The offsets between the robot wrist and the camera were calibrated. This can be achieved by aligning the crosshairs of the camera images, as shown in Figure 4. In this setup, the total translational offset between the mounting point on the robot wrist and the center of the camera image sensor was 55.3 mm in the X-direction and 21.2 mm in the Z-direction (Figure 5). The rotational offsets were calibrated at a convergence distance of 1100 mm and a baseline distance of 50 mm and adjusted manually with the joystick on the robot control panel. The robot in this study had a specified accuracy of 0.02 mm in Cartesian space and a rotational resolution of 0.01 degrees24. At a radius of 1100 mm, an angle difference of 0.01 degrees offsets the center point by 0.2 mm. During the full robot motion from 50 to 240 mm separation, the crosshair of each camera stayed within 2 mm of the ideal center of convergence.
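The 0.2 mm figure follows from small-angle geometry: a rotation error of Δθ moves the optical axis sideways by R·tan(Δθ) at distance R. The sketch also converts the 2 mm crosshair tolerance into image pixels with a pinhole model. The 3.45 µm pixel pitch is an assumption based on the Sony IMX250 sensor family and is not stated in this article; the 35 mm focal length comes from the Materials table.

```python
import math

FOCAL_LENGTH_MM = 35.0     # Tamron M112FM35 lens (Materials table)
PIXEL_PITCH_MM = 0.00345   # assumption: 3.45 um pixels (Sony IMX250), not stated in the article


def lateral_offset_mm(distance_mm: float, angle_deg: float) -> float:
    """Sideways shift of the optical axis at `distance_mm` caused by a rotation of `angle_deg`."""
    return distance_mm * math.tan(math.radians(angle_deg))


def size_in_pixels(size_mm: float, distance_mm: float,
                   focal_mm: float = FOCAL_LENGTH_MM,
                   pitch_mm: float = PIXEL_PITCH_MM) -> float:
    """Pinhole-model image size, in pixels, of an object of `size_mm` at `distance_mm`."""
    return size_mm * focal_mm / (distance_mm * pitch_mm)


print(f"{lateral_offset_mm(1100, 0.01):.2f} mm")  # shift caused by 0.01 degrees at 1100 mm
print(f"{size_in_pixels(2.0, 1100):.1f} px")       # 2 mm crosshair tolerance at 1100 mm
```

Under these assumptions the first line prints about 0.19 mm, consistent with the 0.2 mm quoted above, and the 2 mm tolerance corresponds to roughly 18 pixels at 1100 mm, which gives a rough pixel budget when checking crosshair alignment on screen.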

The baseline distance was increased stepwise by separating the cameras symmetrically around the center of the field of view, in increments of 10 mm from 50 to 240 mm (Figure 2). The cameras were kept stationary for 5 s at each position and moved between positions at a velocity of 50 mm/s. The convergence point could be adjusted in the X and Z directions using a graphical user interface (Figure 6), and the robot followed accordingly within its working range.
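The stepping can be sketched by assuming that both cameras sit on a circle of radius R centered on the convergence point, mirrored about the center line. Under that assumption, each camera is offset D/2 sideways and toed in by asin(D/(2R)). All names below are hypothetical, and mapping the labels A-T (used when labeling the video during postprocessing) to baselines in ascending order is also an assumption.

```python
import math
import string
from typing import Dict

BASELINES_MM = list(range(50, 241, 10))   # 50, 60, ..., 240 mm: 20 positions
CONVERGENCE_MM = (1100, 1400)             # the two convergence distances

# Hypothetical mapping of video labels A..T to baselines, assuming ascending order.
LABELS: Dict[str, int] = dict(zip(string.ascii_uppercase, BASELINES_MM))


def right_camera_pose(baseline_mm: float, radius_mm: float) -> Dict[str, float]:
    """Pose of the right camera on an arc of radius `radius_mm` around the
    convergence point; the left camera mirrors it (x -> -x, same toe-in)."""
    half = baseline_mm / 2
    return {
        "x_mm": half,                                             # sideways offset from the center line
        "z_mm": math.sqrt(radius_mm**2 - half**2),                # baseline-to-convergence-point distance (z in the text)
        "toe_in_deg": math.degrees(math.asin(half / radius_mm)),  # inward rotation of the camera
    }


for R in CONVERGENCE_MM:
    pose = right_camera_pose(BASELINES_MM[-1], R)
    print(R, {k: round(v, 1) for k, v in pose.items()})
```

Twenty baselines map exactly onto the twenty letters A to T, and two convergence distances give the 40 positions recorded per series.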

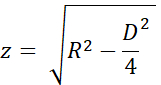

The accuracy of the convergence point was estimated using similar triangles and the variable names in Figure 7A and B. The height 'z' was calculated from the convergence distance 'R' and the baseline distance 'D' with the Pythagorean theorem as

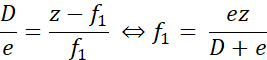

When the real convergence point was closer than the desired point, as shown in Figure 7A, the error distance 'f1' was calculated as

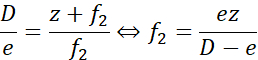

Similarly, when the convergence point was distal to the desired point, the error distance 'f2' was calculated as

$$f_2 = \frac{e\,z}{D - e}$$

Here, 'e' was the maximum separation between the crosshairs, which was at most 2 mm at the maximum baseline separation during calibration (D = 240 mm). For R = 1100 mm (z = 1093 mm), the error was less than ±9.2 mm. For R = 1400 mm (z = 1395 mm), the error was within ±11.7 mm. That is, the convergence point was placed within 1% of the desired convergence distance. The two test distances of 1100 mm and 1400 mm were therefore well separated.
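The two error bounds can be reproduced numerically. The sketch assumes the similar-triangle relations f1 = e·z/(D + e) (real convergence point too close) and f2 = e·z/(D - e) (real convergence point too far), a reading of the construction in Figure 7 that reproduces the values quoted above; `convergence_errors` is a hypothetical helper.

```python
import math
from typing import Tuple


def convergence_errors(R: float, D: float, e: float) -> Tuple[float, float, float]:
    """Return (z, f1, f2) in mm for convergence distance R, baseline D and
    crosshair separation e, using similar triangles."""
    z = math.sqrt(R**2 - (D / 2) ** 2)  # Pythagorean height, as in the text
    f1 = e * z / (D + e)                # real convergence point closer than desired
    f2 = e * z / (D - e)                # real convergence point beyond the desired one
    return z, f1, f2


for R in (1100, 1400):
    z, f1, f2 = convergence_errors(R, D=240, e=2)
    print(f"R={R}: z={z:.1f} mm, f1={f1:.1f} mm, f2={f2:.1f} mm, "
          f"worst case {100 * f2 / R:.2f}% of R")
```

The larger bound, f2, comes out at about 9.2 mm for R = 1100 mm and 11.7 mm for R = 1400 mm, both below 1% of R.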

Protocol

The experiments were approved by the local Ethics Committee in Lund, Sweden. The participation was voluntary, and the patients' legal guardians provided informed written consent.

1. Robot setup and configuration

NOTE: This experiment used a dual-arm collaborative industrial robot and the standard control panel with a touch display. The robot is controlled with the RobotWare 6.10.01 controller software and the robot integrated development environment (IDE) RobotStudio 2019.525. The software developed by the authors, including the robot application, recording application, and postprocessing scripts, is available in the GitHub repository26.

CAUTION: Use protective eyeglasses and reduced speed during setup and testing of the robot program.

- Mount the robot to the ceiling or a table using bolts dimensioned for 100 kg, as described on page 25 of the product specification24, following the manufacturer's specifications. Ensure that the arms can move freely and that the line of sight to the field of view is unobscured.

CAUTION: Use a lift or safety ropes when mounting the robot in a high position.

- Start the robot by turning the start switch located at the base of the robot. Calibrate the robot by following the procedure described in the operating manual25, pages 47-56.

- Start the robot IDE on a Windows computer.

- Connect to the physical robot system (operating manual27, page 140).

- Load the code for the robot program and application libraries for the user interface to the robot:

- The robot code for a ceiling-mounted robot is in the folder Robot/InvertedCode, and for a table-mounted robot in Robot/TableMountedCode. For each of the files left/Data.mod, left/MainModule.mod, right/Data.mod, and right/MainModule.mod:

- Create a new program module (see operating manual27, page 318) with the same name as the file (Data or MainModule) and copy the file content into the new module.

- Press Apply in the robot IDE to save the files to the robot.

- Use File Transfer (operating manual27, page 346) to transfer the robot application files TpSViewStereo2.dll, TpsViewStereo2.gtpu.dll, and TpsViewStereo2.pdb, located in the FPApp folder, to the robot. After this step, the robot IDE is no longer needed.

- Press the Reset button on the back of the robot touch display (FlexPendant) to reload the graphical interface. The robot application Stereo2 will now be visible under the touch display menu.

- Install the recording application (Liveview) and the postprocessing application on an Ubuntu 20.04 computer by running the script install_all_linux.sh, located in the root folder of the GitHub repository.

- Mount each camera to the robot. The components needed for mounting are displayed in Figure 3A.

- Mount the lens to the camera.

- Mount the camera to the camera adaptor plate with three M2 screws.

- Mount the circular mounting plate to the camera adaptor plate with four M6 screws on the opposite side of the camera.

- Repeat steps 1.9.1-1.9.3 for the other camera. The resulting assemblies are mirrored, as shown in Figure 3B and Figure 3C.

- Mount the adaptor plate to the robot wrist with four M2.5 screws, as shown in Figure 3D.

- For a ceiling-mounted robot: attach the left camera in Figure 3C to the left robot arm as shown in Figure 2A.

- For a table-mounted robot: attach the left camera in Figure 3C to the right robot arm.

- Connect the USB cables to the cameras, as shown in Figure 3E, and to the Ubuntu computer.

2. Verify the camera calibration

- On the robot touch display, press the Menu button and select Stereo2 to start the robot application. This will open the main screen, as shown in Figure 6A.

- On the main screen, press Go to start for 1100 mm in the robot application and wait for the robot to move to the start position.

- Remove the protective lens caps from the cameras and connect the USB cables to the Ubuntu computer.

- Place a printed calibration grid (CalibrationGrid.png in the repository) 1100 mm from the camera sensors. To make it easier to identify corresponding squares, place a small nut or a mark near the center of the grid.

- Start the recording application on the Ubuntu computer (run the script start.sh located in the liveview folder of the GitHub repository). This starts the interface shown in Figure 4.

- Adjust the aperture and focus with the aperture and focus rings on the lens.

- In the recording application, check Crosshair to visualize the crosshairs.

- In the recording application, ensure that the crosshairs align with the calibration grid in the same position in both camera images, as shown in Figure 4. Most likely, some adjustment will be required as follows:

- If the crosshairs do not overlap, press the Gear icon (bottom left, Figure 6A) in the robot application on the robot touch display to open the settings screen, as shown in Figure 6B.

- Press 1. Go to Start Pos, as shown in Figure 6B.

- Jog the robot with the joystick to adjust the camera position (operating manual page 3123).

- Update the tool position for each robot arm. Press 3. Update Left Tool and 4. Update Right Tool to save the calibration for the left and the right arm, respectively.

- Press the Back Arrow icon (top right, Figure 6B) to return to the main screen.

- Press Run Experiment (Figure 6A) in the robot application and verify that the crosshairs stay aligned. If they do not, repeat steps 2.3-2.3.5.

- Add and test any changes to the distances and/or timing at this point. This requires changes to the robot program code and advanced robot programming skills. In the Data module of the left task (arm), change the desired separation distances in the integer array variable Distances, the convergence distances in the integer array ConvergencePos, and the time at each step in the variable Nwaittime (value in seconds).

CAUTION: Never run an untested robot program during live surgery.

- When the calibration is complete, press Raise to raise the robot arms to the standby position.

- Optionally turn off the robot.

NOTE: The procedure can be paused between any of the steps above.
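Before editing Distances, ConvergencePos, or Nwaittime, it can help to estimate how long the surgery must be paused. The sketch below mirrors those three robot-program variables as Python arguments (the Python names are hypothetical) and assumes that all baselines are visited in order for each convergence distance, that the cameras follow an arc around a fixed convergence point, and that they travel in straight lines at the 50 mm/s stated above, with acceleration ignored. It is a rough planning aid, not a model of the actual robot program.

```python
import math
from typing import Sequence


def estimate_run_time_s(distances_mm: Sequence[float],
                        convergence_mm: Sequence[float],
                        wait_s: float = 5.0,
                        speed_mm_s: float = 50.0) -> float:
    """Rough run time: standstill at every (convergence, baseline) position plus
    straight-line travel of one camera between consecutive positions.
    Both cameras move simultaneously, so one camera's path sets the travel time."""
    positions = []
    for R in convergence_mm:          # assumed order: all baselines per convergence distance
        for D in distances_mm:
            half = D / 2
            # Camera on a circle of radius R around the fixed convergence point.
            positions.append((half, math.sqrt(R**2 - half**2)))
    standstill_s = wait_s * len(positions)
    travel_mm = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    return standstill_s + travel_mm / speed_mm_s


t = estimate_run_time_s(range(50, 241, 10), (1100, 1400), wait_s=5)
print(f"about {t:.0f} s ({t / 60:.1f} min)")
```

With the values used in this study, the standstill time alone is 40 × 5 s = 200 s, the 3 min 20 s pause; under these assumptions the travel adds roughly 10 s, mostly from the jump between the two convergence distances.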

3. Preparation at the start of the surgery

- Dust the robot.

- If the robot was turned off, start it by turning on the Start switch located at the base of the robot.

- Start the robot application on the touch display and the recording application, as described in steps 2.1 and 2.2.

- In the recording application, create and select the folder in which to save the video (press Change Folder).

- In the robot application, press the gear icon and position the cameras relative to the patient: adjust the X and Z directions by pressing +/- for Hand Distance from Robot and Height, respectively, until the image captures the surgical field. Position the cameras in the Y-direction by manually moving the robot or the patient.

NOTE: The preparations can be paused between the preparation steps 3.1-3.4.

4. Experiment

CAUTION: All personnel should be informed about the experiment beforehand.

- Pause the surgery.

- Inform the OR personnel that the experiment has started.

- Press Record in the recording application.

- Press Run experiment in the robot application.

- Wait while the program is running; the robot displays "Done" in the robot application on the touch display when finished.

- Stop recording in the recording application by pressing Quit.

- Inform the OR personnel that the experiment has finished.

- Resume surgery.

NOTE: The experiment cannot be paused during steps 4.1-4.6.

5. Repeat

- Repeat steps 4.1-4.6 to capture another sequence in the same surgery, and steps 3.1-3.4 followed by steps 4.1-4.6 to capture sequences from different surgeries. Capture around ten full sequences.

6. Postprocessing

NOTE: The following steps can be carried out using most video editing software or the provided scripts in the postprocessing folder.

- Debayer the video, since it is saved in RAW format:

- Run the script postprocessing/debayer/run.sh to open the debayer application shown in Figure 8A.

- Press Browse Input Directory and select the folder with the RAW video.

- Press Browse Output Directory and select a folder for the resulting debayered and color-adjusted video files.

- Press Debayer! and wait until the process is finished (both progress bars are full, as shown in Figure 8B).

- Merge the synchronized right and left videos into 3D stereo format28:

- Run the script postprocessing/merge_tb/run.sh to start the merge application; it opens the graphical user interface shown in Figure 8C.

- Press Browse Input Directory and select the folder with the debayered video files.

- Press Browse Output Directory and select a folder for the resulting merged 3D stereo file.

- Press Merge! and wait until the finish screen in Figure 8D is shown.

- Use off-the-shelf video editing software, such as Premiere Pro, to add a text label for each camera distance in the video.

NOTE: In the video, there is a visible shake every time the robot moves to increase the camera distance. In this experiment, the labels A-T were used for the camera distances.
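To illustrate what the debayer step does, the sketch below demosaics a single RAW frame with the simple "superpixel" method, in which each 2 × 2 Bayer block becomes one RGB pixel at half resolution. This is a swapped-in illustration, not the algorithm in postprocessing/debayer: the RGGB pattern order is an assumption (check the camera documentation), and the color adjustment performed by the provided tool is omitted.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def debayer_superpixel_rggb(raw: List[List[int]]) -> List[List[Pixel]]:
    """Half-resolution 'superpixel' demosaic of an RGGB Bayer frame.

    Every 2x2 block   R G
                      G B
    becomes one (R, G, B) pixel; the two green samples are averaged.
    """
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("raw frame must have even width and height")
    out: List[List[Pixel]] = []
    for y in range(0, h, 2):
        row: List[Pixel] = []
        for x in range(0, w, 2):
            r = float(raw[y][x])
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2
            b = float(raw[y + 1][x + 1])
            row.append((r, g, b))
        out.append(row)
    return out


frame = [[10, 20, 11, 21],
         [22, 30, 23, 31]]
print(debayer_superpixel_rggb(frame))
```

The superpixel method trades resolution for simplicity; full-resolution interpolating demosaicers avoid the halving.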

7. Evaluation

- Display the video in top-bottom 3D format with an active 3D projector.

- The viewing experience depends on the viewing angle and the distance to the screen; evaluate the video with the intended audience and viewing setup.
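Top-bottom (frame-compatible) packing squeezes each view to half height and stacks both views in one frame, which the 3D projector then unpacks. The sketch below uses plain row-pair averaging on grayscale frames as a simple stand-in for the resampling done by postprocessing/merge_tb; the function names are hypothetical. The right-view-on-top order follows the evaluation video described in the Results, although some displays expect the left view on top.

```python
from typing import List

Row = List[int]


def halve_rows(img: List[Row]) -> List[Row]:
    """Halve the vertical resolution by averaging each pair of rows."""
    if len(img) % 2:
        raise ValueError("image height must be even")
    return [[(a + b) // 2 for a, b in zip(img[y], img[y + 1])]
            for y in range(0, len(img), 2)]


def pack_top_bottom(right: List[Row], left: List[Row]) -> List[Row]:
    """Frame-compatible top-bottom packing: each view squeezed to half height,
    right view on top and left view below; the output keeps the input size."""
    if len(right) != len(left) or len(right[0]) != len(left[0]):
        raise ValueError("views must have the same size")
    return halve_rows(right) + halve_rows(left)


right_view = [[10, 10], [20, 20]]
left_view = [[1, 1], [3, 3]]
print(pack_top_bottom(right_view, left_view))
```

Because the packed frame has the same size as one camera frame, it can be stored and played back as an ordinary 2D video until the display splits it.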

Results

An acceptable evaluation video in top-bottom stereoscopic 3D, with the right image placed at the top, is shown in Video 1. A successful sequence should be sharp, focused, and free of unsynchronized image frames. Unsynchronized video streams cause blur, as shown in Video 2. The convergence point should be centered horizontally, independent of the camera separation, as seen in Figure 9A,B. When the robot transitions between the posi...

Discussion

During live surgery, the total time used for 3D video data collection was limited to keep the patient safe. If the object is out of focus or overexposed, the data cannot be used. The critical steps are the camera tool calibration and setup (step 2). The camera aperture and focus cannot be changed once surgery has started, so the same lighting conditions and distance should be used during setup and surgery. The camera calibration in steps 2.1-2.4 must be carried out carefully to ensure that the heart ...

Disclosures

The authors have nothing to disclose.

Acknowledgements

The research was carried out with funding from Vinnova (2017-03728, 2018-05302 and 2018-03651), Heart-Lung Foundation (20180390), Family Kamprad Foundation (20190194), and Anna-Lisa and Sven Eric Lundgren Foundation (2017 and 2018).

Materials

| Name | Company | Catalog Number | Comments |
|---|---|---|---|
| 2 C-mount lenses (35 mm F2.1, 5 Mpixel) | Tamron | M112FM35 | Rated for 5 Mpixel |
| 3D glasses (DLP-link active shutter) | Celexon | G1000 | Any compatible 3D glasses can be used |
| 3D Projector | Viewsonic | X10-4K | Displays 3D in 1080, can be exchanged for other 3D projectors |
| 6 M2 x 8 screws | | | To attach the Ximea cameras to the camera adaptor plates |
| 8 M2.5 x 8 screws | | | To attach the circular mounting plates to the robot wrist |
| 8 M5 x 40 screws | | | To mount the robot |
| 8 M6 x 10 screws with flat heads | | | For attaching the circular mounting plate and the camera adaptor plates |
| Calibration checkerboard plate (25 by 25 mm) | | | Any standard checkerboard can be used, including a printed one, as long as the grid is clearly visible in the cameras |
| Camera adaptor plates, x2 | | | Designed by the authors in robot_camera_adaptor_plates.dwg, milled in aluminium |
| Circular mounting plates, x2 | | | Distributed with the permission of the designer Julius Klein and printed with ABS plastic on an FDM 3D printer. License Tecnalia Research & Innovation 2017. Attached as Mountingplate_ROBOT_SIDE_ NewDesign_4.stl |
| Fix focus USB cameras, x2 (5 Mpixel) | Ximea | MC050CG-SY-UB | With Sony IMX250LQR sensor |
| FlexPendant | ABB | 3HAC028357-001 | Robot touch display |
| Liveview | | | Recording application |
| RobotStudio | | | Robot integrated development environment (IDE) |
| USB3 active cables (10.0 m), x2 | | | Thumbscrew lock connector, waterproofed |
| YuMi dual-arm robot | ABB | IRB14000 | |

References

- Held, R. T., Hui, T. T. A guide to stereoscopic 3D displays in medicine. Academic Radiology. 18 (8), 1035-1048 (2011).

- van Beurden, M. H. P. H., IJsselsteijn, W. A., Juola, J. F. Effectiveness of stereoscopic displays in medicine: A review. 3D Research. 3 (1), 1-13 (2012).

- Luursema, J. M., Verwey, W. B., Kommers, P. A. M., Geelkerken, R. H., Vos, H. J. Optimizing conditions for computer-assisted anatomical learning. Interacting with Computers. 18 (5), 1123-1138 (2006).

- Takano, M., et al. Usefulness and capability of three-dimensional, full high-definition movies for surgical education. Maxillofacial Plastic and Reconstructive Surgery. 39 (1), 10 (2017).

- Triepels, C. P. R., et al. Does three-dimensional anatomy improve student understanding. Clinical Anatomy. 33 (1), 25-33 (2020).

- Beermann, J., et al. Three-dimensional visualisation improves understanding of surgical liver anatomy. Medical Education. 44 (9), 936-940 (2010).

- Battulga, B., Konishi, T., Tamura, Y., Moriguchi, H. The Effectiveness of an interactive 3-dimensional computer graphics model for medical education. Interactive Journal of Medical Research. 1 (2), (2012).

- Yammine, K., Violato, C. A meta-analysis of the educational effectiveness of three-dimensional visualization technologies in teaching anatomy. Anatomical Sciences Education. 8 (6), 525-538 (2015).

- Fitzgerald, J. E. F., White, M. J., Tang, S. W., Maxwell-Armstrong, C. A., James, D. K. Are we teaching sufficient anatomy at medical school? The opinions of newly qualified doctors. Clinical Anatomy. 21 (7), 718-724 (2008).

- Bergman, E. M., Van Der Vleuten, C. P. M., Scherpbier, A. J. J. A. Why don't they know enough about anatomy? A narrative review. Medical Teacher. 33 (5), 403-409 (2011).

- Terzić, K., Hansard, M. Methods for reducing visual discomfort in stereoscopic 3D: A review. Signal Processing: Image Communication. 47, 402-416 (2016).

- Fan, Z., Weng, Y., Chen, G., Liao, H. 3D interactive surgical visualization system using mobile spatial information acquisition and autostereoscopic display. Journal of Biomedical Informatics. 71, 154-164 (2017).

- Fan, Z., Zhang, S., Weng, Y., Chen, G., Liao, H. 3D quantitative evaluation system for autostereoscopic display. Journal of Display Technology. 12 (10), 1185-1196 (2016).

- McIntire, J. P., et al. Binocular fusion ranges and stereoacuity predict positional and rotational spatial task performance on a stereoscopic 3D display. Journal of Display Technology. 11 (11), 959-966 (2015).

- Kalia, M., Navab, N., Fels, S. S., Salcudean, T. A method to introduce & evaluate motion parallax with stereo for medical AR/MR. IEEE Conference on Virtual Reality and 3D User Interfaces. 1755-1759 (2019).

- Kytö, M., Hakala, J., Oittinen, P., Häkkinen, J. Effect of camera separation on the viewing experience of stereoscopic photographs. Journal of Electronic Imaging. 21 (1), 1-9 (2012).

- Moorthy, A. K., Su, C. C., Mittal, A., Bovik, A. C. Subjective evaluation of stereoscopic image quality. Signal Processing: Image Communication. 28 (8), 870-883 (2013).

- Yilmaz, G. N. A depth perception evaluation metric for immersive 3D video services. 3DTV Conference: The True Vision - Capture, Transmission and Display of 3D Video. 1-4 (2017).

- Lebreton, P., Raake, A., Barkowsky, M., Le Callet, P. Evaluating depth perception of 3D stereoscopic videos. IEEE Journal on Selected Topics in Signal Processing. 6, 710-720 (2012).

- López, J. P., Rodrigo, J. A., Jiménez, D., Menéndez, J. M. Stereoscopic 3D video quality assessment based on depth maps and video motion. EURASIP Journal on Image and Video Processing. 2013 (1), 62 (2013).

- Banks, M. S., Read, J. C., Allison, R. S., Watt, S. J. Stereoscopy and the human visual system. SMPTE Motion Imaging Journal. 121 (4), 24-43 (2012).

- Kytö, M., Nuutinen, M., Oittinen, P. Method for measuring stereo camera depth accuracy based on stereoscopic vision. Three-Dimensional Imaging, Interaction, and Measurement. 7864, 168-176 (2011).

- Kang, Y. S., Ho, Y. S. Geometrical compensation algorithm of multiview image for arc multi-camera arrays. Advances in Multimedia Information Processing. 2008, 543-552 (2008).

- Product Specification IRB 14000. DocumentID: 3HAC052982-001 Revision J. ABB Robotics. Available from: https://library.abb.com/en/results (2018).

- Operating Manual IRB 14000. Document ID: 3HAC052986-001 Revision F. ABB Robotics. Available from: https://library.abb.com/en/results (2019).

- GitHub repository. Available from: https://github.com/majstenmark/stereo2 (2021).

- Operating manual RobotStudio. Document ID: 3HAC032104-001 Revision Y. ABB Robotics. Available from: https://library.abb.com/en/results (2019).

- Won, C. S. Adaptive interpolation for 3D stereoscopic video in frame-compatible top-bottom packing. IEEE International Conference on Consumer Electronics. 2011, 179-180 (2011).

- Kim, S. K., Lee, C., Kim, K. T. Multi-view image acquisition and display. Three-Dimensional Imaging, Visualization, and Display. Javidi, B., Okano, F., Son, J. Y. Springer. New York. 227-249 (2009).

- Liu, F., Niu, Y., Jin, H. Keystone correction for stereoscopic cinematography. IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops. 2012, 1-7 (2012).

- Kang, W., Lee, S. Horizontal parallax distortion correction method in toed-in camera with wide-angle lens. 3DTV Conference: The True Vision - Capture, Transmission and Display of 3D Video. 2009, 1-4 (2009).

Copyright © 2025 MyJoVE Corporation. All rights reserved