Method Article

Measuring Attention and Visual Processing Speed by Model-based Analysis of Temporal-order Judgments

Summary

Temporal-order judgments can be used to estimate processing speed parameters and attentional weights and thereby to infer the mechanisms of attentional processing. This methodology can be applied to a wide range of visual stimuli and works with many attention manipulations.

Abstract

This protocol describes how to conduct temporal-order experiments to measure visual processing speed and the attentional resource distribution. The proposed method is based on a new and synergistic combination of three components: the temporal-order judgments (TOJ) paradigm, Bundesen's Theory of Visual Attention (TVA), and a hierarchical Bayesian estimation framework. The method provides readily interpretable parameters, which are supported by the theoretical and neurophysiological underpinnings of TVA. Using TOJs, TVA-based estimates can be obtained for a broad range of stimuli, whereas traditional paradigms used with TVA are mainly limited to letters and digits. Finally, the meaningful parameters of the proposed model allow for the establishment of a hierarchical Bayesian model. Such a statistical model allows assessing results in one coherent analysis both on the subject and the group level.

To demonstrate the feasibility and versatility of this new approach, three experiments are reported with attention manipulations in synthetic pop-out displays, natural images, and a cued letter-report paradigm.

Introduction

How attention is distributed in space and time is one of the most important factors in human visual perception. Objects that capture attention because of their conspicuity or importance are typically processed faster and with higher accuracy. In behavioral research, such performance benefits have been demonstrated in a variety of experimental paradigms. For instance, allocating attention to the target location speeds up the reaction in probe detection tasks1. Similarly, the accuracy of reporting letters is improved by attention2. Such findings show that attention enhances processing, but they remain hopelessly mute about how this enhancement is established.

The present paper shows that low-level mechanisms behind attentional advantages can be assessed by measuring the processing speed of individual stimuli in a model-based framework that relates the measurements to fine-grained components of attention. With such a model, the overall processing capacity and its distribution among the stimuli can be inferred from processing speed measurements.

Bundesen's Theory of Visual Attention (TVA)3 provides a suitable model for this endeavor. It is typically applied to data from letter report tasks. In the following, the fundamentals of TVA are explained and it is shown how they can be extended to model temporal-order judgment (TOJ) data obtained with (almost) arbitrary stimuli. This novel method provides estimates of processing speed and resource distribution which can be readily interpreted. The protocol in this article explains how to plan and conduct such experiments and details how the data can be analyzed.

As mentioned above, the usual paradigm in TVA-based modeling and estimation of attention parameters is the letter report task. Participants report the identities of a set of letters which is briefly flashed and typically masked after a varying delay. Among other parameters, the rate at which visual elements are encoded into visual short-term memory can be estimated. The method has been successfully applied to questions in fundamental and clinical research. For instance, Bublak and colleagues4 assessed which attentional parameters are affected in different stages of age-related cognitive deficits. In fundamental attention research, Petersen, Kyllingsbæk, and Bundesen5 used TVA to model the attentional dwell time effect, the observer's difficulty in perceiving the second of two targets at certain time intervals. A major drawback of the letter report paradigm is that it requires sufficiently overlearned and maskable stimuli. This requirement limits the method to letters and digits. Other stimuli would require heavy training of participants.

The TOJ paradigm requires neither specific stimuli nor masking. It can be used with any kind of stimuli for which the order of appearance can be judged. This extends the stimulus range to virtually anything that could be of interest, including direct cross-modal comparisons6.

Investigating attention with TOJs is based on the phenomenon of attentional prior entry, which is a measure of how much earlier an attended stimulus is perceived compared to an unattended one. Unfortunately, the usual method for analyzing TOJ data, fitting psychometric functions (such as cumulative Gaussian or logistic functions) to observer performance, cannot distinguish whether attention increases the processing rate of the attended stimulus or decreases the rate of the unattended stimulus7. This ambiguity is a major problem because whether the perception of a stimulus is truly enhanced or merely benefits from the withdrawal of resources from a competing stimulus is a question of both fundamental and practical relevance. For instance, for the design of human-machine interfaces it is highly relevant to know if increasing the prominence of one element works at the expense of another one.

The TOJ task usually proceeds as follows: A fixation mark is presented for a brief delay, typically a randomly drawn interval shorter than a second. Then, the first target is presented, followed after a variable stimulus onset asynchrony (SOA) by the second target. At negative SOAs, the probe, the attended stimulus, is shown first. At positive SOAs, the reference, the unattended stimulus, leads. At an SOA of zero, both targets are shown simultaneously.

Typically, presenting the target refers to switching the stimulus on. Under certain conditions, however, other temporal events, such as a flicker of an already present target or stimulus offsets, are used8.

In TOJs, responses are collected in an unspeeded manner, usually by keys mapped to the stimulus identities and presentation orders (e.g., if stimuli are squares and diamonds, one key indicates "square first" and another one "diamond first"). Importantly, for the evaluation, these judgments must be converted to "probe first" (or "reference first") judgments.

In the present work, a combination of the processing model of TVA and the TOJ experimental paradigm is used to eliminate the problems in either individual domain. With this method, readily interpretable speed parameters can be estimated for almost arbitrary visual stimuli, making it possible to infer how the observer's attention is allocated to competing visual elements.

The model is based on TVA's equations for the processing of individual stimuli, which are briefly explained in the following. The probability that one stimulus is encoded into visual short-term memory before the other is interpreted as the probability of judging this stimulus as appearing first. The individual encoding durations are exponentially distributed9:

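$$F(t) = \begin{cases} 1 - e^{-v_{x,i}\,(t - t_0)} & \text{if } t > t_0 \\ 0 & \text{otherwise} \end{cases} \tag{1}$$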

The maximum ineffective exposure duration t0 is a threshold before which nothing is encoded at all. According to TVA, the rate vx,i at which object x is encoded as member of a perceptual category i (such as color or a shape) is given by the rate equation,

$$v_{x,i} = \eta_{x,i}\,\beta_i\,\frac{w_x}{\sum_{z \in S} w_z}. \tag{2}$$

The strength of the sensory evidence that x belongs to category i is expressed in ηx,i, and βi is a decision bias for categorizing stimuli as members of category i. This is multiplied by attentional weights. Individual attentional weights wx are divided by the attentional weights of all objects in the visual field. Hence, the relative attentional weight is calculated as

$$w_x = \sum_{j \in R} \eta_{x,j}\,\pi_j \tag{3}$$

where R represents all categories and ηx,j represents the sensory evidence that object x belongs to category j. The value πj is called pertinence of category j and reflects a bias to make categorizations in j. The overall processing capacity C is the sum of all processing rates for all stimuli and categorizations. For a more detailed description of TVA, refer to Bundesen and Habekost's book9.
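As a rough numerical illustration of how the rate equation combines sensory evidence, decision bias, and relative attentional weight, the following Python sketch computes weights and one processing rate for two objects. All numbers, category names, and function names are hypothetical and chosen only for illustration; they are not taken from the experiments reported here.

```python
def attentional_weight(eta_x, pertinence):
    """Weight of one object: sum over categories j of eta(x, j) * pi_j."""
    return sum(eta_x[j] * pertinence[j] for j in pertinence)


def processing_rate(eta_xi, beta_i, w_x, all_weights):
    """Rate v(x, i): eta(x, i) * beta_i * w_x / (sum of the weights of all objects)."""
    return eta_xi * beta_i * w_x / sum(all_weights)


# Hypothetical pertinence values: "red" is task relevant, "green" is not.
pertinence = {"red": 1.0, "green": 0.2}

# Hypothetical sensory evidence that each object belongs to each category.
eta = {
    "target": {"red": 0.9, "green": 0.1},
    "distractor": {"red": 0.1, "green": 0.9},
}

weights = {x: attentional_weight(eta[x], pertinence) for x in eta}
relative = {x: w / sum(weights.values()) for x, w in weights.items()}

# Hypothetical evidence (in Hz) and bias for the reported category of the target.
v_target = processing_rate(eta_xi=40.0, beta_i=0.8,
                           w_x=weights["target"],
                           all_weights=weights.values())

print("weights:", weights)
print("relative weights:", relative)
print("v_target (Hz):", v_target)
```

In this sketch the target receives the larger share of the total weight because its evidence lies in the category with the higher pertinence, and its processing rate scales accordingly.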

In our novel method, Equation 1, which describes the encoding of individual stimuli, is transformed into a model of TOJs. Assuming that selection biases and report categories are constant within an experimental task, the processing rates vp and vr of the two target stimuli probe (p) and reference (r) depend on C and the attentional weights in the form vp= C · wp and vr = C · wr, respectively. The new TOJ model expresses the success probability Pp1st that a participant judges the probe stimulus to be first as a function of the SOA and the processing rates. It can be formalized as follows:

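$$P_{p^{1st}}(v_p, v_r, \mathrm{SOA}) = \begin{cases} 1 - e^{-v_p\,|\mathrm{SOA}|} + e^{-v_p\,|\mathrm{SOA}|}\,\dfrac{v_p}{v_p + v_r} & \text{if } \mathrm{SOA} < 0 \\[2ex] e^{-v_r\,|\mathrm{SOA}|}\,\dfrac{v_p}{v_p + v_r} & \text{if } \mathrm{SOA} \geq 0 \end{cases} \tag{4}$$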

A more detailed account of how this equation is derived from the basic TVA equations is given by Tünnermann, Petersen, and Scharlau7.
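The TOJ model can be read as a race between two exponentially distributed encoding times: the leading stimulus may finish encoding during the SOA, and if it does not, both stimuli race from the onset of the second one. The following Python sketch implements this reading. The function name `p_probe_first`, the parameter values, and the assumption that the two relative weights sum to one (wr = 1 - wp) are illustrative choices, not part of the TVATOJ software.

```python
import math


def p_probe_first(soa, v_p, v_r):
    """Probability of a "probe first" judgment.

    soa: stimulus onset asynchrony; negative values mean the probe leads.
    v_p, v_r: processing rates of probe and reference (per unit of soa).
    """
    race = v_p / (v_p + v_r)  # chance that the probe wins a simultaneous race
    if soa < 0:
        # Probe leads: it may already be encoded before the reference appears.
        encoded_early = 1.0 - math.exp(-v_p * abs(soa))
        return encoded_early + (1.0 - encoded_early) * race
    # Reference leads (or both are simultaneous): the reference must not have
    # been encoded before the probe appears, and the probe must then win.
    return math.exp(-v_r * abs(soa)) * race


# Hypothetical parameters: C = 60 Hz expressed per millisecond, w_p = 0.6.
C = 0.06
w_p = 0.6
v_p, v_r = C * w_p, C * (1.0 - w_p)

for soa in (-100, -50, 0, 50, 100):
    print(soa, round(p_probe_first(soa, v_p, v_r), 3))
```

At an SOA of zero the function reduces to vp / (vp + vr), which equals wp under the assumption above, so the height of the curve at simultaneity reflects the attentional weight, whereas its steepness reflects C.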

For the sake of simplicity, the parameter t0 is omitted in the model in Equation 4. According to the original TVA, t0 should be identical for both targets in the TOJ task, and, therefore, it cancels out. However, this assumption may sometimes be violated (see section Discussion).

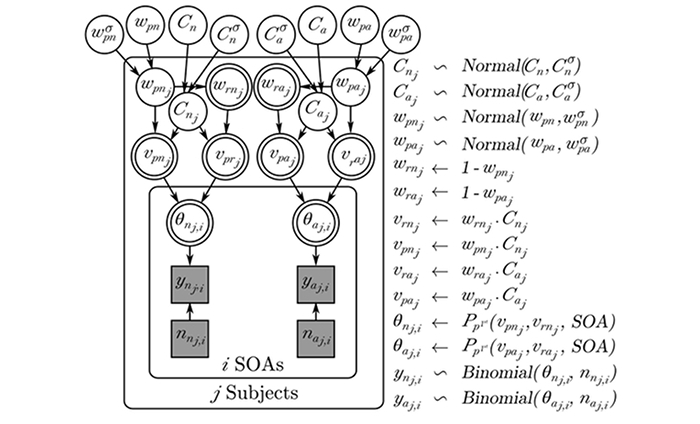

For fitting this equation to TOJ data, a hierarchical Bayesian estimation scheme11 is suggested. This approach allows the estimation of the attentional weights wp and wr of the probe and reference stimuli and the overall processing rate C. These parameters, the resulting uptake rates vp and vr, and attention-induced differences between them can be assessed on the subject and group levels along with estimated uncertainties. The hierarchical model is illustrated in Figure 1. During the planning stage for an experiment, a Bayesian power analysis can conveniently be conducted.

The following protocol describes how to plan, execute and analyze TOJ experiments from which processing speed parameters and attentional weights for visual stimuli can be obtained. The protocol assumes that the researcher is interested in how an attentional manipulation influences the processing speeds of some targets of interest.

Figure 1: Graphical model used in the Bayesian estimation procedure. Circles indicate estimated distributions; double circles indicate deterministic nodes. Squares indicate data. The relations are given on the right side of the figure. The nodes outside the rounded frames (“plates”) represent mean and dispersion estimates of TVA parameters (see Introduction) on the group level. In the “j Subjects” plate, it can be seen how attentional weights (w) are combined with the overall processing rates (C) to form stimulus processing rates (v) on the subject level. Plate “i SOAs” shows how these TVA parameters are then transformed (via the function Pp1st described in the Introduction) into the success probability (θ) for the binomially distributed responses at each SOA. Therefore, the θ together with the repetitions of the SOA (n) describe the data points (y). For more details on notation and interpretation of graphical models, refer to Lee and Wagenmakers23. Note that for the sake of clarity, the nodes that represent differences of parameters have been omitted. These deterministic parameters are indicated in the figures of the experimental results instead.
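The generative structure of the graphical model can be sketched by running it forward: group-level means and dispersions produce subject-level C and w, these determine v, the TOJ function maps v and SOA to θ, and the counts y are binomial draws. The Python sketch below does this with the standard library only. The normal distributions on the group level, the clipping of parameters, the assumption wr = 1 - wp, and all numbers are assumptions made for illustration; the distributions actually used in the model may differ.

```python
import math
import random


def p_probe_first(soa, v_p, v_r):
    """Success probability theta for a "probe first" judgment (negative soa: probe leads)."""
    race = v_p / (v_p + v_r)
    if soa < 0:
        early = 1.0 - math.exp(-v_p * abs(soa))
        return early + (1.0 - early) * race
    return math.exp(-v_r * abs(soa)) * race


def simulate_group(n_subjects, soas, n_reps, mu_c, sd_c, mu_w, sd_w, rng):
    """Forward-simulate "probe first" counts for each subject and SOA."""
    data = []
    for _ in range(n_subjects):
        # Subject-level parameters drawn from (assumed) normal group distributions.
        c_j = max(1e-4, rng.gauss(mu_c, sd_c))
        w_j = min(0.99, max(0.01, rng.gauss(mu_w, sd_w)))
        v_p, v_r = c_j * w_j, c_j * (1.0 - w_j)  # deterministic nodes
        counts = []
        for soa in soas:
            theta = p_probe_first(soa, v_p, v_r)
            # Binomial draw: number of "probe first" judgments in n_reps trials.
            counts.append(sum(rng.random() < theta for _ in range(n_reps)))
        data.append({"C": c_j, "w_p": w_j, "y": counts})
    return data


soas = [-100, -66, -33, 0, 33, 66, 100]  # hypothetical SOAs in ms
group = simulate_group(n_subjects=5, soas=soas, n_reps=20,
                       mu_c=0.06, sd_c=0.01, mu_w=0.6, sd_w=0.05,
                       rng=random.Random(0))
for subject in group:
    print(round(subject["C"], 3), round(subject["w_p"], 3), subject["y"])
```

Estimation inverts this process: given the counts y, the sampler infers plausible subject-level and group-level parameter values.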

Protocol

NOTE: Some steps in this protocol can be accomplished using custom software provided (along with installation instructions) at http://groups.upb.de/viat/TVATOJ. In the protocol, this collection of programs and scripts is referred to as “TVATOJ”.

1. Selection of Stimulus Material

- Select stimuli according to the research question.

NOTE: In general, two targets are shown at different locations on the screen. Stimuli that have been used with the present method include, for example, shapes, digits, letters, singletons in pop-out displays, and objects in natural images. The latter three types were used in this protocol.

NOTE: Several different stimulus types are included in the TOJ plugin ("psylab_toj_stimulus" provided with TVATOJ) for the experiment builder OpenSesame12.

- When creating new stimulus types, make sure that the properties of interest have to be encoded for the judgment, either by making them important for the task or by selecting stimuli in which the properties of interest are encoded automatically (e.g., singletons in pop-out displays).

2. Power Estimation and Planning

- Perform a Bayesian power analysis by simulating data sets with the selected model, planned design (SOA distribution and repetitions), sample sizes, and hypothesized parameters. Estimate whether it is likely to reach the research goal (for instance, a certain difference in the parameters). If the power is not sufficient, alter the design by adding or shifting SOAs or repetitions and repeat the analysis.

- To use the provided TVATOJ software, open and edit the script "exp1-power.R". Follow the comments in the file to adjust it for the specific analysis. For general information on Bayesian power estimation refer to Kruschke13.
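The logic of a simulation-based power analysis can be sketched as follows: simulate many data sets from hypothesized parameters, analyze each one, and count how often the research goal is reached. The Python sketch below is a deliberately simplified stand-in for the hierarchical analysis in "exp1-power.R": it simulates a single observer, approximates the posterior on a parameter grid with a flat prior, and defines the goal as a posterior probability of at least 0.95 that wp exceeds 0.5. The function names, grid ranges, goal, the assumption wr = 1 - wp, and all numbers are hypothetical.

```python
import math
import random


def p_probe_first(soa, v_p, v_r):
    """Probability of a "probe first" judgment (negative soa: probe leads)."""
    race = v_p / (v_p + v_r)
    if soa < 0:
        early = 1.0 - math.exp(-v_p * abs(soa))
        return early + (1.0 - early) * race
    return math.exp(-v_r * abs(soa)) * race


def log_lik(counts, soas, n_reps, c, w):
    """Binomial log-likelihood of the counts (constant terms dropped)."""
    total = 0.0
    for soa, y in zip(soas, counts):
        theta = p_probe_first(soa, c * w, c * (1.0 - w))
        theta = min(max(theta, 1e-9), 1.0 - 1e-9)  # guard against log(0)
        total += y * math.log(theta) + (n_reps - y) * math.log(1.0 - theta)
    return total


def posterior_prob_w_above_half(counts, soas, n_reps, c_grid, w_grid):
    """Grid approximation of P(w_p > 0.5 | data) under a flat prior."""
    cells = [(w, log_lik(counts, soas, n_reps, c, w))
             for c in c_grid for w in w_grid]
    peak = max(ll for _, ll in cells)
    total = sum(math.exp(ll - peak) for _, ll in cells)
    above = sum(math.exp(ll - peak) for w, ll in cells if w > 0.5)
    return above / total


def estimate_power(n_sims, soas, n_reps, true_c, true_w, threshold=0.95, seed=1):
    """Fraction of simulated data sets in which the goal is reached."""
    rng = random.Random(seed)
    c_grid = [0.01 + 0.005 * i for i in range(30)]  # hypothetical range, per ms
    w_grid = [0.02 * i for i in range(1, 50)]
    hits = 0
    for _ in range(n_sims):
        counts = [sum(rng.random() < p_probe_first(s, true_c * true_w,
                                                   true_c * (1.0 - true_w))
                      for _ in range(n_reps))
                  for s in soas]
        if posterior_prob_w_above_half(counts, soas, n_reps, c_grid, w_grid) >= threshold:
            hits += 1
    return hits / n_sims


soas = [-100, -66, -33, 0, 33, 66, 100]  # hypothetical design
power = estimate_power(n_sims=20, soas=soas, n_reps=40, true_c=0.06, true_w=0.75)
print("estimated power:", power)
```

If the estimated power is too low, the design can be changed (more repetitions, additional or shifted SOAs) and the simulation repeated, mirroring the iterative procedure described in the protocol step.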

3. Specification or Programming of the Experiment

- Use an experiment builder or psychophysical presentation library to implement the experiment.

- To use the OpenSesame TOJ plugin provided in TVATOJ, drag the "psylab_toj_stimulus" plugin into a trial presentation loop. Alternatively, open the "simple-toj.osexp" example experiment in OpenSesame.

- Select the desired stimulus type from the dropdown menu "Stimulus type" in the psylab_toj_stimulus configuration. Follow the instructions in TVATOJ for adding new stimulus types if required.

- Specify the trials as described in the following steps.

- For every experimental condition, create trials with the planned SOAs. When using the psylab_toj_stimulus plugin and OpenSesame, add all varied factors as variables to the trial loop (e.g. "SOA").

- Add rows to the table to realize all factor combinations (e.g., seven SOAs, from -100 to 100 msec, crossed with the experimental conditions "attention" and "neutral"). Adjust the loop's "Repeat" attribute to create sufficient repetitions (see protocol step 2 for determining the distribution and repetition of SOAs).

NOTE: Typically, at most 800 trials can be presented within one hour. If more repetitions are needed, consider splitting the experiment into several sessions. Make sure that the "Order" attribute of the loop is set to "Random" before running the experiment.

- In the psylab_toj_stimulus plugin configuration, add placeholders (e.g. "[SOA]") for the varied factors in the respective fields. Enter constant values in the fields of factors that are not varied.

NOTE: Before running the experiment, make sure that accurate timing is guaranteed. If appropriate timing behavior of newer monitors was not verified, use CRT monitors and synchronize with the vertical retrace signal12.
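For researchers who implement the experiment in a presentation library rather than in the OpenSesame loop table, the factor crossing from the trial specification steps can be sketched in a few lines of Python. The intermediate SOA values, the number of repetitions, and the function name `build_trials` are hypothetical; only the seven SOAs between -100 and 100 msec and the two conditions follow the example given in the protocol.

```python
import itertools
import random


def build_trials(soas, conditions, repetitions, seed=None):
    """Cross all conditions with all SOAs, repeat each cell, and shuffle."""
    rng = random.Random(seed)
    trials = [{"condition": condition, "SOA": soa}
              for condition, soa in itertools.product(conditions, soas)
              for _ in range(repetitions)]
    rng.shuffle(trials)  # corresponds to setting the loop order to "Random"
    return trials


soas = [-100, -66, -33, 0, 33, 66, 100]  # seven SOAs in msec (spacing assumed)
trials = build_trials(soas, ["attention", "neutral"], repetitions=40, seed=42)

print(len(trials), "trials in total")
if len(trials) > 800:
    print("Consider splitting the experiment into several sessions.")
```

With these values the design yields 7 x 2 x 40 = 560 trials, which stays below the roughly 800 trials that fit into one hour.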

4. Experimental Procedure

- Welcoming and briefing of the participants

- Welcome the participants and inform them about the general form of the experiment (computer-based perception experiment). Inform the participants about the prospective duration of the experiment. Obtain the participants' informed consent to participate in the experiment.

- Ensure that the participants show normal or corrected-to-normal vision (optimally by conducting short vision tests). Some deficits, such as color blindness, may be tolerable if they do not interfere with the research question for the particular type of stimulus material.

- Provide a quiet booth where the experiment is conducted. Adjust the chair, chin rest, keyboard position, and so on, to ensure optimal viewing and response conditions for the experiment.

- Make the participants aware that the experiment requires attention and mental focus and can be fatiguing. Ask them to take short breaks when required. It is, however, equally important not to perform these simple tasks under strong attentional strain. Tell the participants that it is okay to make some errors.

- Instruction and warm-up

- Present onscreen instructions for the task, detailing the presentation sequence and response collection procedure. Inform the participants that the task is to report the order in which the targets arrived, and that this will be difficult in some trials. Ask the participants to report their first impression when they cannot tell the order for certain, and let them guess if they have no such impression at all.

NOTE: In the binary TOJs used here, there is no option to indicate the perception of simultaneity. To avoid excessive guessing, do not point out the presence of trials with simultaneously presented targets explicitly. Let these simply be difficult trials with the instructions outlined above.

- To avoid eye movements during the trials, ask participants to fixate a mark which is shown in the center of the screen. Ask them to rest their head on a chin rest.

- Ask the participants to take short breaks if necessary. Let them know when breaks are allowed and when they must be avoided (e.g., during the target presentation and before the response).

- Include a short training in which participants can get used to the task. To this end, present a random subset of the experimental trials (see protocol step 3.2).

NOTE: Because the task itself is rather simple, ten to twenty trials are usually sufficient. It can be advantageous to increase the participants' confidence in their performance in this task. This can be done by slowing down the presentation and providing feedback.

- Obtain the participants' confirmation that they have understood the task (let them explain it) and that they have no further questions.

- Running the main experiment

- Let the experimental software start with the presentation of the main trials. Leave the booth for the main experiment.

5. Model-based Analysis of the TOJ Data

- Convert the raw data files into counts of "probe first" judgments for every SOA. For instance, run the script "os2toj.py" provided with TVATOJ.

- Run the Bayesian estimation procedure to estimate the main parameters wp and C, the derived ones vp and vr and the differences of the parameters. For this purpose, run the script "run-evaluation.R" after editing it according to the instructions provided in the file.

- When the sampling has finished, the differences of interest for the research questions can be assessed. Examples can be found in the following section.
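The conversion in the first analysis step can be illustrated with a short Python sketch that maps identity-based responses (e.g., "square first") to "probe first" judgments and counts them per condition and SOA. This is not the "os2toj.py" script; the field names (`condition`, `SOA`, `probe`, `response_first`) and the example trials are hypothetical and would have to be adapted to the actual log file format.

```python
from collections import defaultdict


def count_probe_first(trials):
    """Return {(condition, SOA): (probe_first_count, n_trials)}."""
    counts = defaultdict(lambda: [0, 0])
    for trial in trials:
        key = (trial["condition"], trial["SOA"])
        counts[key][1] += 1
        # A judgment counts as "probe first" if the stimulus reported as
        # first is the one that served as the probe in this trial.
        if trial["response_first"] == trial["probe"]:
            counts[key][0] += 1
    return {key: tuple(value) for key, value in sorted(counts.items())}


# Hypothetical raw trials with identity-based responses.
raw = [
    {"condition": "attention", "SOA": -50, "probe": "square", "response_first": "square"},
    {"condition": "attention", "SOA": -50, "probe": "diamond", "response_first": "square"},
    {"condition": "attention", "SOA": 50, "probe": "square", "response_first": "diamond"},
    {"condition": "neutral", "SOA": 0, "probe": "diamond", "response_first": "diamond"},
]

table = count_probe_first(raw)
for (condition, soa), (y, n) in table.items():
    print(condition, soa, y, n)
```

The resulting pairs of counts and repetitions per SOA correspond to the data nodes y and n of the graphical model.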

Results

In the following, results obtained with the proposed method are reported. Three experiments measured the influence of different attentional manipulations with three highly different types of stimulus material. The stimuli are simple line segments in pop-out patterns, action space objects in natural images, and cued letter targets.

Experiment 1: Salience in pop-out displays

Experiment 1 aimed at measuring the influence of v...

Discussion

The protocol in this article describes how to conduct simple TOJs and fit the data with models based on fundamental stimulus encoding. Three experiments demonstrated how the results can be evaluated in a hierarchical Bayesian estimation framework to assess the influence of attention in highly different stimulus material. Salience in pop-out displays led to increased attentional weights. Also, increased weights were estimated for action space objects in natural images. However, due to the persisting advantage when spatial...

Disclosures

The authors have nothing to disclose.

Acknowledgements

Parts of this work have been supported by the German Research Foundation (DFG) via grants 1515/1-2 and 1515/6-1 to Ingrid Scharlau.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Personal Computer | | | |
| (Open Source) Experimentation and evaluation software | | | |

References

1. Posner, M. I. Orienting of attention. Quarterly Journal of Experimental Psychology. 32 (1), 3-25 (1980).

2. Van der Heijden, A., Wolters, G., Groep, J., Hagenaar, R. Single-letter recognition accuracy benefits from advance cuing of location. Perception & Psychophysics. 42 (5), 503-509 (1987).

3. Bundesen, C. A theory of visual attention. Psychological Review. 97 (4), 523-547 (1990).

4. Bublak, P., et al. Staged decline of visual processing capacity in mild cognitive impairment and Alzheimer's disease. Neurobiology of Aging. 32 (7), 1219-1230 (2011).

5. Petersen, A., Kyllingsbæk, S., Bundesen, C. Measuring and modeling attentional dwell time. Psychonomic Bulletin & Review. 19 (6), 1029-1046 (2012).

6. Vroomen, J., Keetels, M. Perception of intersensory synchrony: A tutorial review. Attention, Perception, & Psychophysics. 72 (4), 871-884 (2010).

7. Tünnermann, J., Petersen, A., Scharlau, I. Does attention speed up processing? Decreases and increases of processing rates in visual prior entry. Journal of Vision. 15 (3), 1-27 (2015).

8. Krüger, A., Tünnermann, J., Scharlau, I. Fast and conspicuous? Quantifying salience with the Theory of Visual Attention. Advances in Cognitive Psychology. 12 (1), 20 (2016).

9. Bundesen, C., Habekost, T. Principles of Visual Attention: Linking Mind and Brain. (2008).

10. Plummer, M. JAGS: A program for analysis of Bayesian graphical models using Gibbs sampling. Proceedings of the 3rd international workshop on distributed statistical computing. 124-125 (2003).

11. Kruschke, J. K., Vanpaemel, W., Busemeyer, J., Townsend, J., Wang, Z. J., Eidels, A. Bayesian estimation in hierarchical models. The Oxford Handbook of Computational and Mathematical Psychology. 279-299 (2015).

12. Mathôt, S., Schreij, D., Theeuwes, J. OpenSesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods. 44 (2), 314-324 (2012).

13. Kruschke, J. K. Doing Bayesian data analysis: A tutorial with R, JAGS, and Stan. (2015).

14. Rensink, R. A., O'Regan, J. K., Clark, J. J. To see or not to see: The need for attention to perceive changes in scenes. Psychological Science. 8 (5), 368-373 (1997).

15. Tünnermann, J., Krüger, N., Mertsching, B., Mustafa, W. Affordance estimation enhances artificial visual attention: Evidence from a change-blindness study. Cognitive Computation. 7 (5), 525-538 (2015).

16. Shore, D. I., Klein, R. M. The effects of scene inversion on change blindness. The Journal of General Psychology. 127 (1), 27-43 (2000).

17. Scharlau, I., Neumann, O. Temporal parameters and time course of perceptual latency priming. Acta Psychologica. 113 (2), 185-203 (2003).

18. Schneider, K. A., Bavelier, D. Components of visual prior entry. Cognitive Psychology. 47 (4), 333-366 (2003).

19. Scharlau, I., Neumann, O. Perceptual latency priming by masked and unmasked stimuli: Evidence for an attentional interpretation. Psychological Research. 67 (3), 184-196 (2003).

20. Shore, D. I., Spence, C., Klein, R. M. Visual prior entry. Psychological Science. 12 (3), 205-212 (2001).

21. Alcalá-Quintana, R., García-Pérez, M. A. Fitting model-based psychometric functions to simultaneity and temporal-order judgment data: MATLAB and R routines. Behavior Research Methods. 45 (4), 972-998 (2013).

22. Hoffman, M. D., Gelman, A. The No-U-turn sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. Journal of Machine Learning Research. 15 (1), 1593-1623 (2014).

23. Lee, M. D., Wagenmakers, E. J. Bayesian cognitive modeling: A practical course. (2014).

24. Vangkilde, S., Bundesen, C., Coull, J. T. Prompt but inefficient: Nicotine differentially modulates discrete components of attention. Psychopharmacology. 218 (4), 667-680 (2011).

25. Tünnermann, J., Scharlau, I. Peripheral Visual Cues: Their Fate in Processing and Effects on Attention and Temporal-order. Frontiers in Psychology. 7, 1442 (2016).
