Method Article

Eliciting and Analyzing Male Mouse Ultrasonic Vocalization (USV) Songs

Summary

Mice produce a complex multisyllabic repertoire of ultrasonic vocalizations (USVs). These USVs are widely used as readouts for neuropsychiatric disorders. This protocol describes some of the practices we learned and developed to consistently induce, collect, and analyze the acoustic features and syntax of mouse songs.

Abstract

Mice produce ultrasonic vocalizations (USVs) in a variety of social contexts throughout development and adulthood. These USVs are used for mother-pup retrieval 1, juvenile interactions 2, opposite- and same-sex interactions 3, 4, 5, and territorial interactions 6. For decades, investigators have used USVs as proxies to study neuropsychiatric and developmental or behavioral disorders 7, 8, 9, and more recently to understand the mechanisms and evolution of vocal communication among vertebrates 10. Within sexual interactions, adult male mice produce USV songs, which have some features similar to the courtship songs of songbirds 11. The use of such multisyllabic repertoires can increase potential flexibility and the information they carry, because they can be varied in how elements are organized and recombined, namely syntax. This protocol describes a reliable method to elicit USV songs from male mice in various social contexts, such as exposure to fresh female urine, anesthetized animals, and estrus females. This includes conditions to induce a large number of syllables from the mice. We reduce the recording of ambient noise with inexpensive sound chambers, and present a quantification method to automatically detect, classify, and analyze the USVs. The latter includes evaluation of call rate, vocal repertoire, acoustic parameters, and syntax. Various approaches to, and insights on, using playbacks to study an animal's preference for specific song types are described. These methods were used to describe acoustic and syntax changes across different contexts in male mice, and song preferences in female mice.

Introduction

Relative to humans, mice produce both low- and high-frequency vocalizations, the latter known as ultrasonic vocalizations (USVs), above our hearing range. USVs are produced in a variety of contexts, including mother-pup retrieval, juvenile interactions, and opposite- or same-sex adult interactions 4, 12. These USVs are composed of a diverse multisyllabic repertoire that can be categorized manually 9 or automatically 10, 11. The role of these USVs in communication has been under increasing investigation in recent years. This includes the use of USVs as readouts of mouse models of neuropsychiatric, developmental, or behavioral disorders 7, 8, and of internal motivational/emotional states 13. USVs are thought to convey reliable information about the emitter that is useful to the receiver 14, 15.

In 2005, Holy and Guo 11 advanced the idea that adult male mouse USVs are organized as a succession of multisyllabic call elements, or syllables, similar to those of songbirds. In many species, a multisyllabic repertoire allows the emitter to combine and order syllables in different ways to increase the potential information carried by the song. Variation in this syntax is believed to have ethological relevance for sexual behavior and mate preferences 16, 17. Subsequent studies showed that male mice are able to change the relative composition of the syllable types they produce before, during, and after the presence of a female 5, 18. That is, adult male mice use their USVs for courtship behavior, either to attract or maintain close contact with a female, or to facilitate mating 19, 20, 21. USVs are also emitted in male-male interactions, probably to convey social information during the interactions 4. To capture these changes in repertoires, scientists usually measure spectral features (acoustic parameters such as amplitude, frequencies, etc.), the number of USV syllables or calls, and the latency to the first USV. However, few actually look at the sequence dynamics of these USVs in detail 22. Recently, our group developed a novel method to measure dynamic changes in USV syllable sequences 23. We showed that syllable order within a song bout (i.e., the syntax) is not random, that it changes depending on social context, and that listening animals detect these changes as being ethologically relevant.

We note that many investigators studying animal communication do not attribute to the term "syntax" the exact same meaning as the syntax used in human speech. For animal communication studies, we simply mean an ordered, non-random sequence of sounds with some rules. For humans, in addition, specific sequences are known to have specific meanings. We do not know whether this is the case for mice.

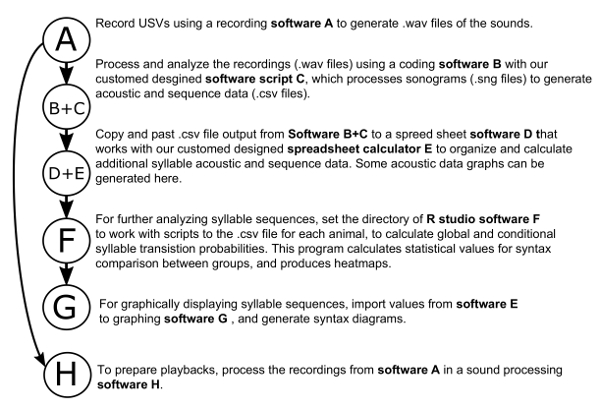

In this article and the associated video, we aim to provide reliable protocols to record male mice in various contexts and to perform playbacks. The use of three software programs, applied sequentially for 1) automated recordings, 2) syllable detection and coding, and 3) in-depth analysis of syllable features and syntax, is demonstrated (Figure 1). This allows us to learn more about mouse USV structure and function. We believe that such methods ease data analysis and may open new horizons in characterizing normal and abnormal vocal communication in mouse models of communication and neuropsychiatric disorders, respectively.

Protocol

Ethics Statement: All experimental protocols were approved by the Duke University Institutional Animal Care and Use Committee (IACUC) under protocol #A095-14-04. NOTE: See Table 1 in the "Materials and Equipment" section for details of the software used.

1. Stimulating and Recording Mouse USVs

- Preparation of the males before the recording sessions

NOTE: The representative results were obtained using young adult B6D2F1/J male mice (7-8 weeks old). This protocol can be adapted for any strain.

- Set the light cycle of the animal room to a 12-h light/dark cycle, unless otherwise required. Follow standard housing rules of 4 to 5 males per cage, unless otherwise required. Three days before the recording, expose the males individually to a sexually mature and receptive female (up to 3 males with one female per cage) of the same strain overnight.

- The next day, remove the female from the males' cage and house the males without females for at least two days before the first recording session to increase their social motivation to sing (based on our anecdotal trial-and-error analyses).

- Preparação das caixas de gravação

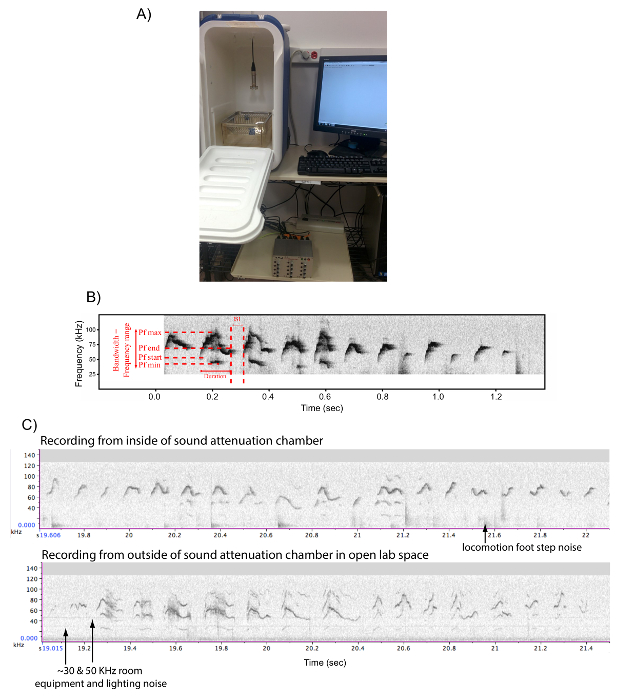

- Use um refrigerador de praia (dimensões internas são L 27 x W 23 x H 47 cm) para atuar como um estúdio caixa de atenuação de som ( Figura 2A ). Faça um pequeno furo na parte superior da caixa para deixar o fio do microfone rodar.

NOTA: É preferível gravar os animais em um ambiente sadio atenuado e isolado visualmente, de forma a gravar dezenas de camundongos de uma vez sem que eles se ouçam ou se vejam, evitem o ruído contaminante do equipamento do ambiente e as pessoas na sala e obtenham Gravações sonoras dos ratinhos ( Figura 2B ). Nós não observamos ecos de som ou distorções ao som ao comparar vocalizações do mesmo mouse dentro versus fora de tCâmara de atenuação de som ( Figura 2C ); Em vez disso, os sons podem ser mais altos e ter menos harmônicos dentro da câmara. - Conecte o microfone ao fio, o fio à placa de som ea placa de som ao computador para trabalhar com um software de gravação de som ( por exemplo, Software A na Figura 1 e Tabela 1 ). É necessário um software adequado de gravação de som, como o software A que gera arquivos de som .wav.

- Coloque uma gaiola vazia (58 x 33 x 40 cm) dentro da caixa insonorizada e ajuste a altura do microfone para que a membrana do microfone fique 35-40 cm acima do fundo da gaiola e que o microfone esteja centrado acima da gaiola ( Ver Figura 2A ).

- Configuration of recording software A (Table 1) for continuous recording

- Double-click to open Software A. Click and open the "Configuration" menu and select the named device, the sampling rate (250,000 Hz), and the format (16 bit).

- Select the "Trigger" key option and check "Toggle".

NOTE: This setting allows the recording to be started by pressing a key (F1, F2, etc.) while placing the stimulus in the mouse's cage.

- Enter the mouse ID under the "Name" parameter.

- Set the maximum file size to the desired number of minutes of recording (we usually set it to 5 min).

NOTE: The longer the recording, the more computer storage is required. If continuous mode is not set, the software will cut off song bouts at the beginning or end of a sequence based on the set parameters, and the sequences therefore cannot be quantified reliably.
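As a rough guide to the storage requirement mentioned in the note above, the size of an uncompressed recording can be estimated from the settings in this section (250,000 Hz, 16 bit, 5 min). The following is a minimal sketch in Python and is not part of Software A; the function name and the 44-byte header size (the canonical PCM .wav header) are assumptions made for illustration.

```python
def wav_size_bytes(sample_rate_hz, bits_per_sample, minutes,
                   channels=1, header_bytes=44):
    """Estimate the size of an uncompressed PCM .wav file.

    header_bytes=44 assumes the canonical PCM header; real files
    may carry extra metadata chunks and be slightly larger.
    """
    bytes_per_sample = bits_per_sample // 8
    n_seconds = int(minutes * 60)
    data_bytes = sample_rate_hz * bytes_per_sample * channels * n_seconds
    return data_bytes + header_bytes


# Settings from this protocol: 250,000 Hz, 16 bit, 5-min files.
size = wav_size_bytes(250_000, 16, 5)
print(f"{size / 1e6:.1f} MB per 5-min single-channel file")
```

With these settings, a single-channel 5-min file comes to about 150 MB, so storage needs scale quickly when several boxes are recorded in parallel.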

- Record USVs using the different stimuli. NOTE: Each stimulus can be used independently, depending on the experimental needs of the user.

- Gently lift the animal to be recorded by the tail and place it in a cage without bedding (to avoid movement noise on the bedding) inside the soundproof box, and put the open-wire metal lid of the cage on top of it, with the lid facing up.

- Close the sound-attenuated box and let the animal habituate to it for 15 min. Prepare the stimuli (1.4) during this time.

- Preparation of the stimuli

- Preparation of fresh urine (UR) samples as a stimulus

- Obtain urine samples no more than 5 min before the recording session to ensure the maximum effect in inducing song from the males. NOTE: Urine that has been sitting for longer, and especially for hours or overnight, is not as effective 24, 25, which we have verified empirically 23.

- Pick a female or male (depending on the sex of the stimulus to be used) from a cage, grab the skin behind the neck, and restrain the animal in one hand, as for a drug injection procedure, with the belly exposed.

- With a pair of tweezers, grab a cotton tip (3-4 mm long x 2 mm wide). Gently rub and push on the animal's bladder to extract a drop of fresh urine. Wipe the vagina of the female or the penis of the male to collect the whole drop on the cotton tip.

- Then select another cage and repeat the same procedure with another female or male, but with the same cotton tip used previously.

NOTE: This procedure ensures that urine from at least two females or males from two independent cages is mixed on the same cotton tip, to guard against any estrus or other individual effects, since the estrous cycle is known to influence singing behavior 18, 26.

- Place the cotton tip to be used in a clean glass or plastic petri dish.

NOTE: Since the cotton tip with urine has to be used within the next 5 min, there is no need to cover it to prevent evaporation.

- Preparation of the live female (FE) as a stimulus

- Select one or two new cages of sexually mature females. Identify females in the pro-estrus or estrus stage by visual inspection (wide vaginal opening and pink surround, as shown in 27, 28). Separate them into a different cage until use.

- Preparation of anesthetized female (AF) or male (AM) animals as a stimulus

- For the AF, select a female from the pool above (in pro-estrus or estrus). For the AM, select a male from a cage of adult male mice.

- Anesthetize the female or the male with an intraperitoneal injection of a Ketamine/Xylazine solution (100 and 10 mg/kg, respectively).

- Use eye ointment to prevent dehydration of the eyes while the animal is anesthetized. Verify proper anesthesia by testing the paw-withdrawal reflex upon pinching. Place the anesthetized animal in a clean cage on a paper towel, with the cage on a heating pad set to "minimum heat" to ensure control of body temperature.

- Reuse the same animals up to 2 to 3 times for different recording sessions, if necessary, before they wake up (usually after around 45 min). Put them back on the heating pad after each recording session.

- Monitor the respiratory rate by visual inspection (~60-80 breaths per min) and the body temperature by touching the animal every 5 min (it should be warm to the touch).

- When ready to record, click the "Record" button of Software A.

NOTE: The recordings will not start unless the user presses the key associated with each channel. Monitor the live audio feed from the cages on the computer screen to make sure that the animals sing and that the recordings are being properly obtained.

- Simultaneously, press the key associated with the desired box(es) to be recorded (i.e., F1 for box 1) and introduce the desired stimulus.

- Present one of the stimuli as follows: place the cotton tip with fresh urine samples inside the cage, or place the live female inside the cage, or place one of the anesthetized animals (AF or AM) on the metal lid of the cage.

- Quietly close the recording box and let the recording run for the preset number of minutes (e.g., 5 min, as described in section 1.3).

- After the recording, click the red square stop button to stop the recording.

- If an anesthetized animal was used as a stimulus, open the soundproof recording box, remove the anesthetized animal from the metal lid of the cage, and put it back on the heating pad before the next recording session; or, if the animal is not used as a stimulus again, monitor it every 15 min until it has regained sufficient consciousness to maintain sternal recumbency.

- Open the cage, remove the conscious test animal, and put it back in its home cage. Clean the test cage with 70% alcohol and distilled water.

2. Processing .wav Files and Coding Syllables Using Mouse Song Analyzer v1.3

- Open coding software B (Figure 1, Table 1) and put the folder containing the Software Script C "Mouse Song Analyzer" (Figure 1, Table 1) in the path of software B by clicking "set path" and adding the folder in software B. Then close software B to save.

- Configure the syllable identification settings of the combined software B + C. NOTE: The software script C code automatically creates a new folder named "sonograms", with files in .sng format, in the same folder where the .wav files are located. It is generally best to put all the .wav files from the same recording session generated using software A in the same folder.

- Open software B configured with C.

NOTE: This version of software B is fully compatible with software script C. We cannot guarantee that any later version will accept all the functions included in the current code.

- Navigate to the folder of interest containing the recording .wav files to be analyzed using the "Current Folder" window.

- In the "Command Window", enter the command "whis_gui".

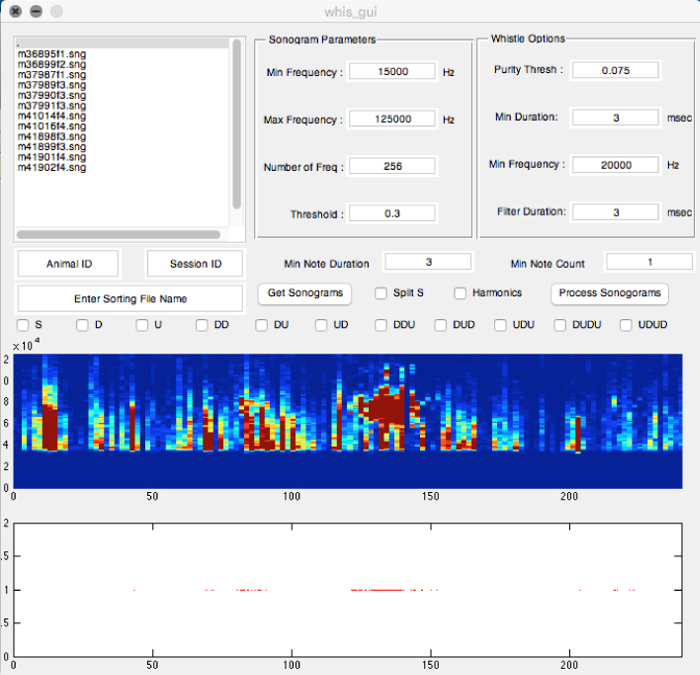

- In the new whis_gui window, observe several sub-window sections with different parameters (Figure 3), including "Sonogram Parameters", "Whistle Options", and all others. Adjust the parameters to detect USVs. Use the following parameter settings to detect USV syllables of laboratory mice (e.g., the B6D2F1/J and C57BL/6J mouse strains used in our studies):

- In the Sonogram Parameters section, adjust the Min Frequency to 15,000 Hz, the Max Frequency to 125,000 Hz, the Sampling Frequency (Frequency Number) to 256 kHz, and the Threshold to 0.3.

- In the Whistle Options section, adjust the Purity Threshold to 0.075, the Min Duration of the syllable to 3 ms, the Min Frequency Sweep to 20,000 Hz, and the Filter Duration to 3 ms.

- In the other sections, adjust the Min Note Duration to 3 ms and the Min Note Count to 1.

- For the syllable categorization protocol, select boxes in the middle section of the whis_gui window:

The default categorization is based on Holy and Guo 11 and Arriaga et al. 10, which codes syllables by the number of pitch jumps and the direction of each jump: S for a simple continuous syllable; D for one downward pitch jump; U for one upward pitch jump; DD for two sequential downward jumps; DU for one downward and one upward jump; etc. This is the default if the user does not select anything. The user has the option to run the analysis on particular syllable types by selecting each of the representative boxes.

To choose syllable categorization as described by Scattoni et al. 9, additionally select the Split s category, which separates this type into more sub-categories based on syllable shape.

Select Harmonics if the user wants to further classify syllables into those with and without harmonics.
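The default coding scheme described above can be illustrated with a short sketch. This is not the Mouse Song Analyzer code: the function names and the input format (a list of signed frequency jumps, in Hz, between consecutive notes of one syllable) are hypothetical and chosen only for illustration. The second function collapses the jump code into the four categories used later in this protocol ("s", "u", "d", and "m").

```python
def jump_label(jumps_hz):
    """Code one syllable by the direction of each pitch jump.

    jumps_hz: signed jumps between consecutive notes (hypothetical
    input format); positive = upward, negative = downward.
    An empty list means a simple continuous syllable ("S").
    """
    if not jumps_hz:
        return "S"
    if any(j == 0 for j in jumps_hz):
        raise ValueError("a pitch jump must be non-zero")
    return "".join("U" if j > 0 else "D" for j in jumps_hz)


def four_category(label):
    """Collapse a jump code into s, u, d, or m (two or more jumps)."""
    if label in ("S", "U", "D"):
        return label.lower()
    return "m"


# Illustrative values only, not measured data.
for jumps in ([], [-12_000], [15_000], [-12_000, 9_000]):
    code = jump_label(jumps)
    print(jumps, code, four_category(code))
```

In this sketch the size of the jump is ignored once its direction is known; the thresholds that decide what counts as a jump are set in the whis_gui parameters above.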

- Classify the syllables in the .wav files of interest

- Select all the .wav files from a recording session in the upper-left section of the whis_gui window.

- Click "Get sonograms" in the middle of the whis_gui window (Figure 3). A new folder containing the sonograms will be created, with the .sng file format. Select all the sonograms (.sng files) in the left corner of the whis_gui window. Enter the "Animal ID" and "Session ID" in the boxes below the sonogram file window. Then click "Process sonograms".

- Observe three file types in the sonogram folder: "Animal ID-Session ID -Notes.csv" (contains information about the notes extracted from the syllables), "Animal ID-Session ID -Syllables.csv" (contains values for all of the syllables, including their spectral features and the total number of syllables detected in the sonograms), and "Animal ID-Session ID -Traces.mat" (contains graphical representations of all the syllables).

NOTE: The "Animal ID-Session ID -Syllables" file sometimes contains a small percentage (2-16%) of unclassified USV syllables that are more complex than those selected or that contain overlapping syllables from two animals 23. These can be examined separately from the traces file, if necessary.

3. Quantification of Syllable Acoustic Structure and Syntax

NOTE: Instructions for the steps to take for the initial syntax analyses are included in the "READ ME!" worksheet of the "Song Analysis Guide v1.1.xlsx" file, our custom spreadsheet calculator E (Figure 1, Table 1).

- Open the software script C output file "Animal ID-Session ID -Syllables.csv" obtained in the section above with spreadsheet software D (Figure 1, Table 1). It contains the total number of syllables detected in all the sonograms, and all of their spectral features.

- If not already converted, use spreadsheet software D to convert this .csv file to column separation, putting each value into an individual column.

- Open the "Song Analysis Guide v1.1.xlsx" file in software D as well. Then click on the Template worksheet, and copy and paste the data from the "Animal ID-Session ID -Syllables.csv" file into this sheet as recommended in the instructions of the template sheet. Remove the rows with the "Unclassified" syllable category.

- After removing the 'Unclassified' rows, first copy the recalculated ISI (inter-syllable interval) data from column O into column E. Second, copy all the data from columns A to N into the 'Data' worksheet.

- In the Data worksheet, enter the animal IDs (column AF) and the length of the recording (in minutes, column AC).

NOTE: The animal IDs must match those entered in the recording setup. The file will detect the characters entered and compare them with the names of the .wav files.

- Determine the ISI cutoff for defining a sequence using the worksheet labeled "Density ISI", the result of the "ISI plot".

NOTE: In our previous study 23, we set the cutoff at two standard deviations from the center of the last peak. It consisted of long intervals (LI) of more than 250 ms, which separated different song bouts within a singing session.

- View the main results in the 'Features' worksheet (which groups all the measured spectral features of each animal).

NOTE: If the user used the default syllable category setting in software script C described in section 2.8.9, the syllables are classified into 4 categories as described above and in Chabout et al. 23: 1) simple syllables without any pitch jumps ("s"); 2) two-note syllables separated by a single upward jump ("u"); 3) two-note syllables separated by a single downward jump ("d"); and 4) more complex syllables with two or more pitch jumps between notes ("m").

- For syntax values, click on the "Global Probabilities" worksheet, which calculates the probability of each syllable-pair transition type regardless of the starting syllable, using the following equation 23.

P (occurrence of a transition type) = Total number of occurrences of a transition type / Total number of transitions of all types

- Click on the Conditional Probabilities worksheet to calculate the conditional probabilities for each transition type relative to the starting syllables, which uses the following equation:

P (occurrence of a transition type given the starting syllable) = Total number of occurrences of a transition type / Total number of occurrences of all transition types with the same starting syllable

- To test whether, and which of, the transition probabilities above differ from random, using a first-order Markov model and following the approach in 23, use custom software F (e.g., the Syntax decoder in RStudio; Figure 1, Table 1) with the following R scripts: Tests_For_Differences_In_Dynamics_Between_Contexts.R for within-group comparisons across different conditions, or Tests_For_Differences_In_Dynamics_Between_Genotypes.R for between-group comparisons in the same conditions.

- Run a Chi-squared test, or another test of your preference, to test for statistical differences in the transition probabilities of the same animal in different contexts (pair-wise), following the approach in 23.

NOTE: More details on the statistical model used to compare syntax between groups are found in 23. Researchers can use other approaches that they or others have developed to analyze the global or conditional transition probabilities.

- To display the sequences graphically as syntax diagrams, enter the values into network graphing software G (see Figure 1, Table 1), with nodes designating the different syllable categories and with arrow color and/or pixel thickness representing ranges of probability values between syllables.

NOTE: For clarity, for the global probability graphs, we only show transitions higher than 0.005 (higher than 0.5% of chance occurrence). For the conditional probabilities, we use a threshold of 0.05, because each probability in the "global model" is lower, considering that we divide by the total number of syllables and not just by one specific syllable type.
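The two probability definitions above, and the display thresholds from the preceding note, can be sketched in a few lines of Python. This is an illustration only and not the spreadsheet calculator E or the R scripts of software F; the function names and the example sequence are hypothetical, and it assumes that a "silence" label has already been inserted wherever the ISI exceeds the chosen cutoff.

```python
from collections import Counter


def transition_counts(labels):
    """Count each (from, to) pair of consecutive labels."""
    return Counter(zip(labels, labels[1:]))


def global_probabilities(counts):
    """Occurrences of a transition type / transitions of all types."""
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def conditional_probabilities(counts):
    """Occurrences of a transition type / all transitions that share
    the same starting syllable."""
    starts = Counter()
    for (first, _second), n in counts.items():
        starts[first] += n
    return {pair: n / starts[pair[0]] for pair, n in counts.items()}


def edges_to_draw(probabilities, threshold):
    """Keep only the transitions above the display threshold."""
    return {pair: p for pair, p in probabilities.items() if p > threshold}


# Hypothetical coded song, not real data.
song = ["silence", "s", "s", "d", "m", "s", "silence", "s", "u", "silence"]
counts = transition_counts(song)
global_p = global_probabilities(counts)
conditional_p = conditional_probabilities(counts)

print(edges_to_draw(global_p, 0.005))       # threshold for global graphs
print(edges_to_draw(conditional_p, 0.05))   # threshold for conditional graphs
```

Because the global probabilities share one denominator, they are always smaller than or equal to the corresponding conditional probabilities, which is why the note above uses a lower display threshold for the global graphs.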

4. Song Editing and Testing Preference for a Song Type

NOTE: Playbacks of USVs can be used to experimentally test an animal's behavioral response, including preference for a specific song type. Because female preferences can change depending on estrous state, make sure that the females are in the same estrous state before testing, as follows:

- Prepare the females with sexual experience several days before the playback experiment

- Expose sexually mature females (> 7 weeks) to a male for 3 days before the experiments to trigger estrus (Whitten effect 28), by placing them in a separation cage (transparent solid plastic with drilled holes) that allows the female to see and smell the male but prevents sexual intercourse.

- Monitor the estrous cycle and, when estrus or pro-estrus is evident (wide vaginal opening and pink surround, as shown in 27), put the females back into their own cage. They are ready to be tested the next day.

- Prepare the song files for playback

- Use the copy and paste functions in sound processing software H (e.g., SASLab Pro; Figure 1, Table 1) to create two edited .wav files with the desired conditions to be used as stimuli. To guard against the amount of vocalization acting as a variable, make sure that the two sound files contain the same number of syllables and the same length of sequences (song bouts) from the same or different males/females in the desired contexts (e.g., UR).

- Open the first file containing the song from condition 1 in software H. Then go to File > Specials > Add channel(s) from file and select the second sound file to test, from condition 2. This creates 2 channels, one for each condition.

- Adjust the volume visually, if necessary, to make sure that the volumes of the two files match each other, by going to Edit > Volume.

- Then go to Edit > Format > Sampling Frequency Conversion and select convert from 250,000 Hz to 1,000,000 Hz to transform the .wav files from a sampling frequency of 256 kHz to 1 MHz. This step is required for the playback device to read the .wav files.

- Save these new files as the test files to be played back. Make sure to identify which song is in which channel (1 or 2). For clarity, name the file "file_name.wav".

- Go to Edit > Format > Swap Channels and swap the two channels. Save the swapped version under a different name. NOTE: This will be the opposite copy with the channels inverted, later named 'file_name_swapped.wav'.

- Prepare o aparelho de reprodução

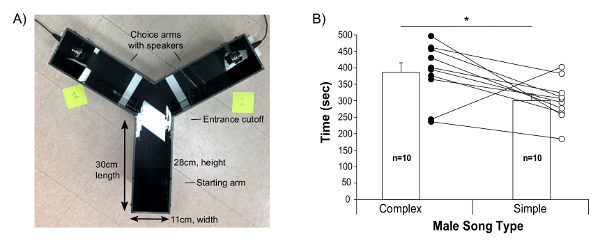

- Limpe o aparelho de reprodução "Y-labirinto" com álcool a 70% seguido de água destilada. Secá-lo com toalhas de papel. Nosso labirinto em Y é um aparelho de plástico preto sólido opaco caseiro, com braços de 30 cm de comprimento e dois furos nas extremidades do labirinto para permitir que o alto-falante de ultrassom encaixe ao nível do chão dos braços ( Figura 4A ).

- Certifique-se de que os alto-falantes estejam na posição correta e conectados à placa de som e o cartão esteja conectado ao computador.

- Abra o software A, selecione Reproduzir> Dispositivo e selecione o dispositivo de cartão de reprodução de som. Selecione "usar taxa de cabeçalho de arquivo". Vá para Play> Playlist e carregue o arquivo de interesse contendo os dois chaNnels ( ou seja, "file.wav"). Selecione "modo de loop". Configure o gravador de vídeo acima do labirinto para cobrir todo o labirinto.

- Run the playback experiment

- Place the test female in the maze for a 10-min habituation period. After 10 min, if the female is not in the starting arm, gently push her back to the starting arm and close the plastic separation window.

- Select the prepared file ("file.wav") and play it. Start the video recording and make sure to identify which channel is positioned in which arm of the Y-maze (i.e., UR in the left arm or FE in the right arm) with a paper note in the field of view of the video recording.

- Allow the female to hear the songs in loop mode and explore the maze for the desired number of minutes (i.e., 5 min): this is one session. Return the female to the starting arm. Allow her to rest for 1 min while preparing the next session. Remove any traces of urine and feces with distilled water.

- Load the "file_swapped.wav" file. Switch the location of the paper notes for the video recording (move the left one to the right and vice versa). After 1 min, play the file and free the female for the second session.

- Repeat steps 4.4.3 to 4.4.4 for a total of 4 sessions x 5 min to control for a potential side bias during the test. Stop the video recording at the end of all sessions by clicking the red stop button. Clean the maze with 70% alcohol and distilled water between females.

- Repeat all steps with different song exemplars one week later with the same females to obtain enough results to test the reliability of the findings.

- Afterwards, watch the videos and use the timer on the video and a stopwatch to measure the time spent by the females in each arm for each session. Analyze the resulting data statistically for possible song preferences.
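Because the channels are swapped between sessions, the time spent in each arm has to be reassigned to the song condition that arm was playing before the sessions are pooled. The following Python sketch shows one way to do this bookkeeping; it is not part of the original protocol, and the field names, the function name, and all the times are hypothetical values chosen for illustration.

```python
def time_by_condition(sessions):
    """Pool arm times across sessions by song condition and by side.

    Each session records which arm played condition 1 ("cond1_arm")
    and the seconds spent in the left and right arms.
    """
    totals = {"cond1": 0.0, "cond2": 0.0, "left": 0.0, "right": 0.0}
    for s in sessions:
        other_arm = "right" if s["cond1_arm"] == "left" else "left"
        totals["cond1"] += s[s["cond1_arm"]]
        totals["cond2"] += s[other_arm]
        totals["left"] += s["left"]
        totals["right"] += s["right"]
    return totals


# Four 5-min sessions for one female; made-up times in seconds.
sessions = [
    {"cond1_arm": "left", "left": 120.0, "right": 80.0},
    {"cond1_arm": "right", "left": 70.0, "right": 110.0},
    {"cond1_arm": "left", "left": 100.0, "right": 90.0},
    {"cond1_arm": "right", "left": 60.0, "right": 130.0},
]

totals = time_by_condition(sessions)
preference = totals["cond1"] / (totals["cond1"] + totals["cond2"])
side_bias = totals["left"] / (totals["left"] + totals["right"])
print(f"fraction of arm time near condition 1: {preference:.3f}")
print(f"fraction of arm time in the left arm:  {side_bias:.3f}")
```

Reporting the side fraction alongside the condition fraction makes it easier to see whether an apparent song preference could instead reflect a preference for one arm of the maze; the statistical test applied to these values is left to the user.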

Results

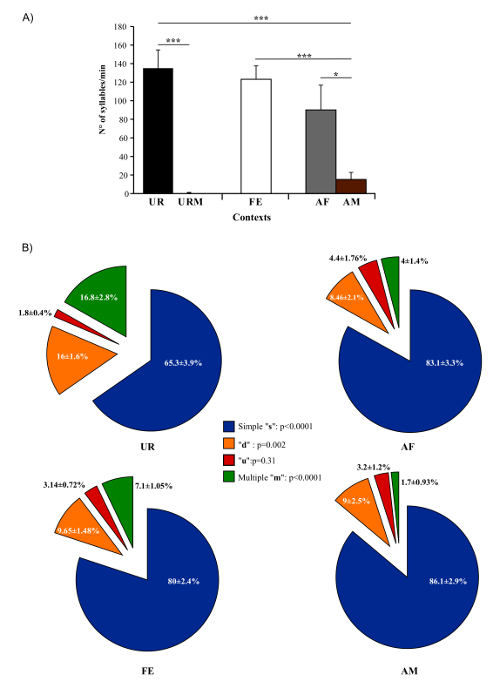

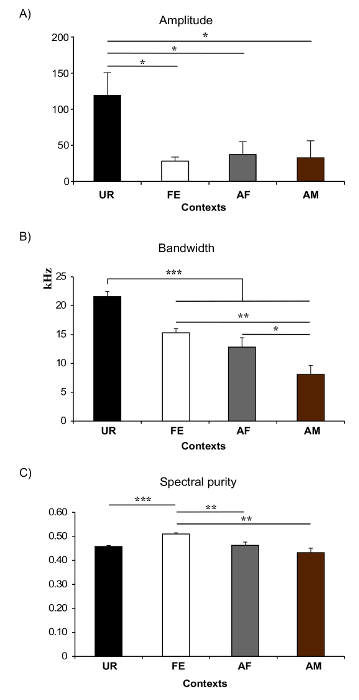

In the present protocol, changes in the vocal behavior and syntax of male B6D2F1/J mice were characterized. Overall, using this protocol, we were able to record, on average per male per 5-min session, 675 ± 98.5 classified syllables in response to female UR, 615.6 ± 72 in FE, 450 ± 134 in AF, 75.6 ± 38.9 in AM, and 0.2 ± 0.1 in male UR (n = 12 males). The rates were ~130 syllables/min for female UR, ~120 syllables/min for FE, and ~100 syllables/min for AF contexts (Figure 5A). Males produce a much greater number of syllables in response to freshly collected urine than to urine collected overnight 10, 23. Males also sing considerably less in the presence of an anesthetized male or fresh male urine (Figure 5A). Males also change their repertoire across contexts 23. For example, B6D2F1/J males significantly increase the production of multiple pitch jump "m" syllables in the female urine condition (Figure 5B). They also change the acoustic features of individual syllables across contexts. For example, B6D2F1/J males sing syllables with higher amplitude and bandwidth in the female urine context, and with higher spectral purity in the awake female context, compared to the other contexts (Figure 6) 23.

This protocol also provides a means to measure dynamic sequence features, and therefore changes in syntax. Using a method adapted from Ey et al. 22, we use the ISI to define the gaps between sequences (Figure 7A) 23, and then use the gaps to distinguish and analyze the temporal patterns of syllable sequences. We showed that longer sequence lengths are produced in the awake female context (Figure 7B) 23. This technique makes it possible to calculate the ratio of complex sequences (composed of at least 2 occurrences of the "m" syllable type) to simple sequences (composed of one or no "m" type, and thus mostly of the simpler syllable types) (Figure 7C) 23, indicating that the males produced more complex syllables in the female urine condition, and also that such syllables are distributed over more sequences.
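The sequence analysis described above can be sketched as follows. This Python example is an illustration and not the spreadsheet calculator used in the protocol: the function names, the input format (each syllable paired with the ISI that follows it, in ms), and the example values are hypothetical. It uses the 250 ms cutoff reported in the protocol and the definition of a complex sequence as one containing at least two "m" syllables.

```python
def split_sequences(syllables, cutoff_ms=250.0):
    """Split a recording into sequences at long inter-syllable gaps.

    syllables: list of (label, isi_ms) pairs, where isi_ms is the gap
    to the next syllable and None marks the last syllable.
    """
    sequences, current = [], []
    for label, isi_ms in syllables:
        current.append(label)
        if isi_ms is None or isi_ms > cutoff_ms:
            sequences.append(current)
            current = []
    if current:
        sequences.append(current)
    return sequences


def is_complex(sequence):
    """A complex sequence holds at least two 'm' syllables."""
    return sequence.count("m") >= 2


def complex_to_simple_ratio(sequences):
    n_complex = sum(is_complex(seq) for seq in sequences)
    n_simple = len(sequences) - n_complex
    return n_complex / n_simple if n_simple else float("inf")


# Made-up example: two gaps above 250 ms give three sequences.
recording = [("s", 40), ("m", 60), ("m", 400),
             ("s", 30), ("d", 900),
             ("m", 20), ("u", None)]

sequences = split_sequences(recording)
print(sequences)
print(complex_to_simple_ratio(sequences))
```

The cutoff is passed as a parameter because, as noted in the protocol, it should be determined from the ISI density plot of the data set being analyzed rather than assumed.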

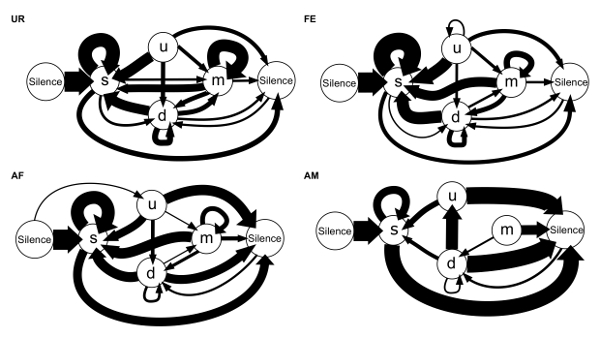

We are also able to calculate the conditional transition probabilities from one syllable type to another (24 transition types in total, including transitions to and from the "silence" state) 23. We found that the mice's choice of transition types for a given starting syllable differs across contexts, and that there is more syntactic diversity in the female urine condition (Figure 8) 23. These observations are consistent with previous reports showing that males can change the acoustic features or repertoire composition of their vocalizations in response to different stimuli and experiences 4, 5, 24.
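As a rough illustration of how such conditional probabilities can be estimated, the sketch below counts transitions between consecutive syllables and normalizes each row by the number of occurrences of the starting state. It is not the authors' syntax decoder. It assumes sequences have already been split at long gaps, and it frames every sequence with a "silence" state so that transitions from and to silence are counted. The syllable labels are hypothetical. If four syllable categories plus silence are assumed, 5 × 5 pairs minus the silence-to-silence pair gives the 24 transition types mentioned above.

```python
from collections import Counter, defaultdict
from typing import Dict, List

SILENCE = "silence"  # assumption: label used for the state between sequences


def transition_probabilities(
        sequences: List[List[str]]) -> Dict[str, Dict[str, float]]:
    """Estimate P(next state | current state) from syllable sequences."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        if not seq:
            continue
        # Frame each sequence with silence to capture its start and end.
        states = [SILENCE] + list(seq) + [SILENCE]
        for current, following in zip(states, states[1:]):
            counts[current][following] += 1

    probabilities: Dict[str, Dict[str, float]] = {}
    for current, following_counts in counts.items():
        total = sum(following_counts.values())
        probabilities[current] = {
            following: n / total for following, n in following_counts.items()
        }
    return probabilities


# Hypothetical sequences from one male in one context.
example_sequences = [["s", "m", "m"], ["s", "d"], ["m"]]
probs = transition_probabilities(example_sequences)

# Transitions with probability below 0.05 are dropped, as in Figure 8.
shown = {
    start: {nxt: p for nxt, p in row.items() if p >= 0.05}
    for start, row in probs.items()
}
```

Each row of `probs` sums to 1, so the values can be compared directly across contexts or averaged over males before drawing a diagram.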

Finally, the present protocol provides guidance for testing female preferences with playbacks. We found that B6D2F1/J females prefer more complex songs (containing 2 or more "m" syllables) over simple songs 23. Most females chose to stay more often on the side of the Y-maze that played the complex songs (Figure 4B).
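The Figure 4 legend reports a paired Student's t-test on the time each female spent in the two arms. A minimal sketch of that comparison, using only the Python standard library, is given below. The arm times are invented for illustration and are not data from the study, and the function name is hypothetical. The sketch returns the t statistic and degrees of freedom only; a p-value would have to be looked up in a t table or computed with a statistics package.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return the paired t statistic and degrees of freedom for a versus b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical time (s) spent by 10 females in each arm of the Y-maze.
time_complex_arm = [120, 95, 130, 110, 105, 140, 90, 125, 115, 100]
time_simple_arm = [80, 70, 90, 85, 60, 95, 100, 75, 88, 65]

t_stat, dof = paired_t(time_complex_arm, time_simple_arm)

# Count how many females spent more time near the complex song.
n_prefer_complex = sum(
    c > s for c, s in zip(time_complex_arm, time_simple_arm)
)
```

Pairing by female matters here because each animal hears both songs in the same session, so the test is run on within-animal differences rather than on two independent groups.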

Figure 1: Flowchart of Software Use and Analyses. Each program and its associated code is given a letter name to help explain its identity and use in the main text. The specific programs we used in our protocol are given in parentheses.

Figure 2: Set-up for Recording Male Mouse Songs. (A) Picture of a sound attenuation recording box set up to record USVs. (B) Example sonogram of a recording made with Software A (Table 1), including detailed spectral features calculated by "Mouse Song Analyzer v1.3": duration, inter-syllable interval (ISI), minimum peak frequency (Pf min), maximum peak frequency (Pf max), peak frequency at start (Pf start), peak frequency at end (Pf end), and bandwidth. (C) Sonograms of another male singing to a live female, inside the sound attenuation box and outside the box on the lab bench in the same room. Our anecdotal observations indicate that recordings of the same animal made in the box show greater volume (stronger intensity) and fewer harmonics, but no evidence of echoes in the box without sound foam.

Figure 3: Screenshot of the "Mouse Song Analyzer v1.3" whis_gui window showing the different options available for analyses. The parameters shown are those used to record male USVs in the figures and data analyses presented (except that the minimum note duration was 3 ms).

Figure 4: Female Choices between Playbacks of Complex and Simple Songs. (A) Picture of the Y-maze apparatus used, with dimension measurements. (B) Time spent by the females in each arm, which played either a complex (female urine elicited) or a simpler (awake female elicited) song from the same male. Data are presented for n = 10 B6D2F1/J female mice as mean ± SE, with individual values also shown; 9 of the 10 females showed a preference for the more complex syllable/sequence song. * p < 0.05, paired Student's t-test. Figure modified from Chabout, et al. 23 with permission.

Figure 5: Number of Syllables Emitted and Repertoire across Conditions. (A) Syllable production rate of males in different contexts. (B) Repertoire compositions of males in the presence of female urine (UR), an anesthetized female (AF), an awake female (FE), and an anesthetized male (AM). Data are presented as mean ± SEM. * p < 0.03; ** p < 0.005; *** p < 0.0001 for post-hoc paired Student's t-test after Benjamini and Hochberg correction (n = 12 males). Figure from Chabout, et al. 23 with permission.

Figure 6: Examples of Spectral Features in Different Contexts. (A) Amplitude. * p < 0.025 for post-hoc paired Student's t-test after correction. (B) Frequency range or bandwidth. * p < 0.041; ** p < 0.005; *** p < 0.0001 after correction. (C) Spectral purity of the syllables. * p < 0.025; ** p < 0.005; *** p < 0.0001 after correction. Abbreviations: female urine (UR), anesthetized female (AF), awake female (FE), and anesthetized male (AM). Figures modified from Chabout, et al. 23 with permission.

Figure 7: Sequence Measurements. (A) Use of the ISI to separate sequences. Short ISIs (SI) and medium ISIs (MI) separate syllables within a sequence, and long ISIs of more than 250 ms (LI) separate two sequences. (B) Length of the sequences, measured as the number of syllables per sequence, produced by males in different contexts. * p < 0.025; ** p < 0.005; *** p < 0.0001 after correction. (C) Ratio of complex songs to simple songs produced by males in different contexts. * p < 0.041; ** p < 0.005; *** p < 0.0001 after correction. Data are presented as mean ± SEM (n = 12 males). Figure from Chabout, et al. 23 with permission.

Figure 8: Syllable Syntax Diagrams of Sequences Based on Conditional Probabilities for Each Context. Arrow thickness is proportional to the conditional probability of occurrence of a transition type in each context, averaged over n = 12 males: P (occurrence of a transition given the starting syllable). For clarity, rare transitions with a probability below 0.05 are not shown. Figure from Chabout, et al. 23 with permission.

Discussion

This protocol provides approaches to collect, quantify, and study male mouse courtship vocalizations in the laboratory across a variety of mostly female-related stimuli. As previously presented in Chabout, et al. 23 and in the representative results, use of this method allowed us to discover context-dependent vocalizations and syntax that matter to the receiving females. Standardizing these stimuli will allow collection of a reliable number of USVs and permit detailed analyses of male courtship songs and repertoires.

When a live female is present with the male, the protocol does not allow us to clearly identify the emitter of the vocalizations. However, previous studies showed that the majority of vocalizations emitted in this context come from the male 26, 29. Most studies using a conspecific (male or female) as a stimulus for males consider the amount of female vocalization in these contexts to be negligible 4, 5, 22, 30. However, a recent paper used triangulation to localize the emitter of vocalizations in group housing conditions 31 and showed that, within a dyad, the female contributes ~10% of the USVs. In the present protocol, use of an anesthetized female allows the user to study male vocalizations in the presence of a female without her vocalizing. In contrast to the expectations from this recent study 31, we found no difference in the number of syllables emitted between the FE and AF conditions 23. It is possible that the live females did not contribute significantly to the recordings, or that males vocalized less in the presence of live females than in the presence of anesthetized females. Nevertheless, we believe that future experiments should consider using this triangulation method to assess the potential effect of the female contribution.

Other software is available that can perform some of the steps we described, although not, we believe, in a manner sufficient for the questions we asked using a combination of three programs: Software A; the Mouse Song Analyzer (Software C) running in Software B; and syntax analysis using custom spreadsheet calculations (Software D + E) together with syntax decoding in R. For example, a recent paper proposed software called VoICE that allows the user to extract acoustic variables automatically from the sonograms, or directly on units that had been manually selected by the user 32. However, its automated or semi-automated sequence analyses are not as detailed as those in our approach. Some commercial software can automatically analyze acoustic features but does not provide syllable classification; the user has to classify the different syllables afterwards. Grimsley, Gadziola, et al. 33 developed a table-based virtual mouse vocal organ program that clusters syllables based on shared acoustic features, but it does not provide automatic detection of the syllables. Their program 34 is unique in that it creates new sequences from recorded songs using Markov models, and thus has more advanced features than simple editing.

Most previous studies of communication in mice have focused on the emitter side 35, 36. Few studies have explored the receiver side 30, 37, 38. Playback and discrimination protocols provide a simple test to study the receiver side, such as the one also recently described by Asaba, Kato, et al. 39. In that study, the authors used a two-choice test box separated with acoustic foam instead of the Y-maze box described here. Both choice set-ups have advantages and disadvantages. First, the Y-maze does not isolate sound from one arm to the other, whereas the two-choice box does. However, with the Y-maze design, the animal can rapidly assess the two songs that are played simultaneously and move toward the preferred one. In either case, playback experiments in general help experimenters determine the meaning, and thus the functions, of the vocalizations for conspecific animals. In conclusion, after mastering the techniques of this protocol and its analyses, readers should be able to address many questions about the context, genetics, and neurobiology of mouse USVs.

Using B6D2F1/J mice, female-associated stimuli nearly always elicit USVs from the males tested in our laboratory. It is critical to collect enough syllables (>100 in 5 min) to obtain robust statistical analyses. For troubleshooting, if no USVs (or not enough) are recorded, check the set-up to make sure that sounds are being recorded. Inspect what is happening in the cage live during the recording by watching the real-time ultrasound display on the computer screen after the stimulus is introduced. Otherwise, try re-exposing the male to a sexually mature/receptive female overnight, and then leave the male alone for several days or up to a week before recording again. Based on anecdotal observations, we found that some males sing a lot on one day (for nearly the full 5 min), not much the next day, and then a lot again on another day. We do not know why such within-subject variability occurs, but we surmise that it probably reflects a motivational or seasonal state for the males, and the estrous state for the female urine. If no USVs are recorded, try recording the animal on several days to capture these variable effects. Unlike in songbirds, we have not observed obvious differences in the amount of singing based on the time of day. We found that males do not sing much (<100 syllables in 5 min) before they are 7 weeks old.

The detection methods presented here can extract thousands of syllables and all of their acoustic parameters in a few minutes. However, like any automatic detection method, they are very sensitive to background noise. Using the Mouse Song Analyzer detection software with noisy recordings (for example, from animals recorded with bedding) may require adjusting the detection "threshold" to allow more flexibility. However, this will also increase the number of false-positive syllables, and the automatic detection may fail. In such circumstances, manual coding can be used.

As mentioned earlier, the number, repertoires, and latency of vocalizations vary widely depending on the strain. Thus, one may have to change the parameters (recording length, stimulus, automated syllable detection, etc.) for some strains to ensure optimal recordings for statistical analyses.

Disclosures

The authors have nothing to disclose.

Acknowledgments

This work was supported by Howard Hughes Medical Institute funds to EDJ. We thank Pr. Sylvie Granon (NeuroPSI - University Paris South XI - France) for lending us the speaker hardware. We also thank the members of the Jarvis Lab for their support, discussions, corrections, and comments on this work, especially Joshua Jones Macopson for help with figures and testing. We thank Dr. Gustavo Arriaga for help with the Mouse Song Analyzer software, for updating it to v1.3, and for other aspects of this protocol. v1.0 of the software was developed by Holy and Guo, and v1.1 and v1.3 by Arriaga.

Materials

| Name | Company | Catalog Number | Comments |
|---|---|---|---|
| Sound proof beach cooler | See Gus paper for more info on specific kind | | Inside dimensions: L 27 x W 23 x H 47 cm |
| Condenser ultrasound microphone CM16/CMPA | Avisoft Bioacoustics, Berlin, Germany | #40011 | Includes extension cable |
| Ultrasound Gate 1216H sound card | Avisoft Bioacoustics, Berlin, Germany | #34175 | 12-channel sound card |
| Ultrasound Gate Player 216H | Avisoft Bioacoustics, Berlin, Germany | #70117 | 2-channel playback player |
| Ultrasonic Electrostatic Speaker ESS Polaroid | Avisoft Bioacoustics, Berlin, Germany | #60103 | 2 playback speakers |
| Test cage | Ace | #PC75J | 30 x 8 x 13 cm height; Plexiglas |
| Plexiglas separation | Home made | - | 4 x 13 cm Plexiglas with 1 cm holes |
| Video camera | Logitech | C920 | Logitech HD Pro Webcam C920 |
| Heat pad | Sunbeam | 722-810-000 | |
| Y-maze | Home made | - | Inside dimensions: L 30 x W 11 x H 29 cm |
| Tweezers | | | |
| Software | | | |
| Avisoft Recorder (Software A) | Avisoft Bioacoustics, Berlin, Germany | #10101, #10111, #10102, #10112 | http://www.avisoft.com |
| MATLAB R2013a (Software B) | MathWorks | - | MATLAB R2013a (8.1.0.604) |
| Mouse Song Analyzer v1.3 (Software C) | Custom designed by Holy, Guo, Arriaga, & Jarvis; runs with Software B | | http://jarvislab.net/wp-content/uploads/2014/12/Mouse_Song_Analyzer_v1.3-2015-03-23.zip |
| Microsoft Office Excel 2013 (Software D) | Microsoft | - | Microsoft Office Excel |
| Song Analysis Guide v1.1 (Software E) | Custom designed by Chabout & Jarvis. Excel calculator sheets; runs with Software D | | http://jarvislab.net/wp-content/uploads/2014/12/Song-analysis_Guided.xlsx |
| Syntax decoder v1.1 (Software F) | Custom designed by Sarkar, Chabout, Dunson, Jarvis - in RStudio | | https://www.rstudio.com/products/rstudio/download/ |
| Graphviz (Software G) | AT&T Research and others | | http://www.graphviz.org |
| Avisoft SASLab (Software H) | Avisoft Bioacoustics, Berlin, Germany | #10101, #10111, #10102, #10112 | http://www.avisoft.com |
| Reagents | | | |
| Xylazine (20 mg/mL) | Anased | - | |
| Ketamine HCl (100 mg/mL) | Henry Schein | #045822 | |
| Distilled water | | | |
| Eye ointment | Puralube Vet Ointment | NDC 17033-211-38 | |
| Cotton tips | | | |
| Petri dish | | | |

References

- D'Amato, F. R., Scalera, E., Sarli, C., Moles, A. Pups call, mothers rush: does maternal responsiveness affect the amount of ultrasonic vocalizations in mouse pups. Behav. Genet. 35, 103-112 (2005).

- Panksepp, J. B., et al. Affiliative behavior, ultrasonic communication and social reward are influenced by genetic variation in adolescent mice. PloS ONE. 2, e351 (2007).

- Moles, A., Costantini, F., Garbugino, L., Zanettini, C., D'Amato, F. R. Ultrasonic vocalizations emitted during dyadic interactions in female mice: a possible index of sociability. Behav. Brain Res. 182, 223-230 (2007).

- Chabout, J., et al. Adult male mice emit context-specific ultrasonic vocalizations that are modulated by prior isolation or group rearing environment. PloS ONE. 7, e29401 (2012).

- Yang, M., Loureiro, D., Kalikhman, D., Crawley, J. N. Male mice emit distinct ultrasonic vocalizations when the female leaves the social interaction arena. Front. Behav. Neurosci. 7, (2013).

- Petric, R., Kalcounis-Rueppell, M. C. Female and male adult brush mice (Peromyscus boylii) use ultrasonic vocalizations in the wild. Behaviour. 150, 1747-1766 (2013).

- Bishop, S. L., Lahvis, G. P. The autism diagnosis in translation: shared affect in children and mouse models of ASD. Autism Res. 4, 317-335 (2011).

- Lahvis, G. P., Alleva, E., Scattoni, M. L. Translating mouse vocalizations: prosody and frequency modulation. Genes Brain & Behav. 10, 4-16 (2011).

- Scattoni, M. L., Gandhy, S. U., Ricceri, L., Crawley, J. N. Unusual repertoire of vocalizations in the BTBR T+tf/J mouse model of autism. PloS ONE. 3, (2008).

- Arriaga, G., Zhou, E. P., Jarvis, E. D. Of mice, birds, and men: the mouse ultrasonic song system has some features similar to humans and song-learning birds. PloS ONE. 7, (2012).

- Holy, T. E., Guo, Z. Ultrasonic songs of male mice. PLoS Biol. 3, 2177-2186 (2005).

- Portfors, C. V. Types and functions of ultrasonic vocalizations in laboratory rats and mice. J. Amer. Assoc. Lab. Anim. Science: JAALAS. 46, 28-28 (2007).

- Wohr, M., Schwarting, R. K. Affective communication in rodents: ultrasonic vocalizations as a tool for research on emotion and motivation. Cell Tissue Res. , 81-97 (2013).

- Pasch, B., George, A. S., Campbell, P., Phelps, S. M. Androgen-dependent male vocal performance influences female preference in Neotropical singing mice. Animal Behav. 82, 177-183 (2011).

- Asaba, A., Hattori, T., Mogi, K., Kikusui, T. Sexual attractiveness of male chemicals and vocalizations in mice. Front. Neurosci. 8, (2014).

- Balaban, E. Bird song syntax: learned intraspecific variation is meaningful. Proc. Natl. Acad. Sci. USA. 85, 3657-3660 (1988).

- Byers, B. E., Kroodsma, D. E. Female mate choice and songbird song repertoires. Animal Behav. 77, 13-22 (2009).

- Hanson, J. L., Hurley, L. M. Female presence and estrous state influence mouse ultrasonic courtship vocalizations. PloS ONE. 7, (2012).

- Pomerantz, S. M., Nunez, A. A., Bean, N. J. Female behavior is affected by male ultrasonic vocalizations in house mice. Physiol. & Behav. 31, 91-96 (1983).

- White, N. R., Prasad, M., Barfield, R. J., Nyby, J. G. 40- and 70-kHz Vocalizations of Mice (Mus musculus) during Copulation. Physiol. & Behav. 63, 467-473 (1998).

- Hammerschmidt, K., Radyushkin, K., Ehrenreich, H., Fischer, J. Female mice respond to male ultrasonic 'songs' with approach behaviour. Biology Letters. 5, 589-592 (2009).

- Ey, E., et al. The Autism ProSAP1/Shank2 mouse model displays quantitative and structural abnormalities in ultrasonic vocalisations. Behav. Brain Res. 256, 677-689 (2013).

- Chabout, J., Sarkar, A., Dunson, D. B., Jarvis, E. D. Male mice song syntax depends on social contexts and influences female preferences. Front. Behav. Neurosci. 9, 76 (2015).

- Hoffmann, F., Musolf, K., Penn, D. J. Freezing urine reduces its efficacy for eliciting ultrasonic vocalizations from male mice. Physiol. & Behav. 96, 602-605 (2009).

- Roullet, F. I., Wohr, M., Crawley, J. N. Female urine-induced male mice ultrasonic vocalizations, but not scent-marking, is modulated by social experience. Behav. Brain Res. 216, 19-28 (2011).

- Barthelemy, M., Gourbal, B. E., Gabrion, C., Petit, G. Influence of the female sexual cycle on BALB/c mouse calling behaviour during mating. Die Naturwissenschaften. 91, 135-138 (2004).

- Byers, S. L., Wiles, M. V., Dunn, S. L., Taft, R. A. Mouse estrous cycle identification tool and images. PloS ONE. 7, (2012).

- Whitten, W. K. Modification of the oestrous cycle of the mouse by external stimuli associated with the male. J. Endocrinol. 13, 399-404 (1956).

- Whitney, G., Coble, J. R., Stockton, M. D., Tilson, E. F. ULTRASONIC EMISSIONS: DO THEY FACILITATE COURTSHIP OF MICE. J. Comp. Physiolog. Psych. 84, 445-452 (1973).

- Asaba, A., et al. Developmental social environment imprints female preference for male song in mice. PloS ONE. 9, 87186 (2014).

- Neunuebel, J. P., Taylor, A. L., Arthur, B. J., Egnor, S. E. Female mice ultrasonically interact with males during courtship displays. eLife. 4, (2015).

- Burkett, Z. D., Day, N. F., Penagarikano, O., Geschwind, D. H., White, S. A. VoICE: A semi-automated pipeline for standardizing vocal analysis across models. Scientific Reports. 5, 10237 (2015).

- Grimsley, J. M., Gadziola, M. A., Wenstrup, J. J. Automated classification of mouse pup isolation syllables: from cluster analysis to an Excel-based "mouse pup syllable classification calculator". Front. Behav. Neurosci. 6, (2012).

- Grimsley, J. M. S., Monaghan, J. J. M., Wenstrup, J. J. Development of social vocalizations in mice. PloS ONE. 6, (2011).

- Portfors, C. V., Perkel, D. J. The role of ultrasonic vocalizations in mouse communication. Curr. Opin. Neurobiol. 28, 115-120 (2014).

- Merten, S., Hoier, S., Pfeifle, C., Tautz, D. A role for ultrasonic vocalisation in social communication and divergence of natural populations of the house mouse (Mus musculus domesticus). PloS ONE. 9, 97244 (2014).

- Neilans, E. G., Holfoth, D. P., Radziwon, K. E., Portfors, C. V., Dent, M. L. Discrimination of ultrasonic vocalizations by CBA/CaJ mice (Mus musculus) is related to spectrotemporal dissimilarity of vocalizations. PloS ONE. 9, 85405 (2014).

- Holfoth, D. P., Neilans, E. G., Dent, M. L. Discrimination of partial from whole ultrasonic vocalizations using a go/no-go task in mice. J. Acoust. Soc. Am. 136, 3401 (2014).

- Asaba, A., Kato, M., Koshida, N., Kikusui, T. Determining Ultrasonic Vocalization Preferences in Mice using a Two-choice Playback. J Vis Exp. , (2015).

Copyright © 2025 MyJoVE Corporation. All rights reserved.