Methods Article

Integrating Visual Psychophysical Assays within a Y-Maze to Isolate the Role that Visual Features Play in Navigational Decisions

Summary

Here, we present a protocol to demonstrate a behavioral assay that quantifies how alternative visual features, such as motion cues, influence directional decisions in fish. Representative data are presented on the speed and accuracy with which Golden Shiner (Notemigonus crysoleucas) follow virtual fish movements.

Abstract

Collective animal behavior arises from individual motivations and social interactions that are critical for individual fitness. Fish have long inspired investigations into collective motion, specifically, their ability to integrate environmental and social information across ecological contexts. This demonstration illustrates techniques used for quantifying behavioral responses of fish, in this case, Golden Shiner (Notemigonus crysoleucas), to visual stimuli using computer visualization and digital image analysis. Recent advancements in computer visualization allow for empirical testing in the lab where visual features can be controlled and finely manipulated to isolate the mechanisms of social interactions. The purpose of this method is to isolate visual features that can influence the directional decisions of the individual, whether solitary or with groups. This protocol provides specifics on the physical Y-maze domain, recording equipment, settings and calibrations of the projector and animation, experimental steps and data analyses. These techniques demonstrate that computer animation can elicit biologically-meaningful responses. Moreover, the techniques are easily adaptable to test alternative hypotheses, domains, and species for a broad range of experimental applications. The use of virtual stimuli allows for the reduction and replacement of the number of live animals required, and consequently reduces laboratory overhead.

This demonstration tests the hypothesis that small relative differences in the movement speeds (2 body lengths per second) of virtual conspecifics will improve the speed and accuracy with which shiners follow the directional cues provided by the virtual silhouettes. Results show that shiners' directional decisions are significantly affected by increases in the speed of the visual cues, even in the presence of background noise (67% image coherency). In the absence of any motion cues, subjects chose their directions at random. The relationship between decision speed and cue speed was variable, and increases in cue speed had a modestly disproportionate influence on directional accuracy.

Introduction

Animals sense and interpret their habitat continuously to make informed decisions when interacting with others and navigating noisy surroundings. Individuals can enhance their situational awareness and decision making by integrating social information into their actions. Social information, however, largely stems from inference through unintended cues (e.g., sudden maneuvers to avoid a predator), which can be unreliable, rather than through direct signals that have evolved to communicate specific messages (e.g., the waggle dance in honey bees)1. Identifying how individuals rapidly assess the value of social cues, or any sensory information, can be a challenging task for investigators, particularly when individuals are traveling in groups. Vision plays an important role in governing social interactions2,3,4 and studies have inferred the interaction networks that may arise in fish schools based on each individual’s field of view5,6. Fish schools are dynamic systems, however, making it difficult to isolate individual responses to particular features, or neighbor behaviors, due to the inherent collinearities and confounding factors that arise from the interactions among group members. The purpose of this protocol is to complement current work by isolating how alternative visual features can influence the directional decisions of individuals traveling alone or within groups.

The benefit of the current protocol is to combine a manipulative experiment with computer visualization techniques to isolate the elementary visual features an individual may experience in nature. Specifically, the Y-maze (Figure 1) is used to collapse directional choice to a binary response and introduce computer animated images designed to mimic the swimming behaviors of virtual neighbors. These images are projected up from below the maze to mimic the silhouettes of conspecifics swimming beneath one or more subjects. The visual characteristics of these silhouettes, such as their morphology, speed, coherency, and swimming behavior are easily tailored to test alternative hypotheses7.

This paper demonstrates the utility of this approach by isolating how individuals of a model social fish species, the Golden Shiner (Notemigonus crysoleucas), respond to the relative speed of virtual neighbors. The focus here is on whether the directional influence of virtual neighbors changes with their speed and, if so, on quantifying the form of the observed relationship. In particular, the directional cue is generated by having a fixed proportion of the silhouettes act as leaders and move ballistically towards one arm or another. The remaining silhouettes act as distractors by moving about at random to provide background noise that can be tuned by adjusting the leader/distractor ratio. The ratio of leaders to distractors captures the coherency of the directional cues. Distractor silhouettes remain confined to the decision area (“DA”, Figure 1A) by having the silhouettes reflect off the boundary. Leader silhouettes, however, are allowed to leave the DA region and enter their designated arm before slowly fading away once they have traversed 1/3 the length of the arm. As leaders leave the DA, new leader silhouettes take their place and retrace their exact path to ensure that the leader/distractor ratio remains constant in the DA throughout the experiment.
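The leader/distractor logic described above can be sketched in a few lines. This is a minimal illustration, not the authors' Vfish.pde code: the function names, the total silhouette count, and the treatment of the DA as a circle centered on the origin are all assumptions made for the example. Coherency is taken here as the fraction of silhouettes acting as leaders.

```python
import math

def split_silhouettes(total, coherency):
    """Return (leaders, distractors) for a coherency given as the leader fraction.

    'total' is a hypothetical silhouette count, not a value from the protocol.
    """
    leaders = round(total * coherency)
    return leaders, total - leaders

def step_distractor(x, y, vx, vy, radius, dt=1.0):
    """Advance one distractor by dt and reflect it off a circular boundary.

    Assumes the decision area is a circle of the given radius centered at (0, 0).
    The velocity is mirrored about the boundary normal, so speed is preserved.
    """
    nx, ny = x + vx * dt, y + vy * dt
    dist = math.hypot(nx, ny)
    if dist > radius:
        ux, uy = nx / dist, ny / dist          # outward unit normal
        dot = vx * ux + vy * uy                # velocity component along the normal
        vx, vy = vx - 2 * dot * ux, vy - 2 * dot * uy
        nx, ny = ux * radius, uy * radius      # place the silhouette back on the boundary
    return nx, ny, vx, vy

# Example: 30 silhouettes at roughly 67% coherency
leaders, distractors = split_silhouettes(30, 2 / 3)
```

Leaders would simply keep a fixed heading toward their assigned arm, so only the distractors need the boundary check.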

The use of virtual fish allows for the control of the visual sensory information, while monitoring the directional response of the subject, which may reveal novel features of social navigation, movement, or decision making in groups. The approach used here can be applied to a broad range of questions, such as effects of sublethal stress or predation on social interactions, by manipulating the computer animation to produce behavioral patterns of varying complexity.

Protocol

All experimental protocols were approved by the Institutional Animal Care and Use Committee of the Environmental Laboratory, US Army Engineer Research and Development Center, Vicksburg, MS, USA (IACUC# 2013-3284-01).

1. Sensory maze design

- Conduct the experiment in a watertight poly(methyl methacrylate) Y-maze platform (made in-house) set atop a transparent support platform in a dedicated room. Here the platform is 1.9 cm thick and is supported by four 7.62 cm beams of extruded aluminum that is 1.3 m in width, 1.3 m in length, and 0.19 m in height.

- Construct the holding and decision areas to be identical in construction (Figure 1A). Here, the Y-maze arms are 46 cm in length, 23 cm in width, and 20 cm in depth with a central decision area approximately 46 cm in diameter.

- Adhere white project-through theater screen at the bottom of the Y-maze for projecting visual stimuli into the domain.

- Coat the sides of the Y-maze with white vinyl to limit external visual stimuli.

- Install a remotely controlled clear gate (via clear monofilament) to partition the holding area from the central decision area to release subjects into the maze after acclimation.

- Place additional blinds to prevent the fish from viewing lights, housing, and equipment, such as light-blocking blinds that reach the floor in door frames to minimize light effects and shadow movements from the external room or hallway.

2. Recording equipment

- Select an overhead camera (black and white) based on the contrast needed between the background imagery, virtual fish and subject fish.

- Install an overhead camera to record the maze from above and to record the behaviors of the fish and the visual projections.

- For this demonstration, use b/w Gigabit Ethernet (GigE) cameras connected via 9 m IP cables to a computer with a 1 Gb Ethernet card in a control room.

- Connect the camera to a computer in an adjoining room where the observer can remotely control the gate, visual stimuli program, and camera recording software.

- Ensure that camera settings are at sampling and frequency rates that prevent any flickering effects, which occur when the camera and software are out of phase with the room lights.

- Check the electrical frequency of the location; offset the camera sampling rate (frames per second, fps) to prevent flickering by multiplying or dividing the AC frequency by a whole number.

- Set the camera settings so that image clarity is optimized using the software and computer to visualize the relevant behaviors.

- For this demonstration, perform sampling at 30 fps with a spatial resolution of 1280 pixels x 1024 pixels.
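The flicker rule above (multiply or divide the AC frequency by a whole number) can be turned into a short list of candidate frame rates. The sketch below is illustrative only; the function name and the `max_fps` and `max_factor` limits are assumptions, and the camera's supported rates still need to be checked in its own software.

```python
def flicker_free_rates(ac_hz, max_fps=120.0, max_factor=4):
    """List frame rates that are whole-number multiples or divisors of the AC frequency.

    max_fps and max_factor are arbitrary illustrative limits.
    """
    ac_hz = float(ac_hz)
    rates = set()
    for n in range(1, max_factor + 1):
        rates.add(ac_hz * n)   # whole-number multiple of the mains frequency
        rates.add(ac_hz / n)   # whole-number divisor of the mains frequency
    return sorted(r for r in rates if r <= max_fps)

print(flicker_free_rates(60))  # [15.0, 20.0, 30.0, 60.0, 120.0]
```

With 60 Hz mains, the 30 fps used in this demonstration appears in the list (60 / 2); with 50 Hz mains, 25 fps would be the nearest equivalent.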

3. Calibrate lighting, projector, and camera settings

- Install four overhead track lighting systems along the walls of the experimental room.

- Install adjustable control switches for the lights to provide greater flexibility in achieving the correct room ambient light.

- Position the lights to avoid reflections on the maze (Figure 1B).

- Secure a short throw (ST) projector to the bottom edge of the maze’s support structure (Figure 1C).

- Select the projection resolution (set to 1440 pixels x 900 pixels for this demonstration).

- Adjust ambient light levels, created by the overhead lights and projector, to match the lighting conditions found in the subjects’ housing room (here set to 134 ± 5 lux during the demonstration experiment, which is equivalent to natural lighting on an overcast day).

- Lock or mark the location of the dimmer switch for ease and consistency during experimental trials.

- Use a camera viewer program to configure the camera(s) to control exposure mode, gain, and white balance control.

- In this demonstration, set the Pylon Viewer to “continuous shot”, 8000 μs exposure time, 0 gain, and 96 white balance, which provides control of the video recording.

4. Calibrate visual projection program: background

- Project a homogeneous background up onto the bottom of the maze and measure any light distortion from the projector. Here the background was created using Processing (v. 3), which is a tractable and well-documented platform to create customized visualizations for scientific projects (https://processing.org/examples/).

- Create a program that will run a Processing window to be projected onto the bottom of the maze. Customizing the background color of the window is done with the background command, which accepts an RGB color code. Several small example programs are found in the Processing tutorials (https://processing.org/tutorials/).

- Use the background color program to calibrate the projector and external lighting conditions.

- Measure any light distortion created by the projector using an image processing program to identify any deviations from the expected homogeneous background created. The following steps apply to using ImageJ (v. 1.52h; https://imagej.nih.gov/ij/).

- Capture a still frame image of the illuminated Y-maze with a uniform background color and open in ImageJ.

- Using the straight, segmented, or freehand line tool, draw a straight vertical line from the brightest location in the center of the hotspot to the top of the Y-maze (Figure 2A).

- From the analyze menu, select Plot Profile to create a graph of gray scale values versus distance in pixels.

- Save pixel data as a comma separated file (.csv file extension) consisting of an index column and a pixel value column.

- Align the projection area with the maze (Figure 2B) and model any unwanted light distortion to reduce any color distortion that may be created by the projector (Figure 2C). The following outline the steps taken in the current demonstration.

- Import the ImageJ pixel intensity data file using the appropriate delimited-file read function (e.g., read_csv from the tidyverse package to read in comma separated files).

- Calculate the variability in light intensity along the sample transect, such as with a coefficient of variation, to provide a baseline reference for the level of distortion created in the background.

- Transform the raw pixel values to reflect a relative change in intensity from brightest to dimmest, where the smallest pixel intensity will approach the desired background color value selected in the image program.

- Plot the transformed pixel intensity values, beginning at the brightest part of the anomaly; this generally yields a decaying trend in intensity values as a function of the distance from the source. Use nonlinear least squares (function nls in R, v. 3.5.1) to estimate the parameter values that best fit the data (here, a Gaussian decay function).

- Create the counter gradient using the same program adopted to generate the background image (Processing v. 3) to reduce any color distortion that may be created by the projector.
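The transect analysis in the preceding steps can be approximated as follows. This is a sketch under stated assumptions rather than the authors' R script: it assumes a Gaussian decay of the form I(x) = a·exp(−x²/(2b²)) + background, which may differ from the function used in the protocol, and it swaps nls for a simpler log-linear least-squares fit so that it runs with the Python standard library alone. All function and variable names are hypothetical.

```python
import math
import statistics

def coefficient_of_variation(values):
    """Population standard deviation divided by the mean of the transect values."""
    return statistics.pstdev(values) / statistics.mean(values)

def fit_gaussian_decay(distances, intensities, background):
    """Estimate (a, b) for I(x) = a * exp(-x**2 / (2 * b**2)) + background.

    Linearizes the model as ln(I - background) = ln(a) - x**2 / (2 * b**2)
    and fits a straight line in x**2 by ordinary least squares.
    Points at or below the background level are skipped.
    """
    xs, ys = [], []
    for x, value in zip(distances, intensities):
        excess = value - background
        if excess > 0:
            xs.append(x * x)
            ys.append(math.log(excess))
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    if slope >= 0:
        raise ValueError("intensity does not decay with distance")
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), math.sqrt(-1.0 / (2.0 * slope))

# Synthetic transect for illustration (not measured data)
distances = list(range(0, 200, 10))
intensities = [100 * math.exp(-d * d / (2 * 50 ** 2)) + 10 for d in distances]
a_hat, b_hat = fit_gaussian_decay(distances, intensities, background=10)
```

A log-linear fit weights dim pixels more heavily than nls would, so on noisy measurements the two approaches can give somewhat different estimates.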

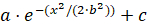

NOTE: The gradient function will generate a series of concentric circles centered on the brightest spot in the image that change in pixel intensity as a function of the distance from the center. The color of each ring is defined by subtracting the change in pixel intensity predicted by the model from the background color. Correspondingly, ring radius increases with distance from the source as well. The best fit model should reduce, if not eliminate, any pixel intensity across the gradient to provide a background uniformity.- Create a Gaussian gradient (

) using the visual stimulus program by adjusting the required parameters.

) using the visual stimulus program by adjusting the required parameters.

- Parameter a affects the brightness/darkness of the Gaussian distribution gradient. The higher the value, the darker the gradient.

- Parameter b affects the variance of the gradient. The larger the value, the broader the gradient will extend before leveling out to the desired background pixel intensity, c.

- Parameter c sets the desired background pixel intensity. The larger the value, the darker the background.

- Save the image to a folder using the saveFrame function, so that a fixed background image can be uploaded during the experiments to minimize memory load when rendering the stimuli during an experimental trial.

- Rerun the background generating program and visually inspect the results, as shown in Figure 2C. Repeat step 4.3 to quantify any observed improvements in reducing the degree of variability in light intensity across the sample transect.

- Empirically adjust the lighting levels, model parameters, or the distance covered in the transect (e.g., outer radius of the counter gradient) until the RGB values of the acclimation zone are similar to those of the decision area. Model parameters in this test were: a = 215, b = 800, and c = 4.

- Add the final filter to the experiment visual stimuli program.
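The concentric-ring counter gradient described in the NOTE above can be sketched as a list of (radius, gray value) pairs, ordered from the outermost ring inward so that smaller rings are drawn over larger ones. This is an illustration only: the Gaussian form, the function name, and the example parameter values are assumptions, and the values do not correspond to the a, b, and c reported for this demonstration.

```python
import math

def counter_gradient_rings(background, a, b, outer_radius, step):
    """Return (radius, gray) pairs from the outermost ring to the innermost.

    Each ring's gray value is the background minus the excess brightness
    predicted by an assumed model a * exp(-r**2 / (2 * b**2)), clamped to 0-255.
    """
    rings = []
    radius = outer_radius
    while radius > 0:
        predicted_excess = a * math.exp(-(radius * radius) / (2.0 * b * b))
        gray = max(0, min(255, round(background - predicted_excess)))
        rings.append((radius, gray))
        radius -= step
    return rings

# Hypothetical values chosen only to show the shape of the output
rings = counter_gradient_rings(background=128, a=40, b=100, outer_radius=300, step=50)
```

Rings near the hotspot come out darker than the background, which is what offsets the extra light from the projector at the center.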

5. Calibrate visual projection program: visual stimuli

NOTE: Rendering and animating the visual stimuli can also be done in Processing using the steps below as guides along with the platform’s tutorials. A schematic of the current program’s logic is provided in Figure 3 and additional details can be found in Lemasson et al. (2018)7. The following steps provide examples of the calibration steps taken in the current experiment.

- Open the visual projection program Vfish.pde to center the projection within the maze’s decision area (Figure 1A) and calibrate the visual projections based on the hypotheses being tested (e.g., calibrate the size and speeds of the silhouettes to match those of the test subjects). Calibrations are hand-tuned in the header of the main program (Vfish.pde) using pre-selected debugging flags. In debugging mode (DEBUG = TRUE), sequentially step through each DEBUGGING_LEVEL_# flag (numbers 0-2) to make the necessary adjustments.

- Set the DEBUGGING_LEVEL_0 flag to ‘true’ and run the program by pressing the play icon in the sketch window. Change the x and y position values (Domain parameters dx and dy, respectively) until the projection is centered.

- Set the DEBUGGING_LEVEL_1 to ‘true’ to scale the size of the fish silhouette (rendered as an ellipse). Run the program and iteratively adjust the width (eW) and length (eL) of the ellipse until it matches the average size of the test subjects. Afterwards, set the DEBUGGING_LEVEL_2 to ‘true’ to adjust the baseline speed of the silhouettes (ss).

- Set DEBUG = FALSE to exit debugging mode.

- Check that distractor silhouettes remain bounded to the Decision Area (DA, Figure 1A), that leader silhouette trajectories are properly aligned with either arm, and that the leader/distractor ratio within the DA remains constant.

- Step through the program’s GUI to ensure functionality of the options.

- Check that data are being properly written out to file.

- Ensure that the recording software can track the subject fish with visual projections in place. Steps to track fish have previously been described in Kaidanovich-Beilin et al. (2011)8, Holcombe et al. (2014)9, Way et al. (2016)10 and Zhang et al. (2018)11.
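When calibrating silhouette speed, a speed expressed in body lengths per second has to be converted into a displacement per animation frame. The conversion below is a hedged sketch: the function name, the projection scale (`px_per_mm`), and the animation frame rate are assumptions that must be measured for a given setup, and this is not necessarily how the ss parameter is defined in Vfish.pde.

```python
def silhouette_speed_px_per_frame(speed_bl_s, body_length_mm, px_per_mm, frame_rate):
    """Convert a speed in body lengths per second to pixels per animation frame.

    px_per_mm (projection scale) and frame_rate (animation frames per second)
    are setup-specific and are assumed values in this example.
    """
    return speed_bl_s * body_length_mm * px_per_mm / frame_rate

# Example: 2 BL/s for a 63.4 mm fish, assuming 1 px/mm and a 60 fps animation
step = silhouette_speed_px_per_frame(2, 63.4, px_per_mm=1.0, frame_rate=60)
```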

6. Animal preparation

- Choose the subject species based on the research question and application, including sex, age, and genotype. Assign subjects to the experimental holding tanks and record baseline biometric statistics (e.g., body length and mass).

- Set the environmental conditions in the maze to match those of the holding system. Water quality conditions for baseline experiments of behavior are often held at levels that are optimal for the species and for the experimental domain setup.

- In this demonstration, use the following conditions: 12 h light/12 h dark cycle, overhead flicker-free halogen lights set to 134 ± 5 lux, 22 ± 0.3°C, 97.4 ± 1.3% dissolved oxygen, and pH of 7.8 ± 0.1.

- Habituate the animals by transferring them to the domain for up to 30 min per day for 5 days without the computer-generated visual stimuli (e.g., fish silhouettes) before the start of the experimental trials.

- Ensure that, by that time, the subject fish have been selected, assigned, weighed, measured, and transferred to experimental tanks.

NOTE: Here, Golden Shiner standard length and wet weight were 63.4 ± 3.5 mm SL and 1.8 ± 0.3 g WW, respectively.

- Use a water-to-water transfer when moving fish between tanks and the maze to reduce stress from handling and air exposure.

- Conduct experiments during a regular, fixed light cycle reflecting the subjects’ natural biological rhythm. This allows the subjects to be fed at the end of each day’s experimental trials to limit digestion effects on behavior.

7. Experimental procedure

- Turn on room projector and LED light track systems to predetermined level of brightness (in this demonstration 134 ± 5 lux) allowing the bulbs to warm (approximately 10 minutes).

- Open the camera viewer program and load the settings for aperture, color, and recording saved from setup to ensure best quality video can be attained.

- Open Pylon Viewer and activate the camera to be used for recording.

- Select Load Features from the camera dropdown menu and navigate to the saved camera settings folder.

- Open the saved settings (here labeled as camerasettings_20181001) to ensure video quality and click on continuous shot.

- Close Pylon Viewer.

- Open the visual projection program Vfish.pde and check that the projection remains centered in the maze, that the DataOut folder is empty, and that the program is operating as expected.

- Check that the calibration ring is centered in the DA using step 5.1.1.

- Open the DataOut folder to ensure that it is empty for the day.

- Run the visual stimuli program by pressing play in the sketch window of Vfish.pde and use dummy variables to ensure program functionality.

- Enter fish id number (1-16), press Enter, and then confirm the selection by pressing Y or N for yes or no.

- Enter group size (fixed here at 1) and confirm selection.

- Enter desired silhouette speed (0-10 BL/s) and confirm selection.

- Press Enter to move past the acclimatization period and check the projection of the virtual fish in the decision area.

- Press Pause to pause the program and enter the dummy outcome choice, i.e., left (1) or right (2).

- Press Stop to terminate the program and write the data out to file.

- Check that data were properly written to file in the DataOut folder and log the file as a test run in the lab notes before fish are placed into the domain for acclimation.

- Use clock time and a stopwatch to log start and stop times of the trial in lab notebook to complement the elapsed times that can later be extracted from video playback due to the short duration of some replicate trials.

- Conduct a water change (e.g., 30%) using the holding system sump water before transferring a subject to the maze.

- Confirm that water quality is similar between the maze and holding system, and check gate functioning to ensure that it slides smoothly to just above water height.

- Using the predetermined experimental schedule, which has randomized subject-treatment exposures over the course of the experiment, enter the values selected for the current trial (stopping at the acclimatization screen, steps 7.3.3.1 - 7.3.3.3).

- Record treatment combination data into the lab notebook.

- Transfer the subject into the Y-maze holding area for a 10-minute acclimation period.

- Start the video recording, then hit the Return key in the Vfish.pde window at the end of the acclimation period. This will start the visual projections.

- When the virtual fish appear in the domain, log the clock time, and lift the holding gate (Figure 4A).

- End the trial when 50% of the subject’s body moves into a choice arm (Figure 4B) or when the designated period of time elapses (e.g., 5 min).

- Log the clock time, start and stop times from the stopwatch, and the subject’s choice (i.e., left (1), right (2), or no choice (0)).

- Stop the video recording and press Pause in the visual stimuli program, which will prompt the user for trial outcome data (the arm number selected or a 0 to indicate that no choice was made). Upon confirming the selection, the program will return to the first screen and await the values expected for the next experimental trial.

- Collect the subject and return it to the respective holding tank. Repeat Steps 7.7-7.13 for each trial.

- At the conclusion of a session (AM or PM) press Stop in the program once the last fish of the session has made a decision. Pressing Stop will write the session’s data out to file.

- Repeat the water exchange at the conclusion of the morning session to ensure water quality stability.

- After the last trial of the day, review the lab notebook and make any needed notes.

- Press Stop in the visual stimuli program to output the collected data to the DataOut folder, after the last trial of the day.

- Verify the number, name, and location of the data files saved by the visualization program.

- Log water quality, along with light levels in the maze room to compare with the morning settings. Place the aeration system and heaters into the Y-maze.

- Turn off the projector and the experimental room track lighting.

- Feed fish the predetermined daily ration.
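The procedure above relies on a predetermined schedule with randomized subject-treatment exposures. One way such a schedule might be generated is sketched below. The function name, the specific speed levels, and the random assignment of the leader arm are assumptions for illustration; only the 16 fish ids and the 0-10 BL/s range come from the protocol.

```python
import random

def build_schedule(subject_ids, speeds, seed=None):
    """Return a shuffled list of (subject_id, speed_bl_s, leader_arm) trials.

    Every subject is paired once with every speed level; the leader arm
    (1 = left, 2 = right) is drawn at random for each trial.
    """
    rng = random.Random(seed)  # a fixed seed makes the schedule reproducible
    trials = [(s, v, rng.choice((1, 2))) for s in subject_ids for v in speeds]
    rng.shuffle(trials)
    return trials

# Hypothetical speed levels within the 0-10 BL/s range accepted by the program
schedule = build_schedule(range(1, 17), [0, 2, 4, 6, 8, 10], seed=1)
```

Storing the seed alongside the lab notes lets the same schedule be regenerated later when checking the data files against the notebook.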

8. Data Analysis

- Ensure that the experimental data contain the necessary variables (e.g., date, trial, subject id, arm selected by program, visual factors tested, subject choice, start and stop times, and comments).

- Check for any recording errors (human or program induced).

- Tabulate responses and check for signs of any directional biases on the part of the subjects (e.g., binomial test on arm choice in the control condition)7.

- When the experiment is designed using repeated measurements on the same individuals, as in the case here, the use of mixed effects models is suggested.
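The directional-bias check mentioned above can be illustrated with an exact two-sided binomial test. This is a minimal standard-library sketch, not the authors' analysis code; the function name is hypothetical and the counts in the example are made up, not results from this study.

```python
from math import comb

def binomial_test_two_sided(successes, trials, p=0.5):
    """Exact two-sided binomial test p-value.

    Sums the probabilities of all outcomes that are no more likely than the
    observed count under the null probability p.
    """
    def pmf(k):
        return comb(trials, k) * p ** k * (1 - p) ** (trials - k)

    observed = pmf(successes)
    total = sum(pmf(k) for k in range(trials + 1)
                if pmf(k) <= observed * (1 + 1e-7))  # small tolerance for float ties
    return min(1.0, total)

# Made-up example: 14 of 16 control trials ending in the left arm
p_value = binomial_test_two_sided(14, 16)
```

A large p-value in the control condition (no motion cues) would be consistent with subjects having no inherent preference for either arm.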

Results

Hypothesis and design

To demonstrate the utility of this experimental system we tested the hypothesis that the accuracy with which Golden Shiner follow a visual cue will improve with the speed of that cue. Wild-type Golden Shiner were used (N = 16, body lengths, BL, and wet weights, WW, were 63.4 ± 3.5 mm and 1.8 ± 0.3 g, respectively). The coherency of the visual stimuli (leader/distractor r...

Discussion

Visual cues are known to trigger an optomotor response in fish exposed to black and white gratings13 and there is increasing theoretical and empirical evidence that neighbor speed plays an influential role in governing the dynamical interactions observed in fish schools7,14,15,16,17. Contrasting hypotheses exist to explain how individua...

Disclosures

All authors contributed to the experimental design, analyses and writing the paper. A.C.U. and C.M.W. setup and collected the data. The authors have nothing to disclose.

Acknowledgements

We thank Bryton Hixson for setup assistance. This program was supported by the Basic Research Program, Environmental Quality and Installations (EQI; Dr. Elizabeth Ferguson, Technical Director), US Army Engineer Research and Development Center.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Black and white IP camera | Noldus, Leesburg, VA, USA | | https://www.noldus.com/ |
| Extruded aluminum | 80/20 Inc., Columbia City, IN, USA | 3030-S | https://www.8020.net 3.00" X 3.00" Smooth T-Slotted Profile, Eight Open T-Slots |
| Finfish Starter with Vpak, 1.5 mm extruded pellets | Zeigler Bros. Inc., Gardners, PA, USA | | http://www.zeiglerfeed.com/ |
| Golden shiners | Saul Minnow Farm, AR, USA | | http://saulminnow.com/ |
| ImageJ (v 1.52h) freeware | National Institutes of Health (NIH), USA | | https://imagej.nih.gov/ij/ |
| LED track lighting | Lithonia Lighting, Conyers, GA, USA | BR20MW-M4 | https://lithonia.acuitybrands.com/residential-track |
| Oracal 651 white cut vinyl | 651Vinyl, Louisville, KY, USA | 651-010M-12:5ft | http://www.651vinyl.com. Can order various sizes. |
| PowerLite 570 overhead projector | Epson, Long Beach CA, USA | V11H605020 | https://epson.com/For-Work/Projectors/Classroom/PowerLite-570-XGA-3LCD-Projector/p/V11H605020 |
| Processing (v 3) freeware | Processing Foundation | | https://processing.org/ |
| R (3.5.1) freeware | The R Project for Statistical Computing | | https://www.r-project.org/ |
| Ultra-white 360 theater screen | Alternative Screen Solutions, Clinton, MI, USA | 1950 | https://www.gooscreen.com. Must call for special cut size |
| Z-Hab system | Pentair Aquatic Ecosystems, Apopka, FL, USA | | https://pentairaes.com/. Call for details and sizing. |

References

1. Dall, S. R. X., Olsson, O., McNamara, J. M., Stephens, D. W., Giraldeau, L. A. Information and its use by animals in evolutionary ecology. Trends in Ecology and Evolution. 20 (4), 187-193 (2005).

2. Pitcher, T. Sensory information and the organization of behaviour in a shoaling cyprinid fish. Animal Behaviour. 27, 126-149 (1979).

3. Partridge, B. The structure and function of fish schools. Scientific American. 246 (6), 114-123 (1982).

4. Fernández-Juricic, E., Erichsen, J. T., Kacelnik, A. Visual perception and social foraging in birds. Trends in Ecology and Evolution. 19 (1), 25-31 (2004).

5. Strandburg-Peshkin, A., et al. Visual sensory networks and effective information transfer in animal groups. Current Biology. 23 (17), R709-R711 (2013).

6. Rosenthal, S. B., Twomey, C. R., Hartnett, A. T., Wu, S. H., Couzin, I. D. Behavioral contagion in mobile animal groups. Proceedings of the National Academy of Sciences (U.S.A.). 112 (15), 4690-4695 (2015).

7. Lemasson, B. H., et al. Motion cues tune social influence in shoaling fish. Scientific Reports. 8 (1), e9785 (2018).

8. Kaidanovich-Beilin, O., Lipina, T., Vukobradovic, I., Roder, J., Woodgett, J. R. Assessment of social interaction behaviors. Journal of Visualized Experiments. (48), e2473 (2011).

9. Holcombe, A., Schalomon, M., Hamilton, T. J. A novel method of drug administration to multiple zebrafish (Danio rerio) and the quantification of withdrawal. Journal of Visualized Experiments. (93), e51851 (2014).

10. Way, G. P., Southwell, M., McRobert, S. P. Boldness, aggression, and shoaling assays for zebrafish behavioral syndromes. Journal of Visualized Experiments. (114), e54049 (2016).

11. Zhang, Q., Kobayashi, Y., Goto, H., Itohara, S. An automated T-maze based apparatus and protocol for analyzing delay- and effort-based decision making in free moving rodents. Journal of Visualized Experiments. (138), e57895 (2018).

12. Videler, J. J. Fish Swimming. (1993).

13. Orger, M. B., Smear, M. C., Anstis, S. M., Baier, H. Perception of Fourier and non-Fourier motion by larval zebrafish. Nature Neuroscience. 3 (11), 1128-1133 (2000).

14. Romey, W. L. Individual differences make a difference in the trajectories of simulated schools of fish. Ecological Modelling. 92 (1), 65-77 (1996).

15. Katz, Y., Tunstrom, K., Ioannou, C. C., Huepe, C., Couzin, I. D. Inferring the structure and dynamics of interactions in schooling fish. Proceedings of the National Academy of Sciences (U.S.A.). 108 (46), 18720-18725 (2011).

16. Herbert-Read, J. E., Buhl, J., Hu, F., Ward, A. J. W., Sumpter, D. J. T. Initiation and spread of escape waves within animal groups. Royal Society Open Science. 2 (4), 140355 (2015).

17. Lemasson, B. H., Anderson, J. J., Goodwin, R. A. Motion-guided attention promotes adaptive communications during social navigation. Proceedings of the Royal Society B: Biological Sciences. 280 (1754), e20122003 (2013).

18. Moussaïd, M., Helbing, D., Theraulaz, G. How simple rules determine pedestrian behavior and crowd disasters. Proceedings of the National Academy of Sciences (U.S.A.). 108 (17), 6884-6888 (2011).

19. Bianco, I. H., Engert, F. Visuomotor transformations underlying hunting behavior in zebrafish. Current Biology. 25 (7), 831-846 (2015).

20. Chouinard-Thuly, L., et al. Technical and conceptual considerations for using animated stimuli in studies of animal behavior. Current Zoology. 63 (1), 5-19 (2017).

21. Nakayasu, T., Yasugi, M., Shiraishi, S., Uchida, S., Watanabe, E. Three-dimensional computer graphic animations for studying social approach behaviour in medaka fish: Effects of systematic manipulation of morphological and motion cues. PLoS One. 12 (4), e0175059 (2017).

22. Stowers, J. R., et al. Virtual reality for freely moving animals. Nature Methods. 14 (10), 995-1002 (2017).

23. Warren, W. H., Kay, B., Zosh, W. D., Duchon, A. P., Sahuc, S. Optic flow is used to control human walking. Nature Neuroscience. 4 (2), 213-216 (2001).

24. Silverman, J., Suckow, M. A., Murthy, S. The IACUC Handbook. (2014).

Copyright © 2025 MyJoVE Corporation. All rights reserved