
Methods Article

A Psychophysics Paradigm for the Collection and Analysis of Similarity Judgments

Summary

The protocol presents an experimental psychophysics paradigm to obtain large quantities of similarity judgments, and an accompanying analysis workflow. The paradigm probes context effects and enables modeling of similarity data in terms of Euclidean spaces of at least five dimensions.

Abstract

Similarity judgments are commonly used to study mental representations and their neural correlates. This approach has been used to characterize perceptual spaces in many domains: colors, objects, images, words, and sounds. Ideally, one might want to compare estimates of perceived similarity between all pairs of stimuli, but this is often impractical. For example, if one asks a subject to compare the similarity of two items with the similarity of two other items, the number of comparisons grows with the fourth power of the stimulus set size. An alternative strategy is to ask a subject to rate similarities of isolated pairs, e.g., on a Likert scale. This is much more efficient (the number of ratings grows quadratically with set size rather than quartically), but these ratings tend to be unstable and have limited resolution, and the approach also assumes that there are no context effects.

Here, a novel ranking paradigm for efficient collection of similarity judgments is presented, along with an analysis pipeline (software provided) that tests whether Euclidean distance models account for the data. Typical trials consist of eight stimuli around a central reference stimulus: the subject ranks stimuli in order of their similarity to the reference. By judicious selection of combinations of stimuli used in each trial, the approach has internal controls for consistency and context effects. The approach was validated for stimuli drawn from Euclidean spaces of up to five dimensions.

The approach is illustrated with an experiment measuring similarities among 37 words. Each trial yields the results of 28 pairwise comparisons of the form, "Was A more similar to the reference than B was to the reference?" While directly comparing all pairs of pairs of stimuli would have required 221445 trials, this design enables reconstruction of the perceptual space from 5994 such comparisons obtained from 222 trials.
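The counts quoted above can be checked with a few lines of arithmetic. This is only a worked illustration; the variable names are chosen here and do not come from the accompanying software.

```python
from math import comb

n_stimuli = 37   # size of the word set in the example experiment
ring_size = 8    # stimuli surrounding the reference in each trial
n_trials = 222   # trials in one complete run of the design

# A ranking of 8 stimuli implies one comparison per unordered pair in the ring.
comparisons_per_trial = comb(ring_size, 2)

# Exhaustive alternative: compare every pair of stimuli with every other pair.
n_pairs = comb(n_stimuli, 2)
exhaustive_comparisons = comb(n_pairs, 2)

print(comparisons_per_trial)             # 28
print(n_pairs)                           # 666
print(exhaustive_comparisons)            # 221445
print(n_trials * comparisons_per_trial)  # 6216
```

The last figure (6216) counts every comparison in every trial. It exceeds the 5994 quoted above by 222, which is presumably accounted for by comparisons that recur in more than one trial.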

Introduction

Humans mentally process and represent incoming sensory information to perform a wide range of tasks, such as object recognition, navigation, making inferences about the environment, and many others. Similarity judgments are commonly used to probe these mental representations [1]. Understanding the structure of mental representations can provide insight into the organization of conceptual knowledge [2]. It is also possible to gain insight into neural computations by relating similarity judgments to brain activation patterns [3]. Additionally, similarity judgments reveal features that are salient in perception [4]. Studying how mental representations change during development can shed light on how they are learned [5]. Thus, similarity judgments provide valuable insight into information processing in the brain.

A common model of mental representations using similarities is a geometric space model [6,7,8]. Applied to sensory domains, this kind of model is often referred to as a perceptual space [9]. Points in the space represent stimuli and distances between points correspond to the perceived dissimilarity between them. From similarity judgments, one can obtain quantitative estimates of dissimilarities. These pairwise dissimilarities (or perceptual distances) can then be used to model the perceptual space via multidimensional scaling [10].

There are many methods for collecting similarity judgments, each with its advantages and disadvantages. The most straightforward way of obtaining quantitative measures of dissimilarity is to ask subjects to rate on a scale the degree of dissimilarity between each pair of stimuli. While this is relatively quick, estimates tend to be unstable across long sessions as subjects cannot go back to previous judgments, and context effects, if present, cannot be detected. (Here, a context effect is defined as a change in the judged similarity between two stimuli, based on the presence of other stimuli that are not being compared.) Alternatively, subjects can be asked to compare all pairs of stimuli to all other pairs of stimuli. While this would yield a more reliable rank ordering of dissimilarities, the number of comparisons required scales with the fourth power of the number of stimuli, making it feasible only for small stimulus sets. Quicker alternatives, like sorting into a predefined number of clusters [11] or free sorting, have their own limitations. Free sorting (into any number of piles) is intuitive, but it forces the subject to categorize the stimuli, even if the stimuli do not easily lend themselves to categorization. The more recent multi-arrangement method, inverse MDS, circumvents many of these limitations and is very efficient [12]. However, this method requires subjects to project their mental representations onto a 2D Euclidean plane and to consider similarities in a specific geometric manner, making the assumption that similarity structure can be recovered from Euclidean distances on a plane. Thus, there remains a need for an efficient method to collect large amounts of similarity judgments, without making assumptions about the geometry underlying the judgments.

Described here is a method that is reasonably efficient and avoids the above potential pitfalls. By asking subjects to rank stimuli in order of similarity to a central reference in each trial [13], relative similarity can be probed directly, without assuming anything about the geometric structure of the subjects' responses. The paradigm repeats a subset of comparisons with both identical and different contexts, allowing for direct assessment of context effects as well as the acquisition of graded responses in terms of choice probabilities. The analysis procedure decomposes these rank judgments into multiple pairwise comparisons and uses them to build and search for Euclidean models of perceptual spaces that explain the judgments. The method is suitable for describing in detail the representation of stimulus sets of moderate sizes (e.g., 19 to 49).

To demonstrate the feasibility of the approach, an experiment was conducted using a set of 37 animal names as stimuli. Data were collected over the course of 10 one-hour sessions and then analyzed separately for each subject. Analysis revealed consistency across subjects and negligible context effects. It also assessed consistency of perceived dissimilarities between stimuli with Euclidean models of their perceptual spaces. The paradigm and analysis procedures outlined in this paper are flexible and are expected to be of use to researchers interested in characterizing the geometric properties of a range of perceptual spaces.

Protocol

Prior to beginning the experiments, all subjects provide informed consent in accordance with institutional guidelines and the Declaration of Helsinki. In the case of this study, the protocol was approved by the institutional review board of Weill Cornell Medical College.

1. Installation and set-up

- Download the code from the GitHub repository, similarities (https://github.com/jvlab/similarities). In the command line, run:

git clone https://github.com/jvlab/similarities.git

- If git is not installed, download the code as a zipped folder from the repository.

NOTE: The repository contains two subdirectories: experiments, which contains two sample experiments, and analysis, which contains a set of Python scripts to analyze collected similarity data. In the experiments directory, one experiment (word_exp) uses word stimuli and the other (image_exp) displays image stimuli. Some familiarity with Python will be helpful, but is not necessary. Familiarity with the command line is assumed: multiple steps require running scripts from the command line.

- Install the following tools and set up a virtual environment.

- Python 3: See the link for instructions: https://realpython.com/installing-python/. This project requires Python version 3.8.

- PsychoPy: From the link (https://www.psychopy.org/download.html), download the latest standalone version of PsychoPy for the relevant operating system, using the blue button, under Installation. This project uses PsychoPy version 2021.2; the provided sample experiments must be run with the correct version of PsychoPy as specified below.

- conda: From the link (https://docs.conda.io/projects/conda/en/latest/user-guide/install/index.html#regular-installation), download conda, through Miniconda or Anaconda, for the relevant operating system.

- In the command line, run the following to create a virtual environment with the required Python packages:

cd ~/similarities

conda env create -f environment.yaml

- Check to see if the virtual environment has been created and activate it as follows:

conda env list # venv_sim_3.8 should be listed

conda activate venv_sim_3.8 # to enter the virtual environment

conda deactivate # to exit the virtual environment after running scripts

NOTE: Running scripts in an environment can sometimes be slow. Please allow up to a minute to see any printed output in the command line when you run a script.
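As an optional check after activating the environment, a short script can confirm that the interpreter and the libraries listed in the Materials table are visible. This is a sketch and not part of the repository; the list of import names is an assumption based on the Materials table (sklearn and yaml are the usual import names for scikit-learn and PyYAML).

```python
import sys
from importlib.util import find_spec

# Import names assumed from the Materials table (not taken from the repository).
REQUIRED_MODULES = ["numpy", "scipy", "matplotlib", "pandas", "seaborn", "sklearn", "yaml"]


def missing_modules(names):
    """Return the module names that cannot be located for import."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    # The protocol specifies Python 3.8.
    print("Python version:", ".".join(str(part) for part in sys.version_info[:3]))
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

Run it with python inside the activated venv_sim_3.8 environment; any module reported as missing suggests the environment was not created from environment.yaml as intended.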

- To ensure that downloaded code works as expected, run the provided sample experiments using the steps below.

NOTE: The experiments directory (similarities/experiments) contains sample experiments (word_exp and image_exp), making use of two kinds of stimuli: words and images.

- Open PsychoPy. Go to View, then click Coder, because PsychoPy's default builder cannot open .py files. Go to File, then click Open, and open word_exp.py (similarities/experiments/word_exp/word_exp.py).

- To load the experiment, click the green Run Experiment button. Enter initials or name and session number and click OK.

- Follow the instructions and run through a few trials to check that stimuli gray out when clicked. Press Escape when ready to exit.

NOTE: PsychoPy will open in fullscreen, first displaying instructions, and then a few trials, with placeholder text instead of stimulus words. When clicked, words gray out. When all words have been clicked, the next trial begins. At any time, PsychoPy can be terminated by pressing the Escape key. If the program terminates during steps 1.3.2 or 1.3.3, it is possible that the user's operating system requires access to the keyboard and mouse. If so, a descriptive error message will be printed in the PsychoPy Runner window, which will guide the user.

- Next, check that the image experiment runs with placeholder images. Open PsychoPy. Go to File. Click Open and choose image_exp.psyexp (similarities/experiments/image_exp/image_exp.psyexp).

- To ensure the correct version is used, click the Gear icon. From the option Use PsychoPy version select 2021.2 from the dropdown menu.

- As before, click the green Run Experiment button. Enter initials or name and session number and click OK.

NOTE: As in step 1.3.2, PsychoPy will first display instructions and then render trials after images have been loaded. Each trial will contain eight placeholder images surrounding a central image. Clicking on an image will gray it out. The program can be quit by pressing Escape.

- Navigate to the data directory in each of the experiment directories to see the output:

similarities/experiments/image_exp/data

similarities/experiments/word_exp/data

NOTE: Experimental data are written to the data directory. The responses.csv file contains trial-by-trial click responses. The log file contains all keypresses and mouse clicks. It is useful for troubleshooting, if PsychoPy quits unexpectedly.

- Optionally, to verify that the analysis scripts work as expected, reproduce some of the figures in the Representative Results section as follows.

- Make a directory for preprocessed data:

cd ~/similarities

mkdir sample-materials/subject-data/preprocessed

- Combine the raw data from all the responses.csv files into one json file. In the command line, run the following:

cd ~/similarities

conda activate venv_sim_3.8

python -m analysis.preprocess

- When prompted, enter the following values for the input parameters: 1) path to subject-data: ./sample-materials/subject-data, 2) name of experiment: sample_word, and 3) subject ID: S7. The json file will be in similarities/sample-materials/subject-data/preprocessed.

- Once data is preprocessed, follow the steps in the project README under reproducing figures. These analysis scripts will be run later to analyze data collected from the user's own experiment.

2. Data collection by setting up a custom experiment

NOTE: Procedures are outlined for both the image and word experiments up to step 3.1. Following this step, the process is the same for both experiments, so the image experiment is not explicitly mentioned.

- Select an experiment to run. Navigate to the word experiment (similarities/experiments/word_exp) or the image experiment (similarities/experiments/image_exp).

- Decide on the number of stimuli. The default size of the stimulus set is 37. To change this, open the analysis configuration file (similarities/analysis/config.yaml) in a source code editor and set the num_stimuli parameter to a value of the form mk + 1, where k and m are integers, as required by the experimental design.

NOTE: In the standard design, k ≥ 3 and m = 6. Therefore, valid values for num_stimuli include 19, 25, 31, 37, 43, and 49 (see Table 1 for possible extensions of the design).

- Finalize the experimental stimuli. If the word experiment is being run, prepare a list of words. For the image experiment, make a new directory and place all the stimulus images in it. Supported image types are png and jpeg. Do not use periods as separators in filenames (e.g., image.1.png is invalid but image1.png or image_1.png are valid).

- If running the word experiment, prepare the stimuli as follows.

- Create a new file in experiments/word_exp named stimuli.txt. This file will be read in step 3.3.

- In the file, write the words in the stimulus set as they are meant to appear in the display, with each word in a separate line. Avoid extra empty lines or extra spaces next to the words. See sample materials for reference (similarities/sample-materials/word-exp-materials/sample_word_stimuli.txt).

- If the image experiment is being run, set the path to the stimulus set as follows.

- In the experiments directory, find the configuration file called config.yaml (similarities/experiments/config.yaml).

- Open the file in a source code editor and update the value of the files variable to the path to the directory containing the stimulus set (step 2.3). This is where PsychoPy will look for the image stimuli.
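The constraints in steps 2.2 to 2.4 (a stimulus count of the form mk + 1, no empty lines or stray spaces in the stimulus list, no extra periods in image filenames) can be checked before any trials are generated. The helper functions below are hypothetical and are not part of the repository; they only restate the rules given in the steps above.

```python
def is_valid_num_stimuli(n, m=6, k_min=3):
    """True if n = m*k + 1 for an integer k >= k_min (standard design: m = 6)."""
    return n > 0 and (n - 1) % m == 0 and (n - 1) // m >= k_min


def check_stimulus_lines(lines):
    """Return a list of problems found in the lines of a stimulus list."""
    problems = []
    for number, line in enumerate(lines, start=1):
        text = line.rstrip("\n")
        if text.strip() == "":
            problems.append(f"line {number}: empty line")
        elif text != text.strip():
            problems.append(f"line {number}: leading or trailing space")
    return problems


def has_extra_periods(filename):
    """True if an image filename uses periods other than the extension separator."""
    return filename.count(".") > 1


if __name__ == "__main__":
    print([n for n in range(1, 50) if is_valid_num_stimuli(n)])
    # [19, 25, 31, 37, 43, 49]
    print(check_stimulus_lines(["monkey\n", " tiger\n", "\n"]))
    print(has_extra_periods("image.1.png"), has_extra_periods("image_1.png"))
    # True False
```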

3. Creating ranking trials

- Use a stimuli.txt file. If the word experiment is being run, the file created in step 2.4 can be used. Otherwise, use the list of filenames (for reference, see similarities/sample-materials/image-exp-materials/sample_image_stimuli.txt). Place this file in the appropriate experiment directory (word_exp or image_exp).

- Avoid extra empty lines, as well as any spaces in the names. Use camelCase or snake_case for stimulus names.

- Next, create trial configurations. Open the config.yaml file in the analysis directory and set the value of the path_to_stimulus_list parameter to the path to stimuli.txt (created in step 3.1).

- From the similarities directory, run the script by executing the following commands one after the other:

cd ~/similarities

conda activate venv_sim_3.8

python -m analysis.trial_configuration

conda deactivate # exit the virtual environment

- This creates a file called trial_conditions.csv in the similarities directory, in which each row contains the names of the stimuli appearing in a trial, along with their positions in the display. A sample trial_conditions.csv file is provided (similarities/sample-materials). For details on input parameters for the analysis scripts, refer to the project README under Usage.

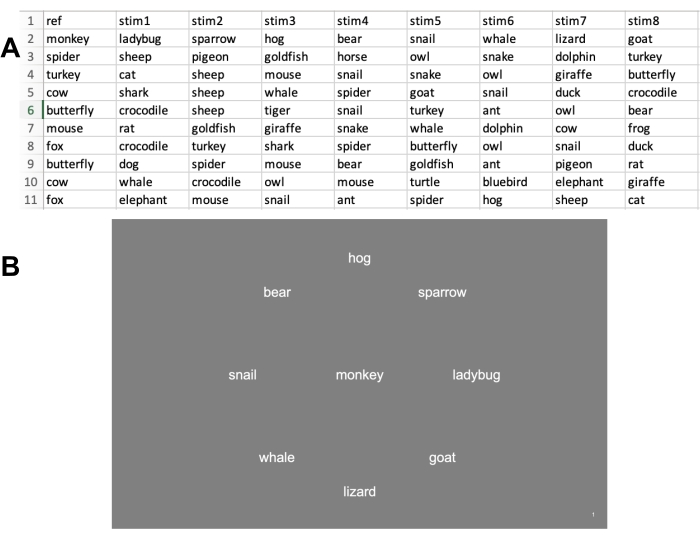

Figure 1: Representative examples of trials (step 3.3). (A) Each row contains the details of a single trial. Headers indicate the position of the stimulus around the circle. The stimulus under ref appears in the center and stim 1 to stim 8 appear around the reference. (B) The first trial (row) from A is rendered by PsychoPy to display the eight stimuli around the reference stimulus, monkey.

NOTE: At this point, a full set of 222 trials for one complete experimental run, i.e., for one full data set, has been generated. Figure 1A shows part of a conditions file generated by the above script, for the word experiment (see Representative Results).

- Next, break these 222 trials into sessions and randomize the trial order. In the typical design, each session comprises 111 trials and takes approximately 1 h to run.

- To do this, in the command line run the following:

conda activate venv_sim_3.8

cd ~/similarities

python -m analysis.randomize_session_trials

- When prompted, enter the following input parameters: path to trial_conditions.csv created in step 3.3.2; output directory; number of trials per session: 111; number of repeats: 5.

NOTE: The number of repeats can also be varied but will affect the number of sessions conducted in step 4 (see Discussion: Experimental Paradigm). If changing the default value of the number of repeats, be sure to edit the value of the num_repeats parameter in the config file (similarities/analysis/config.yaml). If needed, check the step-by-step instructions for doing the above manually in the README file under the section Create Trials.

- Rename and save each of the generated files as conditions.csv, in its own directory. See the recommended directory structure here: similarities/sample-materials/subject-data and in the project README.

NOTE: As outlined in step 4, each experiment is repeated five times in the standard design, over the course of 10 hour-long sessions, each on a separate day. Subjects should be asked to come for only one session per day to avoid fatigue. See Table 1 for the number of trials and sessions needed for stimulus sets of different sizes.
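The session arithmetic in the standard design (222 trials, five repeats, 111 trials per session, hence 10 sessions) can be sketched as follows. This is not the implementation in analysis.randomize_session_trials. It shows one plausible scheme, assumed here for illustration, in which each repeat of the full trial set is shuffled on its own and then cut into sessions.

```python
import random


def make_sessions(trials, num_repeats=5, trials_per_session=111, seed=None):
    """Shuffle each repeat of the trial list separately and split it into sessions."""
    rng = random.Random(seed)
    sessions = []
    for _ in range(num_repeats):
        order = list(trials)
        rng.shuffle(order)  # new random trial order for this repeat
        for start in range(0, len(order), trials_per_session):
            sessions.append(order[start:start + trials_per_session])
    return sessions


if __name__ == "__main__":
    trial_ids = list(range(222))  # stand-ins for rows of trial_conditions.csv
    sessions = make_sessions(trial_ids, seed=0)
    print(len(sessions))                        # 10
    print({len(session) for session in sessions})  # {111}
```

Under this scheme, every pair of consecutive sessions covers the full set of 222 trials once, so each trial is seen five times over the 10 sessions.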

4. Running the experiment and collecting similarity data

- Explain the task to the subjects and give them instructions. In each trial, subjects will view a central reference stimulus surrounded by eight stimuli and be asked to click the stimuli in the surround, in order of similarity to the central reference, i.e., they should click the most similar first and least similar last.

- Ask them to try to use a consistent strategy. Tell them that they will be shown the same configuration of stimuli multiple times over the course of the 10 sessions. If the study probes representation of semantic information, ensure that subjects are familiar with the stimuli before starting.

- Navigate to the relevant experiment directory (see step 2.1). If this is the first time running the experiment, create a directory called subject-data to store subject responses. Create two subdirectories in it: raw and preprocessed. For each subject, create a subdirectory within subject-data/raw.

- Copy the conditions.csv file prepared in step 3 for the specific session and paste it into the current directory, i.e., the directory containing the psyexp file. If there is already a file there, named conditions.csv, make sure to replace it with the one for the current session.

- Open PsychoPy and then open the psyexp or py file in the relevant experiment's directory. In PsychoPy, click on the green Play button to run the experiment. In the modal pop-up, enter the subject name or ID and session number. Click OK to start. Instructions will be displayed at the start of each session.

- Allow the subject about 1 h to complete the task. As the task is self-paced, encourage the subjects to take breaks if needed. When the subject finishes the session, PsychoPy will automatically terminate, and files will be generated in the similarities/experiments/<image or word>_exp/data directory.

- Transfer these into the subject-data/raw/<subjectID> directory (created in step 4.3). See README for the recommended directory structure.

NOTE: As mentioned, the log file is for troubleshooting. The most common cause for PsychoPy to close unexpectedly is that a subject accidentally presses Escape during a session. If this happens, responses for trials up until the last completed trial will still be written to the responses.csv file.

- If PsychoPy closes unexpectedly, reopen it and create a new conditions.csv file, with only the trials that had not been attempted. Replace the existing session's conditions file with this one and rerun the experiment. Be sure to save the generated files in the appropriate place. At the end of the session, the two responses files can be manually combined into one, though this is not necessary.

- For each of the remaining sessions, repeat steps 4.4 to 4.8.

- After all sessions are completed, combine the raw data files and reformat them into a single json file for further processing. To do this, run preprocess.py in the terminal (similarities/analysis/preprocess.py) as follows:

cd ~/similarities

conda activate venv_sim_3.8

python -m analysis.preprocess

- When prompted, enter the requested input parameters: the path to the subject-data directory, subject IDs for which to preprocess the data, and the experiment name (used to name the output file). Press Enter.

- Exit the virtual environment:

conda deactivate

NOTE: This will create a json file in the output directory that combines responses across repeats for each trial. Similarity data is read in from subject-data/raw and written to subject-data/preprocessed.
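For the recovery case described earlier in this section (PsychoPy closing partway through a session), a new conditions file containing only the unattempted trials can be produced with a few lines of Python instead of by hand. The sketch below assumes that trials were presented in the row order of conditions.csv and that the number of completed trials is known, for example from the number of rows in responses.csv. The function name and the example column names are hypothetical.

```python
import csv
import io


def remaining_trials(conditions_text, n_completed):
    """Return CSV text with the header and only the trials not yet attempted."""
    rows = list(csv.reader(io.StringIO(conditions_text)))
    header, trials = rows[0], rows[1:]
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(trials[n_completed:])  # drop the trials already completed
    return out.getvalue()


if __name__ == "__main__":
    # Shortened example with three stimuli per trial instead of eight.
    example = "ref,stim1,stim2\nmonkey,cat,dog\ntiger,cow,owl\nbear,ant,bee\n"
    print(remaining_trials(example, n_completed=2))
```

In practice, the text would be read from the session's conditions.csv and the result written back to a new conditions.csv in the experiment directory.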

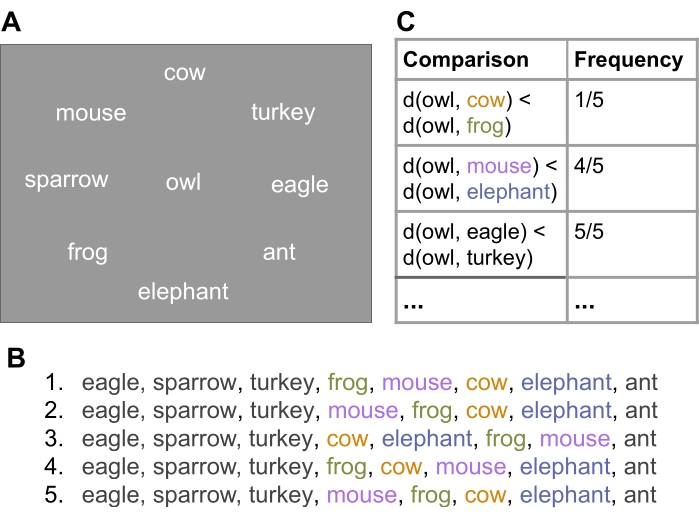

5. Analyzing similarity judgments

NOTE: Subjects are asked to click stimuli in order of similarity to the reference, thus providing a ranking in each trial. For standard experiments, repeat each trial five times, generating five rank orderings of the same eight stimuli (see Figure 2B). These rank judgments are interpreted as a series of comparisons in which a subject compares pairs of perceptual distances. It is assumed the subject is asking the following question before each click: "Is the (perceptual) distance between the reference and stimulus A smaller than the distance between the reference and stimulus B?" As shown in Figure 2C, this yields choice probabilities for multiple pairwise similarity comparisons for each trial. The analysis below uses these choice probabilities.

Figure 2: Obtaining choice probabilities from ranking judgments. (A) An illustration of a trial from the word experiment we conducted. (B) Five rank orderings were obtained for the same trial, over the course of multiple sessions. (C) Choice probabilities for the pairwise dissimilarity comparisons that the ranking judgments represent.
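The conversion from repeated rankings to choice probabilities described in the note above can be illustrated with a minimal sketch. It is not the code in analysis.describe_data; the function name and the example words are chosen here for illustration, and the example uses four stimuli instead of eight to keep the output short.

```python
from itertools import combinations


def choice_probabilities(rankings):
    """Map each pair (a, b) to the fraction of rankings in which a was clicked before b.

    Each ranking lists the same ring stimuli, most similar to the reference first.
    """
    stimuli = sorted(rankings[0])
    probabilities = {}
    for a, b in combinations(stimuli, 2):
        a_first = sum(r.index(a) < r.index(b) for r in rankings)
        probabilities[(a, b)] = a_first / len(rankings)
    return probabilities


if __name__ == "__main__":
    # Five repeats of one trial (hypothetical responses).
    rankings = [
        ["ape", "dog", "cat", "owl"],
        ["ape", "cat", "dog", "owl"],
        ["ape", "dog", "cat", "owl"],
        ["dog", "ape", "cat", "owl"],
        ["ape", "dog", "owl", "cat"],
    ]
    for pair, p in choice_probabilities(rankings).items():
        print(pair, p)
```

With eight stimuli in the ring, the same function returns 28 pairs per trial, matching the number of pairwise comparisons quoted in the Abstract.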

- Determine pairwise choice probabilities from rank order judgments.

- In similarities/analysis, run describe_data.py in the command line.

cd ~/similarities

conda activate venv_sim_3.8

python -m analysis.describe_data

- When prompted, enter the path to subject-data/preprocessed and the list of subjects for which to run the analysis.

NOTE: This will create three kinds of plots: i) the distribution of choice probabilities for a given subject's complete data set, ii) heatmaps to assess consistency across choice probabilities for pairs of subjects, and iii) a heatmap of choice probabilities for all comparisons that occur in two contexts to assess context effects. Operationally, this means comparing choice probabilities in pairs of trials that contain the same reference and a common pair of stimuli in the ring but differ in all other stimuli in the ring: the heatmap shows how the choice probability depends on this context.

- Generate low-dimensional Euclidean models of the perceptual spaces, using the choice probabilities. Run model_fitting.py in the command line as follows:

cd ~/similarities

conda activate venv_sim_3.8

python -m analysis.model_fitting

- Provide the following input parameters when prompted: path to the subject-data/preprocessed directory; the number of stimuli (37 by default); the number of iterations (the number of times the modeling analysis should be run); the output directory; and the amount of Gaussian noise (0.18 by default).

NOTE: This script takes a few hours to run. When finished, npy files containing the best-fit coordinates for 1D, 2D, 3D, 4D and 5D models describing the similarity data will be written to the output directory. A csv file containing log-likelihood values of the different models will be generated.
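To make the role of the Gaussian noise parameter concrete, the sketch below scores one set of coordinates against observed choice counts. It is not the repository's model_fitting code. The decision rule is an assumption made here for illustration: each of the two perceived distances is taken to be corrupted by independent Gaussian noise with standard deviation sigma, so their difference has standard deviation sigma times the square root of 2. The constant binomial coefficients are omitted because they do not depend on the coordinates.

```python
import math


def model_choice_probability(ref, a, b, sigma=0.18):
    """P(a is judged more similar to ref than b) under the assumed noise model."""
    difference = math.dist(ref, b) - math.dist(ref, a)
    # Normal CDF of difference / (sigma * sqrt(2)), written with erf.
    return 0.5 * (1.0 + math.erf(difference / (2.0 * sigma)))


def log_likelihood(points, comparisons, sigma=0.18, eps=1e-9):
    """Sum binomial log-likelihood terms over comparisons.

    comparisons holds tuples (ref, a, b, k, n): in k of n repeats,
    a was ranked as more similar to ref than b.
    """
    total = 0.0
    for ref, a, b, k, n in comparisons:
        p = model_choice_probability(points[ref], points[a], points[b], sigma)
        p = min(max(p, eps), 1.0 - eps)  # keep the logarithms finite
        total += k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total


if __name__ == "__main__":
    # Hypothetical 2D coordinates for three stimuli.
    points = {"monkey": (0.0, 0.0), "ape": (0.1, 0.0), "owl": (0.0, 0.6)}
    data = [("monkey", "ape", "owl", 5, 5)]
    print(model_choice_probability(points["monkey"], points["ape"], points["owl"]))
    print(log_likelihood(points, data))
```

A fitting procedure would adjust the coordinates to maximize this quantity for each candidate dimension; the optimization itself is left out of the sketch.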

- Visualize the log-likelihood of the obtained models and assess their fit. To do so, run similarities/analysis/model_fitting_figure.py in the command line:

cd ~/similarities

python -m analysis.model_fitting_figure

- When prompted, input the needed parameter: the path to the csv files containing log-likelihoods (from step 5.2).

- Analyze the figure generated, showing log-likelihoods on the y-axis and model dimensions on the x-axis. As a sanity check, two models in addition to the Euclidean models are included: a random choice model and a best possible model.

NOTE: The random choice model assumes subjects click randomly. Thus, it provides an absolute lower bound on the log-likelihood for any model that is better than random. Similarly, as an upper bound for the log-likelihood (labeled best), there is the log-likelihood of a model that uses the empirical choice probabilities as its model probabilities.

- Verify that no Euclidean model outperforms the best model, as the best model is, by design, overfit and unconstrained by geometrical considerations. Check that the likelihoods plotted are relative to the best log-likelihood.
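The two reference models in the note above can be written down directly from choice counts. This is a minimal sketch, not the repository's code: it assumes each comparison is summarized as (k, n), meaning one alternative was chosen in k of n repeats, and it omits the constant binomial coefficients, which are the same for every model.

```python
import math


def random_log_likelihood(counts):
    """Log-likelihood when every choice has probability 0.5."""
    return sum(n * math.log(0.5) for _, n in counts)


def best_log_likelihood(counts):
    """Log-likelihood when the model probabilities equal the empirical ones."""
    total = 0.0
    for k, n in counts:
        p = k / n
        # Terms with a zero count contribute nothing (0 * log 0 is taken as 0).
        if k > 0:
            total += k * math.log(p)
        if k < n:
            total += (n - k) * math.log(1.0 - p)
    return total


if __name__ == "__main__":
    counts = [(5, 5), (3, 5), (0, 5)]  # hypothetical data for three comparisons
    print(random_log_likelihood(counts))
    print(best_log_likelihood(counts))
```

Any fitted Euclidean model scored on the same counts should fall between these two values, which is the check described in step 5.3.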

- Visualize the perceptual spaces for each subject. Generate scatterplots showing the points from the 5D model projected onto the first two principal components. To do so, run similarities/analysis/perceptual_space_visualizations.py in the command line:

cd ~/similarities

python -m analysis.perceptual_space_visualizations

- When prompted, input the parameters: the subject IDs (separated by spaces) and the path to the npy file containing the 5D points obtained from step 5.2.

- After the script has finished executing, exit the virtual environment:

conda deactivate

NOTE: This script is for visualization of the similarity judgments. It will create a 2D scatter plot, by projecting the 5D points onto the first two principal components, normalized to have equal variance. Two points will be farther apart if the subject considered them less similar and vice versa.

Results

Figure 1A shows part of a conditions file generated by the script in step 3.3, for the word experiment. Each row corresponds to a trial. The stimulus in the ref column appears in the center of the display. The column names stim1 to stim8 correspond to eight positions along a circle, running counterclockwise, starting from the position to the right of the central reference. A sample trial from the word experiment is shown in Figure 1B.

Discussion

The protocol outlined here is effective for obtaining and analyzing similarity judgments for stimuli that can be presented visually. The experimental paradigm, the analysis, and possible extensions are discussed first, and later the advantages and disadvantages of the method.

Experimental paradigm: The proposed method is demonstrated using a domain of 37 animal names, and a sample dataset of perceptual judgments is provided so that one can follow the analysis in step 5 and rep...

Disclosures

The authors have nothing to disclose.

Acknowledgements

The work is supported by funding from the National Institutes of Health (NIH), grant EY07977. The authors would also like to thank Usman Ayyaz for his assistance in testing the software, and Muhammad Naeem Ayyaz for his comments on the manuscript.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Computer Workstation | N/A | N/A | OS: Windows/ MacOS 10 or higher/ Linux; 3.1 GHz Dual-Core Intel Core i5 or similar; 8GB or more memory; User permissions for writing and executing files |
| conda | | Version 4.11 | OS: Windows/ MacOS 10 or higher/ Linux |
| Microsoft Excel | Microsoft | Any | To open and shuffle rows and columns in trial conditions files. |
| PsychoPy | N/A | Version 2021.2 | Framework for running psychophysical studies |
| Python 3 | Python Software Foundation | Python Version 3.8 | Python3 and associated built-in libraries |
| Required Python Libraries | N/A | numpy version: 1.17.2 or higher; matplotlib version 3.4.3 or higher; scipy version 1.3.1 or higher; pandas version 0.25.3 or higher; seaborn version 0.9.0 or higher; scikit_learn version 0.23.1 or higher; yaml version 6.0 or higher | numpy, scipy and scikit_learn are computing modules with in-built functions for optimization and vector operations. matplotlib and seaborn are plotting libraries. pandas is used to read in and edit data from csv files. |

References

1. Edelman, S. Representation is representation of similarities. The Behavioral and Brain Sciences. 21 (4), 449-498 (1998).

2. Hahn, U., Chater, N. Concepts and similarity. Knowledge, Concepts and Categories. 43-84 (1997).

3. Kriegeskorte, N., Kievit, R. A. Representational geometry: integrating cognition, computation, and the brain. Trends in Cognitive Sciences. 17 (8), 401-412 (2013).

4. Hebart, M. N., Zheng, C. Y., Pereira, F., Baker, C. I. Revealing the multidimensional mental representations of natural objects underlying human similarity judgements. Nature Human Behaviour. 4 (11), 1173-1185 (2020).

5. Deng, W. S., Sloutsky, V. M. The development of categorization: Effects of classification and inference training on category representation. Developmental Psychology. 51 (3), 392-405 (2015).

6. Shepard, R. N. Stimulus and response generalization: tests of a model relating generalization to distance in psychological space. Journal of Experimental Psychology. 55 (6), 509-523 (1958).

7. Coombs, C. H. A method for the study of interstimulus similarity. Psychometrika. 19 (3), 183-194 (1954).

8. Gärdenfors, P. Conceptual Spaces: The Geometry of Thought. (2000).

9. Zaidi, Q., et al. Perceptual spaces: mathematical structures to neural mechanisms. The Journal of Neuroscience. 33 (45), 17597-17602 (2013).

10. Krishnaiah, P. R., Kanal, L. N. Handbook of Statistics 2. (1982).

11. Tsogo, L., Masson, M. H., Bardot, A. Multidimensional Scaling Methods for Many-Object Sets: A Review. Multivariate Behavioral Research. 35 (3), 307-319 (2000).

12. Kriegeskorte, N., Mur, M. Inverse MDS: Inferring dissimilarity structure from multiple item arrangements. Frontiers in Psychology. 3, 245 (2012).

13. Rao, V. R., Katz, R. Alternative Multidimensional Scaling Methods for Large Stimulus Sets. Journal of Marketing Research. 8 (4), 488-494 (1971).

14. Hoffman, J. I. E. Hypergeometric Distribution. Biostatistics for Medical and Biomedical Practitioners. 179-182 (2015).

15. Victor, J. D., Rizvi, S. M., Conte, M. M. Two representations of a high-dimensional perceptual space. Vision Research. 137, 1-23 (2017).

16. Knoblauch, K., Maloney, L. T. Estimating classification images with generalized linear and additive models. Journal of Vision. 8 (16), 1-19 (2008).

17. Maloney, L. T., Yang, J. N. Maximum likelihood difference scaling. Journal of Vision. 3 (8), 573-585 (2003).

18. Logvinenko, A. D., Maloney, L. T. The proximity structure of achromatic surface colors and the impossibility of asymmetric lightness matching. Perception & Psychophysics. 68 (1), 76-83 (2006).

19. Zhou, Y., Smith, B. H., Sharpee, T. O. Hyperbolic geometry of the olfactory space. Science Advances. 4 (8) (2018).

20. Goldstone, R. An efficient method for obtaining similarity data. Behavior Research Methods, Instruments, & Computers. 26 (4), 381-386 (1994).

21. Townsend, J. T. Theoretical analysis of an alphabetic confusion matrix. Perception & Psychophysics. 9, 40-50 (1971).


Copyright © 2025 MyJoVE Corporation. All rights reserved