
Method Article

The Collective Trust Game: An Online Group Adaptation of the Trust Game Based on the HoneyComb Paradigm

* These authors contributed equally


Summary

The Collective Trust Game is a computer-based, multi-agent trust game based on the HoneyComb paradigm, which enables researchers to assess the emergence of collective trust and related constructs, such as fairness, reciprocity, or forward-signaling. The game allows detailed observations of group processes through movement behavior in the game.

Abstract

The need to understand trust in groups holistically has led to a surge in new approaches to measuring collective trust. However, the available research methods often fail to capture the emergent qualities of this construct. In this paper, we present the Collective Trust Game (CTG), a computer-based, multi-agent trust game based on the HoneyComb paradigm, which enables researchers to assess the emergence of collective trust. The CTG builds on previous research on interpersonal trust and adapts the widely known Trust Game to a group setting in the HoneyComb paradigm. Participants take on the role of either an investor or a trustee; both roles can be played by groups. Initially, investors and trustees are endowed with a sum of money. Then, the investors need to decide how much, if any, of their endowment they want to send to the trustees. They communicate their tendencies as well as their final decision by moving back and forth on a playfield displaying possible investment amounts. At the end of their decision time, the amount the investors have agreed upon is multiplied and sent to the trustees. The trustees then communicate how much of that investment, if any, they want to return to the investors, again by moving on the playfield. This procedure is repeated for multiple rounds so that collective trust can emerge as a shared construct through repeated interactions. The CTG thus makes it possible to follow the emergence of collective trust in real time through the recording of movement data. The CTG is highly customizable to specific research questions and can be run as an online experiment with little, low-cost equipment. This paper shows that the CTG combines the richness of group interaction data with the high internal validity and time-effectiveness of economic games.

Introduction

The Collective Trust Game (CTG) provides the opportunity to measure collective trust online within a group of humans. It generalizes the original Trust Game by Berg, Dickhaut, and McCabe1 (BDM) to the group level and can capture and quantify collective trust in its emergent qualities2,3,4, as well as related concepts such as fairness, reciprocity, or forward-signaling.

Previous research has mostly conceptualized trust as a purely interpersonal construct, for example, between a leader and a follower5,6, excluding higher levels of analysis. Especially in organizational contexts, this might not be enough to comprehend trust holistically, so there is a great need to understand the processes by which trust builds (and diminishes) on a group level.

Recently, trust research has incorporated more multi-level thinking. Fulmer and Gelfand7 reviewed a number of studies on trust and categorized them according to the level of analysis investigated in each study. The three levels of analysis are interpersonal (dyadic), group, and organizational. Importantly, Fulmer and Gelfand7 additionally distinguish between different referents, that is, the entities at which trust is directed. When "A trusts B to X", A (the investor in economic games) is represented by the level (individual, group, organizational) and B (the trustee) by the referent (individual, group, organizational). X represents the specific domain to which trust refers and can be anything, such as a generally positive inclination, active support, reliability, or financial exchanges as in economic games1.

Here, collective trust is defined based on Rousseau and colleagues' definition of interpersonal trust8 and in line with previous studies on collective trust9,10,11,12,13,14: collective trust comprises a group's intention to accept vulnerability based upon positive expectations of the intentions or behavior of another individual, group, or organization. Collective trust is a psychological state shared among a group of humans and formed in interaction within this group. The crucial aspect of collective trust is therefore its sharedness within a group.

This means that research on collective trust needs to look beyond a simple average of individual processes and conceptualize collective trust as an emergent phenomenon2,3,4, as new developments in group science show that group processes are fluid, dynamic, and emergent2,15. We define emergence as a "process by which lower level system elements interact and through those dynamics create phenomena that manifest at a higher level of the system"16 (p. 335). We propose that this also applies to collective trust.

Research that focuses on the emergence and dynamics of group processes should use appropriate methodologies17 to capture these qualities. However, the measurement of collective trust seems to lag behind. Most studies have employed a simple averaging technique across the data of each individual in the group9,10,12,13,18. Arguably, this approach has little predictive validity2, as it disregards that groups are not simply aggregations of individuals but higher-level entities with unique processes. Some studies have tried to address these drawbacks: Adams19 employed a latent variable approach, while Kim and colleagues10 used vignettes to estimate collective trust. These approaches are promising in that they recognize collective trust as a higher-level construct. Yet, as Chetty and colleagues20 note, survey-based measures lack incentives to answer truthfully, so trust research has increasingly adopted behavioral or incentive-compatible measures21,22.

This concern is addressed by a number of studies which have adapted a behavioral method, namely the BDM1, to be played by groups23,24,25,26. In the BDM, two parties act as either investors (A) or trustees (B). In this sequential economic game, both A and B receive an initial endowment (e.g., 10 Euros). Then, A needs to decide how much, if any, of their endowment they would like to send to B (e.g., 5 Euros). This amount is then tripled by the experimenter before B can decide how much, if any, of the received money (e.g., 15 Euros) they would like to send back to A (e.g., 7.5 Euros). The amount of money A sends to B is operationalized as the level of trust of A toward B, while the amount that B sends back can be used to measure the trustworthiness of B or the degree of fairness in the dyad of A and B. A large body of research has investigated behavior in dyadic trust games27. The BDM can be played both as a so-called 'one-shot' game, in which participants play the game only once with a specific person, and in repeated rounds, in which aspects such as reciprocity28,29 and forward-signaling might play a role.
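To make the payoff arithmetic of the BDM concrete, the following minimal Python sketch computes both players' payoffs for a single round using the example values above. The function name `bdm_payoffs` and its parameters are illustrative only and are not part of any published software.

```python
def bdm_payoffs(endowment, sent, returned, multiplier=3):
    """Return the payoffs (A, B) for one round of the dyadic Trust Game.

    endowment:  amount each party receives at the start (e.g., 10 Euros)
    sent:       amount A sends to B (read as A's trust in B)
    returned:   amount B sends back to A out of what B received
    multiplier: factor applied by the experimenter (3 in the BDM)
    """
    if not 0 <= sent <= endowment:
        raise ValueError("A can send at most their endowment")
    received = sent * multiplier  # amount that actually reaches B
    if not 0 <= returned <= received:
        raise ValueError("B can return at most what B received")
    payoff_a = endowment - sent + returned
    payoff_b = endowment + received - returned
    return payoff_a, payoff_b


# Example from the text: both start with 10, A sends 5, B returns 7.5 of 15.
print(bdm_payoffs(10, 5, 7.5))  # (12.5, 17.5)
```

The joint payoff grows by (multiplier - 1) × sent, which is why sending money is efficient for the dyad while still exposing A to the risk that B returns nothing.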

In many studies that have adapted the BDM for groups23,24,25,26, either the investor, the trustee, or both roles were played by groups. However, none of these studies recorded group processes. Simply substituting individuals with groups in study designs does not meet the standards Kolbe and Boos17 or Kozlowski15 set up for investigations of emergent phenomena. To fill this gap, the CTG was developed.

The aim of developing the CTG was to create a paradigm that would combine the widely used BDM1 with an approach that captures collective trust as an emergent behavior-based construct that is shared among a group.

The CTG is based on the HoneyComb paradigm by Boos and colleagues30, which has also been published in the Journal of Visualized Experiments31 and has now been adapted for use in trust research. As described by Ritter and colleagues32, the HoneyComb paradigm is "a multi-agent computer-based virtual game platform that was designed to eliminate all sensory and communication channels except the perception of participant-assigned avatar movements on the playfield" (p. 3). The HoneyComb paradigm is especially suitable for research on group processes, as it allows researchers to record the movements of members of a real group as spatio-temporal data. It could be argued that, next to group interaction analysis17, HoneyComb is one of the few tools that allow researchers to follow group processes in great detail. In contrast to group interaction analysis, quantitative analysis of HoneyComb's spatio-temporal data is less time-intensive. Additionally, the reductionist environment, which excludes all communication between participants except movement on the playfield, allows researchers to limit confounding factors (e.g., physical appearance, voice, facial expressions) and create experiments with high internal validity. While it is difficult to identify all influential aspects of a group process in studies employing group discussion designs33, the focus on basic principles of group interaction in a movement paradigm allows researchers to quantify all aspects of the group process in this experiment. Finally, previous research has used proxemic behavior34 (i.e., reducing the space between oneself and another individual) to investigate trust35,36.

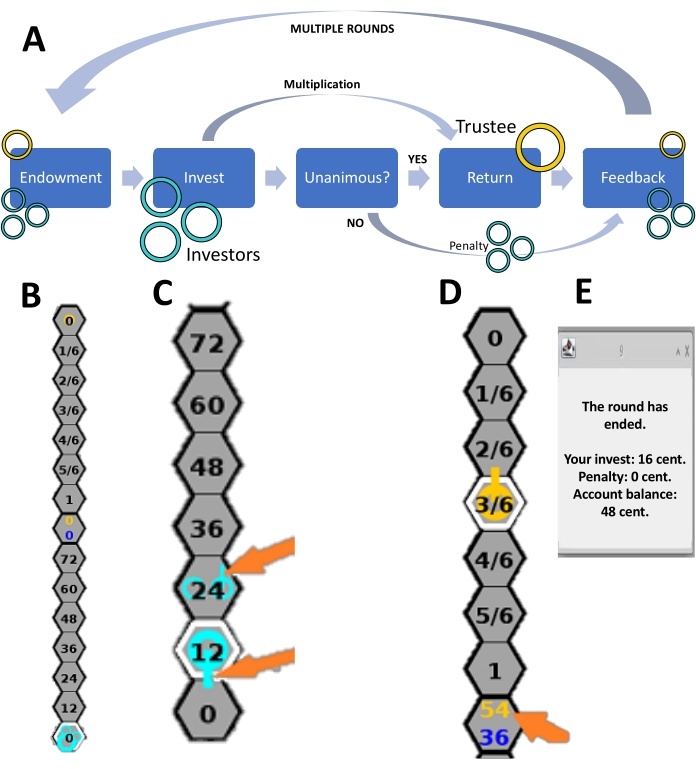

Figure 1: Schematic overview of the CTG. (A) Schematic procedure of one CTG round. (B) Initial placement of avatars at the beginning of a round. The three blue investors are standing on their initial field "0", and the yellow trustee is standing on its initial field "0". (C) Screenshot during the invest phase showing three investors (blue avatars) on the lower half of the playfield. One investor (big blue avatar) is currently standing on "12"; two investors are currently standing on "24". Two avatars have tails (indicated by orange arrows), which show the direction from which they moved to their current field (e.g., the investor represented by the big blue avatar just moved from "0" to "12"). The avatar without a tail has been standing on its field for at least 4000 ms. (D) Screenshot during the return phase showing one trustee (yellow avatar) and the upper half of the playfield. The trustee is currently standing on "3/6" and, as indicated by the tail, has recently moved there from "2/6". The blue number below (36) indicates the investment made by the investors. The yellow number, indicated by the arrow, is the current return (54), as depicted in the middle of the playfield. The return is calculated as follows: (investment (36 cents) x 3) x current return fraction (3/6) = 54 cents. (E) Pop-up window giving participants feedback on how much they have earned during the round, displayed for 15 s after the trustee time-out expires.

The main procedure of the CTG (Figure 1A) closely follows the procedure of the BDM1 to make results comparable to previous studies using this economic game. As the HoneyComb paradigm is based on the principle of movement, participants indicate the amount they would like to invest or return by moving their avatar onto the small hexagon field that indicates a certain amount of money or fraction to return (Figure 1C,D). Prior to each round, both the investors and the trustees are endowed with a certain amount of money (e.g., 72 cents), with the investors placed in the lower half of the playfield and the trustees in the upper half (Figure 1B). In the default setting, the investors move first, while the trustees remain still. The investors move across the playfield to indicate how much, if any, of their endowment they would like to send to the trustees (Figure 1C). By moving back and forth on the playfield, investors can also communicate to the other investors how much they would like to send. Depending on the configuration, the investors may need to reach a unanimous decision on how much to invest by converging on one field when the time-out is reached. Unanimous decisions were required to ensure that investors interact with each other instead of simply playing alongside one another. If the investors do not reach a joint decision, a penalty (e.g., 24 cents) is deducted from their account. This was implemented to ensure that investors would be highly motivated to reach a shared level of collective trust. Once the investors' time is up, the invested money is multiplied and sent to the trustees, who are then allowed to move while the investors remain still. The trustees indicate through movement how much they would like to return to the investors (Figure 1D). The available return options are displayed as fractions on the playfield to keep the trustees' cognitive load comparatively low. The field on which the trustees stand once their allocated time runs out indicates which fraction (e.g., 4/6) is returned to the investors. The round ends with a pop-up (Figure 1E) that summarizes for each participant how much they earned during that round and what their current account balance is.
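The round logic described above can be sketched in a few lines of Python. This is a hypothetical helper, not code from the HoneyComb program: it assumes the unanimous mode, uses the example values from the text (factor 3, penalty 24 cents), and does not model how the investment, return, or penalty are split among individual investors' accounts, since that depends on the configuration.

```python
from fractions import Fraction


def ctg_round(investor_fields, return_fraction, factor=3, penalty=24):
    """Summarize one CTG round in unanimous mode (hypothetical helper, cents).

    investor_fields: investment value of the field each investor occupies
                     when the investor time-out is reached
    return_fraction: fraction on the field the trustee(s) occupy when the
                     trustee time-out is reached, e.g. Fraction(3, 6)
    """
    if len(set(investor_fields)) != 1:
        # No unanimous decision: nothing is invested and the penalty applies.
        return {"invest": 0, "sent": 0, "returned": 0, "penalty": penalty}
    invest = investor_fields[0]
    sent = invest * factor              # multiplied investment the trustees receive
    returned = sent * return_fraction   # share sent back to the investors
    return {"invest": invest, "sent": sent, "returned": returned, "penalty": 0}


# The situation in Figure 1D: all investors on "36", trustee on "3/6".
result = ctg_round([36, 36, 36], Fraction(3, 6))
print(result["returned"])  # 54
```

Exact fractions are used here so that a return such as 3/6 yields whole cents without floating-point rounding.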

Rounds should be repeated multiple times: researchers should have participants play the CTG for at least 10 to 15 rounds in the same roles. This is necessary because collective trust is an emergent construct that needs to develop during repeated interactions within a group. Similarly, other concepts such as forward-signaling (i.e., reciprocating high returns from trustees with high investments in the next round) will only emerge in repeated interactions. It is crucial, however, that participants are unaware of the exact number of rounds, as behavior has been shown to change drastically when participants know that they are playing the last round (i.e., more unfair behavior or defections in economic games37,38).

In this way, the CTG provides information about the emergence of collective trust on multiple levels. First, the level of collective trust exhibited in the final round should be a close representation of the shared level of trust investors hold towards the trustee(s). Second, the amount invested in each round can serve as a proxy for the emergence of collective trust over repeated interactions. Third, movement data sheds light on the group process that determines how much money is invested in each round.
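As an illustration of the second level, and of forward-signaling as defined above, the following Python sketch computes a linear trend of investments across rounds and the correlation between the return fraction in round t and the investment in round t+1. It is a hypothetical analysis, not the authors' analysis (which used R; see Table of Materials), and it assumes that per-round investments and return fractions have already been extracted from the CTG output files.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equally long numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined for a constant series")
    return cov / (sx * sy)


def investment_trend(investments):
    """Least-squares slope of investment over rounds (cents per round)."""
    n = len(investments)
    if n < 2:
        raise ValueError("need at least 2 rounds")
    rounds = range(n)
    mt, mi = sum(rounds) / n, sum(investments) / n
    num = sum((t - mt) * (i - mi) for t, i in zip(rounds, investments))
    den = sum((t - mt) ** 2 for t in rounds)
    return num / den


def forward_signal(investments, return_fractions):
    """Correlate the return fraction in round t with the investment in round t+1."""
    if len(investments) != len(return_fractions) or len(investments) < 4:
        raise ValueError("need equally long series covering at least 4 rounds")
    return pearson(return_fractions[:-1], investments[1:])


# Toy series (not study data): investments rise by 12 cents per round.
print(investment_trend([0, 12, 24, 36]))  # 12.0
```

With only a handful of rounds per group, such per-group coefficients are noisy; they are meant as descriptive summaries rather than tests.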


Protocol

Data collection and data analysis in this project have been approved by the Ethics Committee of the Georg-Elias-Müller-Institute for Psychology of the University of Göttingen (proposal 289/2021); the protocol follows the guidelines on human research of the Ethics Committee of the Georg-Elias-Müller-Institute for Psychology. The CTG software can be downloaded from the OSF project (DOI 10.17605/OSF.IO/U24PX) under the link: https://s.gwdg.de/w88YNL.

1. Prepare technical setup

- Prepare online consent forms and questionnaires

- Prepare an online consent form in an online questionnaire tool.

- If applicable, prepare an online questionnaire in an online questionnaire tool.

NOTE: It is possible to include a short questionnaire within the HoneyComb program (see step 1.3.5). For longer questionnaires, use a separate online questionnaire tool instead. Examples of online questionnaire tools are given in the Table of Materials.

- Prepare remote desktop server

- Install a Linux-based operating system on a remote server. If possible, ask technical assistants about the available resources at the institution. Otherwise, follow an installation guideline39.

- Create different users on this server40.

- Create a user admin which has root permissions and is accessed solely by the technical lead in the experiment.

- Create a user experimenter which has permissions to create shared folders, import and export data, and can be accessed by all personnel collecting data (including students/research assistants, etc.).

- Create multiple users named participant-1, participant-2, etc.

NOTE: Researchers will only be able to test as many participants in one experimental session as users that are created.

- Execute the command java -version as the admin user to check that a Java runtime environment is available on the server. If it is not, install the most recent Java version before continuing and make sure all users can access it.
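The user setup in this step can be scripted. The Python sketch below only builds the shell commands and prints them (dry run by default); it assumes a Debian/Ubuntu-style system such as the Xubuntu installation listed in the Table of Materials, where members of the sudo group have root permissions. The group name hcshare and both function names are hypothetical. Passwords still have to be set separately (e.g., with passwd), and the commands must be run as root.

```python
import subprocess


def user_setup_commands(n_participants, share_group="hcshare"):
    """Build (but do not run) the commands for the server users.

    Assumes a Debian/Ubuntu-style system where the 'sudo' group grants root
    permissions. 'hcshare' is a hypothetical group for the shared folder.
    """
    participants = [f"participant-{i}" for i in range(1, n_participants + 1)]
    cmds = [["groupadd", share_group]]
    for user in ["admin", "experimenter", *participants]:
        # -m creates a home directory, -s sets the login shell,
        # -G adds the user to the shared group
        cmds.append(["useradd", "-m", "-s", "/bin/bash", "-G", share_group, user])
    cmds.append(["usermod", "-aG", "sudo", "admin"])  # root permissions for admin only
    return cmds


def run_commands(cmds, dry_run=True):
    """Print each command; execute it (requires root) only if dry_run is False."""
    for cmd in cmds:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


run_commands(user_setup_commands(4))  # prints the 8 commands for 4 participants
```

Creating one participant user per seat in advance also fixes the maximum session size, as noted above.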

- Install the program

- Download the program.

NOTE: The program can be downloaded as a zip-file HC_CTG.zip containing 1) the runnable HC.jar, 2) three configuration files (hc_server.config, hc_panel.config, and hc_client.config), and 3) two subfolders named intro and rawdata.

- Create a folder on the experimenter user and share it with the other users41. Extract the files from the compressed file HC_CTG.zip into this folder.

- For each participant user, access this shared folder and check that the user can access the files.

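The access check for each participant user can be supported by a short Python script run from that user's session. This is a sketch under stated assumptions: the expected contents are taken from the NOTE above, the function names are hypothetical, and because step 3 starts the server with HC_Gui.jar, that file should be added to the list if your copy of the program contains it.

```python
import os
import shutil
from pathlib import Path

# Contents of HC_CTG.zip as listed in the NOTE above. Step 3 starts the server
# with HC_Gui.jar; add it here if your copy of the program contains it.
EXPECTED_FILES = ["HC.jar", "hc_server.config", "hc_panel.config", "hc_client.config"]
EXPECTED_DIRS = ["intro", "rawdata"]


def access_problems(folder):
    """List expected files/subfolders that are missing or unreadable for this user."""
    folder = Path(folder)
    problems = []
    for name in EXPECTED_FILES:
        path = folder / name
        if not (path.is_file() and os.access(path, os.R_OK)):
            problems.append(name)
    for name in EXPECTED_DIRS:
        path = folder / name
        # Directories need read and execute (search) permission to be listed.
        if not (path.is_dir() and os.access(path, os.R_OK | os.X_OK)):
            problems.append(name)
    return problems


def java_available():
    """True if a `java` executable is found on this user's PATH."""
    return shutil.which("java") is not None
```

For example, `print(access_problems("/path/to/shared/folder"), java_available())` (with the placeholder path replaced) should print an empty list and True for every participant user.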

- Open the three configuration files.

- Edit hc_server.config and save the edited file.

- Configure the number of players by setting n_Pl to the desired number. For example, enter 4 after the =.

- Configure the number of rounds to play (playOrder) by repeating the game number 54a (e.g., 54a, 54a, 54a, 54a for four rounds).

NOTE: i54a stands for the instructions and should not be deleted in the configuration file.

- Configure whether a questionnaire should be shown in HoneyComb by including 200 at the end of playOrder. Delete 200 if a separate online questionnaire tool is used.

- Configure the investment scale. To configure the scale for investors (iscale), enter which values should be available as investment steps (e.g., 0, 12, 24, 36, 48, 60, 72). Use integers that are multiples of three so that payouts are also integers.

NOTE: These configured values are also displayed as possible investment steps to the investors.

- Configure the display scale for trustees (tlabel) by choosing which values should be displayed as possible returns on the playfield (e.g., 0, 1/6, 2/6, 3/6, 4/6, 5/6, 1).

NOTE: This scale does not influence the calculation of payouts.

- Configure the scale for trustees (tscala) by choosing which values should be possible as returns (e.g., 0, 0.166666, 0.3333, 0.5, 0.6666, 0.833331, 1). Use decimal values only (i.e., no fractions).

NOTE: These values are used to calculate payouts and are NOT displayed on the playfield.

- Configure the time-ins (timeInI for investors, timeInT for trustees) and time-outs (timeOutI for investors, timeout for trustees) in seconds. For example, timeInI = 0, timeOutI = 30, timeInT = 30, and timeout = 45.

- Configure the amount of money investors and trustees are endowed with in each round in cents (r52).

- Configure the factor with which the investment is multiplied before being sent to the trustee (f52).

- Configure whether the group has to reach a unanimous decision (set bUnanimous to true) or not (set bUnanimous to false).

- Configure whether the group is paid out in equal parts (set bCommon to true) or according to how much each investor has contributed to the investment (set bCommon to false).

- If bUnanimous is set to true, configure the penalty (p52), i.e., the amount of money deducted from the investors if they do not reach a unanimous decision.

- Edit hc_client.config if necessary. Make sure to set ip_nr to localhost so that the clients can connect to the server program started on the experimenter user.

- Edit hc_panel.config.

- Adjust the size of the hexagons (radius) according to the screen resolution. Test the experiment on multiple different screens to ensure that the experiment will be visible on a wide variety of screens.

- Adjust the text that is displayed on the playfield under labels (e.g., Your role is: investor, Account Balance, etc.)

- Adjust and/or translate the instructions, if necessary. To do so, edit and save the simple HTML-files (Figure 2A) in the "intro" folder within the HoneyComb program folder.

- To use the questionnaire within the HoneyComb program, adjust and/or translate the questionnaire in the file qq.txt and save the file.

- Keep this setup constant across all experiment sessions (within one experiment condition). Document all configurations.

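Before documenting a configuration, some of the hc_server.config settings described above can be checked programmatically. The Python sketch below is hypothetical: it does not read or write the actual configuration file, whose full syntax is not documented here. It assumes that playOrder entries are comma-separated with i54a first, and that time-ins and time-outs are counted from the start of a round, as the example values (timeInI = 0, timeOutI = 30, timeInT = 30, timeout = 45) suggest.

```python
from fractions import Fraction


def build_play_order(n_rounds, in_game_questionnaire=False):
    """playOrder value: instructions (i54a), n game rounds (54a), optional 200.

    Assumes i54a comes first and entries are separated by ", ".
    """
    order = ["i54a"] + ["54a"] * n_rounds
    if in_game_questionnaire:
        order.append("200")
    return ", ".join(order)


def check_settings(iscale, tlabel, factor, time_in_i, time_out_i, time_in_t, time_out_t):
    """Return a list of problems found in a planned hc_server.config setup."""
    problems = []
    # Payouts: investment x factor x return fraction should be whole cents.
    for invest in iscale:
        for label in tlabel:
            payout = invest * factor * Fraction(label)
            if payout.denominator != 1:
                problems.append(f"{invest} x {factor} x {label} = {float(payout)} cents")
    # Timing: investors move first, trustees only after the investor time-out.
    if not time_in_i < time_out_i <= time_in_t < time_out_t:
        problems.append("expected timeInI < timeOutI <= timeInT < timeout")
    return problems


sixths = ["0", "1/6", "2/6", "3/6", "4/6", "5/6", "1"]
print(build_play_order(4, in_game_questionnaire=True))
# i54a, 54a, 54a, 54a, 54a, 200
print(check_settings([0, 12, 24, 36, 48, 60, 72], sixths, 3, 0, 30, 30, 45))  # []
```

The payout check uses the exact tlabel fractions rather than the rounded tscala decimals, so it flags scales that would produce fractional cents before any rounding takes place.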

2. Participant recruitment

- Online advertisement

- Recruit participants over available channels (e.g., social media, university blog, flyer with QR-code). State important information about the experiment, such as its purpose, its duration, and the maximum payment, which depends on game behavior.

NOTE: The sample presented here was recruited via an online blog for psychology students at the University of Göttingen as well as unpaid advertisements in social media groups. An example flyer can be seen in Supplemental Figure 1.

- Make potential participants aware that participation requires a personal laptop/PC with a stable internet connection, used in a quiet, secluded area. Make participants aware that they might need to install a program to establish the Remote Desktop connection.

NOTE: Participation via mobile phones or tablets is not possible.

- Make sure the participants meet the experiment's inclusion criteria, such as language requirements or normal color vision.

- Make sure the participants have not taken part in previous experiments on the CTG.


- Book experimental sessions with the participants

- Ask the participants to book time-slots for their participation.

- Use a participant management software to send automated invitation or reminder e-mails.

- Overbook time-slots by at least one participant to ensure enough participants are present to run the experiment.

- Send participants a confirmation e-mail with the following details: a guide on computer setup, installation of the Remote Desktop Connection tool, and how to establish a connection to the remote desktop. Do NOT send any login information yet, to avoid technical issues caused by participants logging in too early.

- Send participants reminder e-mails about 24 h prior to the experiment, including the link to the video conferencing platform. Include the information about installation that was sent in the confirmation e-mail.

3. Experimental setup (before each experimental session)

- Prepare the video conferencing platform (Figure 3)

- Make sure the participants are blocked from sharing their microphone or camera. Make sure the participants cannot see each other's names.

- Share the experimenter's microphone and camera, and share the screen with minimal instructions on the video conferencing platform (Figure 3).

- Prepare the remote desktop

- User experimenter

- Start a remote desktop connection with the experimenter user. Open the shared folder and start a terminal by right-clicking in the directory and choosing Open Terminal here.

- Start the server program HC_Gui.jar by typing the command java -jar HC_Gui.jar in the terminal and pressing ENTER.

- Users participant-1, participant-2, etc.

- Establish a remote desktop connection with users participant-1, participant-2, .... Open the shared folder and start a terminal in this folder as before.

- Start the client programs for each user by typing the command java -jar HC.jar in the terminal and pressing ENTER.

- Check whether the connections are established correctly on all participant users.

NOTE: The participant users' screens should display the message Please wait. The computer is connecting to the server. It is recommended to have as many laptops present as users (Figure 4).

- User experimenter

- Check that a line appears in the server GUI, displaying the IP address of each of the participant users. When all participant users are connected, check that the server program displays the message All Clients are connected. Ready to start?. Click on OK.

- Check that the screens of the participant users display the welcome screen of the experiment (first instructions page).

NOTE: The experimenter can prepare the session up to this point.


4. Experimental procedure

- Admit participants to the video conference at the scheduled experiment time-slot. Welcome all participants using a standardized text. Explain the technical procedure to participants.

- Share the link to the online consent form. Check that all participants have given written consent.

- Guide participants to open the Remote Desktop Connection tool and send each participant their individual login data via personal chat in the video conference.

NOTE: When the participants log in to the participant users, the notebooks in the laboratory will lose their connection to the participant users. From here on, the experiment runs automatically until the participants reach the final page, which instructs them to return to the video conference.

- Have participants confirm that they have read the first instructions page by clicking on OK. Once all participants have confirmed, wait until the participants have completed the game.

NOTE: The participants can page through the instructions at their preferred pace. Once all participants have confirmed that they have read the instructions, the CTG commences automatically. The game progresses automatically through as many rounds as indicated in the hc_server.config file.

- Testing phase

- Assign participants to one of two roles: investor or trustee.

NOTE: Multiple participants can be assigned the same role.

- Have investors start on the bottom-most field (indicating an investment of 0) and trustees on the uppermost field (indicating a return of 0) (Figure 1B).

- Instruct participants to move their avatar by left-clicking an adjacent hexagon field. Explain that only adjacent fields can be chosen and fields cannot be skipped, and that their avatar will display a small tail for 4000 ms after each move, indicating the direction from which they moved to the current field (Figure 1C).

- Allow investors to move from the beginning (time-in = 0) to indicate through movement how much they would like to invest. After a certain amount of time, prohibit the movement of investors (time-out).

NOTE: The field on which the investors stand will then indicate how much is invested. In the middle of the playfield, a blue number additionally shows the invested amount. If the experiment is set up to require unanimous investments, investments are only made if all investors stand on the same field.

- Explain in the instructions that the invested amount is multiplied by a factor (e.g., three) and sent to the trustees. Restrict the trustees from moving for as long as the investors are moving by setting the trustee time-in to the investor time-out (e.g., timeInT = timeOutI = 30).

- Instruct the trustees to move to indicate the fraction they would like to return to the investors. Once the trustee time-out is reached, the field on which the trustees stand is taken to indicate the fraction that is returned to the investors. The amount returned is also indicated in the middle of the playfield by a yellow number (Figure 1D).

- Have the pop-up window display the amount of money the person has earned at the end of the round (Figure 1E).

- Repeat the game round as needed (i.e., as indicated in the hc_server.config file).

- Once all rounds are completed, ask participants to generate a personal unique code so that the in-game earnings can be connected to their name while keeping the behavioral data anonymous.

- After participants have generated the code, display a screen which instructs participants to return to the video conference and close the Remote Desktop connection.

NOTE: The experimental procedure (section 4 of this protocol, with 15 game rounds) takes 35 min.

- If technical issues or the failure of a participant require the experiment session to be aborted, do not restart the experiment with the same participants.


- Post-testing phase

- Once the game is completed, make sure that all participants have closed the Remote Desktop connection. Have the participants fill out questionnaires as seen fit for a specific research question.

- While the participants are filling out the questionnaires, close the server program on the experimenter user by clicking on Stop & Exit. This will also close the program on the participant users.

- Thank participants for their time and explain how and when their earnings will be transferred to them. Make sure all participants have left the video conference, especially if another experiment time-slot is scheduled directly afterwards.

5. Finishing the experiment

- Transfer and back up the data (e.g., in the cloud), in the form of one *.csv and one *.txt file per group and experiment time-slot, marked by a day- and time-stamp of the experiment.

- Close all Remote Desktop connections.
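The backup step can be automated with a small Python script. This sketch assumes that the program writes its *.csv and *.txt output files into the rawdata subfolder (plausible given the folder structure described in step 1.3, but not confirmed here); the time-stamped backup folder name and the function name are hypothetical conventions, and synchronizing the backup folder to cloud storage is left to whatever tool your institution provides.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_session_data(rawdata_dir, backup_root, session_time=None):
    """Copy all *.csv and *.txt files from rawdata_dir into a time-stamped folder.

    Returns the list of copied destination paths.
    """
    stamp = (session_time or datetime.now()).strftime("%Y-%m-%d_%H-%M")
    target = Path(backup_root) / stamp
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for pattern in ("*.csv", "*.txt"):
        for src in sorted(Path(rawdata_dir).glob(pattern)):
            # copy2 also preserves file modification times
            copied.append(Path(shutil.copy2(src, target)))
    return copied
```

Checking that each session yields exactly one *.csv and one *.txt file per group (the length of the returned list) is a quick way to notice an incomplete session before the participants' earnings are transferred.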


Results

This paper presents results of a pilot study conducted with the CTG with 16 participants (five men, 11 women; Age: M = 21, SD = 2.07). According to Johanson and Brooks42, this sample size is sufficient in a pilot experiment, especially when paired with a qualitative approach to reach a high information density about participants' subjective experience during the experiment. It is recommended that whenever researchers intend to adapt the CTG to their specific research idea, fo...


Discussion

The CTG provides researchers with the opportunity to adapt the classic BDM1 for groups and observe emergent processes within the groups in depth. While other work23,24,25,26 has already attempted to adapt the BDM1 to group settings, the only way to access group processes in these studies is through laborious group interaction analyses of video-taped disc...


Disclosures

The authors have nothing to disclose.

Acknowledgements

This research did not receive any external funding.


Materials

| Item | Product / Company | Version / Model | Comments |
|---|---|---|---|
| Data Analysis Software and Packages | R | version 4.2.1 (2022-06-23 ucrt) | R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing. Vienna, Austria. (2020). Available from: https://www.R-project.org/ |
| Data Analysis Software and Packages | RStudio | version 2022.2.3.492 "Prairie Trillium" | RStudio Team. RStudio: Integrated Development Environment for R. RStudio, PBC. Boston, MA. (2020). Available from: http://www.rstudio.com/ |
| Data Analysis Software and Packages | ggplot2 | version 3.3.6 | Wickham, H. ggplot2: Elegant Graphics for Data Analysis. Springer-Verlag New York. (2016). Available from: https://ggplot2.tidyverse.org |
| Data Analysis Software and Packages | cowplot | version 1.1.1 | Wilke, C.O. cowplot: Streamlined Plot Theme and Plot Annotations for “ggplot2.” (2020). Available from: https://CRAN.R-project.org/package=cowplot |
| Online Questionnaire Tool | LimeSurvey | Community Edition, version 3.28.16+220621 | Any preferred online questionnaire tool can be used; LimeSurvey or SoSciSurvey are recommended. |
| Notebooks or PCs | DELL | Latitude 7400 | Any laptop that can establish a stable Remote Desktop Connection can be used. |
| Participant Management Software | ORSEE | version 3.1.0 | ORSEE is recommended (Greiner, B. [2015]. Subject pool recruitment procedures: Organizing experiments with ORSEE. Journal of the Economic Science Association, 1, 114–125. https://doi.org/10.1007/s40881-015-0004-4), but other software may also be used. |
| Program to Open Remote Desktop Connection | Remote Desktop Connection (distributed with every Windows 10 installation) | | The following tools are recommended: Remote Desktop Connection (for Windows), Remmina (for Linux), or Microsoft Remote Desktop (for macOS). |
| Server to Run Remote Desktop Environment | VMware vSphere environment based on vSphere ESXi | version 6.5 | Ideally provided by the IT department of the university/institution. |
| Video Conference Platform | BigBlueButton | version 2.3 | It is recommended to use BigBlueButton or other free software that does not record participant data on an external server. The platform should provide the following functions: 1) the possibility to restrict participants' access to microphone and camera, 2) hiding participant names from other participants, and 3) the possibility to send private chat messages to participants. |
| Virtual Machine Running a Linux Installation | Xubuntu | version 20.04 "Focal Fossa" | Other Linux-based systems are also possible. |
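The only hardware requirement listed above is that participant laptops can establish a stable Remote Desktop Connection to the server. Before a session, the experimenter could run a quick pre-check such as the Python sketch below, which repeatedly opens a TCP connection to the server's RDP port and reports the success rate and the median connect time. It assumes the Xubuntu virtual machine serves RDP through xrdp on TCP port 3389 (the standard RDP port and xrdp's default); the function name `probe_rdp` and the host name in the usage line are hypothetical. A successful handshake only shows that the port is reachable; it does not test login, bandwidth, or session stability.

```python
import socket
import statistics
import time
from typing import List, Optional, Tuple


def probe_rdp(host: str, port: int = 3389, attempts: int = 5,
              timeout: float = 3.0) -> Tuple[float, Optional[float]]:
    """Try `attempts` TCP connections to host:port.

    Returns (success_rate, median_connect_seconds); the median is None
    if no attempt succeeded. Only the TCP handshake is checked.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    latencies: List[float] = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            # Resolves the host and completes the TCP handshake, then closes.
            with socket.create_connection((host, port), timeout=timeout):
                latencies.append(time.perf_counter() - start)
        except OSError:  # refused, unreachable, DNS failure, or timeout
            continue
    success_rate = len(latencies) / attempts
    median = statistics.median(latencies) if latencies else None
    return success_rate, median
```

Usage, with a placeholder host: `rate, median_s = probe_rdp("ctg-server.example.org")`. A success rate below 1.0, or connect times that vary widely across runs, suggests checking the participant's network before admitting them to the session.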

References

- Berg, J., Dickhaut, J., McCabe, K. Trust, reciprocity, and social history. Games and Economic Behavior. 10 (1), 122-142 (1995).

- Costa, A. C., Fulmer, C. A., Anderson, N. R. Trust in work teams: An integrative review, multilevel model, and future directions. Journal of Organizational Behavior. 39 (2), 169-184 (2018).

- Kiffin-Petersen, S. Trust: A neglected variable in team effectiveness research. Journal of the Australian and New Zealand Academy of Management. 10 (1), 38-53 (2004).

- Grossman, R., Feitosa, J. Team trust over time: Modeling reciprocal and contextual influences in action teams. Human Resource Management Review. 28 (4), 395-410 (2018).

- Schoorman, F. D., Mayer, R. C., Davis, J. H. An integrative model of organizational trust: Past, present, and future. Academy of Management Review. 32 (2), 344-354 (2007).

- Shamir, B., Lapidot, Y. Trust in organizational superiors: Systemic and collective considerations. Organization Studies. 24 (3), 463-491 (2003).

- Fulmer, C. A., Gelfand, M. J. At what level (and in whom) we trust: Trust across multiple organizational levels. Journal of Management. 38 (4), 1167-1230 (2012).

- Rousseau, D. M., Sitkin, S. B., Burt, R. S., Camerer, C. Not so different after all: A cross-discipline view of trust. Academy of Management Review. 23 (3), 393-404 (1998).

- Dirks, K. T. Trust in leadership and team performance: Evidence from NCAA basketball. Journal of Applied Psychology. 85 (6), 1004-1012 (2000).

- Kim, P. H., Cooper, C. D., Dirks, K. T., Ferrin, D. L. Repairing trust with individuals vs. groups. Organizational Behavior and Human Decision Processes. 120 (1), 1-14 (2013).

- Forsyth, P. B., Barnes, L. L. B., Adams, C. M. Trust-effectiveness patterns in schools. Journal of Educational Administration. 44 (2), 122-141 (2006).

- Gray, J. Investigating the role of collective trust, collective efficacy, and enabling school structures on overall school effectiveness. Education Leadership Review. 17 (1), 114-128 (2016).

- Kramer, R. M. Collective trust within organizations: Conceptual foundations and empirical insights. Corporate Reputation Review. 13 (2), 82-97 (2010).

- Kramer, R. M. The sinister attribution error: Paranoid cognition and collective distrust in organizations. Motivation and Emotion. 18 (2), 199-230 (1994).

- Kozlowski, S. W. J. Advancing research on team process dynamics: Theoretical, methodological, and measurement considerations. Organizational Psychology Review. 5 (4), 270-299 (2015).

- Kozlowski, S. W. J., Chao, G. T. The dynamics of emergence: Cognition and cohesion in work teams. Managerial and Decision Economics. 33 (5-6), 335-354 (2012).

- Kolbe, M., Boos, M. Laborious but elaborate: The benefits of really studying team dynamics. Frontiers in Psychology. 10, 1478 (2019).

- McEvily, B. J., Weber, R. A., Bicchieri, C., Ho, V. Can groups be trusted? An experimental study of collective trust. Handbook of Trust Research. 52-67 (2002).

- Adams, C. M. Collective trust: A social indicator of instructional capacity. Journal of Educational Administration. 51 (3), 363-382 (2013).

- Chetty, R., Hofmeyr, A., Kincaid, H., Monroe, B. The trust game does not (only) measure trust: The risk-trust confound revisited. Journal of Behavioral and Experimental Economics. 90, 101520 (2021).

- Harrison, G. W. Hypothetical bias over uncertain outcomes. Using Experimental Methods in Environmental and Resource Economics. 41-69 (2006).

- Harrison, G. W. Real choices and hypothetical choices. Handbook of Choice Modelling. Edward Elgar Publishing. 236-254 (2014).

- Holm, H. J., Nystedt, P. Collective trust behavior. The Scandinavian Journal of Economics. 112 (1), 25-53 (2010).

- Kugler, T., Kausel, E. E., Kocher, M. G. Are groups more rational than individuals? A review of interactive decision making in groups. WIREs Cognitive Science. 3 (4), 471-482 (2012).

- Cox, J. C. Trust, reciprocity, and other-regarding preferences: Groups vs. individuals and males vs. females. Experimental Business Research. Zwick, R., Rapoport, A. Springer. Boston, MA. 331-350 (2002).

- Song, F. Intergroup trust and reciprocity in strategic interactions: Effects of group decision-making mechanisms. Organizational Behavior and Human Decision Processes. 108 (1), 164-173 (2009).

- Johnson, N. D., Mislin, A. A. Trust games: A meta-analysis. Journal of Economic Psychology. 32 (5), 865-889 (2011).

- Rosanas, J. M., Velilla, M. Loyalty and trust as the ethical bases of organizations. Journal of Business Ethics. 44, 49-59 (2003).

- Dunn, J. R., Schweitzer, M. E. Feeling and believing: The influence of emotion on trust. Journal of Personality and Social Psychology. 88 (5), 736-748 (2005).

- Boos, M., Pritz, J., Lange, S., Belz, M. Leadership in moving human groups. PLOS Computational Biology. 10 (4), 1003541 (2014).

- Boos, M., Pritz, J., Belz, M. The HoneyComb paradigm for research on collective human behavior. Journal of Visualized Experiments. (143), e58719 (2019).

- Ritter, M., Wang, M., Pritz, J., Menssen, O., Boos, M. How collective reward structure impedes group decision making: An experimental study using the HoneyComb paradigm. PLOS One. 16 (11), 0259963 (2021).

- Kocher, M., Sutter, M. Individual versus group behavior and the role of the decision making process in gift-exchange experiments. Empirica. 34 (1), 63-88 (2007).

- Ickinger, W. J. A behavioral game methodology for the study of proxemic behavior. Doctoral Dissertation (1985).

- Deligianis, C., Stanton, C. J., McGarty, C., Stevens, C. J. The impact of intergroup bias on trust and approach behaviour towards a humanoid robot. Journal of Human-Robot Interaction. 6 (3), 4-20 (2017).

- Haring, K. S., Matsumoto, Y., Watanabe, K. How do people perceive and trust a lifelike robot. Proceedings of the World Congress on Engineering and Computer Science. 1, 425-430 (2013).

- Gintis, H. Behavioral game theory and contemporary economic theory. Analyse & Kritik. 27 (1), 48-72 (2005).

- Weimann, J. Individual behaviour in a free riding experiment. Journal of Public Economics. 54 (2), 185-200 (1994).

- How to install Xrdp server (remote desktop) on Ubuntu 20.04. Linuxize. Available from: https://linuxize.com/post/how-to-install-xrdp-on-ubuntu-20-04/ (2020).

- How to create users in Linux (useradd Command). Linuxize. Available from: https://linuxize.com/post/how-to-create-users-in-linux-using-the-useradd-command/ (2018).

- How to create a shared folder between two local user in Linux. GeeksforGeeks. Available from: https://www.geeksforgeeks.org/how-to-create-a-shared-folder-between-two-local-user-in-linux/ (2019).

- Johanson, G. A., Brooks, G. P. Initial scale development: Sample size for pilot studies. Educational and Psychological Measurement. 70 (3), 394-400 (2010).

- Glaeser, E. L., Laibson, D. I., Scheinkman, J. A., Soutter, C. L. Measuring trust. The Quarterly Journal of Economics. 115 (3), 811-846 (2000).

- Mayring, P. Qualitative Content Analysis: Theoretical Background and Procedures. Approaches to Qualitative Research in Mathematics Education: Examples of Methodology and Advances in Mathematics Education. Bikner-Ahsbahs, A., Knipping, C., Presmeg, N. Springer. Dordrecht. 365-380 (2015).

- Chandler, J., Paolacci, G., Peer, E., Mueller, P., Ratliff, K. A. Using nonnaive participants can reduce effect sizes. Psychological Science. 26 (7), 1131-1139 (2015).

- Belz, M., Pyritz, L. W., Boos, M. Spontaneous flocking in human groups. Behavioural Processes. 92, 6-14 (2013).

- Boos, M., Franiel, X., Belz, M. Competition in human groups – Impact on group cohesion, perceived stress and outcome satisfaction. Behavioural Processes. 120, 64-68 (2015).



Copyright © 2025 MyJoVE Corporation. All rights reserved