Method Article

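Generating Warfighter Avatars from Weapon Training Scene Images for Blast Exposure Simulation

Summary

We developed a computational framework that automatically reconstructs virtual scenes composed of 3D service member avatars from image and video data. The virtual scenes help estimate blast overpressure exposure during weapons training, and the tool makes it easier to represent human posture realistically in blast exposure simulations across a range of scenarios.

Abstract

Military personnel involved in weapons training are repeatedly exposed to low-level blasts. A common way to estimate blast loading is with wearable blast gauges. However, wearable sensor data cannot accurately estimate blast loading on the head or other organs unless the service member's body posture is known. An image/video-augmented complementary experimental-computational platform was developed to support safer weapons training. This study describes a protocol for automatically generating weapon training scenes from video data for blast exposure simulations. The blast scene extracted from the video data at the instant of weapon firing comprises the service member body avatars, the weapon, the ground, and other structures. The computational protocol uses these data to reconstruct the positions and postures of the service members. Image or video data of the service members' body silhouettes are used to generate an anatomical skeleton and key anthropometric data. These data are used to generate 3D body surface avatars that are segmented into individual body parts and geometrically transformed to match the extracted service member postures. The final virtual weapon training scene is used for 3D computational simulations of weapon blast wave loading on the service members. The weapon training scene generator has been used to construct 3D anatomical avatars of individual service member bodies from images and videos in a variety of orientations and postures. Results are presented for training scenes generated from image data of a shoulder-mounted assault weapon system and a mortar weapon system. The Blast Overpressure (BOP) Tool uses the virtual weapon training scene to run 3D simulations of blast wave loading on the service member avatar bodies. This paper presents 3D computational simulations of blast wave propagation from weapon firing and the corresponding blast loading on service members during training.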

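Introduction

During military training, service members and instructors are frequently exposed to low-level blasts from heavy and light weapons. Recent studies indicate that blast exposure can lead to reduced neurocognitive performance1,2 and changes in blood biomarkers3,4,5,6. Repeated low-level blast exposure makes it harder to maintain optimal performance and minimize injury risk7,8. The conventional approach of using wearable pressure sensors has drawbacks, particularly in accurately determining blast pressure at the head9. The known adverse effects of low-level blast exposure on human performance (e.g., during training and in operational roles) compound this problem. Congressional mandates (Sections 734 and 717) set out requirements for monitoring blast exposure in training and combat and for including it in service members' medical records10.

Wearable sensors can be used to monitor blast overpressure during these combat training operations. However, because blast wave interaction with the human body is complex, the sensor readings are affected by variables such as body posture, orientation, and distance from the blast source9. The following factors affect the pressure distribution and the sensor measurements9:

Distance from the blast source: Pressure intensity varies with distance as the blast wave disperses and attenuates. Sensors closer to the blast record higher pressures, which affects the accuracy and consistency of the data.

Body posture: Different postures expose different body surfaces to the blast and change the pressure distribution. For example, pressure readings differ between standing and crouching9,11.

Orientation: The angle of the body relative to the blast source affects how the pressure wave interacts with the body, leading to discrepancies in the measurements9. Physics-based numerical simulations account for these variables systematically, providing a more accurate assessment and a more controlled and comprehensive analysis than wearable sensors, which are inherently affected by these factors.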
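
As a rough illustration of two of the factors listed above, the following minimal Python sketch computes the distance from a blast source to a body-worn sensor and the angle between the sensor's outward normal and the direction to the source. The function name, the coordinate convention, and the idea of describing a sensor by a single normal vector are assumptions made for this example only; they are not taken from the BOP Tool.

```python
import math

def distance_and_incidence(source, sensor_pos, sensor_normal):
    """Return (distance, incidence angle in degrees) for one sensor.

    source, sensor_pos: (x, y, z) positions in the same length unit.
    sensor_normal: outward-facing normal of the sensor (any length).
    An angle of 0 means the sensor faces the blast source directly.
    """
    # Vector pointing from the sensor toward the blast source.
    to_source = [s - p for s, p in zip(source, sensor_pos)]
    dist = math.sqrt(sum(c * c for c in to_source))
    normal_len = math.sqrt(sum(c * c for c in sensor_normal))
    if dist == 0.0 or normal_len == 0.0:
        raise ValueError("source and sensor must differ; normal must be non-zero")
    cos_angle = sum(a * b for a, b in zip(to_source, sensor_normal)) / (dist * normal_len)
    # Guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return dist, math.degrees(math.acos(cos_angle))
```

The same sensor at the same distance gives a different incidence angle when the wearer turns, which is one way to see why posture and orientation have to be known before a gauge reading can be interpreted.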

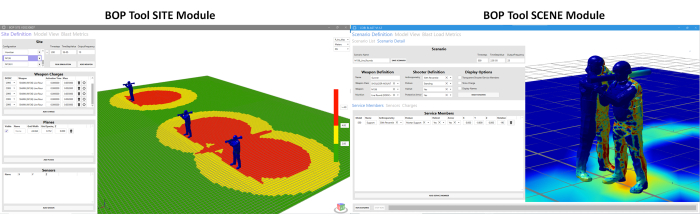

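To address these challenges, there has been a concerted effort to develop more advanced tools. In this direction, the Blast Overpressure (BOP) Tool was developed to estimate overpressure exposure for different service member postures and positions around weapon systems. The BOP Tool11 has two distinct modules: (a) the BOP Tool SCENE module and (b) the BOP Tool SITE module. These modules are used to estimate blast overpressure during weapon firing12. The BOP SCENE module was developed to estimate the blast overpressure experienced by individual service members or instructors taking part in a training scenario, whereas the BOP SITE module reconstructs a bird's-eye view of the training course and depicts the blast overpressure zones generated by multiple firing stations. Figure 1 shows a snapshot of both modules.

Currently, the BOP Tool modules comprise weapon blast overpressure characteristics (equivalent blast source terms) for four Department of Defense (DoD)-defined Tier-1 weapon systems: the M107 .50 caliber Special Application Sniper Rifle (SASR), the M136 shoulder-mounted assault weapon, the M120 indirect-fire mortar, and breaching charges. The term weapon blast kernel refers to the equivalent blast source term developed to reproduce the same blast field around a weapon system as the actual weapon. A detailed description of the computational framework used to develop the BOP Tool is available in reference 11. Overpressure simulations are run using the CoBi-Blast solver engine, a multiscale multiphysics tool for simulating blast overpressure. The engine's blast modeling capabilities have been validated against experimental data12. The BOP Tool is currently being integrated into the Range Managers Toolkit (RMTK) for use at various weapons training ranges. RMTK is a multi-service suite of desktop tools designed to meet the needs of Army, Marine Corps, Air Force, and Navy range managers by automating range operations, safety, and modernization processes.

Figure 1: Graphical user interfaces (GUIs) of the BOP Tool SCENE module and the BOP Tool SITE module. The BOP SCENE module is designed to estimate blast overpressure on service member and instructor body models, whereas the BOP SITE module is intended to estimate overpressure contours on a plane representing the training field. The user can choose the height at which the plane is placed relative to the ground.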

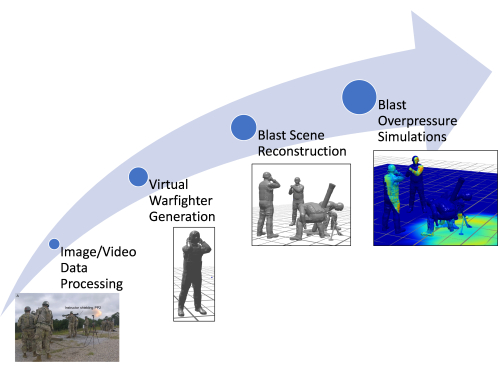

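One limitation of the existing BOP Tool SCENE module is that it relies on manually estimated data, such as anthropometry, posture, and position, to build the virtual service member body models. Manually generating a virtual service member in the appropriate posture is labor-intensive and time-consuming11,12. The legacy BOP Tool (the conventional approach) builds weapon training scenes from a database of preconfigured postures, guided by image data when available. Moreover, because the posture is approximated manually by visual assessment, the correct posture may not be captured for complex posture configurations. As a result, this approach introduces inaccuracies in the estimated overpressure exposure of individual service members, because a change in posture can change the overpressure exposure of more vulnerable regions.

This paper presents improvements to the existing computational framework that allow service member models to be generated quickly and automatically using existing state-of-the-art pose estimation tools. It focuses on the enhancement of the BOP Tool, specifically the development of a new, fast computational pipeline for reconstructing blast scenes from video and image data. The improved tool can also reconstruct detailed body models of service members and instructors at the instant the weapon is fired, using video data to create avatars that are more personalized than those of the conventional approach and that accurately reflect the service members' postures. This work streamlines blast scene generation and allows blast scenes for additional weapon systems to be included more quickly, substantially reducing the time and effort needed to create weapon training scenes. Figure 2 shows a schematic of the enhanced computational framework described in this paper.

Figure 2: Schematic of the overall process flow of the computational framework. The steps include image/video data processing, virtual warfighter generation, blast scene reconstruction, and blast overpressure simulation.

This paper presents the automated approach implemented in the BOP Tool, which represents a substantial improvement in the computational tools available for estimating overpressure exposure during training and operations. The tool stands out by enabling immersive blast overpressure simulations through rapid generation of personalized avatars and training scenarios. This is a significant departure from the conventional reliance on population-averaged human body models and provides a more accurate, individualized approach.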

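Computational tools used in the automation process

Automating virtual service member model generation is a multistep process that uses advanced computational tools to convert raw image or video data into a detailed 3D representation. The whole process is automated but can be adapted to accept known measurements entered manually when needed.

3D pose estimation tools: At the core of the automation pipeline are 3D pose estimation tools. These tools analyze image data to identify the position and orientation of each joint in the service member's body, effectively creating a digital skeleton. The pipeline currently supports Mediapipe and MMPose, both of which provide Python APIs. However, the system is designed with flexibility in mind and can incorporate other tools, such as depth cameras, provided they can output the required 3D joint and bone data.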
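
To make the idea of a digital skeleton concrete, the sketch below turns a list of 3D joint positions into named bone lengths. It assumes the input follows MediaPipe Pose's 33-landmark ordering (for example, index 11 is the left shoulder and index 23 the left hip) and that each landmark has already been converted to an `(x, y, z)` tuple in metres. The bone names and the function are illustrative and are not part of the published pipeline.

```python
import math

# Subset of the MediaPipe Pose landmark indices (33-landmark topology).
LANDMARK = {
    "left_shoulder": 11, "right_shoulder": 12,
    "left_elbow": 13, "right_elbow": 14,
    "left_wrist": 15, "right_wrist": 16,
    "left_hip": 23, "right_hip": 24,
    "left_knee": 25, "right_knee": 26,
    "left_ankle": 27, "right_ankle": 28,
}

# Hypothetical bone names, each defined by its two end joints.
BONES = {
    "left_upper_arm": ("left_shoulder", "left_elbow"),
    "right_upper_arm": ("right_shoulder", "right_elbow"),
    "left_forearm": ("left_elbow", "left_wrist"),
    "right_forearm": ("right_elbow", "right_wrist"),
    "left_thigh": ("left_hip", "left_knee"),
    "right_thigh": ("right_hip", "right_knee"),
    "left_shank": ("left_knee", "left_ankle"),
    "right_shank": ("right_knee", "right_ankle"),
}

def bone_lengths(landmarks):
    """Map bone name -> Euclidean length from a 33-entry landmark list."""
    if len(landmarks) < 33:
        raise ValueError("expected 33 landmarks in MediaPipe Pose order")
    return {
        name: math.dist(landmarks[LANDMARK[a]], landmarks[LANDMARK[b]])
        for name, (a, b) in BONES.items()
    }
```

Any other estimator could feed the same function as long as its joints are first mapped to these indices, which is the sense in which the pipeline is described as tool-flexible.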

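Anthropometric Model Generator (AMG): Once the 3D pose has been estimated, the AMG comes into play. This tool uses the pose data to create a 3D skin surface model that matches the service member's specific body dimensions. The AMG tool accepts anthropometric measurements entered automatically or manually and links them to key components within the tool, which morph the 3D body mesh accordingly.

OpenSim skeleton modeling: In the next step, a skeletal model is fitted to the 3D pose data in the open-source OpenSim platform13. Pose estimation tools do not enforce consistent bone lengths within the skeleton, which can produce unrealistic asymmetry in the body. Using the anatomically correct OpenSim skeleton yields a more realistic bone structure. Markers are placed on the OpenSim skeleton to correspond to the joint centers identified by the pose estimation tool. The skeletal model is then rigged to the 3D skin mesh using standard animation techniques.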
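
The asymmetry problem mentioned above can be illustrated with a much simpler stand-in than OpenSim. The sketch below averages each left/right pair of estimated bone lengths so that both sides agree. This is not the authors' method, which fits an anatomically correct OpenSim skeleton; it only shows the kind of inconsistency that a raw pose estimate can contain. The `left_`/`right_` naming scheme is an assumption of this example.

```python
def symmetrize_bone_lengths(lengths):
    """Return a copy of `lengths` with left/right pairs set to their mean.

    lengths: dict mapping bone name -> length. Bones whose names start with
    "left_" are paired with the matching "right_" entry when one exists;
    unpaired bones (e.g. "spine") are returned unchanged.
    """
    result = dict(lengths)
    for name, value in lengths.items():
        if not name.startswith("left_"):
            continue
        twin = "right_" + name[len("left_"):]
        if twin in lengths:
            mean = 0.5 * (value + lengths[twin])
            result[name] = mean
            result[twin] = mean
    return result
```

For instance, a left thigh estimated at 0.40 m and a right thigh at 0.44 m would both become 0.42 m, while a template-based skeleton would instead replace both with anatomically scaled segment lengths.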

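Inverse kinematics and Python scripts: An inverse kinematics algorithm is used to finalize the posture of the virtual service member. The algorithm adjusts the OpenSim skeletal model to best fit the estimated 3D pose. The entire posing pipeline is fully automated and implemented in Python 3. Integrating these tools has substantially sped up virtual service member model generation, reducing the time required from days to seconds or minutes. This advance is a major step forward in simulating and analyzing weapon training scenarios, because specific scenarios documented with images or video can be reconstructed quickly.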
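
For readers unfamiliar with inverse kinematics, the toy example below solves it analytically for a planar two-segment limb (say, upper arm and forearm): given fixed segment lengths and a target position for the wrist, it returns the shoulder and elbow angles. Full-body solvers such as the one used with the OpenSim skeleton handle many joints and markers at once, so this is only a minimal sketch of the principle; the function names and the planar simplification are assumptions of this example.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that bring the end of a
    planar two-segment limb as close as possible to the target (x, y).

    l1, l2: fixed segment lengths. Targets outside the reachable range are
    handled by clamping, which fully extends or folds the limb toward them.
    """
    d2 = x * x + y * y
    # Law of cosines for the elbow angle, clamped for unreachable targets.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    cos_elbow = max(-1.0, min(1.0, cos_elbow))
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow

def forward_kinematics(shoulder, elbow, l1, l2):
    """End position of the limb for the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

Because the segment lengths are fixed inputs, the solved posture always respects the skeleton's bone lengths, which is the property the raw pose estimate lacks.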

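Protocol

The images and videos used in this study were not obtained by the authors directly from human subjects. One image was sourced from free resources on Wikimedia Commons, available under a public domain license. The other image was provided by collaborators at the Walter Reed Army Institute of Research (WRAIR). The data obtained from WRAIR were de-identified and shared in accordance with institutional guidelines. For the images provided by WRAIR, the protocol followed the guidelines of WRAIR's human research ethics committee, and all necessary approvals and consents were obtained.

1. Accessing the BOP Tool SCENE module

- Open the BOP Tool SCENE module by clicking the BOP Tool SCENE Module button in the BOP Tool interface.

- Click Scenario Definition, and then click the Scenario Detail tab.

2. Loading and processing image data

- Click the POSETOOL IMPORT button in the BOP Tool SCENE module. A pop-up window opens and prompts for the relevant image or video.

- Navigate to the folder and select the relevant image/video with the mouse.

- Once the image is selected, click Open in the pop-up window.

NOTE: This operation reads the image or video file, generates an image if a video was selected, runs the pose estimation algorithm in the background, generates the virtual service member models involved in the weapon training scene, and loads them at their respective positions in the BOP Tool SCENE module.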

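3. Configuring the weapon and shooter

- Enter a name for the scenario in the text box under Scenario Name. The name is the user's choice; for the scenario described here, the developers named the scenario BlastDemo1.

- Enter a custom name in the Name field under Weapon Definition. This is also the user's choice; for the scenario described here, the developers chose the weapon name 120Mortar.

- Select the appropriate weapon class (e.g., Heavy Mortar) from the list of options in the drop-down menu.

- Select the weapon (in this case, M120) from the drop-down list. Once the weapon system is selected, the corresponding blast kernel is loaded automatically into the Charges subtab of the GUI.

NOTE: The drop-down lists the weapon systems available for the weapon class selected above.

- Select the ammunition shell (Full Range Training Round) for the selected weapon system using the drop-down options.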

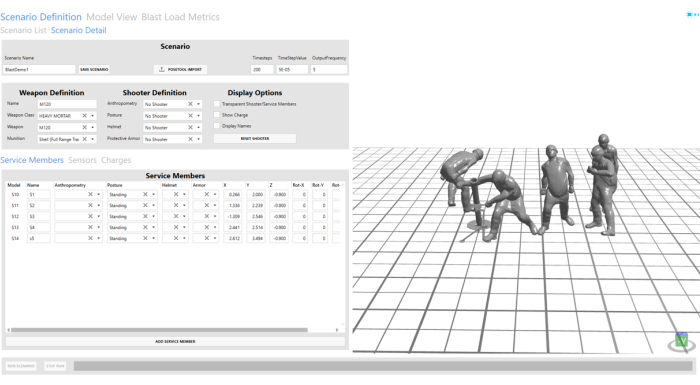

- Select No Shooter in the Anthropometry, Posture, Helmet, and Protective Armor fields under Shooter Definition, using the drop-down options. See Figure 3 for the configured shooter and service members.

NOTE: No shooter is selected in the shooter definition because the shooter is included in the imported scene in step 4.

Figure 3: Blast scene imported from the image data. The blast scene is displayed in the visualization window on the right side of the GUI tool.

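4. Configuring service members

- Click the Service Members tab. This tab controls the positions and orientations of the service members imported from the image data, using the X, Y, Z, and Rotation options. For the avatar corresponding to the assistant gunner, the authors adjusted the Y position from 2.456 to 2, which places the assistant gunner more reasonably.

- Use the Name field in the Service Members tab to choose custom names for all the virtual service member models imported into the GUI. Here, S1, S2, S3, S4, and S5 were chosen for the automatically imported service member models.

NOTE: For the scenario described here, the names S1 and S2 were given. The other fields are populated automatically. The user can change them to fine-tune the automatically estimated positions and postures.

- Use the Delete button to remove S2, S4, and S5. In the scenario shown here, only the shooter and the assistant gunner are present at the time of firing.

NOTE: This was done to demonstrate the custom user options for removing service member models from, or adding them to, an existing scene as needed.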

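5. Configuring virtual sensors

- Go to the Sensors tab. Click Add Sensor to add a new virtual sensor.

- Enter a custom name in the Name field. The developers chose V1 for the demonstration in this paper.

- Click the drop-down under the Sensor Type field and select Virtual as the type.

- Edit the text boxes under the X, Y, and Z fields to set the sensor position to (-0.5, 2, 0.545). For the scenario described here, the developers created four sensors at four different locations for demonstration purposes and chose V2, V3, and V4 as the names of the additional sensors.

- Repeat the four preceding steps (5.1 to 5.4) to create the additional sensors at (-0.5, 1, 0.545), (-0.5, 0.5, 0.545), and (-0.5, 0, 0.545). Leave the Rotation value at 0.

NOTE: The user can also select a planar sensor, whose orientation relative to the blast charge can be adjusted using the rotation.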
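
When the same sensor layout is reused across scenarios, it can help to keep it as data alongside the saved scenario. The sketch below records the four demonstration sensors from this section in a small Python structure. The class and field names are hypothetical and do not correspond to the BOP Tool's file format or API, which are not described here; the coordinates are the values entered in the GUI.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VirtualSensor:
    """One sensor row as entered in the Sensors tab (hypothetical schema)."""
    name: str
    x: float
    y: float
    z: float
    rotation: float = 0.0          # left at 0 in the demonstration
    sensor_type: str = "Virtual"   # a planar sensor would use the rotation

# Y positions of V1..V4; X and Z are the same for all four sensors.
Y_POSITIONS = [2.0, 1.0, 0.5, 0.0]

sensors = [
    VirtualSensor(name=f"V{i}", x=-0.5, y=y, z=0.545)
    for i, y in enumerate(Y_POSITIONS, start=1)
]
```

Writing the layout this way makes it obvious that only the Y coordinate varies between the four sensors.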

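6. Saving and running the program

- Click the Save Scenario button at the top of the GUI to save the weapon training scene.

- Click the Run Scenario button at the bottom to run the blast overpressure simulation for the M120 weapon training scene. The progress bar at the bottom shows the simulation progress.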

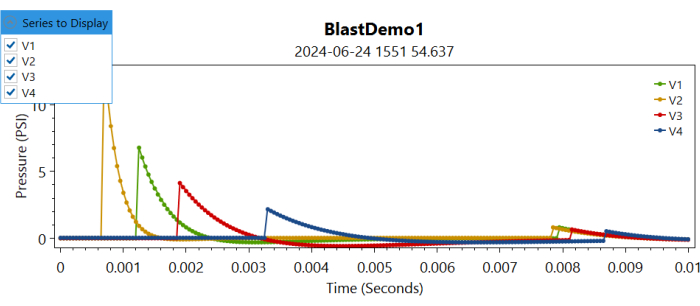

7. Visualizing the results

Figure 4: Plot of overpressure over time for the different virtual sensors. The user can choose which series to display by turning individual sensors on or off.

Results

Automated reconstruction of virtual service members and blast scenes

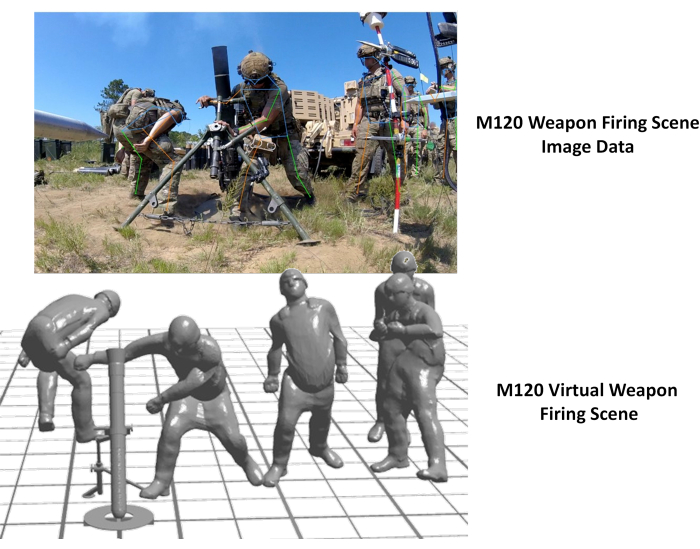

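The automation features of the BOP Tool made it possible to generate virtual service member body models and weapon training scene models automatically. Figure 5 shows a virtual training scene generated from image data. As can be seen, the resulting scene represents the image data well. The image used for the demonstration in Figure 5 was obtained from Wikimedia Commons.

Figure 5: Virtual weapon training scene from image data. The photograph on the left shows the image data for an AT4 weapon firing, and the right side shows the automatically generated virtual weapon training scene. The image was obtained from Wikimedia Commons.

The approach was also applied to reproduce an M120 weapon firing scene. The image was collected by WRAIR as part of a pressure data collection effort for the M120 mortar. Figure 6 below shows the reconstructed virtual weapon firing scene alongside the original image. A discrepancy was observed in the position of the assistant gunner in the virtual reconstruction; this can be corrected by adjusting the assistant gunner's position with the BOP GUI user options. In addition, the instructor's pelvic posture appeared inaccurate, probably because a stake in the image obstructed the view. Integrating this approach with other, depth-based imaging modalities would help address such discrepancies.

Figure 6: Virtual weapon training scene from image data. The photograph on the left shows the image data for an M120 weapon firing, and the right side shows the automatically generated virtual weapon training scene.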

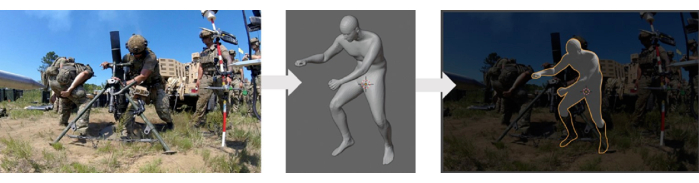

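Validation of the automated scene generation approach

The anthropometric model generator used in this study was validated using the ANSUR II human body scan database14, which contains anthropometric measurements from medical imaging data. The automated reconstruction method that uses this model generator was validated qualitatively with the data at hand. In this validation, the reconstructed models were overlaid on the experimental data (images) and compared. Figure 7 compares the 3D avatar model with the experimental data. A more thorough validation of the method is still needed, however, and will require additional experimental data from the scene, such as the exact positions, postures, and orientations of the service members involved in the training scene.

Figure 7: Qualitative comparison of the virtual body model generated from an image. The left panel shows the original image, the center panel shows the generated virtual body model, and the right panel shows the virtual model overlaid on the original image.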

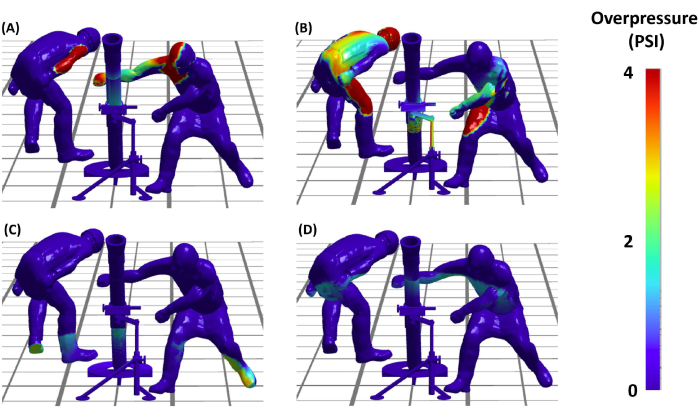

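Representative blast overpressure simulations

After setting up the blast scene, the authors proceeded to the key phase of running the blast overpressure (BOP) simulations, which make it possible to understand how blast loading is distributed over the service members involved in a weapon firing scene. Figure 8 shows the results of these BOP simulations for an AT4 weapon firing event. The simulations provide a detailed visualization of the overpressure loading on the virtual service members in the scene at different instants in time. Overall, the results show that the protocol is not only feasible but also effective for creating accurate and analytically useful reconstructions of weapon training scenarios, paving the way for more advanced research on the safety and efficiency of military training.

Figure 8: Blast overpressure exposure on the shooter. (A-D) The four panels show the model-predicted blast overpressure on the virtual service members involved in firing the M120 mortar at different times. Panels (C) and (D) show overpressure propagation due to ground reflection.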

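Discussion

The computational framework presented in this paper substantially accelerates the generation of weapon blast training scenes compared with the earlier manual method based on visual assessment. The approach demonstrates the framework's ability to capture and reconstruct diverse military postures quickly.

Advantages of the current approach

Creating virtual human body models in specific postures and positions is a difficult task, and few tools are available for the purpose. The conventional approach, specifically the method used in the legacy BOP Tool, relied on CoBi-DYN14,15,16,17 to manually create virtual human body models with clothing, helmets, protective armor, and boots. The models were generated by rough visual approximation, without a systematic approach. In the legacy BOP Tool, CoBi-DYN was used to create a database of human body models that is accessible in the scenario setup step. The user manually selects approximate posture configurations and places them at approximate positions relative to a specific weapon system in order to run a BOP scenario. Reconstructing a blast scene from the existing service member database (accessed through drop-downs in the BOP Tool) was relatively fast, but the initial creation of the virtual service member model database was time-consuming and, because the process was manual and approximate, took about 16 to 24 h per scene.

By contrast, the new approach uses established pose estimation tools to create virtual human body models faster and with more automation. These tools automatically use video and image data to rapidly reconstruct blast scenes with virtual service member avatars, which greatly reduces the time needed to create the human body database. With this approach, a complete virtual scene can be generated or reconstructed from imaging data with a single button click and without additional software. The whole process currently takes about 5 to 6 s per scene (after the image data have been read, as shown in the video), a substantial gain in efficiency over the conventional method.

The method is not intended to replace the original approach but to complement it by speeding up the generation of virtual service member models (which could be added to the service member body model database in the future). This simplifies the addition of new configurations of varying complexity, eases the future integration of new weapon systems, and improves the extensibility of the BOP Tool. Compared with the conventional approach, the presented method offers a more streamlined, automated solution that reduces manual effort and time while making virtual human body model creation more accurate and systematic. This highlights the strength and novelty of the described method in this field.

Validation of automated scene generation

The approach was validated qualitatively by overlaying the reconstructed scenarios on the image data. Quantitative validation was not feasible, however, because position and posture data were not available for these images. The authors recognize the importance of a more thorough validation and plan to address it in future work. To that end, the authors envision a comprehensive data collection effort to capture accurate position and posture information. This will enable a detailed quantitative validation and ultimately improve the robustness and accuracy of the approach.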
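
One metric that such a quantitative validation could use, once reference joint positions are measured, is the mean per-joint position error between the reconstructed avatar and the reference. The paper does not specify a metric, so the choice here is an assumption, as are the function name and the dictionary-based input format.

```python
import math

def mean_joint_error(predicted, reference):
    """Mean Euclidean distance over the joints present in both inputs.

    predicted, reference: dicts mapping joint name -> (x, y, z) in the same
    coordinate frame and length unit. Joints missing from either dict
    are ignored.
    """
    common = predicted.keys() & reference.keys()
    if not common:
        raise ValueError("no joints in common between the two poses")
    total = sum(math.dist(predicted[j], reference[j]) for j in common)
    return total / len(common)
```

A per-joint breakdown of the same distances would also show whether errors concentrate in occluded regions, such as the pelvis in the M120 example.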

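Validation of blast exposure simulations

The weapon blast kernels were developed and validated using pencil probe data collected during weapon firing. Further details on the weapon blast kernels and the associated exposures will be presented in future publications. Some information on this is also available in earlier publications11,12. This ongoing effort will help improve the accuracy and effectiveness of the blast overpressure simulations and strengthen the validity of the tool.

The approach presented here can also be used to generate virtual avatars of service members that can subsequently be incorporated into a database for selection in the BOP Tool. In the current process, the models are not saved to the database automatically, but the authors plan to include this capability in a future version of the BOP Tool. In addition, the authors have an in-house tool that allows the posture to be modified manually after the automated posture and 3D model have been generated from the image data. This capability currently exists as a standalone tool and has not been integrated into the BOP Tool because its user interface needs further development. It is nevertheless a work in progress, and the authors plan to incorporate it into a future version of the BOP Tool.

The blast loading data can also be exported as ASCII text files, and further post-processing can be applied to examine blast dose patterns for different weapon systems in more detail. Work is under way to develop advanced post-processing tools for output metrics such as impulse and intensity, which could help users understand and investigate more complex repeated blast loading in these scenarios. These tools are also being developed for computational efficiency so that simulations run fast. They could therefore be used to run inverse optimization studies that determine optimal postures and positions during weapon firing scenarios. Such improvements would broaden the tool's applicability for optimizing training protocols at different weapons training ranges. The blast dose estimates can also be used to develop more refined high-resolution macroscale computational models of vulnerable anatomical regions, such as the brain, lungs, and others18,19,20, as well as microscale injury models20,21. Note that the helmets and armor included in the models are for visual representation only and do not affect the blast dose in these simulations. Because the models are treated as rigid structures, including them does not change or influence the results of the blast overpressure simulations.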
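
As a minimal sketch of the kind of post-processing described above, the code below reads a pressure-time trace and computes the peak overpressure and the positive-phase impulse by trapezoidal integration. The export layout is not documented in this paper, so the two-column "time pressure" text format with optional `#` comment lines is purely an assumption, as are the function names.

```python
def parse_trace(text):
    """Parse whitespace-separated 'time pressure' rows into a list of tuples.

    Blank lines and lines starting with '#' are skipped (assumed format).
    """
    trace = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        t, p = line.split()[:2]
        trace.append((float(t), float(p)))
    return trace

def peak_overpressure(trace):
    """Largest overpressure value in the trace."""
    return max(p for _, p in trace)

def positive_impulse(trace):
    """Trapezoidal integral of the positive part of the overpressure.

    Negative samples are clipped to zero, so a segment that crosses zero
    is only approximated; finer time sampling reduces that error.
    """
    total = 0.0
    for (t0, p0), (t1, p1) in zip(trace, trace[1:]):
        total += 0.5 * (max(p0, 0.0) + max(p1, 0.0)) * (t1 - t0)
    return total
```

The units of the results follow the units of the exported file; for example, pressure in kPa and time in ms would give impulse in kPa·ms.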

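This paper uses existing open-source pose estimation tools to estimate human posture in weapon training scenarios. Note that the framework developed and discussed here is tool-agnostic; that is, the existing tools can be replaced with newer ones as the field advances. Overall, testing showed that although modern software tools are very powerful, image-based pose detection remains a difficult task. Several recommendations can improve pose detection performance. The tools work best when the person of interest is clearly visible in the camera's field of view. That is not always possible during weapons training exercises, but taking it into account when placing cameras can improve pose detection results. In addition, service members on training exercises often wear camouflage fatigues that blend into the surroundings, which makes them harder to detect for both the human eye and machine learning (ML) algorithms. Methods exist to improve the ability of ML algorithms to detect people wearing camouflage23, but they are not trivial to implement. Where possible, collecting images of weapons training at locations with a high-contrast background can improve pose detection.

Furthermore, the traditional approach to estimating 3D quantities from images is photogrammetry with multiple camera angles. Capturing images or video from several angles can also improve pose estimation, and fusing pose estimates from multiple cameras is relatively straightforward24. Another photogrammetric technique that may improve results is to use a checkerboard or another object of known dimensions as a common reference for all cameras. One challenge with multiple cameras is time-synchronizing them. Custom-trained machine learning models could be developed to detect features such as helmets instead of faces, or to detect people wearing military-specific clothing and equipment (e.g., boots, vests, and body armor). Existing pose estimation tools can be extended with custom-trained ML models. This is time-consuming and tedious, but it can substantially improve the performance of pose estimation models.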
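
One simple way to combine estimates from several cameras is a confidence-weighted average of each joint position. The sketch below assumes that every camera's estimate has already been expressed in a common world frame (for instance, through a shared checkerboard reference) and that the frames are time-synchronized. It is a deliberately simpler technique than photogrammetric triangulation and is offered only as an illustration; the function name and input format are hypothetical.

```python
def fuse_joint(estimates):
    """Confidence-weighted mean of one joint's 3D position.

    estimates: list of ((x, y, z), confidence) pairs, one per camera,
    all in the same world frame. Confidences must be non-negative and
    must not all be zero.
    """
    total_weight = sum(w for _, w in estimates)
    if total_weight <= 0:
        raise ValueError("at least one estimate needs a positive confidence")
    return tuple(
        sum(pos[i] * w for pos, w in estimates) / total_weight
        for i in range(3)
    )
```

A camera in which the joint is occluded would typically report a low confidence and therefore contribute little to the fused position.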

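In summary, this paper outlines the components of the enhanced blast overpressure framework. The authors also recognize the potential to increase its applicability and effectiveness through more seamless integration and full automation of the pipeline. Elements such as the generation of scaled 3D body meshes with the AMG tool are being automated to reduce manual user input, and work is under way to integrate these capabilities into the BOP Tool SCENE module. As the technology matures, it will be made available to all DoD stakeholders and laboratories. Ongoing work also includes the characterization and validation of weapon kernels for additional weapon systems. This continued effort to refine and validate the methodology is intended to keep the tools developed by the authors at the forefront of technological progress and to contribute to the safety and effectiveness of military training exercises. Future publications will describe these developments in more detail and contribute to the broader field of military training and safety.

Disclosures

The authors have nothing to disclose.

Acknowledgments

This research is funded by the DoD Blast Injury Research Coordinating Office under MTEC project call MTEC-22-02-MPAI-082. The authors also acknowledge the contributions of Hamid Gharahi to the weapon blast kernels and of Zhijian J Chen to the development of modeling capabilities for weapon firing blast overpressure simulations. The views, opinions, and/or findings expressed in this presentation are those of the authors and do not reflect the official policy or position of the Department of the Army or the Department of Defense.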

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Anthropometric Model Generator (AMG) | CFD Research | N/A | For generating 3D human body models with different anthropometric characteristics. The tool is DoD open source. |
| BOP Tool | CFD Research | N/A | For setting up blast scenes and overpressure simulations. The tool is DoD open source. |
| BOP Tool SCENE Module | CFD Research | N/A | For setting up blast scenes and overpressure simulations. The tool is DoD open source. |
| Mediapipe | | Version 0.9 | Open-source pose estimation library. |
| MMPose | OpenMMLab | Version 1.2 | Open-source pose estimation library. |
| OpenSim | Stanford University | Version 4.4 | Open-source musculoskeletal modeling and simulation platform. |
| Python 3 | Anaconda Inc | Version 3.8 | The open-source Individual Edition containing Python 3.8 and preinstalled packages, used for video processing and for connecting the pose estimation tools. |

References

- LaValle, C. R., et al. Neurocognitive performance deficits related to immediate and acute blast overpressure exposure. Front Neurol. 10, (2019).

- Kamimori, G. H., et al. Longitudinal investigation of neurotrauma serum biomarkers, behavioral characterization, and brain imaging in soldiers following repeated low-level blast exposure (New Zealand Breacher Study). Military Med. 183 (suppl_1), 28-33 (2018).

- Wang, Z., et al. Acute and chronic molecular signatures and associated symptoms of blast exposure in military breachers. J Neurotrauma. 37 (10), 1221-1232 (2020).

- Gill, J., et al. Moderate blast exposure results in increased IL-6 and TNFα in peripheral blood. Brain Behavior Immunity. 65, 90-94 (2017).

- Carr, W., et al. Ubiquitin carboxy-terminal hydrolase-L1 as a serum neurotrauma biomarker for exposure to occupational low-Level blast. Front Neurol. 6, (2015).

- Boutté, A. M., et al. Brain-related proteins as serum biomarkers of acute, subconcussive blast overpressure exposure: A cohort study of military personnel. PLoS One. 14 (8), e0221036 (2019).

- Skotak, M., et al. Occupational blast wave exposure during multiday 0.50 caliber rifle course. Front Neurol. 10, (2019).

- Kamimori, G. H., Reilly, L. A., LaValle, C. R., Silva, U. B. O. D. Occupational overpressure exposure of breachers and military personnel. Shock Waves. 27 (6), 837-847 (2017).

- Misistia, A., et al. Sensor orientation and other factors which increase the blast overpressure reporting errors. PLoS One. 15 (10), e0240262 (2020).

- National Defense Authorization Act for Fiscal Year 2020. Wikipedia Available from: https://en.wikipedia.org/w/index.php?title=National_Defense_Authorization_Act_for_Fiscal_Year_2020&oldid=1183832580 (2023)

- Przekwas, A., et al. Fast-running tools for personalized monitoring of blast exposure in military training and operations. Military Med. 186 (Supplement_1), 529-536 (2021).

- Spencer, R. W., et al. Fiscal year 2018 National Defense Authorization Act, Section 734, Weapon systems line of inquiry: Overview and blast overpressure tool-A module for human body blast wave exposure for safer weapons training. Military Med. 188 (Supplement_6), 536-544 (2023).

- Delp, S. L., et al. OpenSim: open-source software to create and analyze dynamic simulations of movement. IEEE Trans Biomed Eng. 54 (11), 1940-1950 (2007).

- Zhou, X., Sun, K., Roos, P. E., Li, P., Corner, B. Anthropometry model generation based on ANSUR II database. Int J Digital Human. 1 (4), 321 (2016).

- Zhou, X., Przekwas, A. A fast and robust whole-body control algorithm for running. Int J Human Factors Modell Simulat. 2 (1-2), 127-148 (2011).

- Zhou, X., Whitley, P., Przekwas, A. A musculoskeletal fatigue model for prediction of aviator neck manoeuvring loadings. Int J Human Factors Modell Simulat. 4 (3-4), 191-219 (2014).

- Roos, P. E., Vasavada, A., Zheng, L., Zhou, X. Neck musculoskeletal model generation through anthropometric scaling. PLoS One. 15 (1), e0219954 (2020).

- Garimella, H. T., Kraft, R. H. Modeling the mechanics of axonal fiber tracts using the embedded finite element method. Int J Numer Method Biomed Eng. 33 (5), (2017).

- Garimella, H. T., Kraft, R. H., Przekwas, A. J. Do blast induced skull flexures result in axonal deformation. PLoS One. 13 (3), e0190881 (2018).

- Przekwas, A., et al. Biomechanics of blast TBI with time-resolved consecutive primary, secondary, and tertiary loads. Military Med. 184 (Suppl 1), 195-205 (2019).

- Gharahi, H., Garimella, H. T., Chen, Z. J., Gupta, R. K., Przekwas, A. Mathematical model of mechanobiology of acute and repeated synaptic injury and systemic biomarker kinetics. Front Cell Neurosci. 17, 1007062 (2023).

- Przekwas, A., Somayaji, M. R., Gupta, R. K. Synaptic mechanisms of blast-induced brain injury. Front Neurol. 7, 2 (2016).

- Liu, Y., Wang, C., Zhou, Y. Camouflaged people detection based on a semi-supervised search identification network. Def Technol. 21, 176-183 (2023).

- Real time 3D body pose estimation using MediaPipe. Available from: https://temugeb.github.io/python/computer_vision/2021/09/14/bodypose3d.html (2021)


This article has been published


Copyright © 2023 MyJoVE Corporation. All rights reserved