Method Article

Artificial Intelligence-Based System for Detecting Attention Levels in Students

Summary

This paper proposes an artificial intelligence-based system to automatically detect whether students are paying attention to the class or are distracted. This system is designed to help teachers maintain students' attention, optimize their lessons, and dynamically introduce modifications in order for them to be more engaging.

Abstract

The attention level of students in a classroom can be improved through the use of Artificial Intelligence (AI) techniques. By automatically identifying the attention level, teachers can employ strategies to regain students' focus. This can be achieved through various sources of information.

One source is the emotions reflected on students' faces. AI can detect emotions such as neutral, disgust, surprise, sadness, fear, happiness, and anger. The direction of the students' gaze can also indicate their level of attention. Another source is the students' body posture. Using cameras and deep learning techniques, posture can be analyzed to determine the level of attention; for example, students who are slouching or resting their heads on their desks may have a lower level of attention. Smartwatches distributed to the students can provide biometric and other data, including heart rate and inertial measurements, which can also be used as indicators of attention.

By combining these sources of information, an AI system can be trained to identify the level of attention in the classroom. However, integrating the different types of data poses a challenge that requires creating a labeled dataset. Expert input and existing studies are consulted for accurate labeling. In this paper, we propose the integration of such measurements and the creation of a dataset and a potential attention classifier. To provide feedback to the teacher, we explore various methods, such as a smartwatch or a computer. Once the teacher becomes aware of attention issues, they can adjust their teaching approach to re-engage and motivate the students.

In summary, AI techniques can automatically identify the students' attention level by analyzing their emotions, gaze direction, body posture, and biometric data. This information can assist teachers in optimizing the teaching-learning process.

Introduction

In modern educational settings, accurately assessing and maintaining students' attention is crucial for effective teaching and learning. However, traditional methods of gauging engagement, such as self-reporting or subjective teacher observations, are time-consuming and prone to biases. To address this challenge, Artificial Intelligence (AI) techniques have emerged as promising solutions for automated attention detection. One significant aspect of understanding students' engagement levels is emotion recognition1. AI systems can analyze facial expressions to identify emotions, such as neutral, disgust, surprise, sadness, fear, happiness, and anger2.

Gaze direction and body posture are also crucial indicators of students' attention3. By utilizing cameras and advanced machine learning algorithms, AI systems can accurately track where students are looking and analyze their body posture to detect signs of disinterest or fatigue4. Furthermore, incorporating biometric data enhances the accuracy and reliability of attention detection5. By collecting measurements, such as heart rate and blood oxygen saturation levels, through smartwatches worn by students, objective indicators of attention can be obtained, complementing other sources of information.

This paper proposes a system that evaluates an individual's level of attention using color cameras and other sensors. It combines emotion recognition, gaze direction analysis, body posture assessment, and biometric data to give educators a comprehensive view of the teaching-learning process and to help them improve student engagement. By applying AI techniques, this data can also be evaluated automatically.

The main goal of this work is to describe the system that allows us to capture all the information and, once captured, to train an AI model that allows us to obtain the attention of the whole class in real-time. Although other works have already proposed capturing attention using visual or emotional information6, this work proposes the combined use of these techniques, which provides a holistic approach to allow the use of more complex and effective AI techniques. Moreover, the datasets hitherto available are limited to either a set of videos or one of biometric data. The literature includes no datasets that provide complete data with images of the student's face or their body, biometric data, data on the teacher's position, etc. With the system presented here, it is possible to capture this type of dataset.

The system associates a level of attention with each student at each point of time. This value is a probability value of attention between 0% and 100%, which can be interpreted as low level of attention (0%-40%), medium level of attention (40%-75%), and high level of attention (75%-100%). Throughout the text, this probability of attention is referred to as the level of attention, student attention, or whether students are distracted or not, but these are all related to the same output value of our system.
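The mapping from the attention probability to the three bands can be sketched as follows. This is only an illustration: the function name is hypothetical, and because the stated ranges share their boundary values (40% and 75%), the sketch assumes that a boundary value belongs to the higher band.

```python
def attention_category(probability: float) -> str:
    """Map an attention probability in [0, 100] to 'low', 'medium' or 'high'.

    Assumption (not stated in the text): the boundary values 40 and 75
    are assigned to the higher band.
    """
    if not 0.0 <= probability <= 100.0:
        raise ValueError("probability must be between 0 and 100")
    if probability < 40.0:
        return "low"
    if probability < 75.0:
        return "medium"
    return "high"
```

For example, a value of 39.9 would be reported as low attention and a value of 40 as medium attention under this assumption.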

Over the years, the field of automatic engagement detection has grown significantly due to its potential to revolutionize education. Researchers have proposed various approaches for this area of study.

Ma et al.7 introduced a novel method based on a Neural Turing Machine for automatic engagement recognition. They extracted certain features, such as eye gaze, facial action units, head pose, and body pose, to create a comprehensive representation of engagement recognition.

EyeTab8 is a model-based system that estimates where a person is looking using both eyes. It was designed to run on a standard tablet with no modifications. The system relies on well-known image processing and computer vision algorithms. Its gaze estimation pipeline includes a Haar-like feature-based eye detector and a RANSAC-based limbus ellipse fitting approach.

Sanghvi et al.9 propose an approach that relies on vision-based techniques to automatically extract expressive postural features from videos recorded from a lateral view, capturing the behavior of the children. An initial evaluation is conducted, involving the training of multiple recognition models using contextualized affective postural expressions. The results obtained demonstrate that patterns of postural behavior can effectively predict the engagement of the children with the robot.

In other works, such as Gupta et al.10, a deep learning-based method is employed to detect the real-time engagement of online learners by analyzing their facial expressions and classifying their emotions. The approach utilizes facial emotion recognition to calculate an engagement index (EI) that predicts two engagement states: engaged and disengaged. Various deep learning models, including Inception-V3, VGG19, and ResNet-50, are evaluated and compared to identify the most effective predictive classification model for real-time engagement detection.

In Altuwairqi et al.11, the researchers present a novel automatic multimodal approach for assessing student engagement levels in real-time. To ensure accurate and dependable measurements, the team integrated and analyzed three distinct modalities that capture students' behaviors: facial expressions for emotions, keyboard keystrokes, and mouse movements.

Belle et al.12 propose the development of a monitoring system that uses electrocardiography (ECG) as a primary physiological signal to analyze and predict the presence or absence of cognitive attention in individuals while performing a task.

Alban et al.13 utilize a neural network (NN) to detect emotions by analyzing the heart rate (HR) and electrodermal activity (EDA) values of various participants in both time and frequency domains. They find that an increase in the root-mean-square of successive differences (RMSSD) and in the standard deviation of normal-to-normal intervals (SDNN), coupled with a decrease in the average HR, indicates heightened activity in the sympathetic nervous system, which is associated with fear.
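For readers unfamiliar with these two heart-rate variability measures, the following minimal sketch shows how they are commonly computed from a series of beat-to-beat intervals. It is not taken from the cited work or from the system described here; the function names are hypothetical, the intervals are assumed to be in milliseconds, and SDNN is computed as a sample standard deviation (dividing by n - 1), which is one common convention.

```python
import math
from typing import Sequence


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root-mean-square of successive differences between intervals."""
    if len(rr_ms) < 2:
        raise ValueError("at least two intervals are required")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def sdnn(rr_ms: Sequence[float]) -> float:
    """Sample standard deviation of the intervals (assumed n - 1 divisor)."""
    if len(rr_ms) < 2:
        raise ValueError("at least two intervals are required")
    mean = sum(rr_ms) / len(rr_ms)
    return math.sqrt(sum((x - mean) ** 2 for x in rr_ms) / (len(rr_ms) - 1))
```

RMSSD reflects short-term, beat-to-beat variation, whereas SDNN reflects the overall variability of the recording.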

Kajiwara et al.14 propose an innovative system that employs wearable sensors and deep neural networks to forecast the level of emotion and engagement in workers. The system follows a three-step process. Initially, wearable sensors capture and collect data on behaviors and pulse waves. Subsequently, time series features are computed based on the behavioral and physiological data acquired. Finally, deep neural networks are used to input the time series features and make predictions on the individual's emotions and engagement levels.

In other research, such as Costante et al.15, an approach based on a novel transfer metric learning algorithm is proposed, which utilizes prior knowledge of a predefined set of gestures to enhance the recognition of user-defined gestures. This improvement is achieved with minimal reliance on additional training samples. Similarly, a sensor-based human activity recognition framework16 is presented to address the goal of the impersonal recognition of complex human activities. The framework uses signal data collected from wrist-worn sensors and employs four RNN-based deep learning models (Long Short-Term Memory, Bidirectional Long Short-Term Memory, Gated Recurrent Unit, and Bidirectional Gated Recurrent Unit networks) to investigate the activities performed by the user of the wearable device.

Protocol

The following protocol follows the guidelines of the University of Alicante's human research ethics committee with the approved protocol number UA-2022-11-12. Informed consent has been obtained from all participants for this experiment and for using the data here.

1. Hardware, software, and class setup

- Set up a router with WiFi capabilities (the experiments were carried out using a DLink DSR 1000AC) in the desired location so that its range covers the entire room. Here, 25 m² classrooms with 30 students were covered.

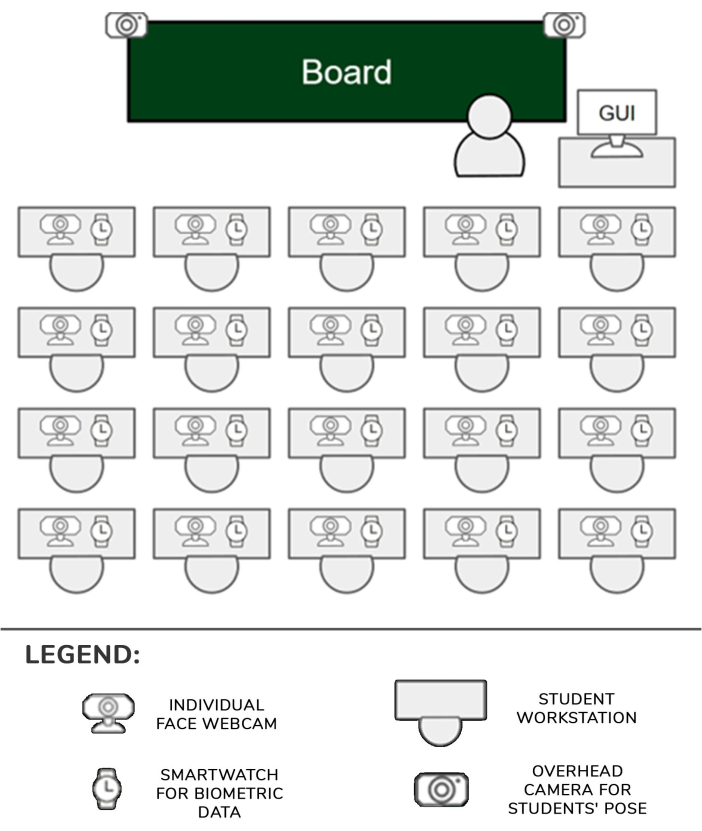

- Set up one smartwatch (here, a Samsung Galaxy Watch 5) and one camera (here, a Logitech C920) for each student location. Set up one embedded device for every two students. Fix two additional cameras (hereafter referred to as zenithal cameras) on two tripods and connect them to another embedded device.

- Connect the cameras to the corresponding embedded devices with a USB link and turn them ON. Turn ON the smartwatches as well. Connect every embedded device and smartwatch to the WiFi network of the router set up in step 1.1.

- Place the cameras in the corner of each student's desk and point them forward and slightly tilted upwards so that the face of the student is clearly visible in the images.

- Place the two tripods with the cameras in front of the desks that are closest to the aisles in the first row of seats of the classroom. Move the tripod riser to the highest position so the cameras can clearly view most of the students. Each embedded device will be able to manage one or two students, along with their respective cameras and watches. The setup is depicted in Figure 1.

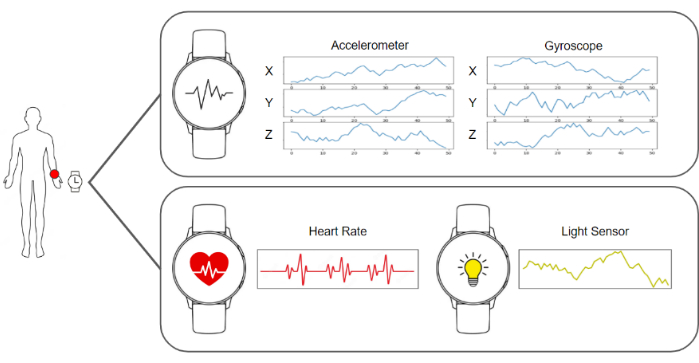

- Run the capturing software in the smartwatches and in the embedded devices so that they are ready to send the images and the accelerometer, gyroscope, heart rate, and lighting data. Run the server software that collects the data and stores it. A diagram of all these elements can be seen in Figure 2.

- Ensure the zenithal cameras dominate the scene so that they have a clear view of the students' bodies. Place an additional individual camera in front of each student.

- Let the students sit in their places, inform them of the goals of the experiment, and instruct them to wear the smartwatches on their dominant hand and not to interact with any of the elements of the setup.

- Start the capture and collection of the data in the server and resume the class lessons as usual.

Figure 1: Hardware and data pipeline. The cameras and smartwatches data are gathered and fed to the machine learning algorithms to be processed.

Figure 2: Position of the sensors, teacher, and students. Diagram showing the positions of the cameras, smartwatches, and GUI in the classroom with the teacher and students.

2. Capture and data processing pipeline

NOTE: All these steps are performed automatically by processing software deployed in a server. The implementation used for the experiments in this work was written in Python 3.8.

- Collect the required data by gathering all the images and biometric data from the smartwatch for each student and build a data frame that covers 1 s of data. This data frame is composed of one image from the individual camera, one image from each zenithal camera (two in this case), fifty records of the three gyroscope values, fifty records of the three accelerometer values, one heart-rate value, and one light-condition value.

- To compute the head direction, first retrieve an image from the webcam that is pointed at the student's face. Then, process that image with the BlazeFace17 landmark estimation algorithm. The output of this algorithm is a list of 2D key points corresponding to specific areas of the face.

- By using the estimated position of the eyes, nose, mouth, and chin provided by the algorithm, solve the Perspective-n-Point (PnP) problem using the cv::solvePnP function of the OpenCV library with a canonical face model as the 3D reference. The rotation matrix that defines the head direction is obtained as a result of this procedure.

- Compute the body pose by forwarding the zenithal image from the data frame in which the student is depicted to the BlazePose18 landmark estimation algorithm and retrieve a list of 2D coordinates of the joints of the body depicted in the image. This list of landmarks describes the student's pose.
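A head rotation matrix such as the one obtained from the Perspective-n-Point step can be easier to inspect as three angles. The sketch below is an illustration only, not the implementation used in this work: the function names are hypothetical, and it assumes the rotation is composed as R = Rz · Ry · Rx. Which angle corresponds to pitch, yaw, or roll depends on the camera axis convention, so the angles are simply labeled by axis.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def rotation_from_euler(x_deg: float, y_deg: float, z_deg: float) -> Matrix:
    """Build R = Rz @ Ry @ Rx from angles in degrees (assumed convention)."""
    x, y, z = (math.radians(a) for a in (x_deg, y_deg, z_deg))
    cx, sx = math.cos(x), math.sin(x)
    cy, sy = math.cos(y), math.sin(y)
    cz, sz = math.cos(z), math.sin(z)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def euler_from_rotation(R: Matrix) -> Tuple[float, float, float]:
    """Recover the angles (about x, y, z) in degrees from R = Rz @ Ry @ Rx."""
    cy_abs = math.hypot(R[0][0], R[1][0])  # equals |cos(y)|
    if cy_abs > 1e-6:
        x = math.atan2(R[2][1], R[2][2])
        y = math.atan2(-R[2][0], cy_abs)
        z = math.atan2(R[1][0], R[0][0])
    else:
        # Gimbal lock: x and z are coupled, so z is fixed to zero.
        x = math.atan2(-R[1][2], R[1][1])
        y = math.atan2(-R[2][0], cy_abs)
        z = 0.0
    return (math.degrees(x), math.degrees(y), math.degrees(z))
```

A round trip such as `euler_from_rotation(rotation_from_euler(10, -20, 5))` should return the original angles up to floating-point error, which is a quick way to check that the convention is applied consistently.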

NOTE: The body pose is important as it can accurately represent a student's engagement with different activities during the class. For instance, if the student is sitting naturally with their hands on the desk, it could mean that they are taking notes. In contrast, if they are continually moving their arms, it could mean they are speaking to someone.

- Obtain the image of the student's face and perform alignment preprocessing. Retrieve the key points of the eyes from the list computed in step 2.3 and project them to the image plane so that the position of the eyes is obtained.

- Trace a virtual vector that joins the position of both eyes. Compute the angle between that vector and the horizontal line and build a homography matrix to apply the reverse angle to the image and the key points so that the eyes are aligned horizontally.

- Crop the face using the key points to detect its limits and feed the patch to the emotion detection convolutional neural network19. Retrieve the output from the network, which is a vector with 7 positions, each position giving the probability of the face showing one of the seven basic emotions: neutral, happy, disgusted, angry, scared, surprised, and sad.

- Measure the level of attention by obtaining the accelerometer, gyroscope, and heart rate data from the data frame (Figure 3).

- Feed the stream of accelerometer and gyroscope data to the deep learning-based activity recognition model, which was custom-built and trained from scratch (described in the representative results). Retrieve the output from the model, which is a vector with 4 positions, each position giving the probability of the data representing one of the following possible actions: handwriting, typing on a keyboard, using a mobile phone, or resting, as shown in Figure 4.

- Feed the final attention classifier with a linearized (flattened) vector of all the results from the previous systems, obtained by merging the head direction, emotion recognition output, body pose, and the gyroscope, accelerometer, and heart rate data. Retrieve the result from this final classifier, which is a score from 0 to 100. Classify this continuous value into one of the three possible discrete categories of attention: low level of attention (0%-40%), medium level of attention (40%-75%), and high level of attention (75%-100%). The structure of the dataset generated is shown in Table 1.

- Show the results of the attention levels to the teacher using the graphical user interface (GUI) from the teacher's computer, accessible from a regular web browser.
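The merging of intermediate results into a single input for the final attention classifier can be pictured with the following minimal sketch. It is not the authors' code: the function names and the ordering of the parts are assumptions, and the inputs are expected to be plain nested lists or tuples of numbers.

```python
from typing import List, Sequence


def flatten(values: Sequence) -> List[float]:
    """Recursively flatten nested lists/tuples of numbers into one list."""
    out: List[float] = []
    for v in values:
        if isinstance(v, (list, tuple)):
            out.extend(flatten(v))
        else:
            out.append(float(v))
    return out


def build_feature_vector(
    head_rotation: Sequence,   # 3x3 rotation matrix
    emotion_probs: Sequence,   # 7 emotion probabilities
    body_landmarks: Sequence,  # list of (x, y) joint coordinates
    gyroscope: Sequence,       # 50 records of 3 values
    accelerometer: Sequence,   # 50 records of 3 values
    heart_rate: float,
) -> List[float]:
    """Concatenate all parts in a fixed (assumed) order."""
    if len(emotion_probs) != 7:
        raise ValueError("expected 7 emotion probabilities")
    return (
        flatten(head_rotation)
        + flatten(emotion_probs)
        + flatten(body_landmarks)
        + flatten(gyroscope)
        + flatten(accelerometer)
        + [float(heart_rate)]
    )
```

Keeping the order of the parts fixed matters because the classifier receives only positions in the vector, not names; any change in ordering between training and inference would silently corrupt the input.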

Figure 3: Data captured by the smartwatch. The smartwatch provides a gyroscope, accelerometer, heart rate, and light condition as streams of data.
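As a rough illustration of how the smartwatch streams could be grouped into the 1 s data frames described in the protocol (fifty gyroscope records, fifty accelerometer records, one heart-rate value, and one light-condition value), consider the sketch below. The class and function names are hypothetical, the camera images are left out for brevity, and the sketch assumes that inertial samples arrive in order and that one heart-rate and one light value are available per second.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
SAMPLES_PER_FRAME = 50  # inertial records per 1 s frame, as in the protocol


@dataclass
class SmartwatchFrame:
    gyroscope: List[Vec3]
    accelerometer: List[Vec3]
    heart_rate: float
    light: float


def build_frames(
    gyro: Sequence[Vec3],
    accel: Sequence[Vec3],
    heart_rates: Sequence[float],
    lights: Sequence[float],
) -> List[SmartwatchFrame]:
    """Group the streams into complete 1 s frames; leftovers are dropped."""
    n_frames = min(
        len(gyro) // SAMPLES_PER_FRAME,
        len(accel) // SAMPLES_PER_FRAME,
        len(heart_rates),
        len(lights),
    )
    frames = []
    for i in range(n_frames):
        window = slice(i * SAMPLES_PER_FRAME, (i + 1) * SAMPLES_PER_FRAME)
        frames.append(
            SmartwatchFrame(
                gyroscope=list(gyro[window]),
                accelerometer=list(accel[window]),
                heart_rate=heart_rates[i],
                light=lights[i],
            )
        )
    return frames
```

Dropping incomplete trailing windows keeps every frame the same size, which simplifies feeding the frames to a model with a fixed input shape.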

Figure 4: Examples of the categories considered by the activity recognition model. Four different actions are recognized by the activity recognition model: handwriting, typing on a keyboard, using a smartphone, and resting position.
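The four-position output vector of the activity recognition model can be turned into a readable label as sketched below. The function name is hypothetical, and the order of the classes is an assumption that simply follows the order in which the actions are listed in the text; the real model may use a different index order.

```python
from typing import Sequence, Tuple

# Assumed class order, following the order used in the text.
ACTIVITIES = (
    "handwriting",
    "typing on a keyboard",
    "using a mobile phone",
    "resting",
)


def most_likely_activity(probabilities: Sequence[float]) -> Tuple[str, float]:
    """Return the label and probability of the highest-scoring activity."""
    if len(probabilities) != len(ACTIVITIES):
        raise ValueError("expected one probability per activity")
    best = max(range(len(ACTIVITIES)), key=lambda i: probabilities[i])
    return ACTIVITIES[best], probabilities[best]
```

The same pattern would apply to the seven-position output of the emotion recognition network, with its own list of labels.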

Results

The target group of this study is undergraduate and master's students, and so the main age group is between 18 and 25 years old. This population was selected because they can handle electronic devices with fewer distractions than younger students. In total, the group included 25 people. This age group can provide the most reliable results to test the proposal.

The results of the attention level shown to the teacher have 2 parts. Part A of the result shows individual information about the c...

Discussion

This work presents a system that measures the attention level of a student in a classroom using cameras, smartwatches, and artificial intelligence algorithms. This information is subsequently presented to the teacher for them to have an idea of the general state of the class.

One of the main critical steps of the protocol is the synchronization of the smartwatch information with the color camera image, as these have different frequencies. This was solved by deploying raspberries as servers tha...

Disclosures

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This work was developed with funding from Programa Prometeo, project ID CIPROM/2021/017. Prof. Rosabel Roig holds the UNESCO Chair "Education, Research and Digital Inclusion".

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| 4 GPUs Nvidia A40 Ampere | NVIDIA | TCSA40M-PB | GPU for centralized model processing server |
| FusionServer 2288H V5 | X-Fusion | 02311XBK | Platform that includes power supply and motherboard for centralized model processing server |
| Memory Card Evo Plus 128 GB | Samsung | MB-MC128KA/EU | Memory card for the operation of the Raspberry Pi 4B 2GB. One for each Raspberry Pi. |
| NEMIX RAM - 512 GB Kit DDR4-3200 PC4-25600 8Rx4 EC | NEMIX | M393AAG40M32-CAE | RAM for centralized model processing server |
| Processor Intel Xeon Gold 6330 | Intel | CD8068904572101 | Processor for centralized model processing server |
| Raspberry PI 4B 2GB | Raspberry | 1822095 | Local server that receives requests from the smartwatches and forwards them to the general server. One for every two students. |
| Samsung Galaxy Watch 5 (40mm) | Samsung | SM-R900NZAAPHE | Smartwatch that monitors each student's activity. One for each student. |
| Samsung MZQL23T8HCLS-00B7C PM9A3 3.84 TB NVMe U.2 PCI-Express-4 x4 2.5inch SSD | Samsung | MZQL23T8HCLS-00B7C | Internal storage for centralized model processing server |
| WebCam HD Pro C920 Webcam FullHD | Logitech | 960-001055 | HD webcam. One for each student plus two for student poses. |

References

- Hasnine, M. N., et al. Students' emotion extraction and visualization for engagement detection in online learning. Procedia Comp Sci. 192, 3423-3431 (2021).

- Khare, S. K., Blanes-Vidal, V., Nadimi, E. S., Acharya, U. R. Emotion recognition and artificial intelligence: A systematic review (2014-2023) and research recommendations. Info Fusion. 102, 102019 (2024).

- Bosch, N. Detecting student engagement: Human versus machine. UMAP '16: Proc the 2016 Conf User Model Adapt Personal. , 317-320 (2016).

- Araya, R., Sossa-Rivera, J. Automatic detection of gaze and body orientation in elementary school classrooms. Front Robot AI. 8, 729832 (2021).

- Lu, Y., Zhang, J., Li, B., Chen, P., Zhuang, Z. Harnessing commodity wearable devices for capturing learner engagement. IEEE Access. 7, 15749-15757 (2019).

- Vanneste, P., et al. Computer vision and human behaviour, emotion and cognition detection: A use case on student engagement. Mathematics. 9 (3), 287 (2021).

- Ma, X., Xu, M., Dong, Y., Sun, Z. Automatic student engagement in online learning environment based on neural Turing machine. Int J Info Edu Tech. 11 (3), 107-111 (2021).

- Wood, E., Bulling, A. EyeTab: model-based gaze estimation on unmodified tablet computers. ETRA '14: Proc Symp Eye Tracking Res Appl. , 207-210 (2014).

- Sanghvi, J., et al. Automatic analysis of affective postures and body motion to detect engagement with a game companion. HRI '11: Proc 6th Int Conf Human-robot Interact. , 205-211 (2011).

- Gupta, S., Kumar, P., Tekchandani, R. K. Facial emotion recognition based real-time learner engagement detection system in online learning context using deep learning models. Multimed Tools Appl. 82 (8), 11365-11394 (2023).

- Altuwairqi, K., Jarraya, S. K., Allinjawi, A., Hammami, M. Student behavior analysis to measure engagement levels in online learning environments. Signal Image Video Process. 15 (7), 1387-1395 (2021).

- Belle, A., Hargraves, R. H., Najarian, K. An automated optimal engagement and attention detection system using electrocardiogram. Comput Math Methods Med. 2012, 528781 (2012).

- Alban, A. Q., et al. Heart rate as a predictor of challenging behaviours among children with autism from wearable sensors in social robot interactions. Robotics. 12 (2), 55 (2023).

- Kajiwara, Y., Shimauchi, T., Kimura, H. Predicting emotion and engagement of workers in order picking based on behavior and pulse waves acquired by wearable devices. Sensors. 19 (1), 165 (2019).

- Costante, G., Porzi, L., Lanz, O., Valigi, P., Ricci, E. Personalizing a smartwatch-based gesture interface with transfer learning. , 2530-2534 (2014).

- Mekruksavanich, S., Jitpattanakul, A. Deep convolutional neural network with RNNs for complex activity recognition using wrist-worn wearable sensor data. Electronics. 10 (14), 1685 (2021).

- Bazarevsky, V., Kartynnik, Y., Vakunov, A., Raveendran, K., Grundmann, M. BlazeFace: Sub-millisecond Neural Face Detection on Mobile GPUs. arXiv. , (2019).

- Bazarevsky, V., et al. BlazePose: On-device Real-time Body Pose tracking. arXiv. , (2020).

- Mejia-Escobar, C., Cazorla, M., Martinez-Martin, E. Towards a better performance in facial expression recognition: a data-centric approach. Comput Intelligence Neurosci. , (2023).

- El-Garem, A., Adel, R. Applying systematic literature review and Delphi methods to explore digital transformation key success factors. Int J Eco Mgmt Engi. 16 (7), 383-389 (2022).

- Indumathi, V., Kist, A. A. Using electroencephalography to determine student attention in the classroom. , 1-3 (2023).

- Ma, X., Xie, Y., Wang, H. Research on the construction and application of teacher-student interaction evaluation system for smart classroom in the post COVID-19. Studies Edu Eval. 78, 101286 (2023).

- Andersen, D. Constructing Delphi statements for technology foresight. Futures Foresight Sci. 5 (2), e144 (2022).

- Khodyakov, D., et al. Disciplinary trends in the use of the Delphi method: A bibliometric analysis. PLoS One. 18 (8), e0289009 (2023).

- Martins, A. I., et al. Consensus on the Terms and Procedures for Planning and Reporting a Usability Evaluation of Health-Related Digital Solutions: Delphi Study and a Resulting Checklist. J Medical Internet Res. 25, e44326 (2023).

- Dalmaso, M., Castelli, L., Galfano, G. Social modulators of gaze-mediated orienting of attention: A review. Psychon Bull Rev. 27 (5), 833-855 (2020).

- Klein, R. M. Thinking about attention: Successive approximations to a productive taxonomy. Cognition. 225, 105137 (2022).

- Schindler, S., Bublatzky, F. Attention and emotion: An integrative review of emotional face processing as a function of attention. Cortex. 130, 362-386 (2020).

- Zaletelj, J., Košir, A. Predicting students' attention in the classroom from Kinect facial and body features. J Image Video Proc. 80, (2017).

- Strauch, C., Wang, C. A., Einhäuser, W., Van der Stigchel, S., Naber, M. Pupillometry as an integrated readout of distinct attentional networks. Trends Neurosci. 45 (8), 635-647 (2022).

Copyright © 2025 MyJoVE Corporation. All rights reserved.