
Method Article

Direct Linear Transformation for the Measurement of In-Situ Peripheral Nerve Strain During Stretching


Summary

This protocol implements a stereo-imaging camera system calibrated using direct linear transformation to capture three-dimensional in-situ displacements of stretched peripheral nerves. From these displacements, the strain induced at varying degrees of stretch can be determined, informing stretch injury thresholds that can advance the science of stretch-dependent nerve repair.

Abstract

Peripheral nerves undergo physiological and non-physiological stretch during development, normal joint movement, injury, and more recently while undergoing surgical repair. Understanding the biomechanical response of peripheral nerves to stretch is critical to the understanding of their response to different loading conditions and thus, to optimizing treatment strategies and surgical interventions. This protocol describes in detail the calibration process of the stereo-imaging camera system via direct linear transformation and the tracking of the three-dimensional in-situ tissue displacement of peripheral nerves during stretch, obtained from three-dimensional coordinates of the video files captured by the calibrated stereo-imaging camera system.

From the obtained three-dimensional coordinates, the nerve length, change in the nerve length, and percent strain with respect to time can be calculated for a stretched peripheral nerve. Using a stereo-imaging camera system provides a non-invasive method for capturing three-dimensional displacements of peripheral nerves when stretched. Direct linear transformation enables three-dimensional reconstructions of peripheral nerve length during stretch to measure strain. Currently, no methodology exists to study the in-situ strain of stretched peripheral nerves using a stereo-imaging camera system calibrated via direct linear transformation. Capturing the in-situ strain of peripheral nerves when stretched can not only aid clinicians in understanding underlying injury mechanisms of nerve damage when overstretched but also help optimize treatment strategies that rely on stretch-induced interventions. The methodology described in the paper has the potential to enhance our understanding of peripheral nerve biomechanics in response to stretch to improve patient outcomes in the field of nerve injury management and rehabilitation.

Introduction

Peripheral nerves (PNs) undergo stretch during development, growth, normal joint movement, injury, and surgery1. PNs display viscoelastic properties that protect the nerve during regular movements2,3 and maintain the structural health of their nerve fibers2. Because PN response to mechanical stretch has been shown to depend on the type of nerve fiber damage4, injuries to adjacent connective tissues2,4, and testing approaches (i.e., loading rate or direction)5,6,7,8,9,10,11,12,13,14, it is essential to distinguish the biomechanical responses of PNs during normal range of motion versus non-physiological range at both slow- and rapid-stretch rates. This can further the understanding of the PN injury mechanism in response to stretch and aid in timely and optimized intervention1,4,15,16. There has been a growing trend in physical therapy to evaluate and intervene based on the relationship between nerve physiology and biomechanics17. By understanding the differences in PN biomechanics at various applied loads, physical therapists can be better prepared to modify current interventions17.

Available biomechanical data on PN response to stretch remain variable, and this variability can be attributed to differences in testing equipment, procedures, and elongation data analysis5,6,7,8,9,10,11,12,13,14,16. Furthermore, the measurement of three-dimensional (3D) in-situ nerve displacement remains poorly described in the currently available literature. Previous studies have used stereo-imaging techniques to maximize the accuracy of 3D reconstruction of tissue displacement of facet joint capsules18,19. The direct linear transformation (DLT) technique enables the conversion of two or more two-dimensional (2D) views to 3D real-world coordinates (i.e., in mm)20,21,22. DLT provides a high-accuracy calibration method for stereo-imaging camera systems because it enables precise reconstruction of 3D positions, accounting for lens distortion, camera parameters, and image coordinates, and permits flexibility in stereo-imaging camera setup20,21,22. DLT-calibrated stereo-imaging camera systems are typically used to study locomotion and gait22,23. This protocol aims to offer a detailed methodology to determine the in-situ strain of PNs at varying degrees of stretch using a DLT-calibrated stereo-imaging camera system and an open-source tracking software22.

Protocol

All procedures described were approved by the Drexel University Institutional Animal Care and Use Committee (IACUC). The neonatal piglet was acquired from a United States Department of Agriculture (USDA)-approved farm located in Pennsylvania, USA.

1. Stereo-imaging system setup

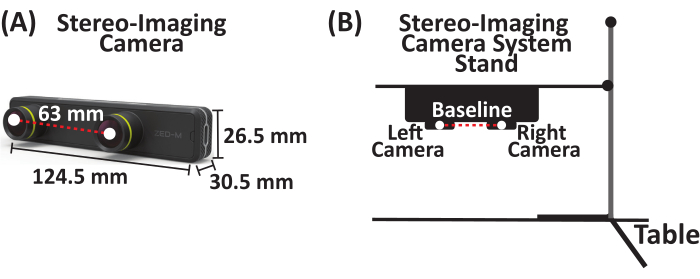

- Attach a stereo-imaging camera system that captures up to 100 frames/s (FPS) to a utility stand. The stereo-imaging camera system used in this study is a passive stereo camera with two horizontally aligned cameras (referred to as the left and right cameras) separated by a baseline of 63 mm (Figure 1).

Figure 1: Stereo-imaging camera system. (A) Parallel stereo-imaging camera system with two cameras (left and right cameras) separated by a baseline of 63 mm. (B) Schematic of stereo-imaging camera system and stand setup.

2. Stereo-imaging system DLT calibration: digitizing the 3D control volume

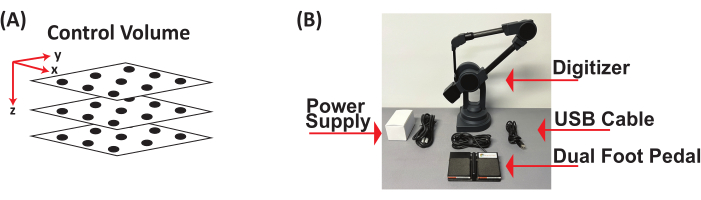

- Obtain three clear acrylic plexiglass square sheets (12 in x 12 in x 0.125 in). On each sheet, place a grid and draw at least 10 points, resulting in a minimum of 30 points distributed across the 3D control volume in the x, y, and z coordinate planes. Construct the 3D control volume by stacking the three sheets at varying heights to capture the maximum height of what will be recorded (Figure 2A).

- Digitize all the points on the 3D control volume using a digitizer with a foot pedal. Acquire x, y, and z coordinates (in mm) (Figure 2B) by establishing the origin (0, 0, 0) on the 3D control cube, defining the positive x- and y-directions, opening a document to save the digitized (x, y, z) coordinates (in mm) of each point, and saving (x, y, z) coordinates (in mm) as a *.csv file.

NOTE: The (x, y, z) coordinates are relative to the set origin on the 3D control cube.

- Use these digitized (x, y, z) coordinates (in mm) to calibrate the stereo-imaging camera system's left and right cameras, respectively.

Figure 2: Three-dimensional control volume and digitizer with foot pedal. (A) Schematic of 3D control volume. (B) Components of digitizer with foot pedal used to digitize 3D control volume to obtain (x, y, z) coordinates in mm. Abbreviation: 3D = three-dimensional.

3. Stereo-imaging camera system calibration: generation of direct linear transformation coefficients

- Attach the stereo-imaging camera system to a utility stand (Figure 3A).

- Position the stereo-imaging camera system 6 cm above the 3D control volume (Figure 3A).

- Connect the stereo-imaging camera system to a laptop via a USB type-C cable.

- Open the imaging software (see the Table of Materials).

- Image the 3D control volume. The output image (Supplemental Figure S1) includes both the left and right camera views (Figure 3B).

- Run the custom MATLAB code (Supplemental File 1) to separate the output image into two images, the left and right images (Figure 3C, Supplemental Figure S2, and Supplemental Figure S3, respectively).

- Click Run to initialize the DLTcal5.m GUI22 (Supplemental File 2).

- Click initialize on the DLTcal5 controls window to select the *.csv file with the digitized (x, y, z) coordinates (in mm) (Figure 4A and Supplemental File 3).

- Select the corresponding image of the 3D control volume from the first view of the stereo-imaging camera system (Supplemental Figure S2). For this stereo-imaging camera system, the left camera view corresponds to the first camera view (Figure 4B).

- The first camera view image (i.e., left camera view) pops up.

- Select the points in the order the points were digitized in Section 2 to obtain the 2D pixel coordinates from the left camera view (Figure 4B).

- Set current point on the DLTcal5 Controls window and click on the corresponding current point on the loaded first camera view (i.e., left camera view) image.

- After selecting all the points on the loaded first camera view, click compute coefficients to generate the 11 DLT coefficients for the left camera view (Figure 4B).

- Click add a camera on the DLTcal5 controls window and repeat steps 3.7.2-3.7.6 to generate the 11 DLT coefficients for the right camera view (i.e., second camera view) (Figure 4B, C and Supplemental Figure S3).

- Click save data on the DLTcal5 controls window to select the folder where the output files will be saved (Figure 4D).

- The output files include the 2D (x, y) pixel coordinates (Supplemental File 4) and corresponding 11 DLT coefficients for the left and right camera views of the stereo-imaging camera system (Figure 4D and Supplemental File 5).

- The stereo-imaging camera system is calibrated.

Figure 3: Schematic for acquiring an image of three-dimensional control volume using a stereo-imaging camera system for direct linear transformation calibration. (A) Attach the stereo-imaging camera system to a stand and then connect it to a laptop via a USB type-C cable. Place the 3D control volume 6 cm under the stereo-imaging camera system. (B) Using the imaging software, take an image of the 3D control volume. The output image is a combined image from the left and right cameras. (C) Using a custom MATLAB code, the combined output image is separated into individual left and right images of the 3D control volume. Abbreviation: 3D = three-dimensional.
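The image-splitting step in Figure 3C can be illustrated with a minimal Python sketch. It is not the authors' MATLAB code (Supplemental File 1), which is not reproduced here. The sketch assumes the combined output is a side-by-side frame in which the left camera occupies the left half and the right camera the right half; the function name `split_side_by_side` is hypothetical.

```python
import numpy as np


def split_side_by_side(frame):
    """Split a combined stereo frame (H x 2W, optionally with channels)
    into its left and right halves.

    Assumption: the left camera view fills the left half of the frame.
    """
    width = frame.shape[1]
    if width % 2 != 0:
        raise ValueError("combined frame width must be even")
    half = width // 2
    left = frame[:, :half]
    right = frame[:, half:]
    return left, right


# Synthetic 4 x 8 RGB frame: black left half, white right half.
combined = np.zeros((4, 8, 3), dtype=np.uint8)
combined[:, 4:] = 255
left_view, right_view = split_side_by_side(combined)
```

The same slicing would apply frame by frame to a recorded video, provided every frame uses the same side-by-side layout.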

Figure 4: Schematic for generating direct linear transformation coefficients for left and right camera views of a stereo-camera imaging system. (A) Run DLTcal5.m22, click initialize on the controls window, and select the *.csv file with the digitized (x, y, z) coordinates (in mm) of the 3D control volume. (B) Select the calibration image of the left camera view. Then, select the points on the image in the same order that they were digitized. Then, click compute coefficients to generate the DLT coefficients for the left camera view. Next, click Add camera to repeat the steps for the right camera view. (C) Select the calibration image of the right camera view. Then, select the points on the image in the same order that they were digitized. Then, click compute coefficients to generate the DLT coefficients for the right camera view. (D) Click Save Data to select the directory to save the DLT coefficients for the left and right camera views. Enter the name for the output file and click OK and the DLT coefficients are saved as a *.csv file. Abbreviation: 3D = three-dimensional and DLT = direct linear transformation.
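To make the "compute coefficients" step concrete, the following Python sketch shows one common way to estimate the 11 DLT coefficients of a single camera: a linear least-squares fit of the standard 11-parameter DLT model to paired 3D control points (mm) and 2D pixel coordinates. It illustrates the general technique and is not a reproduction of DLTcal5.m. The function names and the synthetic camera are hypothetical, and the basic 11-parameter model shown here has no lens-distortion terms.

```python
import numpy as np


def dlt_calibrate(xyz, uv):
    """Least-squares estimate of 11 DLT coefficients for one camera.

    xyz: (n, 3) digitized control points in mm; uv: (n, 2) pixel coordinates
    picked in the same order. At least 6 non-coplanar points are needed.
    """
    xyz = np.asarray(xyz, dtype=float)
    uv = np.asarray(uv, dtype=float)
    n = xyz.shape[0]
    if n < 6:
        raise ValueError("at least 6 control points are required")
    a = np.zeros((2 * n, 11))
    b = np.zeros(2 * n)
    # Rows for u: L1*X + L2*Y + L3*Z + L4 - u*(L9*X + L10*Y + L11*Z) = u
    a[0::2, 0:3] = xyz
    a[0::2, 3] = 1.0
    a[0::2, 8:11] = -uv[:, [0]] * xyz
    b[0::2] = uv[:, 0]
    # Rows for v: L5*X + L6*Y + L7*Z + L8 - v*(L9*X + L10*Y + L11*Z) = v
    a[1::2, 4:7] = xyz
    a[1::2, 7] = 1.0
    a[1::2, 8:11] = -uv[:, [1]] * xyz
    b[1::2] = uv[:, 1]
    coefs, *_ = np.linalg.lstsq(a, b, rcond=None)
    return coefs


def dlt_project(coefs, xyz):
    """Project (n, 3) points in mm to (n, 2) pixel coordinates."""
    xyz = np.asarray(xyz, dtype=float)
    denom = xyz @ coefs[8:11] + 1.0
    u = (xyz @ coefs[0:3] + coefs[3]) / denom
    v = (xyz @ coefs[4:7] + coefs[7]) / denom
    return np.column_stack([u, v])


# Synthetic check: 30 control points on three sheets at different heights.
rng = np.random.default_rng(0)
control_xyz = np.column_stack([
    rng.uniform(0.0, 300.0, size=(30, 2)),   # x, y in mm
    np.repeat([0.0, 20.0, 40.0], 10),        # z: one height per sheet
])
true_coefs = np.array([5.0, 0.1, 0.2, 640.0, 0.1, 5.0, 0.3, 360.0,
                       1e-4, 2e-4, -1e-3])   # hypothetical camera
control_uv = dlt_project(true_coefs, control_xyz)
fitted_coefs = dlt_calibrate(control_xyz, control_uv)
residual = dlt_project(fitted_coefs, control_xyz) - control_uv
rms_pixels = float(np.sqrt(np.mean(residual ** 2)))
```

With real data, the reprojection residual (`rms_pixels` here) is a convenient check on calibration quality; a large value usually points to points clicked out of order or a mismatch with the digitized coordinates.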

4. Data acquisition

- Place an anesthetized neonatal Yorkshire piglet (3-5 days old) in a supine position with upper limbs in abduction to expose the axillary region. Make a midline incision through the skin and fascia overlying the trachea down to the upper third of the sternum.

- Use blunt dissection to expose the brachial plexus nerves.

- Squirt saline solution on the exposed brachial plexus nerves to keep them hydrated before, during, and after testing.

- Cut the distal end of a brachial plexus PN and clamp it to the mechanical testing apparatus.

- Attach the stereo-imaging camera system to the utility stand, place it up to 6 cm above the PN to be stretched, and then connect the stereo-imaging camera system to a laptop via a USB type-C cable (Figure 5A).

- Use an ink-based skin marker to place markers on the insertion and clamp sites and an additional two to four markers along the length of the PN depending on the nerve length, for displacement tracking (Figure 5B).

- Place a calibration grid (i.e., laminated grid with 0.5 cm x 0.5 cm squares) and a 1 cm ruler, flat underneath the PN for data analysis (Figure 5B).

- Record the initial length of the PN after clamping and just before stretching.

- Stretch the PN at a displacement rate of 500 mm/min to failure or a predetermined stretch.

Figure 5: Representative schematic for data acquisition of peripheral nerve stretching. (A) Attach the stereo-imaging camera system to a stand and then connect it to a laptop via a USB type-C cable. Place the stereo-imaging camera system up to 6 cm above the peripheral nerve. (B) The peripheral nerve is clamped to the mechanical setup at the distal end. Using an ink-based skin marker, place a marker on the insertion and clamp sites and an additional two to four markers along the nerve length. Saline is squirted on the peripheral nerve to keep it hydrated before, during, and after testing.
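As a rough planning aid, the displacement rate and frame rate given above determine how far the clamp moves between consecutive video frames. The short Python sketch below works through that arithmetic. It assumes the video is recorded at the camera's maximum of 100 frames/s; the function names are hypothetical.

```python
def displacement_per_frame_mm(rate_mm_per_min, fps):
    """Clamp travel between two consecutive frames, in mm."""
    return rate_mm_per_min / 60.0 / fps


def frames_to_reach(displacement_mm, rate_mm_per_min, fps):
    """Number of frames recorded while the clamp travels displacement_mm."""
    return displacement_mm / displacement_per_frame_mm(rate_mm_per_min, fps)


# 500 mm/min at 100 frames/s is about 0.083 mm of clamp travel per frame.
step_mm = displacement_per_frame_mm(500.0, 100.0)
# Frames needed for a hypothetical 5 mm predetermined stretch.
frames_for_5_mm = frames_to_reach(5.0, 500.0, 100.0)
```

A lower recording frame rate would increase the travel per frame proportionally, which coarsens the time resolution of the strain curve.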

5. Data analysis: marker trajectory tracking

- Run the custom MATLAB code (Supplemental File 6) to separate the output video file (Supplemental File 7) into two video files, left and right camera video files (Supplemental File 8 and Supplemental File 9, respectively).

- Click Run to initialize the DLTdv7.m22 GUI (Supplemental File 10).

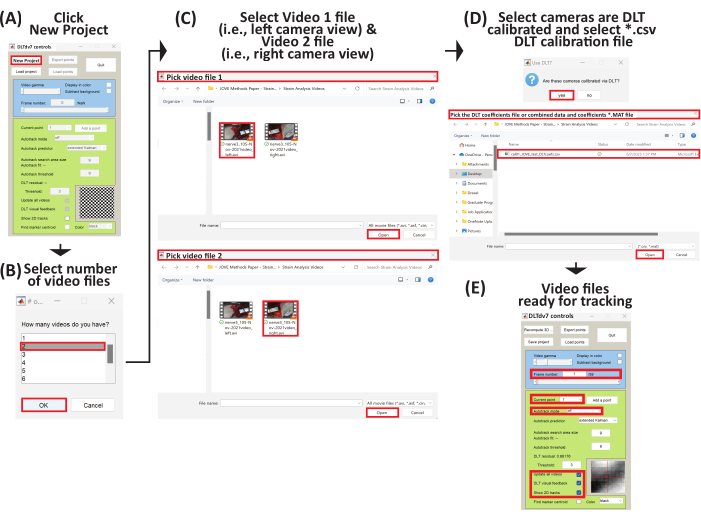

- The DLTdv7 controls window pops up and the new project, load project, and quit buttons are enabled (Figure 6A).

- Click new project on the DLTdv7 controls window to begin a new project.

- When a dialog box pops up, select 2 to indicate two video files (i.e., left and right camera views) to track the displacement marker trajectories on a stretched peripheral nerve (Figure 6B).

- Select the first video file (i.e., Video 1), which is the video file from the left camera view (Supplemental File 8), and click open (Figure 6C). Then, select the second video file (i.e., Video 2), which is the video file from the right camera view (Supplemental File 9), and click open (Figure 6C).

- After the two video files are selected, click yes to signify the video files were acquired from camera views calibrated via DLT.

- Select the corresponding DLT coefficients *.csv file (Supplemental File 5) for the stereo-imaging camera system and click on open (Figure 6D).

- The initial video frames are displayed from both video files and the rest of the DLTdv7 controls window is activated. The new project button is replaced with the recompute 3D points button and the load project button is replaced with the save button (Figure 6E).

- On the DLTdv7 controls window, ensure the frame number is on 1, the current point is set to 1, autotrack mode is off, and update all videos, DLT visual feedback, and show 2D tracks are checked (Figure 6E).

- Ensure that the tracking points are placed on the displacement markers of the PN such that the insertion marker corresponds to point 1, marker 1 corresponds to point 2, and so on with the clamp marker being the final point.

- Place point 1 on the insertion marker on Video 1 (i.e., left camera view video file) ensuring the placed point is at the center of the insertion marker. Use keyboard shortcuts (Table 1) to move the placed point to the center of the insertion marker (Figure 7A).

- Because DLT visual feedback is checked, when a point is placed in Video 1, a blue epipolar line appears in Video 2 (i.e., right camera view video file) (Figure 7). Place point 1 on the insertion marker in Video 2 using the blue epipolar line as reference. Use the keyboard shortcuts (Table 1), as needed, to move the placed point to the center of the insertion marker (Figure 7A).

- Click add a point on the DLTdv7 controls window to add points on the other tissue markers to track their trajectories. Refer to the current point on the DLTdv7 controls window to know which point is active.

- Click add a point. Place point 2 on marker 1 in Video 1. Use the blue epipolar line and keyboard shortcuts to place point 2 on marker 1 in Video 2. Continue adding and placing points, first on Video 1 and then on Video 2, for all displacement markers along the length of the nerve between the insertion and clamp (i.e., final point) (Figure 7B-F).

- Once all initial points are placed in Video 1 and Video 2 (i.e., the left and right camera video files, respectively), ensure the frame number is on 1 and the current point is set to 1 on the DLTdv7 controls window.

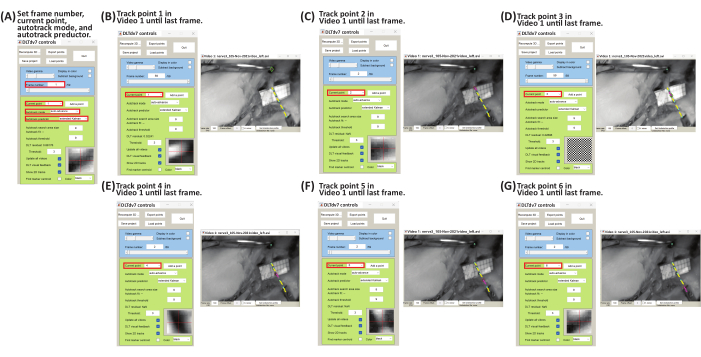

- On the DLTdv7 controls window, change autotrack mode to auto-advance from the dropdown menu and autotrack predictor to extended Kalman from the dropdown menu (Figure 8A).

- Complete the tracking of all the placed points in Video 1 first and then complete tracking in Video 2. Track the marker trajectory by left-clicking on the center of the marker frame by frame until failure (i.e., the frame before gross rupture of the peripheral nerve) or, for a predetermined stretch, until the end of the video.

- Begin tracking point 1 in Video 1. Zoom in and out (Table 1) as desired to ensure tracking is at the center of the marker; click frame by frame until the failure or end of the video (Figure 8B). After completing the tracking of point 1 in Video 1, return to frame 1 and change the current point to point 2 from the dropdown menu on the DLTdv7 controls window. The previous tracked point will turn light blue and its trajectory will turn yellow. The current point will have a green diamond and a pink center.

- Complete the tracking of all the points in Video 1 by left-clicking frame by frame for each point until failure or end of the video (Figure 8C-G).

- In Video 2, use the blue epipolar line to track points in reference to Video 1 (Figure 9). On the DLTdv7 controls window, return frame number to 1 and set current point to 1, and begin tracking point 1's trajectory in Video 2.

- Follow the same steps (5.2.16-5.2.18) to track the remaining points in Video 2.

- After tracking is complete in Video 1 and Video 2, click export points on the DLTdv7 controls window to export the (x, y, z) coordinates (in mm) of the tracked points.

- A dialog box pops up to select the directory in which to save the output files. Click on the directory location.

- Another dialog box pops up to set the name of the output files. Set the output files' name (e.g., nerve1_101-Jan-2001videoanalyzed_cal09.30_trial1_).

- Another dialog box pops up. Select the save format as flat.

- Another dialog box pops up asking whether to calculate the 95% confidence interval. Select no.

- A final dialog box pops up that shows the data is exported and saved, and the four output files are exported to the selected directory location (Supplemental File 11, Supplemental File 12, Supplemental File 13, and Supplemental File 14).

- Click save project on the DLTdv7 controls window to save the current project (Supplemental File 15) in the same directory as the output files.

Figure 6: Schematic to set up a new project to begin three-dimensional trajectory tracking. (A) Run DLTdv7.m22 and click New Project to begin a new project. (B) Select 2 as the number of video files. (C) Select Video 1 file (i.e., left camera view) and then select Video 2 file (i.e., right camera view). (D) Select yes as the video files come from a DLT calibrated stereo-imaging camera system. Then, select the *.csv file containing the DLT coefficients. (E) The selected video files are now ready for tracking.

| Key/Click | Description |
| --- | --- |
| Left click | Tracks the trajectory of a point in the frame clicked |
| (+) key | Zooms the current video frame in around the mouse pointer |
| (-) key | Zooms the current video frame out around the mouse pointer |
| (i) key | Moves the point up |
| (j) key | Moves the point left |
| (k) key | Moves the point right |
| (m) key | Moves the point down |

Table 1: Keyboard and mouse shortcuts for tracking point trajectory.

Figure 7: Schematic to place initial points on tissue markers for Video 1 and Video 2 using DLTdv7.m22. (A) Set current point to 1. Place point 1 on the insertion marker in Video 1. Using the blue epipolar line in Video 2, place point 1 on the insertion marker. (B) Set current point to 2. Place point 2 on marker 1 in Video 1. Using the blue epipolar line in Video 2, place point 2 on marker 1. (C) Set current point to 3. Place point 3 on marker 2 in Video 1. Using the blue epipolar line in Video 2, place point 3 on marker 2. (D) Set current point to 4. Place point 4 on marker 3 in Video 1. Using the blue epipolar line in Video 2, place point 4 on marker 3. (E) Set current point to 5. Place point 5 on marker 4 in Video 1. Using the blue epipolar line in Video 2, place point 5 on marker 4. (F) Set current point to 6. Place point 6 on the clamp marker in Video 1. Using the blue epipolar line in Video 2, place point 6 on the clamp marker.
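The blue epipolar line used in Figure 7 has a simple geometric meaning: a point clicked in Video 1 fixes a 3D ray, and that ray appears as a line in Video 2 on which the same marker must lie. The Python sketch below illustrates one way to compute such a line from two sets of 11 DLT coefficients. It is a sketch of the geometry only and is not necessarily how DLTdv7 draws the line. The coefficient arrays `CAM1` and `CAM2`, the function names, and the depth range are hypothetical, and the approach assumes the ray is not parallel to the planes of constant z.

```python
import numpy as np


def dlt_project(coefs, xyz):
    """Project one 3D point (mm) to pixel coordinates with 11 DLT coefficients."""
    x, y, z = xyz
    denom = coefs[8] * x + coefs[9] * y + coefs[10] * z + 1.0
    u = (coefs[0] * x + coefs[1] * y + coefs[2] * z + coefs[3]) / denom
    v = (coefs[4] * x + coefs[5] * y + coefs[6] * z + coefs[7]) / denom
    return np.array([u, v])


def ray_point_at_z(coefs, uv, z):
    """3D point on the viewing ray of pixel uv at a chosen height z (mm)."""
    u, v = uv
    m = np.array([
        [coefs[0] - u * coefs[8], coefs[1] - u * coefs[9]],
        [coefs[4] - v * coefs[8], coefs[5] - v * coefs[9]],
    ])
    rhs = np.array([
        u - coefs[3] - (coefs[2] - u * coefs[10]) * z,
        v - coefs[7] - (coefs[6] - v * coefs[10]) * z,
    ])
    x, y = np.linalg.solve(m, rhs)
    return np.array([x, y, z])


def epipolar_segment(coefs_view1, coefs_view2, uv_view1, z_near, z_far):
    """Two pixel points in view 2 that define the epipolar line of uv_view1."""
    start = dlt_project(coefs_view2, ray_point_at_z(coefs_view1, uv_view1, z_near))
    end = dlt_project(coefs_view2, ray_point_at_z(coefs_view1, uv_view1, z_far))
    return start, end


# Hypothetical coefficients for the left (CAM1) and right (CAM2) views.
CAM1 = np.array([5.0, 0.1, 0.2, 640.0, 0.1, 5.0, 0.3, 360.0, 1e-4, 2e-4, -1e-3])
CAM2 = np.array([5.0, 0.1, -1.5, 500.0, 0.1, 5.0, 0.3, 360.0, 1e-4, 2e-4, -1e-3])

marker_xyz = np.array([100.0, 150.0, 20.0])       # synthetic marker, mm
clicked_uv = dlt_project(CAM1, marker_xyz)        # "click" in Video 1
line_start, line_end = epipolar_segment(CAM1, CAM2, clicked_uv, 0.0, 50.0)
marker_in_view2 = dlt_project(CAM2, marker_xyz)   # should lie on the line
```

If a marker in Video 2 sits visibly off the line, the usual suspects are a misplaced point in Video 1 or a calibration that no longer matches the camera position.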

Figure 8: Schematic for tracking marker point trajectories of Video 1 using DLTdv7.m22. (A) Set frame number to 1, current point to 1, autotrack mode to auto-advance, and autotrack predictor to extended Kalman. (B) Set current point to 1. On Video 1 file, begin tracking the insertion marker (i.e., point 1) displacement by left-clicking frame-by-frame until the last frame. (C) Set frame number to 1 and current point to 2. On Video 1 file, begin tracking marker 1 (i.e., point 2) displacement by left-clicking frame-by-frame until the last frame. (D) Set frame number to 1 and current point to 3. On Video 1 file, begin tracking marker 2 (i.e., point 3) displacement by left-clicking frame-by-frame until the last frame. (E) Set frame number to 1 and current point to 4. On Video 1 file, begin tracking marker 3 (i.e., point 4) displacement by left-clicking frame-by-frame until the last frame. (F) Set frame number to 1 and current point to 5. On Video 1 file, begin tracking marker 4 (i.e., point 5) displacement by left-clicking frame-by-frame until the last frame. (G) Set frame number to 1 and current point to 6. On Video 1 file, begin tracking the clamp marker (i.e., point 6) displacement by left-clicking frame-by-frame until the last frame.

Figure 9: Schematic for tracking marker point trajectories of Video 2 using DLTdv7.m22. (A) Set frame number to 1, current point to 1, autotrack mode to auto-advance, and autotrack predictor to extended Kalman. (B) Set current point to 1. Using the blue epipolar line on Video 2 file, begin tracking the insertion marker (i.e., point 1) displacement by left-clicking frame-by-frame until the last frame. (C) Set frame number to 1 and current point to 2. Using the blue epipolar line on Video 2 file, begin tracking marker 1 (i.e., point 2) displacement by left-clicking frame-by-frame until the last frame. (D) Set frame number to 1 and current point to 3. Using the blue epipolar line on Video 2 file, begin tracking marker 2 (i.e., point 3) displacement by left-clicking frame-by-frame until the last frame. (E) Set frame number to 1 and current point to 4. Using the blue epipolar line on Video 2 file, begin tracking marker 3 (i.e., point 4) displacement by left-clicking frame-by-frame until the last frame. (F) Set frame number to 1 and current point to 5. Using the blue epipolar line on Video 2 file, begin tracking marker 4 (i.e., point 5) displacement by left-clicking frame-by-frame until the last frame. (G) Set frame number to 1 and current point to 6. Using the blue epipolar line on Video 2 file, begin tracking the clamp marker (i.e., point 6) displacement by left-clicking frame-by-frame until the last frame.
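Once a marker has been tracked in both videos, its (x, y, z) position in mm follows from the two sets of DLT coefficients. The Python sketch below shows the standard least-squares reconstruction for one marker in one frame: each camera contributes two linear equations in the unknown (x, y, z). It illustrates the technique rather than the internals of DLTdv7, and the coefficient arrays and function names are hypothetical.

```python
import numpy as np


def dlt_project(coefs, xyz):
    """Project one 3D point (mm) to pixel coordinates with 11 DLT coefficients."""
    x, y, z = xyz
    denom = coefs[8] * x + coefs[9] * y + coefs[10] * z + 1.0
    u = (coefs[0] * x + coefs[1] * y + coefs[2] * z + coefs[3]) / denom
    v = (coefs[4] * x + coefs[5] * y + coefs[6] * z + coefs[7]) / denom
    return np.array([u, v])


def dlt_reconstruct(coefs_per_view, uv_per_view):
    """Least-squares 3D position (mm) of one marker seen in two or more views."""
    rows = []
    rhs = []
    for c, (u, v) in zip(coefs_per_view, uv_per_view):
        # Rearranged DLT equations, linear in the unknown x, y, z.
        rows.append([c[0] - u * c[8], c[1] - u * c[9], c[2] - u * c[10]])
        rhs.append(u - c[3])
        rows.append([c[4] - v * c[8], c[5] - v * c[9], c[6] - v * c[10]])
        rhs.append(v - c[7])
    xyz, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return xyz


# Hypothetical coefficients for the left and right camera views.
CAM_LEFT = np.array([5.0, 0.1, 0.2, 640.0, 0.1, 5.0, 0.3, 360.0,
                     1e-4, 2e-4, -1e-3])
CAM_RIGHT = np.array([5.0, 0.1, -1.5, 500.0, 0.1, 5.0, 0.3, 360.0,
                      1e-4, 2e-4, -1e-3])

true_xyz = np.array([100.0, 150.0, 20.0])          # synthetic marker, mm
uv_left = dlt_project(CAM_LEFT, true_xyz)          # tracked point, Video 1
uv_right = dlt_project(CAM_RIGHT, true_xyz)        # tracked point, Video 2
recovered_xyz = dlt_reconstruct([CAM_LEFT, CAM_RIGHT], [uv_left, uv_right])
```

With two views there are four equations for three unknowns, so the fit is slightly overdetermined and tracking noise shows up as a nonzero residual.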

6. Data analysis: strain analysis

- Run a custom MATLAB code (Supplemental File 16) to import the tracked 3D (x, y, z) marker trajectories (in mm).

- On the MATLAB command window, type:

[percentStrain, deltaLi, lengthNi, filename] = PercentStrain_3D

- Enter the rupture time (for example, if the video files have 59 frames, the time is 0.59 s), enter the number of tracked points, and select the *_xyzpts.csv file with the tracked 3D (x, y, z) trajectories (in mm).

- Select the directory to save the output length vs time (Supplemental Figure S4), change in length vs time (Supplemental Figure S5), and strain vs time (Supplemental Figure S6) plots and *.xls file with time, length, change in length, and strain (Supplemental File 17).

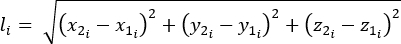

- Calculate the length (l), change in length (Δl), and percent strain using equations 1-3:

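$$l_i = \sqrt{(x_{2i} - x_{1i})^2 + (y_{2i} - y_{1i})^2 + (z_{2i} - z_{1i})^2} \tag{1}$$

Where $l_i$ is the distance between any two markers at any time point; $x_{1i}$, $y_{1i}$, $z_{1i}$ are the 3D coordinates of one of the two markers; and $x_{2i}$, $y_{2i}$, $z_{2i}$ are the 3D coordinates of the second marker.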

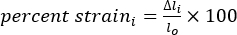

$$\Delta l_i = l_i - l_o \tag{2}$$

Where $l_i$ is the distance between any two markers at any time point, and $l_o$ is the distance between any two markers at the original/zero-time point.

$$\text{Percent strain} = \frac{\Delta l_i}{l_o} \times 100 \tag{3}$$

Where $\Delta l_i$ is the change in length between two markers at any time point, and $l_o$ is the distance between any two markers at the original/zero-time point.
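Equations 1-3 translate directly into a few lines of array code. The Python sketch below is an illustration of the calculation for one pair of markers and is not the authors' PercentStrain_3D MATLAB function (Supplemental File 16). It assumes the trajectories are available as arrays with one row per frame and columns x, y, z in mm, that the first frame is the zero-time point, and that the video was recorded at 100 frames/s. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def marker_distance(marker_a, marker_b):
    """Equation 1: distance between two markers in every frame.

    marker_a, marker_b: (n_frames, 3) arrays of x, y, z in mm.
    """
    diff = np.asarray(marker_b, float) - np.asarray(marker_a, float)
    return np.linalg.norm(diff, axis=1)


def length_change_and_strain(lengths):
    """Equations 2 and 3: change in length (mm) and percent strain,
    both relative to the length in the first frame."""
    lengths = np.asarray(lengths, float)
    l_o = lengths[0]
    delta_l = lengths - l_o
    percent_strain = 100.0 * delta_l / l_o
    return delta_l, percent_strain


# Synthetic example: 59 frames, one marker fixed, the other moving away
# so that the distance grows by 1% of its initial value per frame.
n_frames = 59
fps = 100.0                                    # assumed recording rate
frame_index = np.arange(n_frames)
insertion = np.zeros((n_frames, 3))
clamp = np.outer(1.0 + 0.01 * frame_index, [3.0, 4.0, 12.0])

time_s = frame_index / fps                     # assumes frame 1 is t = 0
length_mm = marker_distance(insertion, clamp)
delta_l_mm, strain_percent = length_change_and_strain(length_mm)
```

For a nerve with several markers, the same two functions can be applied to each adjacent pair, or to the insertion and clamp markers, depending on which length is of interest.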

Results

Using the described methodology, various output files are obtained. The DLTdv7.m *_xyzpts.csv (Supplemental File 12) contains the (x, y, z) coordinates in millimeters of each tracked point at each time frame that is further used to calculate the length, change in length, and strain of the stretched PN. Representative length-time, change in length-time, and strain-time plots of a stretched PN are shown in Figure 10. The stretched PN had an insertion marker, four markers along...

Discussion

Studies reporting biomechanical properties of peripheral nerves (PNs) because of stretch injury vary, and that variation can be attributed to testing methodologies such as testing equipment and elongation analysis5,6,7,8,9,10,11,12,

Disclosures

The authors have no conflicts of interest to disclose.

Acknowledgements

This research was supported by funding from the Eunice Kennedy Shriver National Institute of Child Health and Human Development of the National Institutes of Health under Award Numbers R15HD093024 and R01HD104910A and NSF CAREER Award Number 1752513.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Clear Acrylic Plexiglass Square Sheet | W W Grainger Inc | BULKPSACR9 | Construct three-dimensional control volume |
| Stereo-imaging camera system - ZED Mini Stereo Camera | StereoLabs Inc. | N/A | N/A |
| Imaging Software - ZED SDK | StereoLabs Inc. | N/A | N/A |
| Maintenance Software - CUDA 12 | StereoLabs Inc. | N/A | Download to run ZED SDK |
| Camera stand - Cast Iron Triangular Support Stand with Rod | Telrose VWR Choice | 76293-346 | N/A |
| MicroScribe G2 Digitizer with Immersion Foot Pedal | SUMMIT Technology Group | N/A | N/A |
| Programming Software - MATLAB | MathWorks | N/A | Version 2019a or newer |
| DLTcal5.m | Hedrick lab | N/A | Open source |
| DLTdv7.m | Hedrick lab | N/A | Open source |

References

- Bueno, F. R., Shah, S. B. Implications of tensile loading for the tissue engineering of nerves. Tissue Engineering Part B: Reviews. 14 (3), 219-233 (2008).

- Grewal, R., Xu, J., Sotereanos, D. G., Woo, S. L. Biomechanical properties of peripheral nerves. Hand Clinics. 12 (2), 195-204 (1996).

- Papagiannis, G., et al. Biomechanical behavior and viscoelastic properties of peripheral nerves subjected to tensile stress: common injuries and current repair techniques. Critical Reviews in Physical and Rehabilitation Medicine. 32 (3), 155-168 (2020).

- Castaldo, J., Ochoa, J. Mechanical injury of peripheral nerves. Fine structure and dysfunction. Clinics in Plastic Surgery. 11 (1), 9-16 (1984).

- Singh, A. Extent of impaired axoplasmic transport and neurofilament compaction in traumatically injured axon at various strains and strain rates. Brain Injury. 31 (10), 1387-1395 (2017).

- Singh, A., Kallakuri, S., Chen, C., Cavanaugh, J. M. Structural and functional changes in nerve roots due to tension at various strains and strain rates: an in-vivo study. Journal of Neurotrauma. 26 (4), 627-640 (2009).

- Singh, A., Lu, Y., Chen, C., Kallakuri, S., Cavanaugh, J. M. A new model of traumatic axonal injury to determine the effects of strain and displacement rates. Stapp Car Crash Journal. 50, 601 (2006).

- Singh, A., Lu, Y., Chen, C., Cavanaugh, J. M. Mechanical properties of spinal nerve roots subjected to tension at different strain rates. Journal of Biomechanics. 39 (9), 1669-1676 (2006).

- Singh, A., Shaji, S., Delivoria-Papadopoulos, M., Balasubramanian, S. Biomechanical responses of neonatal brachial plexus to mechanical stretch. Journal of Brachial Plexus and Peripheral Nerve Injury. 13 (01), e8-e14 (2018).

- Zapałowicz, K., Radek, A. Mechanical properties of the human brachial plexus. Neurologia I Neurochirurgia Polska. 34, 89-93 (2000).

- Zapałowicz, K., Radek, A. . Annales Academiae Medicae Stetinensis. 51 (2), 11-14 (2005).

- Zapałowicz, K., Radek, M. The distribution of brachial plexus lesions after experimental traction: a cadaveric study. Journal of Neurosurgery: Spine. 29 (6), 704-710 (2018).

- Kawai, H., et al. Stretching of the brachial plexus in rabbits. Acta Orthopaedica Scandinavica. 60 (6), 635-638 (1989).

- Marani, E., Van Leeuwen, J., Spoor, C. The tensile testing machine applied in the study of human nerve rupture: a preliminary study. Clinical Neurology and Neurosurgery. 95, 33-35 (1993).

- Lee, S. K., Wolfe, S. W. Peripheral nerve injury and repair. JAAOS-Journal of the American Academy of Orthopaedic Surgeons. 8 (4), 243-252 (2000).

- Rickett, T., Connell, S., Bastijanic, J., Hegde, S., Shi, R. Functional and mechanical evaluation of nerve stretch injury. Journal of Medical Systems. 35, 787-793 (2011).

- Topp, K. S., Boyd, B. S. Structure and biomechanics of peripheral nerves: nerve responses to physical stresses and implications for physical therapist practice. Physical Therapy. 86 (1), 92-109 (2006).

- Lu, Y., Chen, C., Kallakuri, S., Patwardhan, A., Cavanaugh, J. M. Development of an in vivo method to investigate biomechanical and neurophysiological properties of spine facet joint capsules. European Spine Journal. 14 (6), 565-572 (2005).

- Kallakuri, S., et al. Tensile stretching of cervical facet joint capsule and related axonal changes. European Spine Journal. 17 (4), 556-563 (2008).

- Abdel-Aziz, Y. I., Karara, H. M. Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photogrammetric Engineering & Remote Sensing. 81 (2), 103-107 (2015).

- Pourcelot, P., Audigié, F., Degueurce, C., Geiger, D., Denoix, J. M. A method to synchronise cameras using the direct linear transformation technique. Journal of Biomechanics. 33 (12), 1751-1754 (2000).

- Hedrick, T. L. Software techniques for two- and three-dimensional kinematic measurements of biological and biomimetic systems. Bioinspiration & Biomimetics. 3 (3), 034001 (2008).

- Chen, L., Armstrong, C. W., Raftopoulos, D. D. An investigation on the accuracy of three-dimensional space reconstruction using the direct linear transformation technique. Journal of Biomechanics. 27 (4), 493-500 (1994).

- Singh, A., Magee, R., Balasubramanian, S. Methods for in vivo biomechanical testing on brachial plexus in neonatal piglets. Journal of Visualized Experiments. (154), e59860 (2019).

- Black, J., Ellis, T. Multi camera image tracking. Image and Vision Computing. 24 (11), 1256-1267 (2006).

- Cardenas-Garcia, J. F., Yao, H. G., Zheng, S. 3D reconstruction of objects using stereo imaging. Optics and Lasers in Engineering. 22 (3), 193-213 (1995).

- Mahan, M. A., Yeoh, S., Monson, K., Light, A. Rapid stretch injury to peripheral nerves: biomechanical results. Neurosurgery. 85 (1), E137-E144 (2019).

- Rydevik, B. L., et al. An in vitro mechanical and histological study of acute stretching on rabbit tibial nerve. Journal of Orthopaedic Research. 8 (5), 694-701 (1990).

- Mahan, M. A., Warner, W. S., Yeoh, S., Light, A. Rapid-stretch injury to peripheral nerves: implications from an animal model. Journal of Neurosurgery. 133 (5), 1537-1547 (2019).

- Singh, A., Ferry, D., Balasubramanian, S. Efficacy of clinical simulation based training in biomedical engineering education. Journal of Biomechanical Engineering. 141 (12), 121011-121017 (2019).


Copyright © 2025 MyJoVE Corporation. All rights reserved