JoVE Methods Article

Substructure Analyzer: A User-Friendly Workflow for Rapid Exploration and Accurate Analysis of Cellular Bodies in Fluorescence Microscopy Images

Summary

We present a freely available workflow built for rapid exploration and accurate analysis of cellular bodies in specific cell compartments in fluorescence microscopy images. This user-friendly workflow is built on the open-source software Icy and also uses ImageJ functionalities. The pipeline can be used without prior knowledge of image analysis.

Abstract

The last decade has been characterized by breakthroughs in fluorescence microscopy techniques, illustrated by improvements in spatial resolution but also in live-cell imaging and high-throughput microscopy. This has led to a constant increase in the amount and complexity of the microscopy data generated by a single experiment. Because manual analysis of microscopy data is very time-consuming and subjective, and limits quantitative analysis, automation of bioimage analysis is becoming almost unavoidable. We built an informatics workflow called Substructure Analyzer to fully automate signal analysis in bioimages from fluorescence microscopy. This workflow is developed on the user-friendly open-source platform Icy and is complemented by functionalities from ImageJ. It includes the pre-processing of images to improve the signal-to-noise ratio, the individual segmentation of cells (detection of cell boundaries), and the detection/quantification of cell bodies enriched in specific cell compartments. The main advantage of this workflow is that it offers complex bioimaging functionalities to users without image analysis expertise through a user-friendly interface. Moreover, it is highly modular and can be adapted to a range of questions, from the characterization of nuclear/cytoplasmic translocation to the comparative analysis of different cell bodies in different cellular substructures. The functionality of this workflow is illustrated through the study of Cajal (coiled) Bodies under oxidative stress (OS) conditions. Data from fluorescence microscopy show that their integrity in human cells is impacted a few hours after the induction of OS. This effect is characterized by a decrease in coilin nucleation into characteristic Cajal Bodies, associated with a nucleoplasmic redistribution of coilin into an increased number of smaller foci.
The central role of coilin in the exchange between Cajal Body (CB) components and the surrounding nucleoplasm suggests that the OS-induced redistribution of coilin could affect the composition and the functionality of Cajal Bodies.

Introduction

Light microscopy and, more particularly, fluorescence microscopy are robust and versatile techniques commonly used in the biological sciences. They give access to the precise localization of various biomolecules, such as proteins or RNA, through specific fluorescent labeling. The last decade has been characterized by rapid advances in microscopy and imaging technologies, as evidenced by the 2014 Nobel Prize in Chemistry awarded to Eric Betzig, Stefan W. Hell, and William E. Moerner for the development of super-resolved fluorescence microscopy (SRFM)1. SRFM bypasses the diffraction limit of traditional optical microscopy, bringing it to the nanoscale. Improvements in techniques such as live-cell imaging and high-throughput screening have also increased the amount and complexity of the data to be processed in each experiment. Most of the time, researchers face highly heterogeneous cell populations and want to analyze phenotypes at the single-cell level.

Initially, analyses such as foci counting were performed by eye, an approach preferred by some researchers because it provides full visual control over the counting process. However, manual analysis of such data is very time-consuming, leads to variability between observers, and does not give access to more complex features, so computer-assisted approaches are becoming widely used and almost unavoidable2. Bioimage informatics methods substantially increase the efficiency of data analysis and are free of the operator subjectivity and potential bias inherent to manual counting. The growing demand in this field and increases in computing power have led to the development of a large number of image analysis platforms. Some of them are freely available and provide various tools to perform analyses on personal computers. A classification of open-access tools has recently been established3 and presents Icy4 as a powerful software package combining usability and functionality. Moreover, Icy has the advantage of communicating with ImageJ.

For users without image analysis expertise, the main obstacles are choosing the appropriate tool for the problem at hand and correctly tuning parameters that are often not well understood. Moreover, setup times are often long. Icy offers a user-friendly point-and-click interface named “Protocols” to build workflows by combining plugins from an extensive collection4. The flexible modular design and the point-and-click interface make setting up an analysis feasible for non-programmers. Here we present a workflow called Substructure Analyzer, developed in Icy’s interface, whose function is to analyze fluorescent signals in specific cellular compartments and measure features such as brightness, foci number, foci size, and spatial distribution. This workflow addresses several applications, such as quantification of signal translocation, analysis of transfected cells expressing a fluorescent reporter, or analysis of foci from different cellular substructures in individual cells. It allows the simultaneous processing of multiple images, and results are exported to a tab-delimited worksheet that can be opened in common spreadsheet programs.

The Substructure Analyzer pipeline is presented in Figure 1. First, all the images contained in a specified folder are pre-processed to improve their signal-to-noise ratio. This step increases the efficiency of the following steps and decreases the running time. Then, the Regions of Interest (ROIs), corresponding to the image areas where the fluorescent signal should be detected, are identified and segmented. Finally, the fluorescent signal is analyzed, and the results are exported into a tab-delimited worksheet.
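To make the four stages concrete, here is a minimal Python sketch of how they chain together for a folder of images. It is not the Icy protocol: `run_pipeline`, `subtract_min`, `bright_pixels` and `measure` are hypothetical names, and the toy functions merely stand in for the real pre-processing, segmentation and analysis blocks. Only the tab-delimited export relies on a real library (Python's `csv` module).

```python
import csv
import io
from typing import Callable, Dict, List, Tuple

Image = List[List[float]]    # a 2D grayscale image as nested lists (illustrative only)
ROI = List[Tuple[int, int]]  # an ROI as a list of (row, col) pixel coordinates


def run_pipeline(
    images: Dict[str, Image],
    preprocess: Callable[[Image], Image],
    segment: Callable[[Image], List[ROI]],
    analyze: Callable[[Image, List[ROI]], Dict[str, float]],
) -> str:
    """Apply pre-process -> segment -> analyze to every image; return a tab-delimited table."""
    rows = []
    for name in sorted(images):
        cleaned = preprocess(images[name])  # 1. improve the signal-to-noise ratio
        rois = segment(cleaned)             # 2. identify and segment the ROIs
        features = analyze(cleaned, rois)   # 3. measure the signal inside the ROIs
        rows.append({"image": name, **features})
    columns = ["image"] + sorted({key for row in rows for key in row if key != "image"})
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=columns, delimiter="\t", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)                  # 4. export one row per image
    return out.getvalue()


# Hypothetical toy stand-ins for the real processing steps.
def subtract_min(img: Image) -> Image:
    low = min(min(row) for row in img)
    return [[v - low for v in row] for row in img]


def bright_pixels(img: Image) -> List[ROI]:
    return [[(r, c) for r, row in enumerate(img) for c, v in enumerate(row) if v > 0]]


def measure(img: Image, rois: List[ROI]) -> Dict[str, float]:
    total = sum(img[r][c] for roi in rois for r, c in roi)
    return {"n_rois": len(rois), "total_signal": total}


table = run_pipeline({"imgA": [[1, 1], [1, 5]]}, subtract_min, bright_pixels, measure)
print(table, end="")
```

Passing each stage as a function mirrors the modular design of the workflow: any stage can be swapped without touching the others, and the export step does not need to know which features were measured.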

Object segmentation (detection of boundaries) is the most challenging step in image analysis, and its efficiency determines the accuracy of the resulting cell measurements. The first objects identified in an image (called primary objects) are often nuclei from DNA-stained images (DAPI or Hoechst staining), although primary objects can also be whole cells, beads, speckles, tumors, or any other stained objects. In most biological images, cells or nuclei touch or overlap each other, causing simple and fast algorithms to fail. To date, no universal algorithm can perfectly segment all objects, mostly because object characteristics (size, shape, or texture) modulate the efficiency of segmentation5. The segmentation tools commonly distributed with microscopy software (such as the MetaMorph Imaging Software by Molecular Devices6 or the NIS-Elements Advanced Research software by Nikon7) are generally based on standard techniques such as correlation matching, thresholding, or morphological operations. Although efficient in basic systems, these general-purpose methods rapidly show limitations in more challenging and specific contexts. Indeed, segmentation is highly sensitive to experimental parameters such as cell type, cell density, or biomarkers, and frequently requires repeated adjustment for a large data set. The Substructure Analyzer workflow integrates both simple and more sophisticated algorithms to offer different alternatives adapted to image complexity and user needs. Notably, it offers the marker-based watershed algorithm8 for highly clustered objects. The efficiency of this segmentation method relies on the selection of an individual marker for each object. These markers are usually selected manually to obtain correct parameters for full segmentation, which is very time-consuming when users face a large number of objects.
Substructure Analyzer offers automatic detection of these markers, providing a highly efficient segmentation process. Segmentation is usually the limiting step of image analysis, and its processing time can vary considerably depending on the image resolution, the number of objects per image, and the level of object clustering. Typical pipelines require from a few seconds to 5 minutes per image on a standard desktop computer. Analysis of more complex images may require a more powerful computer and some basic knowledge of image analysis.

The flexibility and functionality of this workflow are illustrated with various examples in the representative results. The advantages of this workflow are notably demonstrated through the study of nuclear substructures under oxidative stress (OS) conditions. OS corresponds to an imbalance of redox homeostasis in favor of oxidants and is associated with high levels of reactive oxygen species (ROS). Since ROS act as signaling molecules, changes in their concentration and subcellular localization positively or negatively affect a myriad of pathways and networks that regulate physiological functions, including signal transduction, repair mechanisms, gene expression, cell death, and proliferation9,10. OS is thus directly involved in various pathologies (neurodegenerative and cardiovascular diseases, cancers, diabetes, etc.), but also in cellular aging. Therefore, deciphering the consequences of OS on the organization and function of human cells constitutes a crucial step in understanding the roles of OS in the onset and development of human pathologies. It has been established that OS regulates gene expression by modulating transcription through several transcription factors (p53, Nrf2, FOXO3A)11, but also by affecting the regulation of several co- and post-transcriptional processes such as alternative splicing (AS) of pre-RNAs12,13,14. Alternative splicing of primary coding and non-coding transcripts is an essential mechanism that increases the coding capacity of genomes by producing transcript isoforms. AS is performed by a huge ribonucleoprotein complex called the spliceosome, containing almost 300 proteins and 5 U-rich small nuclear RNAs (UsnRNAs)15. Spliceosome assembly and AS are tightly controlled in cells, and some steps of spliceosome maturation occur within membrane-less nuclear compartments named Cajal Bodies.
These nuclear substructures are characterized by the dynamic nature of their structure and composition, which are mainly governed by multivalent interactions of their RNA and protein components with the coilin protein. Analysis of thousands of cells with the Substructure Analyzer workflow allowed the characterization of previously undescribed effects of OS on Cajal Bodies. Indeed, the data suggest that OS modifies the nucleation of Cajal Bodies, inducing a nucleoplasmic redistribution of the coilin protein into numerous smaller nuclear foci. Such a change in the structure of Cajal Bodies might affect the maturation of the spliceosome and participate in the modulation of AS by OS.

Protocol

NOTE: User-friendly tutorials are available on Icy’s website http://icy.bioimageanalysis.org.

1. Download Icy and the Substructure Analyzer protocol

- Download Icy from the Icy website (http://icy.bioimageanalysis.org/download) and download the Substructure Analyzer protocol: http://icy.bioimageanalysis.org/protocols?sort=latest.

NOTE: If using a 64-bit operating system, be sure to use the 64-bit version of Java. This version allows the memory allocated to Icy to be increased (Preferences | General | Max memory).

2. Opening the protocol

- Open Icy and click on Tools in the Ribbon menu.

- Click on Protocols to open the Protocols Editor interface.

- Click on Load and open the protocol Substructure Analyzer. Protocol loading can take a few seconds. Make sure the protocol has fully loaded before using it.

NOTE: The workflow is composed of 13 general blocks presented in Figure 2a. Each block works as a pipeline composed of several boxes performing specific subtasks.

3. Interacting with the workflow on Icy

NOTE: Each block or box is numbered and has a specific rank within the workflow (Figure 2b). Clicking on this number moves the selected block/box as close as possible to the first position, and the positions of the other blocks/boxes are reorganized accordingly. Respect the correct order of the blocks when preparing the workflow. For example, Spot Detector blocks need predefined ROIs, so Segmentation blocks have to run before Spot Detector blocks. Do not modify the position of boxes. Do not use “.” in the image’s name.

- Click the icon in the upper left corner of a block to collapse, expand, enlarge, narrow, or remove it (Figure 2b).

- Each pipeline of the workflow is characterized by a network of boxes connected via their inputs and outputs (Figure 2b). To create a connection, click on an output and hold the mouse button down while dragging the cursor to an input. Connections can be removed by clicking on the Output tag.

4. Merging of the channels of an image

- Use the block Merge Channels to generate merged images. If necessary, rename the files so that sequences to be merged share the same name prefix followed by a distinct separator. For example, sequences of individual channels from an Image A are named ImageA_red and ImageA_blue.

NOTE: For the separator, do not use characters already present in the image’s name.

- In the same folder, create one new folder per channel to merge. For example, to merge red, green, and blue channels, create 3 folders, and store the corresponding sequences in these folders.

- Use only the block Merge Channels: remove the other blocks and save the protocol as Merge Channels.

- Access the boxes to set parameters. For each channel, fill the boxes Channel number X (boxes 1, 5 or 9), Folder channel number X (boxes 2, 6 or 10), Separator channel number X (boxes 3, 7 or 11) and Colormap channel nb X (boxes 4, 8 and 12) respectively.

NOTE: These boxes are grouped horizontally by four, each line corresponding to the same channel. In each line, a display is also available (boxes 23, 24, or 25) to directly visualize the sequence of the corresponding channel.

- In the box Channel number X, choose which channel to extract (in classical RGB images, 0=Red, 1=Green, 2=Blue). The different channels of an image can be accessed quickly in the Sequence tab of Icy’s Inspector window. Write the smallest channel value in the upper line and the highest one in the bottom line.

- In the box Folder channel number X, write the name of the folder containing images of channel X, preceded by a backslash (\Name).

- In the box Separator channel number X, write the separator used for the image’s name (in the previous example: “_red”, “_green” and “_blue”).

- In the box Colormap channel nb X, indicate with a number which colormap model to use to visualize the corresponding channel in Icy. The available colormaps are visible in the Sequence tab of the Inspector window.

- In the box Format of merged images (box 28), write the extension to save merged images: .tif, .gif, .jpg, .bmp or .png.

NOTE: To merge only 2 channels, do not fill the four boxes corresponding to the third channel.

- On the upper left corner of the Merge Channels block, click on the link directly to the right of Folder. In the Open dialog box which appears, double-click on the folder containing sequences of the first channel that has been defined in the box Folder channel number 1 (box 2). Then, click on Open.

- Run the protocol by clicking on the black arrow in the upper left corner of the Merge Channels block (see part 7 for more details). Merged images are saved in a Merge folder in the same directory as the folders of individual channels.
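The naming convention above (shared prefix plus a per-channel separator, one folder per channel) is what allows the block to pair files across folders. The following Python sketch illustrates that pairing logic only; it is not how the Icy block is implemented, and `group_channels` and the example file names are hypothetical. It assumes the separator is the last part of the name before the file extension, as in the ImageA_red example.

```python
from collections import defaultdict
from typing import Dict, List


def group_channels(folders: Dict[int, List[str]], separators: Dict[int, str]) -> Dict[str, Dict[int, str]]:
    """Map each image prefix to {channel number: file name}.

    folders maps a channel number (0=Red, 1=Green, 2=Blue in RGB images) to the
    file names found in that channel's folder; separators maps the same channel
    number to its separator, e.g. "_red".
    """
    groups: Dict[str, Dict[int, str]] = defaultdict(dict)
    for channel, names in folders.items():
        sep = separators[channel]
        for name in names:
            stem = name.rsplit(".", 1)[0]   # drop the file extension
            if not stem.endswith(sep):
                raise ValueError(f"{name!r} does not end with separator {sep!r}")
            prefix = stem[: -len(sep)]      # shared image prefix, e.g. "ImageA"
            groups[prefix][channel] = name
    return dict(groups)


files = {0: ["ImageA_red.tif", "ImageB_red.tif"], 2: ["ImageA_blue.tif", "ImageB_blue.tif"]}
seps = {0: "_red", 2: "_blue"}
print(group_channels(files, seps))
# {'ImageA': {0: 'ImageA_red.tif', 2: 'ImageA_blue.tif'}, 'ImageB': {0: 'ImageB_red.tif', 2: 'ImageB_blue.tif'}}
```

Each prefix ends up with one file per channel, which is the set of sequences that would be merged into a single multichannel image.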

5. Segmentation of the regions of interest

NOTE: Substructure Analyzer integrates both simple and more sophisticated algorithms to propose different alternatives adapted to image complexity and user needs.

- Select the adapted block.

- If objects do not touch each other, or the user does not need to differentiate clustered objects individually, use the block Segmentation A: Non clustered objects.

- When objects do not touch each other, but some of them are close, use the block Segmentation B: Poorly clustered objects.

- For objects with a high clustering level and a convex shape, use the block Segmentation C: Clustered objects with convex shapes.

- If objects present a high clustering level and have irregular shapes, use the block Segmentation D: Clustered objects with irregular shapes.

- Use the block Segmentation E: Clustered cytoplasm to segment touching cytoplasms individually using segmented nuclei as markers. This block requires previously segmented nuclei.

NOTE: Blocks adapted for primary object segmentation run independently, so several blocks can be used in the same run to compare their efficiency for a particular substructure or to segment different types of substructures. If the clustering level is heterogeneous within the same set of images, process small and highly clustered objects separately in the adapted blocks.
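The selection rules listed above can be summarized as a small decision function. This is only a hypothetical Python helper (`choose_segmentation_block` is not part of the workflow) that encodes the criteria as described; the boolean flags are simplifications of what is, in practice, a visual judgment of the images.

```python
def choose_segmentation_block(
    highly_clustered: bool,
    some_objects_close: bool = False,
    convex: bool = True,
    separate_clustered: bool = True,
    cytoplasm: bool = False,
) -> str:
    """Return the segmentation block suggested by the protocol's selection rules (hypothetical helper)."""
    if cytoplasm:
        # Block E needs nuclei that were already segmented by another block.
        return "Segmentation E: Clustered cytoplasm"
    if not separate_clustered:
        # Touching objects do not need to be told apart individually.
        return "Segmentation A: Non clustered objects"
    if highly_clustered:
        if convex:
            return "Segmentation C: Clustered objects with convex shapes"
        return "Segmentation D: Clustered objects with irregular shapes"
    if some_objects_close:
        return "Segmentation B: Poorly clustered objects"
    return "Segmentation A: Non clustered objects"


print(choose_segmentation_block(highly_clustered=True, convex=False))
# Segmentation D: Clustered objects with irregular shapes
```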

- Link the output0 (File) of the block Select Folder to the folder input of the chosen segmentation block.

- Set parameters of the chosen segmentation block.

- Segmentation A: Non clustered objects and Segmentation C: Clustered objects with convex shapes

- In box Channel signal (box 1), set the channel of the objects to segment.

- Optionally, in the box Gaussian filter (box 2), increase the X and Y sigma values if the signal inside objects is heterogeneous. The Gaussian filter smooths out textures to obtain more uniform regions and increases the speed and efficiency of nuclei segmentation. The smaller the objects, the lower the sigma value should be. Avoid high sigma values. The default values are 0.

- In box HK-Means (box 3), set the Intensity classes parameter and the approximate minimum and maximum sizes (in pixels) of objects to be detected.

NOTE: For intensity classes, a value of 2 classifies pixels into 2 classes: background and foreground. It is thus suitable when the contrast between the objects and the background is high. If foreground objects have different intensities or if the contrast with the background is low, increase the number of classes. The default setting is 2. Object size can be quickly evaluated by manually drawing an ROI around the object of interest. The size of the ROI (Interior, in pixels) appears directly on the image when pointing at it with the cursor, or can be accessed in the ROI statistics window (open it from the search bar). Optimal parameters detect each foreground object in a single ROI. They can be determined manually in Icy (Detection and Tracking | HK-Means).

- In the box Active Contours (box 4), optimize the detection of object borders. Exhaustive documentation for this plugin is available online: http://icy.bioimageanalysis.org/plugin/Active_Contours. Correct parameters can also be determined manually in Icy (Detection and Tracking | Active Contours).

- During the process, a folder is automatically created to save images of segmented objects. In the box Text (box 6), name this folder (e.g., Segmented nuclei). To set the format for saving images of segmented objects (Tiff, Gif, Jpeg, BMP, PNG), fill in the box Format of images of segmented objects. The folder is created in the folder containing merged images.

- Run the workflow (for details, see part 7).
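The two pre-segmentation settings of this block, Gaussian smoothing (sigma) and the number of intensity classes, can be illustrated with a small Python sketch. It is an assumption-laden stand-in, not Icy code: Icy's HK-Means plugin uses, as its name suggests, a hierarchical variant of k-means, whereas `kmeans_1d` below is plain k-means on pixel intensities; `gaussian_blur` is a simple separable blur with border clamping. All function names are hypothetical.

```python
import math
from typing import List, Sequence

Image = List[List[float]]


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1D Gaussian kernel truncated at 3 sigma; sigma <= 0 means no smoothing."""
    if sigma <= 0:
        return [1.0]
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_rows(img: Sequence[Sequence[float]], kernel: List[float]) -> Image:
    """Filter every row with the (symmetric) kernel, clamping indices at the image border."""
    half = len(kernel) // 2
    width = len(img[0])
    return [
        [sum(k * row[min(max(c + i - half, 0), width - 1)] for i, k in enumerate(kernel))
         for c in range(width)]
        for row in img
    ]


def gaussian_blur(img: Image, sigma: float) -> Image:
    """Separable 2D Gaussian blur: filter the rows, then the columns (via transposition)."""
    kernel = gaussian_kernel(sigma)
    rows_done = convolve_rows(img, kernel)
    cols_done = convolve_rows(list(zip(*rows_done)), kernel)
    return [list(row) for row in zip(*cols_done)]


def kmeans_1d(values: List[float], k: int, iterations: int = 100) -> List[float]:
    """Plain 1D k-means on pixel intensities; returns the class centres in ascending order."""
    lo, hi = min(values), max(values)
    centres = [lo + (hi - lo) * (j + 0.5) / k for j in range(k)]  # evenly spaced start
    for _ in range(iterations):
        clusters: List[List[float]] = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda j: abs(v - centres[j]))
            clusters[nearest].append(v)
        updated = [sum(c) / len(c) if c else centres[j] for j, c in enumerate(clusters)]
        if updated == centres:
            break
        centres = updated
    return sorted(centres)


spot = [[0.0] * 7 for _ in range(7)]
spot[3][3] = 100.0
print(gaussian_blur(spot, 1.0)[3][3])        # a one-pixel spot loses most of its peak
print(kmeans_1d([10, 10, 12, 100, 98], k=2))  # [10.666666666666666, 99.0]
```

The first print shows why the protocol recommends low sigma values for small objects: a sigma of 1 already spreads a single bright pixel over its neighbourhood. The second shows the two-class case (background vs. foreground) that suits high-contrast images.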

- Segmentation B: Poorly clustered objects

- Follow the same steps as in 5.3.1 to set the parameters of the boxes Channel signal, HK-Means, Active Contours, Extension to save segmented objects and Text (box ranks are not the same as in step 5.3.1).

- In the box Call IJ plugin (box 4), set the Rolling parameter to control background subtraction. Set this parameter to at least the size of the largest object that is not part of the background. Decreasing this value increases background removal but can also induce loss of foreground signal.

- In the box Adaptive histogram equalization (box 6), improve the contrast between the foreground objects and the background. Increasing the slope gives higher-contrast sequences.

- Run the workflow (for details, see part 7).
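The advice to set the Rolling parameter to at least the size of the largest foreground object can be seen on a single intensity profile. ImageJ's Subtract Background uses a rolling-ball (or sliding-paraboloid) approach; the Python sketch below swaps in a simpler technique, a grey-level opening with a flat window (a morphological top-hat), which behaves analogously with respect to object size. The function names are hypothetical and the window size is not meant to equal ImageJ's rolling-ball radius.

```python
from typing import Callable, List, Sequence


def sliding(values: Sequence[float], radius: int, op: Callable[[Sequence[float]], float]) -> List[float]:
    """Apply op (min or max) over a flat window of 2*radius+1 samples, truncated at the ends."""
    n = len(values)
    return [op(values[max(0, i - radius): min(n, i + radius + 1)]) for i in range(n)]


def opening(profile: Sequence[float], radius: int) -> List[float]:
    """Grey-level opening: erosion (sliding minimum) followed by dilation (sliding maximum)."""
    return sliding(sliding(profile, radius, min), radius, max)


def top_hat(profile: Sequence[float], radius: int) -> List[float]:
    """Subtract the opening, used here as the background estimate, from the signal."""
    return [p - b for p, b in zip(profile, opening(profile, radius))]


line = [10, 10, 10, 10, 50, 50, 50, 10, 10, 10, 10]  # an object 3 pixels wide on a flat background
print(top_hat(line, radius=2))  # window of 5 pixels, wider than the object: object kept
# [0, 0, 0, 0, 40, 40, 40, 0, 0, 0, 0]
print(top_hat(line, radius=1))  # window of 3 pixels, not wider than the object: object removed
# [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```

When the window is too small, the object itself fits inside it and is treated as background, which is the loss of foreground signal the protocol warns about.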

- Segmentation D: Clustered objects with irregular shapes

NOTE: Three different segmentation methods are applied to each image: first, HK-means clustering combined with the Active Contours method. Then, the classical watershed algorithm (using the Euclidean distance map) is applied to previously mis-segmented objects. Finally, a marker-based watershed algorithm is used. Only the HK-means and marker-based watershed methods need user intervention. For both methods, the same parameters can be applied to all images (fully automated version) or changed for each image (semi-automated version). If the user is not trained in these segmentation methods, the semi-automated processing is highly recommended. During the processing of this block, manual intervention is needed: when a segmentation method is finished, the user must manually remove mis-segmented objects before the next segmentation method begins. Successfully segmented objects are saved and not considered in the next step(s). This block has to be connected to the block Clustered/heterogeneous shapes primary objects segmentation Dialog Box to work correctly.

- Download the ImageJ collection MorphoLibJ from https://github.com/ijpb/MorphoLibJ/releases. The MorphoLibJ 1.4.0 version is used in this protocol. Place the file MorphoLibJ_-1.4.0.jar in the folder icy/ij/plugins. More information about the content of this collection is available at https://imagej.net/MorphoLibJ.
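The second method in the note above, the classical watershed on a Euclidean distance map, works because the distance map of two touching convex objects has one peak per object and a saddle at the neck between them. The Python sketch below only computes that map (by brute force, so for tiny images only) and its peaks; it does not implement the watershed flooding itself and is not the code used by Icy or ImageJ. `euclidean_distance_map` and `distance_peaks` are hypothetical names.

```python
import math
from typing import List, Tuple

Mask = List[List[int]]  # 1 = object pixel, 0 = background pixel


def euclidean_distance_map(mask: Mask) -> List[List[float]]:
    """Distance from each object pixel to the nearest background pixel (brute force).

    Assumes the mask contains at least one background pixel.
    """
    background = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v == 0]
    return [
        [min(math.hypot(r - br, c - bc) for br, bc in background) if v else 0.0
         for c, v in enumerate(row)]
        for r, row in enumerate(mask)
    ]


def distance_peaks(dist: List[List[float]]) -> List[Tuple[int, int]]:
    """Object pixels strictly higher than all of their 8 neighbours."""
    rows, cols = len(dist), len(dist[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            if dist[r][c] == 0:
                continue
            neighbours = [dist[rr][cc]
                          for rr in range(max(0, r - 1), min(rows, r + 2))
                          for cc in range(max(0, c - 1), min(cols, c + 2))
                          if (rr, cc) != (r, c)]
            if all(dist[r][c] > n for n in neighbours):
                peaks.append((r, c))
    return peaks


# Two 3x3 objects joined by a one-pixel neck.
mask = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
edm = euclidean_distance_map(mask)
print(edm[2][2], edm[2][4])  # 2.0 at an object centre, 1.0 at the neck
print(distance_peaks(edm))   # [(2, 2), (2, 6)]: one peak per object
```

For irregular shapes, a single object can produce several distance peaks, which is why the block falls back on the marker-based watershed for objects that remain mis-segmented.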

- Follow the same steps as in step 5.3.1 to set the parameters of the boxes Channel signal, Gaussian filter, Active Contours, Extension to save segmented objects and Text. Box ranks are not the same as in step 5.3.1.

- Set parameters of the box Adaptive histogram equalization (see step 5.3.2.3).

- To activate Subtract Background, write Yes in Apply Subtract Background? (box 5). Otherwise, write No. If the plugin is activated, set the rolling parameter (see step 5.3.2) in the box Subtract Background parameter (box 7).

- Automation of HK-means: to apply the same parameters to all images (fully automated processing), set the Nb of classes (box 11), the Minimum size (box 12), and the Maximum size (box 13) (see step 5.3.1). These parameters must be set to select a maximum of foreground pixels and to optimize the individualization of foreground objects. For the semi-automated processing version, no intervention is needed.

- Automation of marker extraction: for the fully automated version, expand the box Internal Markers extraction (box 27) and set the value of the “dynamic” parameter in line 13 of the script. For the semi-automated processing version, no intervention is needed.

NOTE: Markers are extracted by applying an extended-minima transformation, controlled by a “dynamic” parameter, to an input image. In the marker-based watershed algorithm, flooding from these markers is simulated to perform object segmentation. For successful segmentation of foreground objects, a single marker per foreground object should be extracted. The optimal setting of the “dynamic” parameter for marker extraction depends mostly on the image resolution. Users unfamiliar with this parameter should therefore use the semi-automated version.

- Run the workflow (for details, see part 7).
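The effect of the “dynamic” parameter can be illustrated on a one-dimensional profile. The Python sketch below computes extended minima in the textbook way, as the regional minima of the h-minima transform obtained by morphological reconstruction by erosion of the profile raised by h. It is a simplified 1D illustration under that assumption, not MorphoLibJ's implementation (which works on 2D/3D images with a chosen connectivity); `reconstruct_by_erosion` and `extended_minima` are hypothetical names.

```python
from typing import List, Sequence, Tuple


def reconstruct_by_erosion(marker: Sequence[float], mask: Sequence[float]) -> List[float]:
    """Morphological reconstruction by erosion in 1D (marker >= mask everywhere)."""
    current = list(marker)
    n = len(current)
    while True:
        eroded = [min(current[max(0, i - 1): i + 2]) for i in range(n)]
        updated = [max(e, m) for e, m in zip(eroded, mask)]
        if updated == current:
            return current
        current = updated


def extended_minima(profile: Sequence[float], dynamic: float) -> List[Tuple[int, int]]:
    """Return (start, end) index runs of the extended minima for a given dynamic h."""
    # The h-minima transform fills every basin that is not deeper than h ...
    filled = reconstruct_by_erosion([v + dynamic for v in profile], profile)
    # ... and its regional minima (flat runs with strictly higher neighbours) are the markers.
    runs, i, n = [], 0, len(filled)
    while i < n:
        j = i
        while j + 1 < n and filled[j + 1] == filled[i]:
            j += 1
        left_ok = i == 0 or filled[i - 1] > filled[i]
        right_ok = j == n - 1 or filled[j + 1] > filled[i]
        if left_ok and right_ok:
            runs.append((i, j))
        i = j + 1
    return runs


# Inverted intensity profile: two deep basins (objects) and one shallow dip (noise).
profile = [9, 2, 9, 8, 7, 8, 9, 1, 9]
print(extended_minima(profile, 0))   # [(1, 1), (4, 4), (7, 7)]: noise gives an extra marker
print(extended_minima(profile, 2))   # [(1, 1), (7, 7)]: one marker per object
print(extended_minima(profile, 10))  # [(0, 8)]: both objects merged into one marker
```

Too small a dynamic yields extra markers and over-segmentation; too large a dynamic merges objects into one marker. This is why the optimal value depends on image resolution and is easiest to find interactively with the semi-automated version.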

- At the beginning of the processing, the dialog boxes HK-means parameters and Marker-based watershed open in succession. To apply the same parameters to all images (fully automated version), click on YES. Otherwise, click on NO. An information box opens, asking to “Determine optimal ROIs with HK-Means plugin and close image”. Click on OK and manually apply the HK-Means plugin (Detection and Tracking | HK-Means) to the image, which opens automatically. Select the Export ROIs option in the HK-Means plugin box. Apply the parameters that give ROIs containing a maximum of foreground pixels and optimize the individualization of foreground objects. When optimal ROIs are found, close the image.

- At the end of the first segmentation method, an information box opens and asks to “Remove unwanted ROIs and close image”. These ROIs correspond to the borders of the segmented objects. Select OK and remove the ROIs of mis-segmented objects in the image, which opens automatically. An ROI can easily be removed by placing the cursor on its border and pressing the Delete key. Close the image. Repeat the same procedure after completion of the second segmentation step.

- At this stage, if the YES button was selected for the full-automatization of the marker-based watershed algorithm, the previously set parameters will be applied to all images.

- If the NO button was selected, an information box opens, asking to “Determine and adjust internal markers”. Click on OK and, within the ImageJ interface of Icy, go to Plugins | MorphoLibJ | Minima and Maxima | Extended Min & Max. In Operation, select Extended Minima.

- Select Preview to preview the result of the transformation on the automatically opened image. Adjust the dynamic until optimal markers are observed. Markers are groups of pixels with a value of 255 (not necessarily white pixels). Optimal parameters lead to one marker per object. Focus on the remaining objects that have not been correctly segmented by the two previous segmentation methods.

- If necessary, improve the markers by applying additional morphological operations such as “Opening” or “Closing” (Plugins | MorphoLibJ | Morphological Filters). Once the final image of markers is obtained, keep it open and close all the other images, finishing with the image initially used as input for the Extended Minima operation. Click on No if an ImageJ box asks to save changes to this image.

- In the box Nb of images with Information Box (box 14), determine how many images with Information Boxes should appear.
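When adjusting markers, the goal stated above is one marker (a group of pixels with value 255) per object. The Python sketch below shows one way such a check could be automated on a marker image and a label image of objects; it is a hypothetical helper, not a feature of the workflow, and it assumes 4-connectivity for grouping marker pixels (MorphoLibJ lets the user choose the connectivity).

```python
from typing import Dict, List, Set, Tuple

Pixel = Tuple[int, int]


def components_4(pixels: Set[Pixel]) -> List[Set[Pixel]]:
    """Split a set of pixels into 4-connected components with an iterative flood fill."""
    remaining = set(pixels)
    components = []
    while remaining:
        seed = remaining.pop()
        component, stack = {seed}, [seed]
        while stack:
            r, c = stack.pop()
            for nb in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                if nb in remaining:
                    remaining.remove(nb)
                    component.add(nb)
                    stack.append(nb)
        components.append(component)
    return components


def markers_per_object(markers: List[List[int]], labels: List[List[int]]) -> Dict[int, int]:
    """Count marker components (pixels equal to 255) overlapping each labelled object (0 = background)."""
    marker_pixels = {(r, c) for r, row in enumerate(markers) for c, v in enumerate(row) if v == 255}
    counts = {lab: 0 for row in labels for lab in row if lab != 0}
    for component in components_4(marker_pixels):
        for lab in {labels[r][c] for r, c in component} - {0}:
            counts[lab] += 1
    return counts


labels = [
    [1, 1, 1, 2, 2, 2],
    [1, 1, 1, 2, 2, 2],
    [1, 1, 1, 2, 2, 2],
]
markers = [
    [0, 0, 0, 0, 0, 0],
    [0, 255, 0, 255, 0, 255],
    [0, 0, 0, 0, 0, 0],
]
counts = markers_per_object(markers, labels)
print({obj: n for obj, n in counts.items() if n != 1})  # {2: 2}: object 2 would be split in two
```

Objects with zero markers would be missed by the watershed, and objects with two or more would be over-segmented; both cases point to a dynamic value (or morphological clean-up) that needs adjusting.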

- Segmentation E: Clustered cytoplasm

NOTE: This block uses previously segmented nuclei as individual markers to initiate cytoplasm segmentation. Make sure that the nuclei segmentation block has run before using it.

- In the box Channel cytoplasm (box 1), set the channel of the cytoplasmic signal.

- In the box Extension segmented nuclei (box 2), write the format used to save images of segmented nuclei (tif, jpeg, bmp, png). The default format is tif.

- In the box Text (box 3), write the name of the folder containing segmented nuclei, preceded by a backslash (\Name).

- In the box Format of images of segmented cytoplasms (box 4), set the format to use for saving segmented objects images (Tiff, Gif, Jpeg, BMP, PNG).

- During the process, a folder is automatically created to save images of segmented cytoplasms. In the box Text (box 5), name this folder (e.g., Segmented cytoplasms). The folder is created in the folder containing merged images.

- Follow the same steps as in step 5.3.1 to set parameters of boxes Gaussian filter and Active Contours (Be careful, box ranks are not the same as in step 5.3.1).

- Run the workflow (for details, see part 7).

6. Fluorescent signal detection and analysis

- Select the adapted block.

- In the block Fluorescence Analysis A: 1 Channel, perform detection and analysis of foci in one channel inside one type of segmented object: detection of coilin foci (red channel) within the nucleus.

- In the block Fluorescence Analysis B: 2 Channels in the same compartment, perform detection and analysis of foci in two channels inside one type of segmented object: detection of coilin (red channel) and 53BP1 (green channel) foci within the nucleus.

- In the block Fluorescence Analysis C: 2 Channels in two compartments, perform detection and analysis of foci in one or two channels, specifically inside the nuclei and their corresponding cytoplasm: detection of coilin foci (red channel) both within the nucleus and its corresponding cytoplasm, or detection of coilin foci (red channel) within the nucleus and G3BP foci (green channel) within the corresponding cytoplasm.

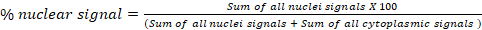

- In the block Fluorescence Analysis D: Global Translocation, calculate the percentage of signal from one channel in two cellular compartments (a and b). For example, in a cytoplasm/nucleus translocation assay, export calculated percentages of nuclear and cytoplasmic signals for each image in the final “Results” spreadsheet. The formula used to calculate the nuclear signal percentage is shown below. This block can be used for any subcellular compartment:

- In the block Fluorescence Analysis E: Individual Cell Translocation, calculate the percentage of signal from one channel in two cellular compartments for each cell. This block is specifically optimized for nucleus/cytoplasm translocation assays at the single-cell level.

NOTE: Because the block Fluorescence Analysis E: Individual Cell Translocation performs analysis at the single-cell level, efficient segmentation of nucleus and cytoplasm is needed.
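The exact formula used by the translocation blocks is not reproduced in the text here. The Python sketch below assumes it is the share of summed (integrated) intensity found in compartment a relative to compartments a and b together, expressed as a percentage; if the blocks use mean intensities instead, the numbers will differ. `percent_in_a` and `per_cell_percent` are hypothetical names.

```python
from typing import Dict, List, Tuple


def percent_in_a(signal_a: float, signal_b: float) -> float:
    """Share (%) of the total signal located in compartment a (assumed formula)."""
    total = signal_a + signal_b
    if total == 0:
        raise ValueError("no signal in either compartment")
    return 100.0 * signal_a / total


def per_cell_percent(cells: Dict[int, Tuple[List[float], List[float]]]) -> Dict[int, float]:
    """cells maps a cell id to (nuclear pixel intensities, cytoplasmic pixel intensities)."""
    return {cell: percent_in_a(sum(nucleus), sum(cytoplasm))
            for cell, (nucleus, cytoplasm) in cells.items()}


# Global translocation (analogous to block D): summed signal of all nuclei vs all cytoplasms of an image.
print(percent_in_a(300.0, 100.0))  # 75.0
# Per-cell translocation (analogous to block E): one percentage per segmented cell.
print(per_cell_percent({1: ([10, 10], [5, 5, 5, 5]), 2: ([30], [10])}))  # {1: 50.0, 2: 75.0}
```

The per-cell version shows why block E needs an accurate pairing of each nucleus with its own cytoplasm: a mis-segmented cytoplasm changes the denominator of that cell's percentage.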

- Link the output0 (File) of the block Select Folder (block 1) to the folder input (white arrows in black circles) of the chosen block.

- Set the parameters of the chosen block.

- Fluorescence Analysis A: 1 Channel, Fluorescence Analysis B: 2 Channels in the same compartment and Fluorescence Analysis C: 2 Channels in two compartments

- In the box Folder images ROI, write the name of the folder containing images of segmented objects preceded by a backslash. (For example: \Segmented nuclei).

- In the box Format of images of segmented objects (box 2), write the format used to save images of segmented objects (tif, jpeg, bmp, png). The default format is tif.

- In the box Kill Borders?, write Yes to remove border objects. Otherwise, write No. The installation of the MorphoLibJ collection of ImageJ is required to use this function (see step 5.3.3).

- In the box(es) Channel spots signal, set the channel where spots have to be detected. In classical RGB images, 0=Red, 1=Green, and 2=Blue.

- In the boxes Name of localized molecule, write the name of the molecule localizing into the spots. The number of fields to enter depends on the number of molecules.

- In the box(es) Wavelet Spot Detector Block, set the spot detection parameters for each channel. Set the scale(s) (related to spot size) and the sensitivity of the detection (a smaller sensitivity decreases the number of detected spots; the default value is 100 and the minimum value is 0). Exhaustive documentation of this plugin is available online: http://icy.bioimageanalysis.org/plugin/Spot_Detector. Parameters can also be defined manually in Icy (Detection and Tracking | Spot Detector).

- As an option, in the box Filter ROI by size, filter the segmented objects where spots are detected by setting an interval of size (in pixels). This step is especially useful to remove under- or over-segmented objects. To manually estimate the size of objects, see step 5.3.1. Default parameters do not include the filtering of ROIs by size. The block 2 Channels in two compartments contains two boxes: Filter nuclei by size (box 19) and Filter cytoplasm by size (box 46).

- Optionally, in the box Filter spots by size, filter the detected spots according to their size (in pixels) to remove unwanted artifacts. To manually estimate spot size, click on Detection and Tracking and open the Spot detector plugin. In Output options, select Export to ROI. Be careful that default parameters do not include filtering of spots by size, and that filtered spots are not taken into account for the analysis. The number of fields to enter depends on the number of channels.

- Optionally, in the box Filter spots, apply an additional filter (contrast, homogeneity, perimeter, roundness) to the detected spots. Note that, by default, spots are not filtered, and that filtered-out spots are excluded from the analysis. The number of fields to enter depends on the number of channels.

- Optionally, in the boxes Spot size threshold, set an area threshold (in pixels) for the analyzed spots. The numbers of spots below and above this threshold are exported to the final Results spreadsheet. The number of boxes to fill in depends on the number of channels.

- Run the workflow (for details, see part 7). Data are exported to a Results spreadsheet saved in the folder containing the merged images.
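The Wavelet Spot Detector box delegates detection to Icy's Spot Detector plugin, documented at the URL given in the steps above. Purely to illustrate the underlying idea, the Python sketch below extracts one channel (0 = Red, 1 = Green, 2 = Blue), computes one undecimated ("à trous") B3-spline wavelet plane, keeps coefficients above a noise threshold `k` × σ (σ estimated from the median absolute deviation), and groups the retained pixels into 8-connected spots. It then counts spots below and at or above an area threshold, in the spirit of the Spot size threshold box. This is not Icy's implementation: the thresholding rule, the parameter `k` (which is not Icy's sensitivity value), the helper names, and the representation of images as nested lists of (R, G, B) tuples are all assumptions.

```python
from collections import deque
from statistics import median

# B3-spline smoothing kernel used by the undecimated ("a trous") wavelet transform.
B3 = [1 / 16, 1 / 4, 3 / 8, 1 / 4, 1 / 16]


def extract_channel(rgb, c):
    """Return channel c of an RGB image stored as rows of (R, G, B) tuples (0=Red, 1=Green, 2=Blue)."""
    return [[px[c] for px in row] for row in rgb]


def _mirror(i, n):
    """Reflect an out-of-range index back into [0, n - 1] (mirror boundary)."""
    if n == 1:
        return 0
    while i < 0 or i >= n:
        i = -i if i < 0 else 2 * (n - 1) - i
    return i


def _smooth(img, step):
    """Separable B3-spline smoothing with holes of width `step`: rows first, then columns."""
    h, w = len(img), len(img[0])
    tmp = [[sum(B3[k] * img[y][_mirror(x + (k - 2) * step, w)] for k in range(5))
            for x in range(w)] for y in range(h)]
    return [[sum(B3[k] * tmp[_mirror(y + (k - 2) * step, h)][x] for k in range(5))
             for x in range(w)] for y in range(h)]


def wavelet_plane(img, scale):
    """Wavelet plane W_scale = A_(scale-1) - A_scale, where A_0 is the input image."""
    if scale < 1:
        raise ValueError("scale must be >= 1")
    prev = [[float(v) for v in row] for row in img]
    for j in range(1, scale + 1):
        cur = _smooth(prev, 2 ** (j - 1))
        if j < scale:
            prev = cur
    return [[p - q for p, q in zip(pr, cr)] for pr, cr in zip(prev, cur)]


def detect_spots(img, scale=2, k=3.0):
    """Keep wavelet coefficients > k * sigma and return 8-connected spots as pixel lists."""
    w = wavelet_plane(img, scale)
    values = [v for row in w for v in row]
    med = median(values)
    # Robust noise estimate from the median absolute deviation (MAD).
    sigma = median(abs(v - med) for v in values) / 0.6745
    mask = [[v > k * sigma for v in row] for row in w]
    h, wd = len(mask), len(mask[0])
    seen = [[False] * wd for _ in range(h)]
    spots = []
    for y in range(h):
        for x in range(wd):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:  # breadth-first flood fill of one spot
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if 0 <= ny < h and 0 <= nx < wd and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            spots.append(pixels)
    return spots


def count_by_size(spots, threshold):
    """Return (#spots with area < threshold, #spots with area >= threshold); area in pixels.

    Counting spots exactly at the threshold as "above" is an assumption of this sketch.
    """
    small = sum(1 for s in spots if len(s) < threshold)
    return small, len(spots) - small


if __name__ == "__main__":
    # Synthetic 20 x 20 RGB image: a 3 x 3 green spot and a 1-pixel green spot.
    rgb = [[(0, 0, 0) for _ in range(20)] for _ in range(20)]
    for y in range(4, 7):
        for x in range(4, 7):
            rgb[y][x] = (0, 100, 0)
    rgb[14][14] = (0, 100, 0)
    found = detect_spots(extract_channel(rgb, 1), scale=1)
    print(sorted(len(s) for s in found))  # [1, 9]
    print(count_by_size(found, 4))        # (1, 1)
```

On this noise-free synthetic image the MAD is zero, so every positive coefficient is kept; on real images σ is non-zero, and raising `k` discards more weak spots, which is qualitatively similar to lowering the sensitivity in Icy.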

- Fluorescence Analysis D: Global Translocation and Fluorescence Analysis E: Individual Cell Translocation:

- In the boxes Folder images (boxes 1 and 2), write the name of the folder containing the images of segmented objects. In the block Fluorescence Analysis D: Global Translocation, the two types of ROI are identified as ROI a and ROI b. In the block Fluorescence Analysis E: Individual Cell Translocation, write the names of the folders containing the segmented nuclei and the segmented cytoplasms in the Folder images segmented nuclei and Folder images segmented cytoplasms boxes, respectively.

- In the box Channel signal (box 3), enter the channel containing the signal to analyze.

- In the box Format of images of segmented objects (box 4), write the format used to save images of segmented objects (tif, jpeg, bmp, png). The default format is tif. The Kill Borders option is also available to remove border objects (see step 6.3.1).

- Optionally, in the boxes Filter ROI by size, filter the segmented objects by setting a size interval (in pixels). This step can be useful to remove under- or over-segmented objects. To manually estimate object size, see step 5.3.1. There are two fields to enter, one per channel. By default, ROIs are not filtered by size.

- Run the workflow (for details, see part 7). Data are exported to a Results spreadsheet saved in the folder containing the merged images.
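For translocation analyses, a common readout is the ratio of signal intensity between the two ROI types. The protocol does not state which statistic the blocks compute, so the Python sketch below simply assumes a ratio of mean intensities, computed per cell in the spirit of an individual-cell analysis (block E) and pooled over all ROI a and ROI b pixels in the spirit of a global analysis (block D). The `Cell` record and the function names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Cell:
    """Hypothetical per-cell record: signal intensities of the pixels in each ROI."""
    nucleus: List[float]    # ROI a, e.g. the nucleus
    cytoplasm: List[float]  # ROI b, e.g. the cytoplasm


def cell_ratio(cell: Cell) -> float:
    """Individual-cell readout: mean nuclear intensity / mean cytoplasmic intensity."""
    # Raises ZeroDivisionError if the cytoplasmic mean is 0.
    return mean(cell.nucleus) / mean(cell.cytoplasm)


def global_ratio(cells: List[Cell]) -> float:
    """Global readout: mean over all ROI a pixels / mean over all ROI b pixels."""
    roi_a = [v for c in cells for v in c.nucleus]
    roi_b = [v for c in cells for v in c.cytoplasm]
    return mean(roi_a) / mean(roi_b)


if __name__ == "__main__":
    cells = [Cell([200, 220], [50, 50]), Cell([100, 100], [100, 100])]
    print([cell_ratio(c) for c in cells])  # [4.2, 1.0]
    print(round(global_ratio(cells), 3))   # 2.067
```

Pooling weights each cell by its pixel count, so the global ratio generally differs from the average of the per-cell ratios (here 2.067 versus 2.6).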

- Fluorescence Analysis A: 1 Channel, Fluorescence Analysis B: 2 Channels in the same compartment and Fluorescence Analysis C: 2 Channels in two compartments
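The Kill Borders? and Filter ROI by size options described in the analysis blocks above both act on segmented objects. Assuming a label image in which 0 is background and each object carries its own positive integer, their combined effect can be sketched in Python as follows. This is not the workflow's code: in the workflow, Kill Borders relies on MorphoLibJ, and the function names and the label-image representation used here are assumptions.

```python
from collections import Counter


def kill_borders(labels):
    """Set to 0 every labeled object that touches the image border (0 = background)."""
    h, w = len(labels), len(labels[0])
    border = {labels[y][x] for y in range(h) for x in range(w)
              if y in (0, h - 1) or x in (0, w - 1)}
    border.discard(0)
    return [[0 if v in border else v for v in row] for row in labels]


def filter_by_size(labels, min_area, max_area):
    """Keep only objects whose area in pixels lies in [min_area, max_area]."""
    areas = Counter(v for row in labels for v in row if v != 0)
    keep = {lab for lab, area in areas.items() if min_area <= area <= max_area}
    return [[v if v in keep else 0 for v in row] for row in labels]


if __name__ == "__main__":
    labels = [
        [1, 1, 0, 0, 0],
        [1, 0, 0, 2, 0],
        [0, 0, 2, 2, 0],
        [0, 3, 0, 0, 0],
        [0, 0, 0, 0, 4],
    ]
    cleared = kill_borders(labels)                   # objects 1 and 4 touch the border
    kept = filter_by_size(cleared, 2, 10)            # object 3 (1 pixel) is too small
    print(sorted({v for row in kept for v in row}))  # [0, 2]
```

Removing border objects before size filtering matters: a cell cut by the image edge appears smaller than it really is and could otherwise pass or fail the size filter for the wrong reason.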

7. Run the protocol

- To process a single block in a run, remove the connection between that block and the Select Folder block, and place it at rank 1. In the upper left corner of the block, click the link directly to the right of folder. In the Open dialog box that appears, double-click the folder containing the merged images, then click Open. Click Run to start the workflow. Processing can be stopped by clicking the Stop button.

- To process several blocks in a run, keep the chosen blocks connected to the Select Folder block (block 1) and make sure that their ranks order the processing correctly. For example, if a block needs segmented objects, the segmentation block must be ranked before it. Before running the workflow, remove unused blocks and save the new protocol under another name.

- Click Run to start the workflow. When the Open dialog box appears, double-click the folder containing the merged images, then click Open. The workflow runs automatically. If necessary, stop the processing by clicking the Stop button.

- At the end of the processing, check that the message The workflow executed successfully appears in the lower right-hand corner and that all blocks are flagged with a green sign (Figure 2b). Otherwise, the block, and the box within it, that display the error sign indicate the element to correct (Figure 2b).

NOTE: After a successful run, a new run cannot be started directly: to process the workflow again, at least one block must be flagged as “ready to process”. To change the state of a block, either delete and re-create a link between two boxes inside this block, or simply close and reopen the protocol. If an error occurs during processing, a new run can be started directly. During a new run, all blocks of the pipeline are processed, even those flagged with the green sign.
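The ranking rule above (a block that needs segmented objects must be ranked after the segmentation block) is a dependency-ordering constraint. The Python sketch below checks a proposed ranking against a hypothetical table of prerequisites and derives a valid order with the standard-library `graphlib` module (Python 3.9+). The block named "Segmentation" and the prerequisite table are illustrative assumptions; in Icy itself, the order is set through the block links and ranks described above.

```python
from graphlib import TopologicalSorter


def misordered(ranked, requires):
    """Return (block, prerequisite) pairs whose prerequisite is missing or ranked later."""
    rank = {name: i for i, name in enumerate(ranked)}
    return [(block, dep) for block in ranked for dep in requires.get(block, ())
            if dep not in rank or rank[dep] > rank[block]]


def valid_order(ranked, requires):
    """Return one ranking in which every prerequisite precedes the blocks that need it.

    Prerequisites absent from `ranked` are added to the order; a circular
    dependency raises graphlib.CycleError.
    """
    graph = {block: set(requires.get(block, ())) for block in ranked}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    # Hypothetical prerequisite table: both analyses need segmented objects.
    requires = {
        "Fluorescence Analysis A: 1 Channel": ["Segmentation"],
        "Fluorescence Analysis D: Global Translocation": ["Segmentation"],
    }
    ranked = ["Fluorescence Analysis D: Global Translocation", "Segmentation",
              "Fluorescence Analysis A: 1 Channel"]
    # [('Fluorescence Analysis D: Global Translocation', 'Segmentation')]
    print(misordered(ranked, requires))
    print(valid_order(ranked, requires))  # "Segmentation" comes first
```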

Results

All the described analyses were performed on a standard laptop (64-bit, quad-core 2.80 GHz processor, 16 GB of random-access memory (RAM)) running the 64-bit version of Java. RAM is an important parameter to consider, depending on the number and resolution of the images to analyze. The 32-bit version of Java limits the memory to about 1300 MB, which may be insufficient for large datasets, whereas the 64-bit version allows more memory to be allocated to Icy.
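To judge whether the 32-bit memory limit is a concern, the raw pixel memory of a dataset can be estimated as width × height × channels × bytes per sample × number of images. The Python sketch below assumes uncompressed images and ignores the working copies created during processing, so its result is a lower bound; the 2048 × 2048, 3-channel, 16-bit acquisition is a hypothetical example, and the ~1300 MB limit is treated as 1300 MiB.

```python
def raw_mib(width, height, channels, bytes_per_sample, n_images=1):
    """Raw pixel memory in MiB (2**20 bytes) of n_images uncompressed images."""
    return width * height * channels * bytes_per_sample * n_images / 2**20


def images_that_fit(budget_mib, width, height, channels, bytes_per_sample):
    """How many such images fit in budget_mib, ignoring processing overhead."""
    return int(budget_mib // raw_mib(width, height, channels, bytes_per_sample))


if __name__ == "__main__":
    # Hypothetical acquisition: 2048 x 2048 pixels, 3 channels, 16-bit (2-byte) samples.
    print(raw_mib(2048, 2048, 3, 2))                # 24.0
    print(images_that_fit(1300, 2048, 2048, 3, 2))  # 54
```

Under these assumptions, raw pixel data alone would fill a 1300 MiB budget after about 54 images, before any processing overhead is counted.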

Discussion

An increasing number of free software tools are available for the analysis of fluorescence cell images. Users must choose the appropriate software according to the complexity of their problem, their expertise in image processing, and the time they can devote to the analysis. Icy, CellProfiler, and ImageJ/Fiji are powerful tools combining usability and functionality³. Icy is a stand-alone tool that offers a clear graphical user interface (GUI), and notably its “Pro...

Disclosures

The authors have nothing to disclose.

Acknowledgements

G.H. was supported by a graduate fellowship from the Ministère Délégué à la Recherche et aux Technologies. L.H. was supported by a graduate fellowship from the Institut de Cancérologie de Lorraine (ICL), whereas Q.T. was supported by a public grant overseen by the French National Research Agency (ANR) as part of the second “Investissements d’Avenir” program FIGHT-HF (reference: ANR-15-RHU4570004). This work was funded by CNRS and Université de Lorraine (UMR 7365).

Materials

| Name | Company | Catalog Number | Comments |
|---|---|---|---|
| 16% Formaldehyde solution (w/v), methanol-free | Thermo Fisher Scientific | 28908 | to fix the cells |
| Alexa Fluor 488 goat anti-rabbit | Thermo Fisher Scientific | A-11008 | fluorescent secondary antibody |
| Alexa Fluor 555 goat anti-mouse | Thermo Fisher Scientific | A-21425 | fluorescent secondary antibody |
| Alexa Fluor 555 Phalloidin | Thermo Fisher Scientific | A34055 | fluorescent F-actin probe |
| Bovine serum albumin standard (BSA) | Euromedex | 04-100-812-E | |
| DMEM | Sigma-Aldrich | D5796-500ml | cell culture medium |
| Duolink In Situ Mounting Medium with DAPI | Sigma-Aldrich | DUO82040-5ML | mounting medium |
| Human HeLa S3 cells | IGBMC, Strasbourg, France | | cell line used to perform the experiments |
| Hydrogen peroxide solution 30% (H2O2) | Sigma-Aldrich | H1009-100ml | used as a stressing agent |
| Lipofectamine 2000 Reagent | Thermo Fisher Scientific | 11668-019 | transfection reagent |
| Mouse monoclonal anti-coilin | Abcam | ab11822 | coilin-specific antibody |
| Nikon Optiphot-2 fluorescence microscope | Nikon | | epifluorescence microscope |
| Opti-MEM I Reduced Serum Medium | Thermo Fisher Scientific | 31985062 | transfection medium |
| PBS pH 7.4 (10x) | Gibco | 70011-036 | to wash the cells |
| Rabbit polyclonal anti-53BP1 | Thermo Fisher Scientific | PA1-16565 | 53BP1-specific antibody |
| Rabbit polyclonal anti-EDC4 | Sigma-Aldrich | SAB4200114 | EDC4-specific antibody |
| Triton X-100 | Roth | 6683 | to permeabilize the cells |

References

- Möckl, L., Lamb, D. C., Bräuchle, C. Super-resolved fluorescence microscopy: Nobel Prize in Chemistry 2014 for Eric Betzig, Stefan Hell, and William E. Moerner. Angewandte Chemie. 53 (51), 13972-13977 (2014).

- Meijering, E., Carpenter, A. E., Peng, H., Hamprecht, F. A., Olivo-Marin, J.-C. Imagining the future of bioimage analysis. Nature Biotechnology. 34 (12), 1250-1255 (2016).

- Wiesmann, V., Franz, D., Held, C., Münzenmayer, C., Palmisano, R., Wittenberg, T. Review of free software tools for image analysis of fluorescence cell micrographs. Journal of Microscopy. 257 (1), 39-53 (2015).

- de Chaumont, F., et al. Icy: an open bioimage informatics platform for extended reproducible research. Nature Methods. 9 (7), 690-696 (2012).

- Girish, V., Vijayalakshmi, A. Affordable image analysis using NIH Image/ImageJ. Indian Journal of Cancer. 41 (1), 47 (2004).

- Zaitoun, N. M., Aqel, M. J. Survey on image segmentation techniques. Procedia Computer Science. 65, 797-806 (2015).

- MetaMorph Microscopy Automation and Image Analysis Software. Available from: https://www.moleculardevices.com/products/cellular-imaging-systems/acquisition-and-analysis-software/metamorph-microscopy (2018).

- NIS-Elements Imaging Software. Available from: https://www.nikon.com/products/microscope-solutions/lineup/img_soft/nis-element (2014).

- Meyer, F., Beucher, S. Morphological segmentation. Journal of Visual Communication and Image Representation. 1 (1), 21-46 (1990).

- Schieber, M., Chandel, N. S. ROS Function in Redox Signaling and Oxidative Stress. Current Biology. 24 (10), 453-462 (2014).

- D'Autréaux, B., Toledano, M. B. ROS as signalling molecules: mechanisms that generate specificity in ROS homeostasis. Nature Reviews. Molecular Cell Biology. 8 (10), 813-824 (2007).

- Davalli, P., Mitic, T., Caporali, A., Lauriola, A., D'Arca, D. ROS, Cell Senescence, and Novel Molecular Mechanisms in Aging and Age-Related Diseases. Oxidative Medicine and Cellular Longevity. 2016, 3565127 (2016).

- Disher, K., Skandalis, A. Evidence of the modulation of mRNA splicing fidelity in humans by oxidative stress and p53. Genome. 50 (10), 946-953 (2007).

- Takeo, K., et al. Oxidative stress-induced alternative splicing of transformer 2β (SFRS10) and CD44 pre-mRNAs in gastric epithelial cells. American Journal of Physiology - Cell Physiology. 297 (2), 330-338 (2009).

- Seo, J., et al. Oxidative Stress Triggers Body-Wide Skipping of Multiple Exons of the Spinal Muscular Atrophy Gene. PLOS ONE. 11 (4), 0154390 (2016).

- Will, C. L., Lührmann, R. Spliceosome Structure and Function. Cold Spring Harbor Perspectives in Biology. 3 (7), 003707 (2011).

- Ljosa, V., Sokolnicki, K. L., Carpenter, A. E. Annotated high-throughput microscopy image sets for validation. Nature Methods. 9 (7), 637-637 (2012).

- Wang, Q., et al. Cajal bodies are linked to genome conformation. Nature Communications. 7, (2016).

- Carpenter, A. E., et al. CellProfiler: image analysis software for identifying and quantifying cell phenotypes. Genome Biology. 7, 100 (2006).

- McQuin, C., et al. CellProfiler 3.0: Next-generation image processing for biology. PLoS Biology. 16 (7), 2005970 (2018).


Copyright © 2025 MyJoVE Corporation. All rights reserved.